Overview

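kubeadm already supports deploying clusters and went GA in version 1.13. It supports clustered deployments with multiple masters and multiple etcd members. It is also the deployment method the Kubernetes project recommends most, and it is developed by its own SIG. kubeadm makes good use of many Kubernetes features, so in this article we put it into practice and see what makes it appealing.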

Goals

1. Build a highly available Kubernetes cluster with kubeadm and create an admin user

2. To demonstrate a version upgrade later, this article uses version 1.13.1; the next article upgrades it to v1.14

3. Explain how kubeadm works

This article focuses on deploying a highly available cluster with kubeadm.

Deploying a highly available k8s v1.13 cluster with kubeadm

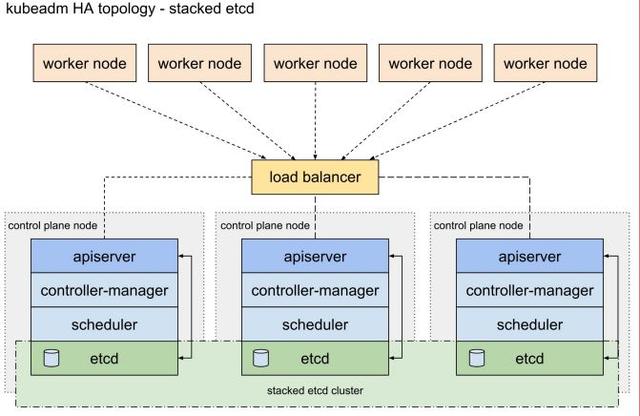

There are two topologies:

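- Stacked etcd topology

  - Each of the 3 masters also runs an etcd member, and that member serves only the kube-apiserver on the same node. The advantage is simplicity. The drawback is weaker etcd redundancy: losing one node takes out both a control-plane instance and an etcd member.

  - Requires at least 4 machines (3 masters that also run etcd, 1 node)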

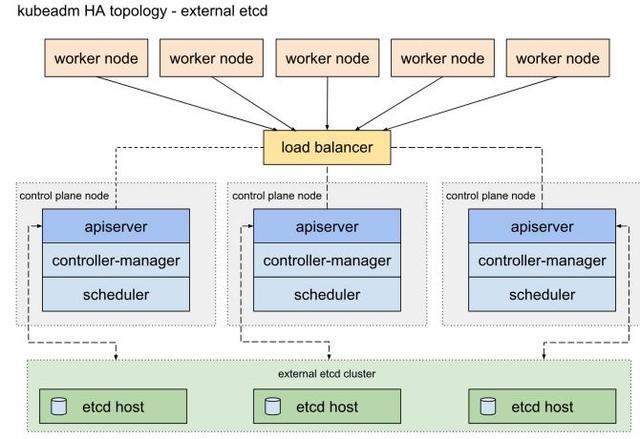

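- External etcd topology

  - etcd runs as a separate cluster outside the masters. Redundancy is better, but at least 7 machines are needed (3 masters, 3 etcd, 1 node)

  - Recommended for production

  - This article uses this topology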

Steps

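- Prepare the environment

- Install the components: docker, kubelet, kubeadm (all nodes)

- Use these components to deploy a highly available etcd cluster

- Deploy the masters

- Join the nodes

- Install the network

- Verify

- Summary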

Host environment preparation

- System environment

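```shell
# OS version (not a requirement, just this example's environment)
$ cat /etc/redhat-release
CentOS Linux release 7.2.1511 (Core)

# Kernel version (not a requirement, just this example's environment)
$ uname -r
4.17.8-1.el7.elrepo.x86_64

# Re-create the data disk with ftype=1 (run on every node); docker's overlay2 driver needs it on XFS
# WARNING: mkfs.xfs erases all data on /dev/vdb
umount /data
mkfs.xfs -n ftype=1 -f /dev/vdb

# Disable swap now, and comment out the swap entry in /etc/fstab so it stays off after a reboot
swapoff -a
sed -i 's|^/swapfile|#/swapfile|' /etc/fstab
# Remount everything in /etc/fstab (including /data, assuming it is listed there)
mount -a
```

Installing docker, kubelet, and kubeadm (all nodes)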

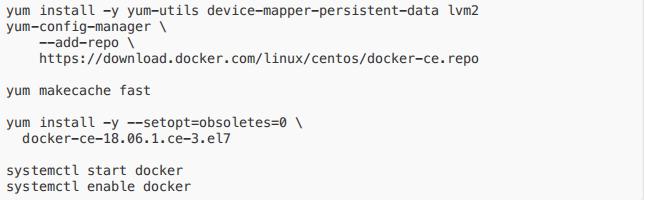

Installing the container runtime (docker)

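- For k8s 1.13 we follow the official recommendation and hold off on the newest release, 18.09. This article uses 18.06, so the version has to be pinned at install time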

- Source: "kubeadm now properly recognizes Docker 18.09.0 and newer, but still treats 18.06 as the default supported version."

- The install script (run on every node):
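A minimal sketch of such a script. It assumes the upstream docker-ce yum repository and the package version string 18.06.1.ce-3.el7. That string is an assumption, so check which 18.06 builds the repository actually offers before pinning. Sourcing the file only defines the functions; nothing is installed until install_docker runs:

```shell
#!/usr/bin/env bash
# Sketch: install a pinned docker-ce 18.06 on CentOS 7 (run as root on every node).
# ASSUMPTION: the version string below; list what the repository really offers with
#   yum list docker-ce --showduplicates | sort -r
set -euo pipefail

DOCKER_VERSION="${DOCKER_VERSION:-18.06.1.ce-3.el7}"

# Full yum package name for the pinned version
docker_pkg_spec() {
  echo "docker-ce-${DOCKER_VERSION}"
}

install_docker() {
  # yum-config-manager comes from yum-utils; the other two are listed as
  # prerequisites in Docker's CentOS install guide
  yum install -y yum-utils device-mapper-persistent-data lvm2
  yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
  # Pinning the full version keeps yum from resolving docker-ce to the newest 18.09 build
  yum install -y "$(docker_pkg_spec)"
  systemctl enable --now docker
}

# Usage (as root, after sourcing this file): install_docker
```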

Installing kubeadm, kubelet, and kubectl

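- Servers in mainland China cannot reach the official Google yum repository directly. Download the packages elsewhere first, then upload them to the servers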

```shell
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kube*
EOF

# exclude=kube* keeps routine yum updates away from these packages,
# so the download has to lift that exclusion for this repository explicitly
$ yum -y install --downloadonly --downloaddir=k8s --disableexcludes=kubernetes kubelet-1.13.1 kubeadm-1.13.1 kubectl-1.13.1
$ ls k8s/
25cd948f63fea40e81e43fbe2e5b635227cc5bbda6d5e15d42ab52decf09a5ac-kubelet-1.13.1-0.x86_64.rpm
53edc739a0e51a4c17794de26b13ee5df939bd3161b37f503fe2af8980b41a89-cri-tools-1.12.0-0.x86_64.rpm
5af5ecd0bc46fca6c51cc23280f0c0b1522719c282e23a2b1c39b8e720195763-kubeadm-1.13.1-0.x86_64.rpm
7855313ff2b42ebcf499bc195f51d56b8372abee1a19bbf15bb4165941c0229d-kubectl-1.13.1-0.x86_64.rpm
fe33057ffe95bfae65e2f269e1b05e99308853176e24a4d027bc082b471a07c0-kubernetes-cni-0.6.0-0.x86_64.rpm
socat-1.7.3.2-2.el7.x86_64.rpm
```

- Local install

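```shell
# Disable SELinux
setenforce 0
sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

# Install from the downloaded packages
yum localinstall -y k8s/*.rpm
# kubelet restarts every few seconds until kubeadm gives it a configuration; this is expected
systemctl enable --now kubelet
```

- Network fix: on CentOS 7, traffic is known to be routed incorrectly because iptables gets bypassed. Make sure net.bridge.bridge-nf-call-iptables is set to 1 in the sysctl configuration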

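```shell
# The bridge-nf-call keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system
```

Deploying the highly available etcd cluster with the components above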

1. On the etcd nodes, configure the etcd service so that kubelet starts and manages it

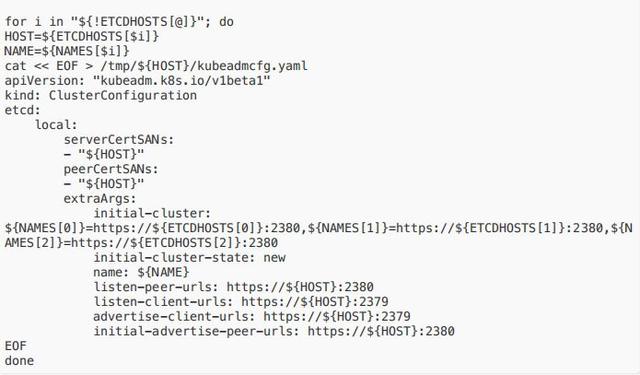

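```shell
# The empty ExecStart= clears the packaged kubelet command before it is replaced
cat << EOF > /etc/systemd/system/kubelet.service.d/20-etcd-service-manager.conf
[Service]
ExecStart=
ExecStart=/usr/bin/kubelet --address=127.0.0.1 --pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true
Restart=always
EOF

systemctl daemon-reload
systemctl restart kubelet
```

2. Generate a kubeadm config file for each etcd host so that each host runs exactly one etcd instance. Run the following on etcd1 (HOST0 below). Afterwards, /tmp contains one directory named after each host.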

```shell
# Update HOST0, HOST1, and HOST2 with the IPs or resolvable names of your hosts
export HOST0=10.10.184.226
export HOST1=10.10.213.222
export HOST2=10.10.239.108

# Create temp directories to store files that will end up on other hosts.
mkdir -p /tmp/${HOST0}/ /tmp/${HOST1}/ /tmp/${HOST2}/

ETCDHOSTS=(${HOST0} ${HOST1} ${HOST2})
NAMES=("infra0" "infra1" "infra2")
```
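Step 2 also needs one kubeadmcfg.yaml per host. Below is a sketch of that loop, following the upstream guide listed in the references. kubeadm 1.13 reads the kubeadm.k8s.io/v1beta1 format. The host variables are repeated so that the snippet runs on its own:

```shell
#!/usr/bin/env bash
# Sketch, following the upstream "etcd with kubeadm" guide: write one kubeadmcfg.yaml
# per etcd host into /tmp/<host>/. Run on HOST0. kubeadm 1.13 reads kubeadm.k8s.io/v1beta1.
set -euo pipefail

# Same hosts and member names as above
export HOST0=10.10.184.226
export HOST1=10.10.213.222
export HOST2=10.10.239.108
mkdir -p "/tmp/${HOST0}/" "/tmp/${HOST1}/" "/tmp/${HOST2}/"
ETCDHOSTS=("${HOST0}" "${HOST1}" "${HOST2}")
NAMES=("infra0" "infra1" "infra2")

# Every member gets the same initial-cluster list, plus its own name and URLs
for i in "${!ETCDHOSTS[@]}"; do
  HOST=${ETCDHOSTS[$i]}
  NAME=${NAMES[$i]}
  cat << EOF > "/tmp/${HOST}/kubeadmcfg.yaml"
apiVersion: "kubeadm.k8s.io/v1beta1"
kind: ClusterConfiguration
etcd:
  local:
    serverCertSANs:
    - "${HOST}"
    peerCertSANs:
    - "${HOST}"
    extraArgs:
      initial-cluster: ${NAMES[0]}=https://${ETCDHOSTS[0]}:2380,${NAMES[1]}=https://${ETCDHOSTS[1]}:2380,${NAMES[2]}=https://${ETCDHOSTS[2]}:2380
      initial-cluster-state: new
      name: ${NAME}
      listen-peer-urls: https://${HOST}:2380
      listen-client-urls: https://${HOST}:2379
      advertise-client-urls: https://${HOST}:2379
      initial-advertise-peer-urls: https://${HOST}:2380
EOF
done
```

The three files differ only in name and the host's own URLs. The initial-cluster list is identical everywhere, which is what lets the members find each other on first start.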

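3. Create the CA: on host0, run the command below to generate the certificate. It creates two files, /etc/kubernetes/pki/etcd/ca.crt and /etc/kubernetes/pki/etcd/ca.key (this step needs a proxy to get past the Great Firewall)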

```shell
[root@10-10-184-226 ~]# kubeadm init phase certs etcd-ca
[certs] Generating "etcd/ca" certificate and key
```

4. On host0, generate the certificates for each etcd node:

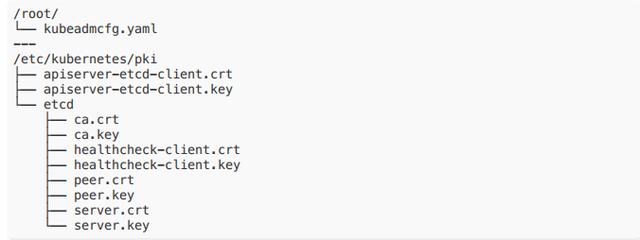

```shell
export HOST0=10.10.184.226
export HOST1=10.10.213.222
export HOST2=10.10.239.108

kubeadm init phase certs etcd-server --config=/tmp/${HOST2}/kubeadmcfg.yaml
kubeadm init phase certs etcd-peer --config=/tmp/${HOST2}/kubeadmcfg.yaml
kubeadm init phase certs etcd-healthcheck-client --config=/tmp/${HOST2}/kubeadmcfg.yaml
kubeadm init phase certs apiserver-etcd-client --config=/tmp/${HOST2}/kubeadmcfg.yaml
cp -R /etc/kubernetes/pki /tmp/${HOST2}/
# cleanup non-reusable certificates
find /etc/kubernetes/pki -not -name ca.crt -not -name ca.key -type f -delete

kubeadm init phase certs etcd-server --config=/tmp/${HOST1}/kubeadmcfg.yaml
kubeadm init phase certs etcd-peer --config=/tmp/${HOST1}/kubeadmcfg.yaml
kubeadm init phase certs etcd-healthcheck-client --config=/tmp/${HOST1}/kubeadmcfg.yaml
kubeadm init phase certs apiserver-etcd-client --config=/tmp/${HOST1}/kubeadmcfg.yaml
cp -R /etc/kubernetes/pki /tmp/${HOST1}/
find /etc/kubernetes/pki -not -name ca.crt -not -name ca.key -type f -delete

kubeadm init phase certs etcd-server --config=/tmp/${HOST0}/kubeadmcfg.yaml
kubeadm init phase certs etcd-peer --config=/tmp/${HOST0}/kubeadmcfg.yaml
kubeadm init phase certs etcd-healthcheck-client --config=/tmp/${HOST0}/kubeadmcfg.yaml
kubeadm init phase certs apiserver-etcd-client --config=/tmp/${HOST0}/kubeadmcfg.yaml
# No need to move the certs because they are for HOST0

# clean up certs that should not be copied off this host
find /tmp/${HOST2} -name ca.key -type f -delete
find /tmp/${HOST1} -name ca.key -type f -delete
```

- Distribute the certificates and kubeadmcfg.yaml to each etcd node.
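Below is a sketch of that distribution step. It assumes passwordless root SSH from HOST0 to the other two etcd hosts. It places kubeadmcfg.yaml in /root, because step 5 reads --config=/root/kubeadmcfg.yaml. The function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: push the files prepared on HOST0 in /tmp/<host>/ to the matching etcd host.
# ASSUMPTIONS: passwordless root SSH from HOST0; kubeadmcfg.yaml goes to /root,
# where step 5 reads it (--config=/root/kubeadmcfg.yaml). Function names are hypothetical.
set -euo pipefail

distribute_etcd_files() {
  local host="$1"
  # pki/ (ca.key was already removed from it) and kubeadmcfg.yaml land in /root
  scp -r "/tmp/${host}/pki" "/tmp/${host}/kubeadmcfg.yaml" "root@${host}:"
  # Merge the certificates into /etc/kubernetes/pki, then drop the copy in /root
  ssh "root@${host}" 'mkdir -p /etc/kubernetes/pki && cp -r pki/. /etc/kubernetes/pki/ && chown -R root:root /etc/kubernetes/pki && rm -rf pki'
}

# HOST0 already holds its own certificates in /etc/kubernetes/pki; it only needs the config
prepare_host0() {
  local host="$1" dest_dir="${2:-/root}"
  cp "/tmp/${host}/kubeadmcfg.yaml" "${dest_dir}/kubeadmcfg.yaml"
}

# Usage on HOST0:
#   distribute_etcd_files 10.10.213.222
#   distribute_etcd_files 10.10.239.108
#   prepare_host0 10.10.184.226
```

Afterwards, HOST1 and HOST2 each have /root/kubeadmcfg.yaml. Under /etc/kubernetes/pki they have etcd/ca.crt (without ca.key), the etcd server, peer and healthcheck-client key pairs, and apiserver-etcd-client.crt/.key.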

5. Generate the static pod manifest by running the following on each of the 3 etcd nodes (needs a proxy):

```shell
$ kubeadm init phase etcd local --config=/root/kubeadmcfg.yaml
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
```

6. Check the etcd cluster status. Once it reports healthy, the etcd cluster is complete.

```shell
docker run --rm -it --net host -v /etc/kubernetes:/etc/kubernetes k8s.gcr.io/etcd:3.2.24 etcdctl \
  --cert-file /etc/kubernetes/pki/etcd/peer.crt \
  --key-file /etc/kubernetes/pki/etcd/peer.key \
  --ca-file /etc/kubernetes/pki/etcd/ca.crt \
  --endpoints https://${HOST0}:2379 cluster-health
member 9969ee7ea515cbd2 is healthy: got healthy result from https://10.10.213.222:2379
member cad4b939d8dfb250 is healthy: got healthy result from https://10.10.239.108:2379
member e6e86b3b5b495dfb is healthy: got healthy result from https://10.10.184.226:2379
cluster is healthy
```

Deploying the masters with kubeadm

- Copy the certificates from any one etcd node to master1

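```shell
# Replace with master1's SSH user and address
export CONTROL_PLANE="ubuntu@10.0.0.7"
scp /etc/kubernetes/pki/etcd/ca.crt "${CONTROL_PLANE}":
scp /etc/kubernetes/pki/apiserver-etcd-client.crt "${CONTROL_PLANE}":
scp /etc/kubernetes/pki/apiserver-etcd-client.key "${CONTROL_PLANE}":
# On master1, move them to the same paths under /etc/kubernetes/pki
# (etcd/ca.crt, apiserver-etcd-client.crt, apiserver-etcd-client.key)
```

- On the first master, write the config file kubeadm-config.yaml and run init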

Note: k8s.paas.test:6443 here is a load balancer. If you don't have one, a virtual IP works too.

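- Using a private registry (custom images): kubeadm lets you customize cluster initialization through parameters in its config file. For example, imageRepository sets the image prefix. Push the images to your own internal registry, set this parameter in kubeadm-config.yaml, and then run init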
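Below is a sketch of kubeadm-config.yaml for this topology, in the kubeadm 1.13 (v1beta1) format. The endpoint, etcd addresses and certificate paths come from this article. podSubnet 10.244.0.0/16 is an assumption based on flannel's default network; it matches the 10.244.x.x pod IPs in the verification section. The commented imageRepository value is a hypothetical private registry:

```shell
#!/usr/bin/env bash
# Sketch: write kubeadm-config.yaml on master1 (kubeadm 1.13, config format v1beta1).
# From this article: LB k8s.paas.test:6443, the 3 external etcd hosts, and the
# client certificate copied over from an etcd node.
# ASSUMPTIONS: podSubnet is flannel's default network; the commented
# imageRepository value is a hypothetical private registry.
set -euo pipefail

cat << EOF > kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.13.1
controlPlaneEndpoint: "k8s.paas.test:6443"
apiServer:
  certSANs:
  - "k8s.paas.test"
networking:
  podSubnet: "10.244.0.0/16"
# imageRepository: registry.example.com/google_containers
etcd:
  external:
    endpoints:
    - https://10.10.184.226:2379
    - https://10.10.213.222:2379
    - https://10.10.239.108:2379
    caFile: /etc/kubernetes/pki/etcd/ca.crt
    certFile: /etc/kubernetes/pki/apiserver-etcd-client.crt
    keyFile: /etc/kubernetes/pki/apiserver-etcd-client.key
EOF
```

Setting kubernetesVersion explicitly also means kubeadm does not need to look up the latest version online.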

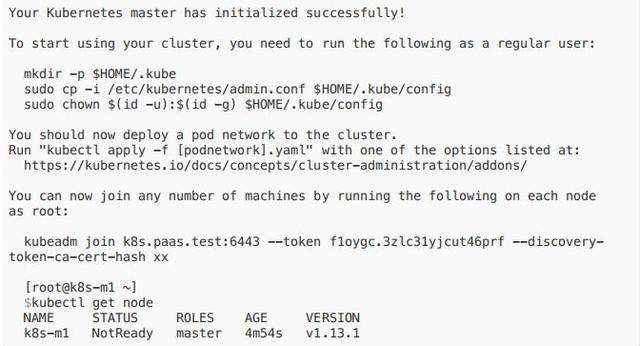

- On master1, run: kubeadm init --config kubeadm-config.yaml

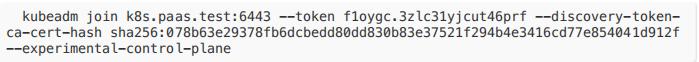
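Once init succeeds, kubectl on master1 needs the admin credentials. Below is a sketch of the usual step that kubeadm init prints at the end. It is wrapped in a function (the name is hypothetical) so the paths can be overridden:

```shell
#!/usr/bin/env bash
# Sketch of the post-init step that kubeadm init prints: give the current user a
# kubeconfig with the admin credentials. Function name is hypothetical.
set -euo pipefail

setup_kubeconfig() {
  local src="${1:-/etc/kubernetes/admin.conf}"
  local dest_dir="${2:-$HOME/.kube}"
  mkdir -p "$dest_dir"
  cp -f "$src" "$dest_dir/config"
  # Make sure the copy belongs to whoever will run kubectl
  chown "$(id -u):$(id -g)" "$dest_dir/config"
}

# Usage on master1 (as root here): setup_kubeconfig && kubectl get nodes
```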

Installing the other 2 masters

- Copy master1's admin.conf and PKI certificates to the same directories on the other 2 masters, namely:

```text
/etc/kubernetes/pki/ca.crt
/etc/kubernetes/pki/ca.key
/etc/kubernetes/pki/sa.key
/etc/kubernetes/pki/sa.pub
/etc/kubernetes/pki/front-proxy-ca.crt
/etc/kubernetes/pki/front-proxy-ca.key
/etc/kubernetes/pki/etcd/ca.crt
/etc/kubernetes/pki/etcd/ca.key   (the official docs copy this file, but it is not actually needed)
/etc/kubernetes/admin.conf
```

Note: the official docs leave out two files, /etc/kubernetes/pki/apiserver-etcd-client.crt and /etc/kubernetes/pki/apiserver-etcd-client.key. Without them the apiserver fails to start with this error:

```text
Unable to create storage backend: config (&{ /registry [] /etc/kubernetes/pki/apiserver-etcd-client.key /etc/kubernetes/pki/apiserver-etcd-client.crt /etc/kubernetes/pki/etcd/ca.crt true 0xc000133c20 5m0s 1m0s}), err (open /etc/kubernetes/pki/apiserver-etcd-client.crt: no such file or directory)
```

- On masters 2 and 3, run the join command:
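Below is a sketch of both halves: the copy, which runs on master1, and the join, which runs on masters 2 and 3. It assumes root SSH from master1 to the other masters. The token and CA hash are the ones used for the worker join in the next section. Tokens expire after 24 hours by default; if that has passed, print a fresh join command on master1 with kubeadm token create --print-join-command. In kubeadm 1.13 the control-plane flag is still --experimental-control-plane. The function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: copy_control_plane_files runs on master1 (once per new master);
# join_control_plane runs on master2 and master3 afterwards.
# ASSUMPTIONS: root SSH from master1; function names are hypothetical.
set -euo pipefail

# The files listed above plus the two apiserver-etcd-client files; etcd/ca.key is left out
CONTROL_PLANE_FILES=(
  /etc/kubernetes/pki/ca.crt
  /etc/kubernetes/pki/ca.key
  /etc/kubernetes/pki/sa.key
  /etc/kubernetes/pki/sa.pub
  /etc/kubernetes/pki/front-proxy-ca.crt
  /etc/kubernetes/pki/front-proxy-ca.key
  /etc/kubernetes/pki/etcd/ca.crt
  /etc/kubernetes/pki/apiserver-etcd-client.crt
  /etc/kubernetes/pki/apiserver-etcd-client.key
  /etc/kubernetes/admin.conf
)

copy_control_plane_files() {
  local host="$1" f
  # One mkdir creates /etc/kubernetes, pki and pki/etcd on the target
  ssh "root@${host}" 'mkdir -p /etc/kubernetes/pki/etcd'
  for f in "${CONTROL_PLANE_FILES[@]}"; do
    scp "$f" "root@${host}:${f}"
  done
}

# Token and hash as printed by kubeadm init on master1 (the same values the worker uses).
# kubeadm 1.13 spells the control-plane flag --experimental-control-plane.
join_control_plane() {
  kubeadm join k8s.paas.test:6443 \
    --token f1oygc.3zlc31yjcut46prf \
    --discovery-token-ca-cert-hash sha256:078b63e29378fb6dcbedd80dd830b83e37521f294b4e3416cd77e854041d912f \
    --experimental-control-plane
}

# Usage: on master1 run  copy_control_plane_files 10.10.76.80  (and 10.10.56.27),
# then run  join_control_plane  on each of those masters.
```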

Joining the worker node

```shell
[root@k8s-n1 ~]$ kubeadm join k8s.paas.test:6443 --token f1oygc.3zlc31yjcut46prf --discovery-token-ca-cert-hash sha256:078b63e29378fb6dcbedd80dd830b83e37521f294b4e3416cd77e854041d912f
[preflight] Running pre-flight checks
[discovery] Trying to connect to API Server "k8s.paas.test:6443"
[discovery] Created cluster-info discovery client, requesting info from "https://k8s.paas.test:6443"
[discovery] Requesting info from "https://k8s.paas.test:6443" again to validate TLS against the pinned public key
[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "k8s.paas.test:6443"
[discovery] Successfully established connection with API Server "k8s.paas.test:6443"
[join] Reading configuration from the cluster...
[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.13" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Activating the kubelet service
[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-n1" as an annotation

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.
```

Network installation

```shell
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml
kubectl get pod --all-namespaces -o wide
NAMESPACE     NAME                             READY   STATUS    RESTARTS   AGE     IP              NODE     NOMINATED NODE   READINESS GATES
kube-system   coredns-86c58d9df4-dc4t2         1/1     Running   0          14m     172.17.0.3      k8s-m1   <none>           <none>
kube-system   coredns-86c58d9df4-jxv6v         1/1     Running   0          14m     172.17.0.2      k8s-m1   <none>           <none>
kube-system   kube-apiserver-k8s-m1            1/1     Running   0          13m     10.10.119.128   k8s-m1   <none>           <none>
kube-system   kube-apiserver-k8s-m2            1/1     Running   0          5m      10.10.76.80     k8s-m2   <none>           <none>
kube-system   kube-apiserver-k8s-m3            1/1     Running   0          4m58s   10.10.56.27     k8s-m3   <none>           <none>
kube-system   kube-controller-manager-k8s-m1   1/1     Running   0          13m     10.10.119.128   k8s-m1   <none>           <none>
kube-system   kube-controller-manager-k8s-m2   1/1     Running   0          5m      10.10.76.80     k8s-m2   <none>           <none>
kube-system   kube-controller-manager-k8s-m3   1/1     Running   0          4m58s   10.10.56.27     k8s-m3   <none>           <none>
kube-system   kube-flannel-ds-amd64-nvmtk      1/1     Running   0          44s     10.10.56.27     k8s-m3   <none>           <none>
kube-system   kube-flannel-ds-amd64-pct2g      1/1     Running   0          44s     10.10.76.80     k8s-m2   <none>           <none>
kube-system   kube-flannel-ds-amd64-ptv9z      1/1     Running   0          44s     10.10.119.128   k8s-m1   <none>           <none>
kube-system   kube-flannel-ds-amd64-zcv49      1/1     Running   0          44s     10.10.175.146   k8s-n1   <none>           <none>
kube-system   kube-proxy-9cmg2                 1/1     Running   0          2m34s   10.10.175.146   k8s-n1   <none>           <none>
kube-system   kube-proxy-krlkf                 1/1     Running   0          4m58s   10.10.56.27     k8s-m3   <none>           <none>
kube-system   kube-proxy-p9v66                 1/1     Running   0          14m     10.10.119.128   k8s-m1   <none>           <none>
kube-system   kube-proxy-wcgg6                 1/1     Running   0          5m      10.10.76.80     k8s-m2   <none>           <none>
kube-system   kube-scheduler-k8s-m1            1/1     Running   0          13m     10.10.119.128   k8s-m1   <none>           <none>
kube-system   kube-scheduler-k8s-m2            1/1     Running   0          5m      10.10.76.80     k8s-m2   <none>           <none>
kube-system   kube-scheduler-k8s-m3            1/1     Running   0          4m58s   10.10.56.27     k8s-m3   <none>           <none>
```

The installation is complete.
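You can also check readiness without reading the pod list by eye. The sketch below waits until every node reports Ready (nodes stay NotReady until the pod network is up). It then lists any kube-system pod that is not Running, so an empty result means everything is up. The function name is hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: wait until every node is Ready (nodes stay NotReady until the pod network
# is up), then list kube-system pods that are not Running. Name is hypothetical.
set -euo pipefail

wait_for_cluster() {
  local timeout="${1:-300s}"
  kubectl wait --for=condition=Ready node --all --timeout="$timeout"
  kubectl get pods -n kube-system -o wide --field-selector='status.phase!=Running'
}

# Usage on master1: wait_for_cluster 600s
```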

Verification

- First, verify that kube-apiserver, kube-controller-manager, kube-scheduler and the pod network work:

```shell
$ kubectl create deployment nginx --image=nginx:alpine
$ kubectl get pods -l app=nginx -o wide
NAME                     READY   STATUS    RESTARTS   AGE     IP           NODE     NOMINATED NODE   READINESS GATES
nginx-54458cd494-r6hqm   1/1     Running   0          5m24s   10.244.4.2   k8s-n1   <none>           <none>
```

- Verify kube-proxy

```shell
$ kubectl expose deployment nginx --port=80 --type=NodePort
service/nginx exposed

[root@k8s-m1 ~]$ kubectl get svc
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1        <none>        443/TCP        122m
nginx        NodePort    10.108.192.221   <none>        80:30992/TCP   4s

[root@k8s-m1 ~]$ kubectl get pods -l app=nginx -o wide
NAME                     READY   STATUS    RESTARTS   AGE     IP           NODE     NOMINATED NODE   READINESS GATES
nginx-54458cd494-r6hqm   1/1     Running   0          6m53s   10.244.4.2   k8s-n1   <none>           <none>

$ curl -I k8s-n1:30992
HTTP/1.1 200 OK
```

- Verify DNS and the pod network

```shell
kubectl run --generator=run-pod/v1 -it curl --image=radial/busyboxplus:curl
If you don't see a command prompt, try pressing enter.
[ root@curl:/ ]$ nslookup nginx
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      nginx
Address 1: 10.108.192.221 nginx.default.svc.cluster.local
```

- High availability

Shut down master1, then access Nginx from a random pod:

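```shell
# Run inside a pod (for example the curl pod above); sleep after every attempt, including failed ones
while true; do curl -I nginx; sleep 1; done
```

Summary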

About versions

- Kernel 4.19 is more stable; the 4.17 used here is not recommended (anything newer is already 5.x)

- docker: this article uses 18.06. Kubernetes has officially confirmed compatibility with 18.09 as well, but 17.12 is still the recommended stable version for production

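About networking: every team chooses differently, and flannel is common in small and mid-sized companies. Whichever plugin you pick, choose it before deploying and set it in the config file in advance. For flannel this means the pod CIDR (networking.podSubnet; flannel defaults to 10.244.0.0/16). The kubeadm config at the start of the official blog leaves it out, but its later network setup section makes it mandatory.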
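For flannel, the pod CIDR set in advance must equal the Network value in flannel's own config. The sketch below compares the two. It assumes flannel's settings live in the kube-flannel-cfg ConfigMap (key net-conf.json), as in the upstream manifest applied above. It reads kubeadm's stored config from the kubeadm-config ConfigMap. The function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: check that kubeadm's podSubnet equals flannel's Network.
# ASSUMPTION: flannel keeps its settings in ConfigMap kube-flannel-cfg (key net-conf.json),
# as the upstream kube-flannel.yml does. Function names are hypothetical.
set -euo pipefail

# Print the first IPv4 CIDR that follows KEY (quoted or not) in the text on stdin
extract_cidr() {
  grep -oE "\"?$1\"?: *\"?[0-9./]+" | grep -oE '[0-9]+(\.[0-9]+){3}/[0-9]+' | head -n 1
}

check_pod_cidr() {
  local kubeadm_cidr flannel_cidr
  kubeadm_cidr=$(kubectl -n kube-system get cm kubeadm-config -o yaml | extract_cidr podSubnet || true)
  flannel_cidr=$(kubectl -n kube-system get cm kube-flannel-cfg -o yaml | extract_cidr Network || true)
  if [ -n "$kubeadm_cidr" ] && [ "$kubeadm_cidr" = "$flannel_cidr" ]; then
    echo "OK: podSubnet ${kubeadm_cidr}"
  else
    echo "MISMATCH: kubeadm podSubnet=${kubeadm_cidr:-<unset>} flannel Network=${flannel_cidr:-<unset>}" >&2
    return 1
  fi
}

# Usage on master1: check_pod_cidr
```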

Troubleshooting

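- To reset the environment, kubeadm reset is a handy tool, but it does not reset everything. The data stored in the external etcd (ConfigMaps, Secrets and so on) is not cleared. If you need to reset the whole environment, remember to reset etcd as well after running reset.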

- To reset etcd, empty /var/lib/etcd on the etcd nodes and restart the docker service.
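Emptying /var/lib/etcd removes everything etcd stores. If the etcd cluster itself should keep running, a narrower alternative is to delete only the Kubernetes keys. The kube-apiserver keeps these under its default /registry prefix in the etcd v3 keyspace. The sketch below reuses the etcd image and certificates from the health check; the function name is hypothetical, and the deletion is irreversible:

```shell
#!/usr/bin/env bash
# Sketch: after kubeadm reset on the masters, delete only the Kubernetes data from the
# external etcd cluster. kube-apiserver keeps its objects under the /registry prefix
# of the etcd v3 keyspace, so the v3 API is required here. IRREVERSIBLE.
# Reuses the etcd image and peer certificates from the health check; name is hypothetical.
set -euo pipefail

HOST0="${HOST0:-10.10.184.226}"

wipe_k8s_data_in_etcd() {
  docker run --rm --net host -e ETCDCTL_API=3 \
    -v /etc/kubernetes:/etc/kubernetes k8s.gcr.io/etcd:3.2.24 \
    etcdctl --endpoints "https://${HOST0}:2379" \
      --cacert /etc/kubernetes/pki/etcd/ca.crt \
      --cert /etc/kubernetes/pki/etcd/peer.crt \
      --key /etc/kubernetes/pki/etcd/peer.key \
      del /registry --prefix
}

# Usage on any etcd node: wipe_k8s_data_in_etcd
```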

Getting around the Great Firewall

- Images: kubeadm supports a custom image prefix; just set imageRepository in kubeadm-config.yaml

- yum: download the packages in advance and import them, or set http_proxy to reach the repository

- init: issuing certificates and running init both need to reach Google; setting http_proxy works here too.
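Below is a sketch of running individual commands through a proxy. The proxy address is hypothetical. The no_proxy list is an assumption built from this article's addresses: node IPs in 10.10.0.0/16, the LB name, kubeadm's default service CIDR 10.96.0.0/12, and flannel's 10.244.0.0/16. Its purpose is to keep cluster traffic off the proxy:

```shell
#!/usr/bin/env bash
# Sketch: run a single command through an HTTP proxy without exporting the proxy
# into the rest of the shell session.
# ASSUMPTIONS: PROXY_URL is hypothetical; NO_PROXY_LIST is built from this article's
# node range, LB name, kubeadm's default service CIDR and flannel's pod CIDR.
set -euo pipefail

PROXY_URL="${PROXY_URL:-http://proxy.example.com:3128}"
NO_PROXY_LIST="${NO_PROXY_LIST:-127.0.0.1,localhost,k8s.paas.test,10.10.0.0/16,10.96.0.0/12,10.244.0.0/16}"

with_proxy() {
  # Set both spellings: some tools read only the lowercase or only the uppercase form
  http_proxy="$PROXY_URL" https_proxy="$PROXY_URL" no_proxy="$NO_PROXY_LIST" \
  HTTP_PROXY="$PROXY_URL" HTTPS_PROXY="$PROXY_URL" NO_PROXY="$NO_PROXY_LIST" \
    "$@"
}

# Usage:
#   with_proxy yum -y install --downloadonly --downloaddir=k8s --disableexcludes=kubernetes kubelet-1.13.1 kubeadm-1.13.1 kubectl-1.13.1
#   with_proxy kubeadm init --config kubeadm-config.yaml
```

Scoping the variables to one command keeps later kubectl calls from going through the proxy by accident.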

More

- Certificates and upgrades are covered in the next article.

References:

- https://kubernetes.io/docs/setup/independent/setup-ha-etcd-with-kubeadm/

