- 🍨 本文為🔗365天深度學習訓練營 中的學習記錄博客

- 🍖 原作者:K同學啊 | 接輔導、項目定制

文章目錄

- 前言

- 1 我的環境

- 2 代碼實現與執行結果

- 2.1 前期準備

- 2.1.1 引入庫

- 2.1.2 設置GPU(如果設備上支持GPU就使用GPU,否則使用CPU)

- 2.1.3 導入數據

- 2.1.4 可視化數據

- 2.1.4 圖像數據變換

- 2.1.4 劃分數據集

- 2.1.4 加載數據

- 2.1.4 查看數據

- 2.2 構建CNN網絡模型

- 2.3 訓練模型

- 2.3.1 訓練模型

- 2.3.2 編寫訓練函數

- 2.3.3 編寫測試函數

- 2.3.4 正式訓練

- 2.4 結果可視化

- 2.4 指定圖片進行預測

- 2.6 保存并加載模型

- 3 知識點詳解

- 3.1 torch.utils.data.DataLoader()參數詳解

- 3.2 torch.squeeze()與torch.unsqueeze()詳解

- 3.3 拔高嘗試--更改優化器為Adam

- 3.4 拔高嘗試--更改優化器為Adam+增加dropout層

- 3.5 拔高嘗試--更改優化器為Adam+增加dropout層+保存最好的模型

- 總結

前言

本文將采用pytorch框架創建CNN網絡,實現猴痘病識別。講述實現代碼與執行結果,并淺談涉及知識點。

關鍵字: torch.utils.data.DataLoader()參數詳解,torch.squeeze()與torch.unsqueeze()詳解,拔高嘗試–更改優化器為Adam,增加dropout層,保存最好的模型

1 我的環境

- 電腦系統:Windows 11

- 語言環境:python 3.8.6

- 編譯器:pycharm2020.2.3

- 深度學習環境:

torch == 1.9.1+cu111

torchvision == 0.10.1+cu111 - 顯卡:NVIDIA GeForce RTX 4070

2 代碼實現與執行結果

2.1 前期準備

2.1.1 引入庫

import torch

import torch.nn as nn

from torchvision import transforms, datasets

import time

from pathlib import Path

from PIL import Image

from torchinfo import summary

import torch.nn.functional as F

import matplotlib.pyplot as pltplt.rcParams['font.sans-serif'] = ['SimHei'] # 用來正常顯示中文標簽

plt.rcParams['axes.unicode_minus'] = False # 用來正常顯示負號

plt.rcParams['figure.dpi'] = 100 # 分辨率

import warningswarnings.filterwarnings('ignore') # 忽略一些warning內容,無需打印

2.1.2 設置GPU(如果設備上支持GPU就使用GPU,否則使用CPU)

"""前期準備-設置GPU"""

# 如果設備上支持GPU就使用GPU,否則使用CPUdevice = torch.device("cuda" if torch.cuda.is_available() else "cpu")print("Using {} device".format(device))

輸出

Using cuda device

2.1.3 導入數據

[猴痘病數據](https://pan.baidu.com/s/11r_uOUV0ToMNXQtxahb0yg?pwd=7qtp)

'''前期工作-導入數據'''

data_dir = r"D:\DeepLearning\data\monkeypox_recognition"

data_dir = Path(data_dir)data_paths = list(data_dir.glob('*'))

classeNames = [str(path).split("\\")[-1] for path in data_paths]

print(classeNames)

輸出

['Monkeypox', 'Others']

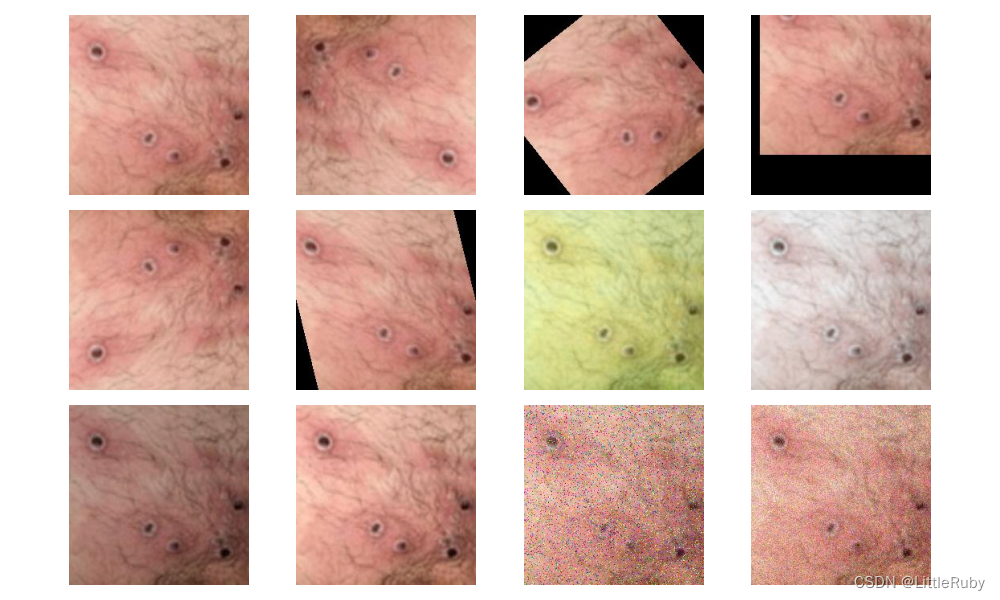

2.1.4 可視化數據

'''前期工作-可視化數據'''

cloudyPath = Path(data_dir)/"Monkeypox"

image_files = list(p.resolve() for p in cloudyPath.glob('*') if p.suffix in [".jpg", ".png", ".jpeg"])

plt.figure(figsize=(10, 6))

for i in range(len(image_files[:12])):image_file = image_files[i]ax = plt.subplot(3, 4, i + 1)img = Image.open(str(image_file))plt.imshow(img)plt.axis("off")

# 顯示圖片

plt.tight_layout()

plt.show()

2.1.4 圖像數據變換

'''前期工作-圖像數據變換'''

total_datadir = data_dir# 關于transforms.Compose的更多介紹可以參考:https://blog.csdn.net/qq_38251616/article/details/124878863

train_transforms = transforms.Compose([transforms.Resize([224, 224]), # 將輸入圖片resize成統一尺寸transforms.ToTensor(), # 將PIL Image或numpy.ndarray轉換為tensor,并歸一化到[0,1]之間transforms.Normalize( # 標準化處理-->轉換為標準正太分布(高斯分布),使模型更容易收斂mean=[0.485, 0.456, 0.406],std=[0.229, 0.224, 0.225]) # 其中 mean=[0.485,0.456,0.406]與std=[0.229,0.224,0.225] 從數據集中隨機抽樣計算得到的。

])

total_data = datasets.ImageFolder(total_datadir, transform=train_transforms)

print(total_data)

print(total_data.class_to_idx)

輸出

Dataset ImageFolderNumber of datapoints: 2142Root location: D:\DeepLearning\data\monkeypox_recognitionStandardTransform

Transform: Compose(Resize(size=[224, 224], interpolation=bilinear, max_size=None, antialias=None)ToTensor()Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]))

{'Monkeypox': 0, 'Others': 1}

2.1.4 劃分數據集

'''前期工作-劃分數據集'''

train_size = int(0.8 * len(total_data)) # train_size表示訓練集大小,通過將總體數據長度的80%轉換為整數得到;

test_size = len(total_data) - train_size # test_size表示測試集大小,是總體數據長度減去訓練集大小。

# 使用torch.utils.data.random_split()方法進行數據集劃分。該方法將總體數據total_data按照指定的大小比例([train_size, test_size])隨機劃分為訓練集和測試集,

# 并將劃分結果分別賦值給train_dataset和test_dataset兩個變量。

train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])

print("train_dataset={}\ntest_dataset={}".format(train_dataset, test_dataset))

print("train_size={}\ntest_size={}".format(train_size, test_size))

輸出

train_dataset=<torch.utils.data.dataset.Subset object at 0x00000231AFD1B550>

test_dataset=<torch.utils.data.dataset.Subset object at 0x00000231AFD1B430>

train_size=1713

test_size=429

2.1.4 加載數據

'''前期工作-加載數據'''

batch_size = 32train_dl = torch.utils.data.DataLoader(train_dataset,batch_size=batch_size,shuffle=True,num_workers=1)

test_dl = torch.utils.data.DataLoader(test_dataset,batch_size=batch_size,shuffle=True,num_workers=1)

2.1.4 查看數據

'''前期工作-查看數據'''

for X, y in test_dl:print("Shape of X [N, C, H, W]: ", X.shape)print("Shape of y: ", y.shape, y.dtype)break

輸出

Shape of X [N, C, H, W]: torch.Size([32, 3, 224, 224])

Shape of y: torch.Size([32]) torch.int64

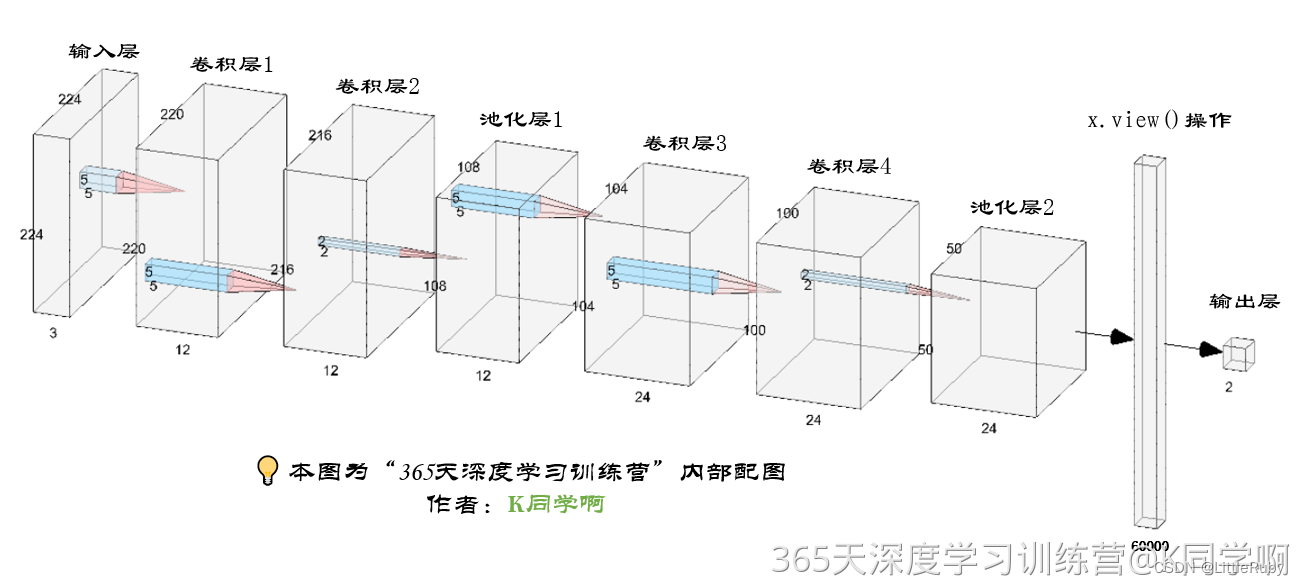

2.2 構建CNN網絡模型

"""構建CNN網絡"""

class Network_bn(nn.Module):def __init__(self):super(Network_bn, self).__init__()"""nn.Conv2d()函數:第一個參數(in_channels)是輸入的channel數量第二個參數(out_channels)是輸出的channel數量第三個參數(kernel_size)是卷積核大小第四個參數(stride)是步長,默認為1第五個參數(padding)是填充大小,默認為0"""self.conv1 = nn.Conv2d(in_channels=3, out_channels=12, kernel_size=5, stride=1, padding=0)self.bn1 = nn.BatchNorm2d(12)self.conv2 = nn.Conv2d(in_channels=12, out_channels=12, kernel_size=5, stride=1, padding=0)self.bn2 = nn.BatchNorm2d(12)self.pool = nn.MaxPool2d(2, 2)self.conv4 = nn.Conv2d(in_channels=12, out_channels=24, kernel_size=5, stride=1, padding=0)self.bn4 = nn.BatchNorm2d(24)self.conv5 = nn.Conv2d(in_channels=24, out_channels=24, kernel_size=5, stride=1, padding=0)self.bn5 = nn.BatchNorm2d(24)self.fc1 = nn.Linear(24 * 50 * 50, len(classeNames))def forward(self, x):x = F.relu(self.bn1(self.conv1(x)))x = F.relu(self.bn2(self.conv2(x)))x = self.pool(x)x = F.relu(self.bn4(self.conv4(x)))x = F.relu(self.bn5(self.conv5(x)))x = self.pool(x)x = x.view(-1, 24 * 50 * 50)x = self.fc1(x)return xmodel = Network_bn().to(device)

print(model)

summary(model)

輸出

Network_bn((conv1): Conv2d(3, 12, kernel_size=(5, 5), stride=(1, 1))(bn1): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv2): Conv2d(12, 12, kernel_size=(5, 5), stride=(1, 1))(bn2): BatchNorm2d(12, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(pool): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)(conv4): Conv2d(12, 24, kernel_size=(5, 5), stride=(1, 1))(bn4): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(conv5): Conv2d(24, 24, kernel_size=(5, 5), stride=(1, 1))(bn5): BatchNorm2d(24, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(fc1): Linear(in_features=60000, out_features=2, bias=True)

)

=================================================================

Layer (type:depth-idx) Param #

=================================================================

Network_bn --

├─Conv2d: 1-1 912

├─BatchNorm2d: 1-2 24

├─Conv2d: 1-3 3,612

├─BatchNorm2d: 1-4 24

├─MaxPool2d: 1-5 --

├─Conv2d: 1-6 7,224

├─BatchNorm2d: 1-7 48

├─Conv2d: 1-8 14,424

├─BatchNorm2d: 1-9 48

├─Linear: 1-10 120,002

=================================================================

Total params: 146,318

Trainable params: 146,318

Non-trainable params: 0

=================================================================

2.3 訓練模型

2.3.1 訓練模型

"""訓練模型--設置超參數"""

loss_fn = nn.CrossEntropyLoss() # 創建損失函數,計算實際輸出和真實相差多少,交叉熵損失函數,事實上,它就是做圖片分類任務時常用的損失函數

learn_rate = 1e-4 # 學習率

opt = torch.optim.SGD(model.parameters(), lr=learn_rate) # 作用是定義優化器,用來訓練時候優化模型參數;其中,SGD表示隨機梯度下降,用于控制實際輸出y與真實y之間的相差有多大2.3.2 編寫訓練函數

"""訓練模型--編寫訓練函數"""

# 訓練循環

def train(dataloader, model, loss_fn, optimizer):size = len(dataloader.dataset) # 訓練集的大小,一共60000張圖片num_batches = len(dataloader) # 批次數目,1875(60000/32)train_loss, train_acc = 0, 0 # 初始化訓練損失和正確率for X, y in dataloader: # 加載數據加載器,得到里面的 X(圖片數據)和 y(真實標簽)X, y = X.to(device), y.to(device) # 用于將數據存到顯卡# 計算預測誤差pred = model(X) # 網絡輸出loss = loss_fn(pred, y) # 計算網絡輸出和真實值之間的差距,targets為真實值,計算二者差值即為損失# 反向傳播optimizer.zero_grad() # 清空過往梯度loss.backward() # 反向傳播,計算當前梯度optimizer.step() # 根據梯度更新網絡參數# 記錄acc與losstrain_acc += (pred.argmax(1) == y).type(torch.float).sum().item()train_loss += loss.item()train_acc /= sizetrain_loss /= num_batchesreturn train_acc, train_loss

2.3.3 編寫測試函數

"""訓練模型--編寫測試函數"""

# 測試函數和訓練函數大致相同,但是由于不進行梯度下降對網絡權重進行更新,所以不需要傳入優化器

def test(dataloader, model, loss_fn):size = len(dataloader.dataset) # 測試集的大小,一共10000張圖片num_batches = len(dataloader) # 批次數目,313(10000/32=312.5,向上取整)test_loss, test_acc = 0, 0# 當不進行訓練時,停止梯度更新,節省計算內存消耗with torch.no_grad(): # 測試時模型參數不用更新,所以 no_grad,整個模型參數正向推就ok,不反向更新參數for imgs, target in dataloader:imgs, target = imgs.to(device), target.to(device)# 計算losstarget_pred = model(imgs)loss = loss_fn(target_pred, target)test_loss += loss.item()test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()#統計預測正確的個數test_acc /= sizetest_loss /= num_batchesreturn test_acc, test_loss2.3.4 正式訓練

"""訓練模型--正式訓練"""

epochs = 20

train_loss = []

train_acc = []

test_loss = []

test_acc = []for epoch in range(epochs):model.train()epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, opt)model.eval()epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)train_acc.append(epoch_train_acc)train_loss.append(epoch_train_loss)test_acc.append(epoch_test_acc)test_loss.append(epoch_test_loss)template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%,Test_loss:{:.3f}')print(template.format(epoch + 1, epoch_train_acc * 100, epoch_train_loss, epoch_test_acc * 100, epoch_test_loss))

print('Done')

輸出

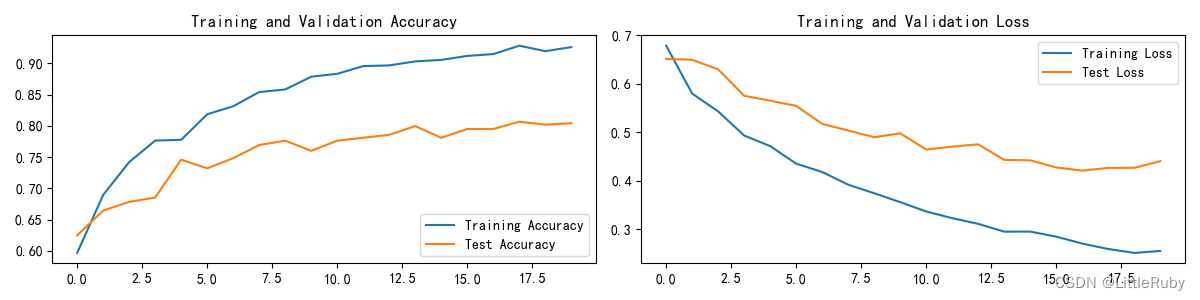

Epoch: 1, duration:7062ms, Train_acc:59.7%, Train_loss:0.679, Test_acc:62.5%,Test_loss:0.651

Epoch: 2, duration:5408ms, Train_acc:68.9%, Train_loss:0.580, Test_acc:66.4%,Test_loss:0.650

Epoch: 3, duration:5328ms, Train_acc:74.2%, Train_loss:0.543, Test_acc:67.8%,Test_loss:0.630

Epoch: 4, duration:5345ms, Train_acc:77.6%, Train_loss:0.493, Test_acc:68.5%,Test_loss:0.575

Epoch: 5, duration:5340ms, Train_acc:77.8%, Train_loss:0.471, Test_acc:74.6%,Test_loss:0.565

Epoch: 6, duration:5295ms, Train_acc:81.8%, Train_loss:0.435, Test_acc:73.2%,Test_loss:0.555

Epoch: 7, duration:5309ms, Train_acc:83.1%, Train_loss:0.418, Test_acc:74.8%,Test_loss:0.517

Epoch: 8, duration:5268ms, Train_acc:85.4%, Train_loss:0.392, Test_acc:76.9%,Test_loss:0.504

Epoch: 9, duration:5395ms, Train_acc:85.8%, Train_loss:0.374, Test_acc:77.6%,Test_loss:0.490

Epoch:10, duration:5346ms, Train_acc:87.9%, Train_loss:0.356, Test_acc:76.0%,Test_loss:0.498

Epoch:11, duration:5297ms, Train_acc:88.3%, Train_loss:0.336, Test_acc:77.6%,Test_loss:0.464

Epoch:12, duration:5291ms, Train_acc:89.6%, Train_loss:0.323, Test_acc:78.1%,Test_loss:0.470

Epoch:13, duration:5259ms, Train_acc:89.7%, Train_loss:0.311, Test_acc:78.6%,Test_loss:0.475

Epoch:14, duration:5343ms, Train_acc:90.3%, Train_loss:0.295, Test_acc:80.0%,Test_loss:0.443

Epoch:15, duration:5363ms, Train_acc:90.5%, Train_loss:0.295, Test_acc:78.1%,Test_loss:0.442

Epoch:16, duration:5305ms, Train_acc:91.2%, Train_loss:0.284, Test_acc:79.5%,Test_loss:0.427

Epoch:17, duration:5279ms, Train_acc:91.5%, Train_loss:0.270, Test_acc:79.5%,Test_loss:0.421

Epoch:18, duration:5356ms, Train_acc:92.8%, Train_loss:0.259, Test_acc:80.7%,Test_loss:0.426

Epoch:19, duration:5284ms, Train_acc:91.9%, Train_loss:0.251, Test_acc:80.2%,Test_loss:0.427

Epoch:20, duration:5274ms, Train_acc:92.6%, Train_loss:0.255, Test_acc:80.4%,Test_loss:0.440

Done

2.4 結果可視化

"""訓練模型--結果可視化"""

epochs_range = range(epochs)plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)plt.plot(epochs_range, train_acc, label='Training Accuracy')

plt.plot(epochs_range, test_acc, label='Test Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')plt.subplot(1, 2, 2)

plt.plot(epochs_range, train_loss, label='Training Loss')

plt.plot(epochs_range, test_loss, label='Test Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

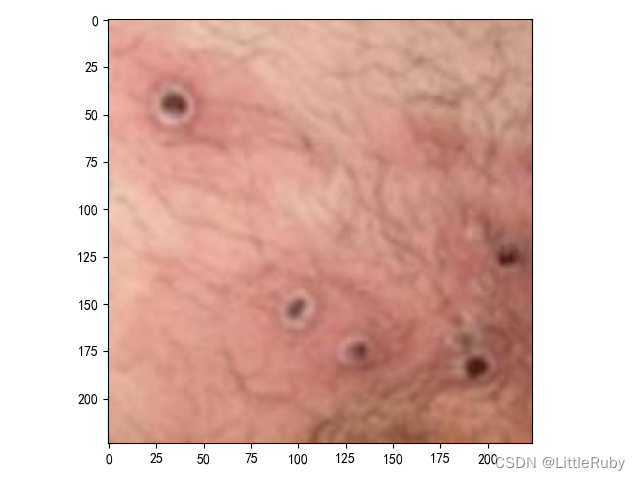

2.4 指定圖片進行預測

def predict_one_image(image_path, model, transform, classes):test_img = Image.open(image_path).convert('RGB')plt.imshow(test_img) # 展示預測的圖片plt.show()test_img = transform(test_img)img = test_img.to(device).unsqueeze(0)model.eval()output = model(img)_, pred = torch.max(output, 1)pred_class = classes[pred]print(f'預測結果是:{pred_class}')classes = list(total_data.class_to_idx)

# 預測訓練集中的某張照片

predict_one_image(image_path=str(Path(data_dir)/"Monkeypox"/"M01_01_00.jpg"),model=model,transform=train_transforms,classes=classes)

輸出

預測結果是:Monkeypox

2.6 保存并加載模型

"""保存并加載模型"""

# 模型保存

PATH = './model.pth' # 保存的參數文件名

torch.save(model.state_dict(), PATH)# 將參數加載到model當中

model.load_state_dict(torch.load(PATH, map_location=device))

3 知識點詳解

3.1 torch.utils.data.DataLoader()參數詳解

torch.utils.data.DataLoader 是 PyTorch 中用于加載和管理數據的一個實用工具類。它允許你以小批次的方式迭代你的數據集,這對于訓練神經網絡和其他機器學習任務非常有用。DataLoader 構造函數接受多個參數,下面是一些常用的參數及其解釋:

dataset(必需參數):這是你的數據集對象,通常是 torch.utils.data.Dataset 的子類,它包含了你的數據樣本。batch_size(可選參數):指定每個小批次中包含的樣本數。默認值為 1。shuffle(可選參數):如果設置為 True,則在每個 epoch 開始時對數據進行洗牌,以隨機打亂樣本的順序。這對于訓練數據的隨機性很重要,以避免模型學習到數據的順序性。默認值為 False。num_workers(可選參數):用于數據加載的子進程數量。通常,將其設置為大于 0 的值可以加快數據加載速度,特別是當數據集很大時。默認值為 0,表示在主進程中加載數據。pin_memory(可選參數):如果設置為 True,則數據加載到 GPU 時會將數據存儲在 CUDA 的鎖頁內存中,這可以加速數據傳輸到 GPU。默認值為 False。drop_last(可選參數):如果設置為 True,則在最后一個小批次可能包含樣本數小于 batch_size 時,丟棄該小批次。這在某些情況下很有用,以確保所有小批次具有相同的大小。默認值為 False。timeout(可選參數):如果設置為正整數,它定義了每個子進程在等待數據加載器傳遞數據時的超時時間(以秒為單位)。這可以用于避免子進程卡住的情況。默認值為 0,表示沒有超時限制。worker_init_fn(可選參數):一個可選的函數,用于初始化每個子進程的狀態。這對于設置每個子進程的隨機種子或其他初始化操作很有用。

3.2 torch.squeeze()與torch.unsqueeze()詳解

torch.squeeze()

對數據的維度進行壓縮,去掉維數為1的的維度

函數原型

torch.squeeze(input, dim=None, *, out=None)

關鍵參數

● input (Tensor):輸入Tensor

● dim (int, optional):如果給定,輸入將只在這個維度上被壓縮

實戰案例

>>> x = torch.zeros(2, 1, 2, 1, 2)

>>> x.size()

torch.Size([2, 1, 2, 1, 2])

>>> y = torch.squeeze(x)

>>> y.size()

torch.Size([2, 2, 2])

>>> y = torch.squeeze(x, 0)

>>> y.size()

torch.Size([2, 1, 2, 1, 2])

>>> y = torch.squeeze(x, 1)

>>> y.size()

torch.Size([2, 2, 1, 2])

torch.unsqueeze()

對數據維度進行擴充。給指定位置加上維數為一的維度

函數原型

torch.unsqueeze(input, dim)

關鍵參數說明:

●input (Tensor):輸入Tensor

●dim (int):插入單例維度的索引

實戰案例:

>>> x = torch.tensor([1, 2, 3, 4])

>>> torch.unsqueeze(x, 0)

tensor([[ 1, 2, 3, 4]])

>>> torch.unsqueeze(x, 1)

tensor([[ 1],[ 2],[ 3],[ 4]])

3.3 拔高嘗試–更改優化器為Adam

opt = torch.optim.Adam(model.parameters(), lr=learn_rate)

訓練過程如下

Epoch: 1, duration:8545ms, Train_acc:62.6%, Train_loss:0.822, Test_acc:61.3%,Test_loss:0.622

Epoch: 2, duration:5357ms, Train_acc:77.5%, Train_loss:0.462, Test_acc:80.0%,Test_loss:0.448

Epoch: 3, duration:5594ms, Train_acc:85.0%, Train_loss:0.340, Test_acc:79.3%,Test_loss:0.417

Epoch: 4, duration:5536ms, Train_acc:89.3%, Train_loss:0.263, Test_acc:80.4%,Test_loss:0.485

Epoch: 5, duration:5387ms, Train_acc:91.8%, Train_loss:0.226, Test_acc:77.2%,Test_loss:0.524

Epoch: 6, duration:5337ms, Train_acc:95.1%, Train_loss:0.175, Test_acc:84.6%,Test_loss:0.388

Epoch: 7, duration:5445ms, Train_acc:96.0%, Train_loss:0.136, Test_acc:86.9%,Test_loss:0.384

Epoch: 8, duration:5413ms, Train_acc:97.1%, Train_loss:0.108, Test_acc:86.7%,Test_loss:0.368

Epoch: 9, duration:5402ms, Train_acc:97.8%, Train_loss:0.094, Test_acc:85.5%,Test_loss:0.374

Epoch:10, duration:5360ms, Train_acc:98.6%, Train_loss:0.077, Test_acc:87.4%,Test_loss:0.370

Epoch:11, duration:5327ms, Train_acc:99.1%, Train_loss:0.057, Test_acc:86.2%,Test_loss:0.389

Epoch:12, duration:5432ms, Train_acc:99.5%, Train_loss:0.044, Test_acc:84.1%,Test_loss:0.500

Epoch:13, duration:5385ms, Train_acc:99.5%, Train_loss:0.043, Test_acc:86.2%,Test_loss:0.399

Epoch:14, duration:5419ms, Train_acc:99.8%, Train_loss:0.031, Test_acc:86.9%,Test_loss:0.400

Epoch:15, duration:5375ms, Train_acc:99.8%, Train_loss:0.025, Test_acc:86.9%,Test_loss:0.380

Epoch:16, duration:5373ms, Train_acc:99.9%, Train_loss:0.023, Test_acc:87.6%,Test_loss:0.374

Epoch:17, duration:5383ms, Train_acc:99.8%, Train_loss:0.023, Test_acc:88.8%,Test_loss:0.390

Epoch:18, duration:5398ms, Train_acc:99.9%, Train_loss:0.021, Test_acc:88.1%,Test_loss:0.425

Epoch:19, duration:5491ms, Train_acc:99.9%, Train_loss:0.020, Test_acc:87.6%,Test_loss:0.393

Epoch:20, duration:5405ms, Train_acc:99.9%, Train_loss:0.015, Test_acc:87.2%,Test_loss:0.400

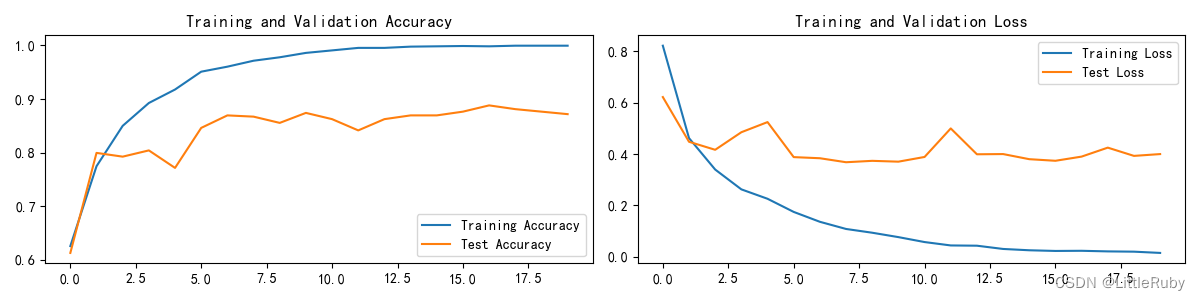

acc與loss圖如下,測試精度最高達到88.8%

3.4 拔高嘗試–更改優化器為Adam+增加dropout層

在更改優化器為Adam代碼的基礎上,修改網絡模型結構,提升測試精度

class Network_bn(nn.Module):def __init__(self):super(Network_bn, self).__init__()"""nn.Conv2d()函數:第一個參數(in_channels)是輸入的channel數量第二個參數(out_channels)是輸出的channel數量第三個參數(kernel_size)是卷積核大小第四個參數(stride)是步長,默認為1第五個參數(padding)是填充大小,默認為0"""self.conv1 = nn.Conv2d(in_channels=3, out_channels=12, kernel_size=5, stride=1, padding=0)self.bn1 = nn.BatchNorm2d(12)self.conv2 = nn.Conv2d(in_channels=12, out_channels=12, kernel_size=5, stride=1, padding=0)self.bn2 = nn.BatchNorm2d(12)self.pool = nn.MaxPool2d(2, 2)self.conv4 = nn.Conv2d(in_channels=12, out_channels=24, kernel_size=5, stride=1, padding=0)self.bn4 = nn.BatchNorm2d(24)self.conv5 = nn.Conv2d(in_channels=24, out_channels=24, kernel_size=5, stride=1, padding=0)self.bn5 = nn.BatchNorm2d(24)self.dropout = nn.Dropout(p=0.5)self.fc1 = nn.Linear(24 * 50 * 50, len(classeNames))def forward(self, x):x = F.relu(self.bn1(self.conv1(x)))x = F.relu(self.bn2(self.conv2(x)))x = self.pool(x)x = F.relu(self.bn4(self.conv4(x)))x = F.relu(self.bn5(self.conv5(x)))x = self.pool(x)x = self.dropout(x)x = x.view(-1, 24 * 50 * 50)x = self.fc1(x)return x訓練過程如下

Epoch: 1, duration:7376ms, Train_acc:62.3%, Train_loss:0.812, Test_acc:69.7%,Test_loss:0.576

Epoch: 2, duration:5362ms, Train_acc:75.0%, Train_loss:0.522, Test_acc:77.4%,Test_loss:0.470

Epoch: 3, duration:5439ms, Train_acc:84.5%, Train_loss:0.369, Test_acc:78.3%,Test_loss:0.418

Epoch: 4, duration:5418ms, Train_acc:87.6%, Train_loss:0.305, Test_acc:84.4%,Test_loss:0.369

Epoch: 5, duration:5422ms, Train_acc:90.6%, Train_loss:0.253, Test_acc:83.0%,Test_loss:0.377

Epoch: 6, duration:5418ms, Train_acc:92.1%, Train_loss:0.215, Test_acc:87.6%,Test_loss:0.334

Epoch: 7, duration:5414ms, Train_acc:92.4%, Train_loss:0.196, Test_acc:86.5%,Test_loss:0.343

Epoch: 8, duration:5373ms, Train_acc:95.6%, Train_loss:0.140, Test_acc:84.4%,Test_loss:0.454

Epoch: 9, duration:5403ms, Train_acc:96.4%, Train_loss:0.125, Test_acc:87.2%,Test_loss:0.331

Epoch:10, duration:5402ms, Train_acc:96.8%, Train_loss:0.111, Test_acc:87.9%,Test_loss:0.300

Epoch:11, duration:5448ms, Train_acc:98.0%, Train_loss:0.081, Test_acc:89.5%,Test_loss:0.293

Epoch:12, duration:5394ms, Train_acc:98.4%, Train_loss:0.073, Test_acc:89.0%,Test_loss:0.314

Epoch:13, duration:5444ms, Train_acc:98.5%, Train_loss:0.064, Test_acc:88.6%,Test_loss:0.352

Epoch:14, duration:5400ms, Train_acc:98.0%, Train_loss:0.074, Test_acc:89.7%,Test_loss:0.322

Epoch:15, duration:5396ms, Train_acc:98.9%, Train_loss:0.052, Test_acc:89.7%,Test_loss:0.329

Epoch:16, duration:5519ms, Train_acc:99.0%, Train_loss:0.045, Test_acc:86.2%,Test_loss:0.397

Epoch:17, duration:5374ms, Train_acc:99.2%, Train_loss:0.038, Test_acc:90.2%,Test_loss:0.302

Epoch:18, duration:5464ms, Train_acc:99.3%, Train_loss:0.037, Test_acc:91.1%,Test_loss:0.314

Epoch:19, duration:5668ms, Train_acc:98.6%, Train_loss:0.054, Test_acc:88.3%,Test_loss:0.341

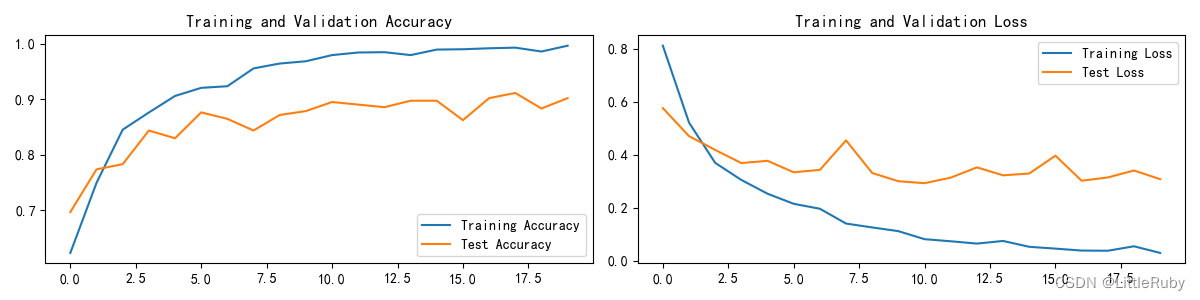

Epoch:20, duration:5540ms, Train_acc:99.6%, Train_loss:0.029, Test_acc:90.2%,Test_loss:0.308acc與loss圖如下,測試精度最高達到91.1%

3.5 拔高嘗試–更改優化器為Adam+增加dropout層+保存最好的模型

在更改優化器為Adam+增加dropout層代碼的基礎上在訓練模型階段增加部分代碼,保存最好的模型,預測前加載模型

"""訓練模型--正式訓練"""epochs = 20train_loss = []train_acc = []test_loss = []test_acc = []best_test_acc=0PATH = './model.pth' # 保存的參數文件名for epoch in range(epochs):milliseconds_t1 = int(time.time() * 1000)model.train()epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, opt)model.eval()epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)train_acc.append(epoch_train_acc)train_loss.append(epoch_train_loss)test_acc.append(epoch_test_acc)test_loss.append(epoch_test_loss)milliseconds_t2 = int(time.time() * 1000)template = ('Epoch:{:2d}, duration:{}ms, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%,Test_loss:{:.3f}')if best_test_acc < epoch_test_acc:best_test_acc = epoch_test_acc# 模型保存torch.save(model.state_dict(), PATH)template = ('Epoch:{:2d}, duration:{}ms, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%,Test_loss:{:.3f},save model.pth')print(template.format(epoch + 1, milliseconds_t2-milliseconds_t1, epoch_train_acc * 100, epoch_train_loss, epoch_test_acc * 100, epoch_test_loss))print('Done')

訓練記錄如下

Epoch: 1, duration:7342ms, Train_acc:61.6%, Train_loss:0.837, Test_acc:68.3%,Test_loss:0.796,save model.pth

Epoch: 2, duration:5390ms, Train_acc:75.4%, Train_loss:0.524, Test_acc:77.6%,Test_loss:0.472,save model.pth

Epoch: 3, duration:5348ms, Train_acc:82.4%, Train_loss:0.417, Test_acc:84.6%,Test_loss:0.411,save model.pth

Epoch: 4, duration:5377ms, Train_acc:85.1%, Train_loss:0.351, Test_acc:82.3%,Test_loss:0.424

Epoch: 5, duration:5362ms, Train_acc:85.7%, Train_loss:0.326, Test_acc:83.7%,Test_loss:0.426

Epoch: 6, duration:5371ms, Train_acc:88.3%, Train_loss:0.270, Test_acc:84.6%,Test_loss:0.353

Epoch: 7, duration:5426ms, Train_acc:92.6%, Train_loss:0.199, Test_acc:89.0%,Test_loss:0.320,save model.pth

Epoch: 8, duration:5432ms, Train_acc:89.7%, Train_loss:0.256, Test_acc:83.0%,Test_loss:0.478

Epoch: 9, duration:5447ms, Train_acc:93.1%, Train_loss:0.189, Test_acc:85.5%,Test_loss:0.395

Epoch:10, duration:5630ms, Train_acc:95.2%, Train_loss:0.133, Test_acc:87.4%,Test_loss:0.316

Epoch:11, duration:5469ms, Train_acc:95.2%, Train_loss:0.127, Test_acc:87.2%,Test_loss:0.352

Epoch:12, duration:5366ms, Train_acc:96.3%, Train_loss:0.106, Test_acc:90.0%,Test_loss:0.328,save model.pth

Epoch:13, duration:5543ms, Train_acc:97.3%, Train_loss:0.085, Test_acc:88.6%,Test_loss:0.284

Epoch:14, duration:5500ms, Train_acc:97.4%, Train_loss:0.084, Test_acc:89.3%,Test_loss:0.299

Epoch:15, duration:5398ms, Train_acc:97.9%, Train_loss:0.068, Test_acc:90.2%,Test_loss:0.269,save model.pth

Epoch:16, duration:5436ms, Train_acc:98.4%, Train_loss:0.056, Test_acc:88.8%,Test_loss:0.282

Epoch:17, duration:5447ms, Train_acc:99.1%, Train_loss:0.050, Test_acc:87.6%,Test_loss:0.325

Epoch:18, duration:5483ms, Train_acc:97.7%, Train_loss:0.067, Test_acc:89.5%,Test_loss:0.294

Epoch:19, duration:5431ms, Train_acc:98.7%, Train_loss:0.046, Test_acc:90.2%,Test_loss:0.298

Epoch:20, duration:5553ms, Train_acc:99.4%, Train_loss:0.032, Test_acc:90.7%,Test_loss:0.278,save model.pth

總結

通過本文的學習,pytorch實現猴痘病識別,并通過改變優化器的方式,以及增加dropout層,提升了原有模型的測試精度,并保存訓練過程中最好的模型。

:10月銷額增長53%)

函數實現統計每個元素出現的頻數+并將最終統計頻數結果轉換成dataframe數據框形式)