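1. Introduction

The improvement covered in this post is EfficientViT (an efficient vision transformer). At its core is a lightweight multi-scale linear attention module that achieves a global receptive field and multi-scale learning using only hardware-efficient operations. The version used here is the 2023 EfficientViT architecture from the paper 'EfficientViT: Multi-Scale Linear Attention for High-Resolution Dense Prediction' (please note which version this is). The post first explains how the model works, then walks you step by step through adding it to the network, and finally shows the record of my successful run. If you hit any problem while running it, leave a comment and I will reply. In my own tests, on datasets for both small-object and large-object detection, it gave a substantial gain (mAP rose by roughly 0.1).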


Column recap: YOLOv8 Improvement Series column. It continually reviews work from top conferences and is essential for research.

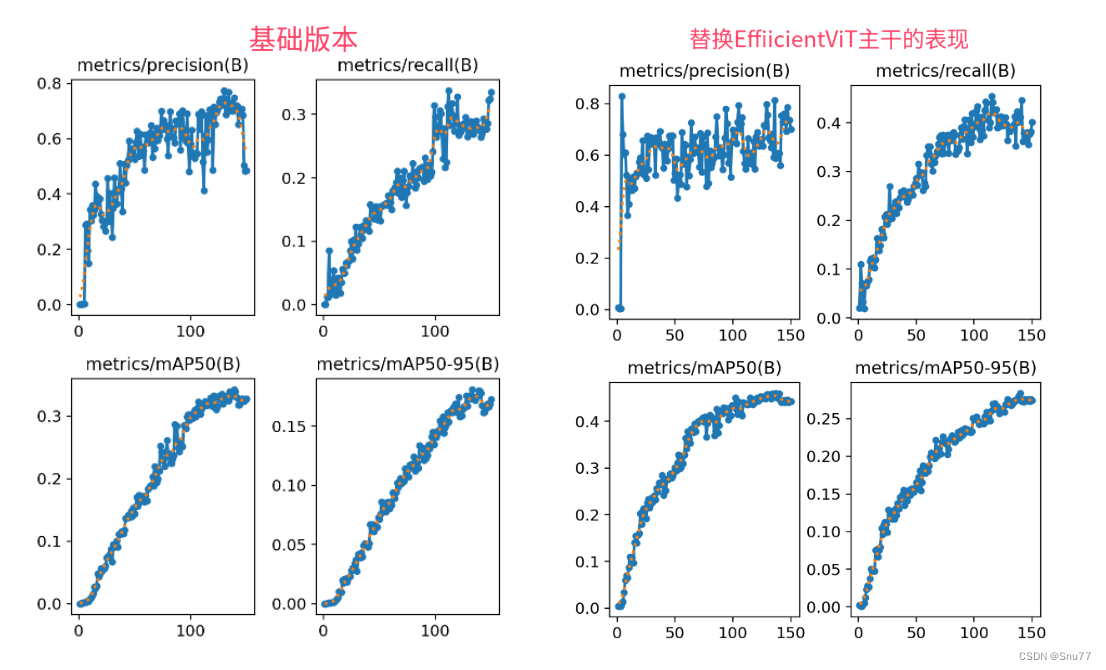

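Training results comparison chart

For this experiment I used a dataset of roughly 700 to 800 images and trained for 150 epochs. Training had not fully converged, but the gain was already substantial, so it is worth trying. Results can differ a lot from one dataset to another. I also provide several yaml files later in the post so that you can run your own experiments and check the effect.

As you can see, mAP rose by roughly 0.1.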

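Contents

1. Introduction

2. How EfficientViT Works

2.1 Basic Principles of EfficientViT

2.2 Multi-Scale Linear Attention

2.3 Lightweight and Hardware-Efficient Operations

2.4 Significant Performance Gains and Speedups

3. Complete EfficientViT Code

4. Step by Step: Adding the EfficientViT Network Structure

Modification 1

Modification 2

Modification 3

Modification 4

Modification 5

Modification 6

Modification 7

5. EfficientViT 2023 yaml File

6. Successful Run Record

7. Summary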

2. How EfficientViT Works

Paper: official paper link

Code: official code link

?

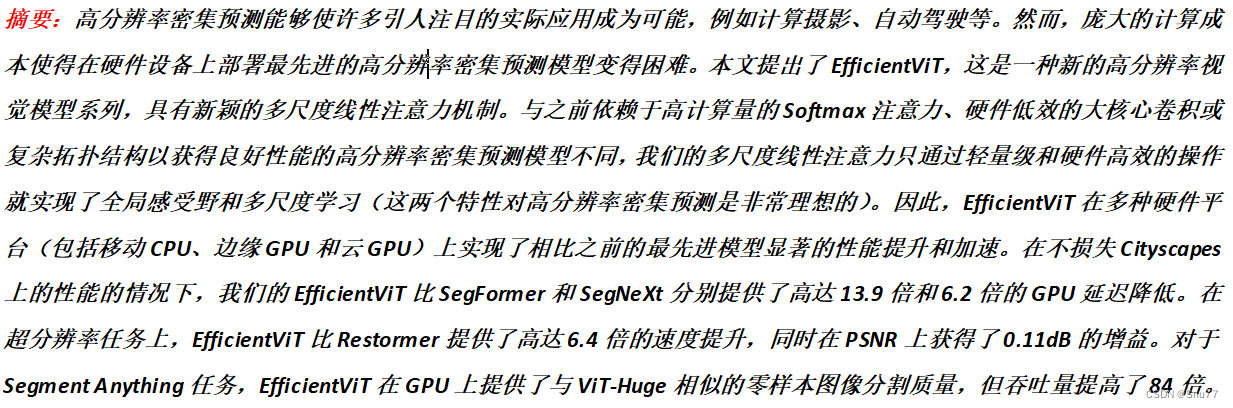

?

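2.1 Basic Principles of EfficientViT

EfficientViT is an efficient vision transformer designed for high-resolution images. It improves model performance through a novel multi-scale linear attention mechanism while reducing computational cost. The attention mechanism is optimised to suit hardware implementation, which enables fast image processing on a range of platforms, including mobile CPUs, edge GPUs, and cloud GPUs. Compared with conventional high-resolution dense prediction models, EfficientViT substantially improves computational efficiency while maintaining high performance.

The basic principles of EfficientViT can be summarised as follows:

1. Multi-scale linear attention: EfficientViT adopts a new multi-scale linear attention mechanism intended to improve both efficiency and accuracy when processing high-resolution images.

2. Lightweight and hardware-efficient operations: unlike conventional high-resolution dense prediction models, EfficientViT achieves a global receptive field and multi-scale learning with lightweight, hardware-efficient operations, which lowers computational cost.

3. Significant performance gains and speedups: on a range of hardware platforms, including mobile CPUs, edge GPUs, and cloud GPUs, EfficientViT delivers clear accuracy gains and speedups over previous models.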

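2.2 Multi-Scale Linear Attention

Multi-scale linear attention is a lightweight attention module for improving efficiency on high-resolution images. It aims to achieve a global receptive field and multi-scale learning with simple operations, which matters especially for high-resolution dense prediction. It captures long-range dependencies effectively while remaining hardware-efficient, which makes it well suited to high-resolution visual recognition tasks.

The figure below shows the building blocks of EfficientViT. On the left is the basic building block, consisting of a multi-scale linear attention module and a feed-forward network with depthwise convolution (FFN+DWConv). On the right, multi-scale linear attention is shown in detail: it obtains multi-scale Q/K/V tokens by aggregating nearby tokens.

After the linear projection layer produces the Q/K/V tokens, lightweight small-kernel convolutions generate multi-scale tokens, which are then processed by ReLU linear attention. Finally, the outputs are concatenated and fed into the final linear projection layer for feature fusion. The design aims to capture both contextual and local information in a compute- and memory-efficient way.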
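The ReLU linear attention step can be illustrated with a minimal sketch on plain Python lists. It is not the post's PyTorch implementation: the function names and the toy Q/K/V values are made up for illustration. It shows the one idea that matters: because ReLU replaces Softmax, the products can be regrouped so that K^T V is computed once, and appending a column of ones to V yields the normaliser in the same pass (the same padding trick appears in `relu_linear_att` in section 3).

```python
# Minimal sketch of ReLU linear attention on plain Python lists.
# All names and values here are illustrative, not part of EfficientViT.

def relu(vec):
    return [max(0.0, x) for x in vec]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def quadratic_attention(Q, K, V, eps=1e-15):
    """Reference form: builds every query-key similarity, O(N^2 * d)."""
    out = []
    for q in Q:
        weights = [dot(relu(q), relu(k)) for k in K]
        denom = sum(weights) + eps
        out.append([sum(w * v[c] for w, v in zip(weights, V)) / denom
                    for c in range(len(V[0]))])
    return out

def linear_attention(Q, K, V, eps=1e-15):
    """Same result via associativity: accumulate K^T [V, 1] once, O(N * d^2)."""
    d, dv = len(K[0]), len(V[0])
    # kv[a][c] = sum_j relu(k_j)[a] * v_j[c]; the extra last column uses
    # v = 1 and therefore accumulates the normaliser sum_j relu(k_j)[a].
    kv = [[0.0] * (dv + 1) for _ in range(d)]
    for k, v in zip(K, V):
        rk = relu(k)
        padded_v = list(v) + [1.0]
        for a in range(d):
            for c in range(dv + 1):
                kv[a][c] += rk[a] * padded_v[c]
    out = []
    for q in Q:
        rq = relu(q)
        row = [sum(rq[a] * kv[a][c] for a in range(d)) for c in range(dv + 1)]
        out.append([x / (row[-1] + eps) for x in row[:-1]])
    return out

Q = [[1.0, -2.0], [0.5, 3.0], [2.0, 1.0]]
K = [[0.3, 1.0], [-1.0, 2.0], [1.5, 0.2]]
V = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]

slow = quadratic_attention(Q, K, V)
fast = linear_attention(Q, K, V)
max_diff = max(abs(a - b) for r1, r2 in zip(slow, fast) for a, b in zip(r1, r2))
print(max_diff)  # the two forms agree up to floating-point rounding
```

The linear form never materialises the N x N attention matrix, which is why its cost grows linearly with the number of tokens.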

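2.3 Lightweight and Hardware-Efficient Operations

The lightweight, hardware-efficient operations in EfficientViT refer mainly to the simplified attention mechanism and convolution operations used in the model, which let it run efficiently on a wide range of hardware. Specifically, by combining multi-scale linear attention with a feed-forward network that uses depthwise convolution, and by avoiding the expensive Softmax function inside the attention module, the model keeps its accuracy while significantly reducing computational complexity. These operations include replacing conventional Softmax attention with multi-scale linear attention, and using depthwise separable convolutions to reduce parameters and computation.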
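The savings can be made concrete with a small back-of-the-envelope sketch. The formulas below are the standard weight counts for convolutions and rough multiplication counts for attention. The channel sizes, token count, and head dimension are arbitrary example values, not figures from the paper.

```python
def conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k

def depthwise_separable_params(c_in, c_out, k):
    """Depthwise k x k conv (one filter per channel) plus 1 x 1 pointwise conv."""
    return c_in * k * k + c_in * c_out

def softmax_attention_mults(n_tokens, dim):
    """Q K^T and attn @ V each cost about n_tokens^2 * dim multiplications."""
    return 2 * n_tokens * n_tokens * dim

def linear_attention_mults(n_tokens, dim):
    """K^T V and Q (K^T V) each cost about n_tokens * dim^2 multiplications."""
    return 2 * n_tokens * dim * dim

standard = conv_params(64, 128, 3)
separable = depthwise_separable_params(64, 128, 3)
print(standard, separable, round(standard / separable, 1))  # 73728 8768 8.4

# Example: a 64 x 64 feature map (4096 tokens) with a head dimension of 16.
ratio = softmax_attention_mults(4096, 16) // linear_attention_mults(4096, 16)
print(ratio)  # 256
```

The attention ratio equals tokens divided by head dimension, so the advantage of the linear form grows with image resolution.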

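The figure below shows the macro architecture of EfficientViT:

The macro architecture consists of a standard backbone together with a head (encoder-decoder) design. EfficientViT modules are inserted into stages 3 and 4 of the backbone. Following common practice, the features from the last three stages (P2, P3, and P4) are fed into the head and fused by addition, which keeps the design simple and efficient. The head itself is simple, made up of a few MBConv blocks and output layers. Within this framework, EfficientViT provides a new lightweight multi-scale attention mechanism that handles high-resolution images efficiently while remaining adaptable to different hardware platforms.

2.4 Significant Performance Gains and Speedups

The performance gains and speedups refer to the model running more efficiently and faster than previous models on image processing tasks across hardware platforms. This comes from EfficientViT's design choices, such as multi-scale linear attention and depthwise separable convolutions. On high-resolution tasks such as the Cityscapes dataset, these choices let the model cut latency substantially while maintaining accuracy. In some applications, EfficientViT offers up to several times lower GPU latency than existing state-of-the-art models, which makes it very practical on resource-constrained devices.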

3. Complete EfficientViT Code

    import torch.nn as nn
    import torch
    from inspect import signature
    from timm.models.efficientvit_mit import val2tuple, ResidualBlock
    from torch.cuda.amp import autocast
    import torch.nn.functional as F


    class LayerNorm2d(nn.LayerNorm):
        # Layer normalisation over the channel dimension of an NCHW tensor.
        def forward(self, x: torch.Tensor) -> torch.Tensor:
            out = x - torch.mean(x, dim=1, keepdim=True)
            out = out / torch.sqrt(torch.square(out).mean(dim=1, keepdim=True) + self.eps)
            if self.elementwise_affine:
                out = out * self.weight.view(1, -1, 1, 1) + self.bias.view(1, -1, 1, 1)
            return out


    REGISTERED_NORM_DICT: dict[str, type] = {
        "bn2d": nn.BatchNorm2d,
        "ln": nn.LayerNorm,
        "ln2d": LayerNorm2d,
    }

    # register activation function here
    REGISTERED_ACT_DICT: dict[str, type] = {
        "relu": nn.ReLU,
        "relu6": nn.ReLU6,
        "hswish": nn.Hardswish,
        "silu": nn.SiLU,
    }


    class FusedMBConv(nn.Module):
        def __init__(
            self,
            in_channels: int,
            out_channels: int,
            kernel_size=3,
            stride=1,
            mid_channels=None,
            expand_ratio=6,
            groups=1,
            use_bias=False,
            norm=("bn2d", "bn2d"),
            act_func=("relu6", None),
        ):
            super().__init__()
            use_bias = val2tuple(use_bias, 2)
            norm = val2tuple(norm, 2)
            act_func = val2tuple(act_func, 2)
            mid_channels = mid_channels or round(in_channels * expand_ratio)

            self.spatial_conv = ConvLayer(
                in_channels, mid_channels, kernel_size, stride, groups=groups,
                use_bias=use_bias[0], norm=norm[0], act_func=act_func[0],
            )
            self.point_conv = ConvLayer(
                mid_channels, out_channels, 1,
                use_bias=use_bias[1], norm=norm[1], act_func=act_func[1],
            )

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            x = self.spatial_conv(x)
            x = self.point_conv(x)
            return x


    class DSConv(nn.Module):
        # Depthwise separable convolution: depthwise conv followed by 1x1 pointwise conv.
        def __init__(
            self,
            in_channels: int,
            out_channels: int,
            kernel_size=3,
            stride=1,
            use_bias=False,
            norm=("bn2d", "bn2d"),
            act_func=("relu6", None),
        ):
            super(DSConv, self).__init__()
            use_bias = val2tuple(use_bias, 2)
            norm = val2tuple(norm, 2)
            act_func = val2tuple(act_func, 2)

            self.depth_conv = ConvLayer(
                in_channels, in_channels, kernel_size, stride, groups=in_channels,
                norm=norm[0], act_func=act_func[0], use_bias=use_bias[0],
            )
            self.point_conv = ConvLayer(
                in_channels, out_channels, 1,
                norm=norm[1], act_func=act_func[1], use_bias=use_bias[1],
            )

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            x = self.depth_conv(x)
            x = self.point_conv(x)
            return x


    class MBConv(nn.Module):
        # Inverted bottleneck: 1x1 expand, depthwise conv, 1x1 project.
        def __init__(
            self,
            in_channels: int,
            out_channels: int,
            kernel_size=3,
            stride=1,
            mid_channels=None,
            expand_ratio=6,
            use_bias=False,
            norm=("bn2d", "bn2d", "bn2d"),
            act_func=("relu6", "relu6", None),
        ):
            super(MBConv, self).__init__()
            use_bias = val2tuple(use_bias, 3)
            norm = val2tuple(norm, 3)
            act_func = val2tuple(act_func, 3)
            mid_channels = mid_channels or round(in_channels * expand_ratio)

            self.inverted_conv = ConvLayer(
                in_channels, mid_channels, 1, stride=1,
                norm=norm[0], act_func=act_func[0], use_bias=use_bias[0],
            )
            self.depth_conv = ConvLayer(
                mid_channels, mid_channels, kernel_size, stride=stride, groups=mid_channels,
                norm=norm[1], act_func=act_func[1], use_bias=use_bias[1],
            )
            self.point_conv = ConvLayer(
                mid_channels, out_channels, 1,
                norm=norm[2], act_func=act_func[2], use_bias=use_bias[2],
            )

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            x = self.inverted_conv(x)
            x = self.depth_conv(x)
            x = self.point_conv(x)
            return x


    class EfficientViTBlock(nn.Module):
        # Context module (LiteMLA) followed by a local module (MBConv), both residual.
        def __init__(
            self,
            in_channels: int,
            heads_ratio: float = 1.0,
            dim=32,
            expand_ratio: float = 4,
            norm="bn2d",
            act_func="hswish",
        ):
            super(EfficientViTBlock, self).__init__()
            self.context_module = ResidualBlock(
                LiteMLA(
                    in_channels=in_channels,
                    out_channels=in_channels,
                    heads_ratio=heads_ratio,
                    dim=dim,
                    norm=(None, norm),
                ),
                IdentityLayer(),
            )
            local_module = MBConv(
                in_channels=in_channels,
                out_channels=in_channels,
                expand_ratio=expand_ratio,
                use_bias=(True, True, False),
                norm=(None, None, norm),
                act_func=(act_func, act_func, None),
            )
            self.local_module = ResidualBlock(local_module, IdentityLayer())

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            x = self.context_module(x)
            x = self.local_module(x)
            return x


    class ResBlock(nn.Module):
        def __init__(
            self,
            in_channels: int,
            out_channels: int,
            kernel_size=3,
            stride=1,
            mid_channels=None,
            expand_ratio=1,
            use_bias=False,
            norm=("bn2d", "bn2d"),
            act_func=("relu6", None),
        ):
            super().__init__()
            use_bias = val2tuple(use_bias, 2)
            norm = val2tuple(norm, 2)
            act_func = val2tuple(act_func, 2)
            mid_channels = mid_channels or round(in_channels * expand_ratio)

            self.conv1 = ConvLayer(
                in_channels, mid_channels, kernel_size, stride,
                use_bias=use_bias[0], norm=norm[0], act_func=act_func[0],
            )
            self.conv2 = ConvLayer(
                mid_channels, out_channels, kernel_size, 1,
                use_bias=use_bias[1], norm=norm[1], act_func=act_func[1],
            )

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            x = self.conv1(x)
            x = self.conv2(x)
            return x


    class LiteMLA(nn.Module):
        r"""Lightweight multi-scale linear attention"""

        def __init__(
            self,
            in_channels: int,
            out_channels: int,
            heads: int or None = None,
            heads_ratio: float = 1.0,
            dim=8,
            use_bias=False,
            norm=(None, "bn2d"),
            act_func=(None, None),
            kernel_func="relu6",
            scales: tuple[int, ...] = (5,),
            eps=1.0e-15,
        ):
            super(LiteMLA, self).__init__()
            self.eps = eps
            heads = heads or int(in_channels // dim * heads_ratio)

            total_dim = heads * dim

            use_bias = val2tuple(use_bias, 2)
            norm = val2tuple(norm, 2)
            act_func = val2tuple(act_func, 2)

            self.dim = dim
            self.qkv = ConvLayer(
                in_channels, 3 * total_dim, 1,
                use_bias=use_bias[0], norm=norm[0], act_func=act_func[0],
            )
            # One depthwise + grouped 1x1 conv pair per scale to aggregate nearby tokens.
            self.aggreg = nn.ModuleList(
                [
                    nn.Sequential(
                        nn.Conv2d(
                            3 * total_dim,
                            3 * total_dim,
                            scale,
                            padding=get_same_padding(scale),
                            groups=3 * total_dim,
                            bias=use_bias[0],
                        ),
                        nn.Conv2d(3 * total_dim, 3 * total_dim, 1, groups=3 * heads, bias=use_bias[0]),
                    )
                    for scale in scales
                ]
            )
            self.kernel_func = build_act(kernel_func, inplace=False)

            self.proj = ConvLayer(
                total_dim * (1 + len(scales)), out_channels, 1,
                use_bias=use_bias[1], norm=norm[1], act_func=act_func[1],
            )

        @autocast(enabled=False)
        def relu_linear_att(self, qkv: torch.Tensor) -> torch.Tensor:
            B, _, H, W = list(qkv.size())

            if qkv.dtype == torch.float16:
                qkv = qkv.float()

            qkv = torch.reshape(qkv, (B, -1, 3 * self.dim, H * W))
            qkv = torch.transpose(qkv, -1, -2)
            q, k, v = (
                qkv[..., 0 : self.dim],
                qkv[..., self.dim : 2 * self.dim],
                qkv[..., 2 * self.dim :],
            )

            # lightweight linear attention
            q = self.kernel_func(q)
            k = self.kernel_func(k)

            # linear matmul; padding v with ones makes the last column the normaliser
            trans_k = k.transpose(-1, -2)
            v = F.pad(v, (0, 1), mode="constant", value=1)
            kv = torch.matmul(trans_k, v)
            out = torch.matmul(q, kv)
            out = torch.clone(out)
            out = out[..., :-1] / (out[..., -1:] + self.eps)

            out = torch.transpose(out, -1, -2)
            out = torch.reshape(out, (B, -1, H, W))
            return out

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            # generate multi-scale q, k, v
            qkv = self.qkv(x)
            multi_scale_qkv = [qkv]
            device, types = qkv.device, qkv.dtype
            for op in self.aggreg:
                if device.type == 'cuda' and types == torch.float32:
                    qkv = qkv.to(torch.float16)
                x1 = op(qkv)
                multi_scale_qkv.append(x1)
            multi_scale_qkv = torch.cat(multi_scale_qkv, dim=1)

            out = self.relu_linear_att(multi_scale_qkv)
            out = self.proj(out)
            return out

        @staticmethod
        def configure_litemla(model: nn.Module, **kwargs) -> None:
            eps = kwargs.get("eps", None)
            for m in model.modules():
                if isinstance(m, LiteMLA):
                    if eps is not None:
                        m.eps = eps


    def build_kwargs_from_config(config: dict, target_func: callable) -> dict[str, any]:
        # Keep only the keys that target_func actually accepts.
        valid_keys = list(signature(target_func).parameters)
        kwargs = {}
        for key in config:
            if key in valid_keys:
                kwargs[key] = config[key]
        return kwargs


    def build_norm(name="bn2d", num_features=None, **kwargs) -> nn.Module or None:
        if name in ["ln", "ln2d"]:
            kwargs["normalized_shape"] = num_features
        else:
            kwargs["num_features"] = num_features
        if name in REGISTERED_NORM_DICT:
            norm_cls = REGISTERED_NORM_DICT[name]
            args = build_kwargs_from_config(kwargs, norm_cls)
            return norm_cls(**args)
        else:
            return None


    def get_same_padding(kernel_size: int or tuple[int, ...]) -> int or tuple[int, ...]:
        if isinstance(kernel_size, tuple):
            return tuple([get_same_padding(ks) for ks in kernel_size])
        else:
            assert kernel_size % 2 > 0, "kernel size should be odd number"
            return kernel_size // 2


    def build_act(name: str, **kwargs) -> nn.Module or None:
        if name in REGISTERED_ACT_DICT:
            act_cls = REGISTERED_ACT_DICT[name]
            args = build_kwargs_from_config(kwargs, act_cls)
            return act_cls(**args)
        else:
            return None


    class ConvLayer(nn.Module):
        def __init__(
            self,
            in_channels: int,
            out_channels: int,
            kernel_size=3,
            stride=1,
            dilation=1,
            groups=1,
            use_bias=False,
            dropout=0,
            norm="bn2d",
            act_func="relu",
        ):
            super(ConvLayer, self).__init__()

            padding = get_same_padding(kernel_size)
            padding *= dilation

            self.dropout = nn.Dropout2d(dropout, inplace=False) if dropout > 0 else None
            self.conv = nn.Conv2d(
                in_channels,
                out_channels,
                kernel_size=(kernel_size, kernel_size),
                stride=(stride, stride),
                padding=padding,
                dilation=(dilation, dilation),
                groups=groups,
                bias=use_bias,
            )
            self.norm = build_norm(norm, num_features=out_channels)
            self.act = build_act(act_func)

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            if self.dropout is not None:
                x = self.dropout(x)
            device, dtype = x.device, x.dtype
            choose = False
            # On CUDA, float32 inputs are cast to float16 before the convolution.
            if device.type == 'cuda' and dtype == torch.float32:
                x = x.to(torch.float16)
                choose = True
            x = self.conv(x)
            if self.norm:
                x = self.norm(x)
            if self.act:
                x = self.act(x)
            if choose:
                x = x.to(torch.float16)
            return x


    class IdentityLayer(nn.Module):
        def forward(self, x: torch.Tensor) -> torch.Tensor:
            return x


    class OpSequential(nn.Module):
        def __init__(self, op_list: list[nn.Module or None]):
            super(OpSequential, self).__init__()
            valid_op_list = []
            for op in op_list:
                if op is not None:
                    valid_op_list.append(op)
            self.op_list = nn.ModuleList(valid_op_list)

        def forward(self, x: torch.Tensor) -> torch.Tensor:
            for op in self.op_list:
                x = op(x)
            return x


    class EfficientViTBackbone(nn.Module):
        def __init__(
            self,
            width_list: list[int],
            depth_list: list[int],
            in_channels=3,
            dim=32,
            expand_ratio=4,
            norm="ln2d",
            act_func="hswish",
        ) -> None:
            super().__init__()

            self.width_list = []
            # input stem
            self.input_stem = [
                ConvLayer(
                    in_channels=3,
                    out_channels=width_list[0],
                    stride=2,
                    norm=norm,
                    act_func=act_func,
                )
            ]
            for _ in range(depth_list[0]):
                block = self.build_local_block(
                    in_channels=width_list[0],
                    out_channels=width_list[0],
                    stride=1,
                    expand_ratio=1,
                    norm=norm,
                    act_func=act_func,
                )
                self.input_stem.append(ResidualBlock(block, IdentityLayer()))
            in_channels = width_list[0]
            self.input_stem = OpSequential(self.input_stem)
            self.width_list.append(in_channels)

            # stages
            self.stages = []
            for w, d in zip(width_list[1:3], depth_list[1:3]):
                stage = []
                for i in range(d):
                    stride = 2 if i == 0 else 1
                    block = self.build_local_block(
                        in_channels=in_channels,
                        out_channels=w,
                        stride=stride,
                        expand_ratio=expand_ratio,
                        norm=norm,
                        act_func=act_func,
                    )
                    block = ResidualBlock(block, IdentityLayer() if stride == 1 else None)
                    stage.append(block)
                    in_channels = w
                self.stages.append(OpSequential(stage))
                self.width_list.append(in_channels)

            for w, d in zip(width_list[3:], depth_list[3:]):
                stage = []
                block = self.build_local_block(
                    in_channels=in_channels,
                    out_channels=w,
                    stride=2,
                    expand_ratio=expand_ratio,
                    norm=norm,
                    act_func=act_func,
                    fewer_norm=True,
                )
                stage.append(ResidualBlock(block, None))
                in_channels = w

                for _ in range(d):
                    stage.append(
                        EfficientViTBlock(
                            in_channels=in_channels,
                            dim=dim,
                            expand_ratio=expand_ratio,
                            norm=norm,
                            act_func=act_func,
                        )
                    )
                self.stages.append(OpSequential(stage))
                self.width_list.append(in_channels)
            self.stages = nn.ModuleList(self.stages)

        @staticmethod
        def build_local_block(
            in_channels: int,
            out_channels: int,
            stride: int,
            expand_ratio: float,
            norm: str,
            act_func: str,
            fewer_norm: bool = False,
        ) -> nn.Module:
            if expand_ratio == 1:
                block = DSConv(
                    in_channels=in_channels,
                    out_channels=out_channels,
                    stride=stride,
                    use_bias=(True, False) if fewer_norm else False,
                    norm=(None, norm) if fewer_norm else norm,
                    act_func=(act_func, None),
                )
            else:
                block = MBConv(
                    in_channels=in_channels,
                    out_channels=out_channels,
                    stride=stride,
                    expand_ratio=expand_ratio,
                    use_bias=(True, True, False) if fewer_norm else False,
                    norm=(None, None, norm) if fewer_norm else norm,
                    act_func=(act_func, act_func, None),
                )
            return block

        def forward(self, x: torch.Tensor) -> list[torch.Tensor]:
            # Note: the input stem built in __init__ is not applied here; the
            # stages receive the input directly, which is why width_list[0] is 3.
            outputs = []
            for stage_id, stage in enumerate(self.stages):
                x = stage(x)
                if x.device.type == 'cuda':
                    x = x.to(torch.float16)
                outputs.append(x)
            return outputs  # one feature map per stage


    def efficientvit_backbone_b0(**kwargs) -> EfficientViTBackbone:
        backbone = EfficientViTBackbone(
            width_list=[3, 16, 32, 64, 128],
            depth_list=[1, 2, 2, 2, 2],
            dim=16,
            **build_kwargs_from_config(kwargs, EfficientViTBackbone),
        )
        return backbone


    def efficientvit_backbone_b1(**kwargs) -> EfficientViTBackbone:
        backbone = EfficientViTBackbone(
            width_list=[3, 32, 64, 128, 256],
            depth_list=[1, 2, 3, 3, 4],
            dim=16,
            **build_kwargs_from_config(kwargs, EfficientViTBackbone),
        )
        return backbone


    def efficientvit_backbone_b2(**kwargs) -> EfficientViTBackbone:
        backbone = EfficientViTBackbone(
            width_list=[3, 48, 96, 192, 384],
            depth_list=[1, 3, 4, 4, 6],
            dim=32,
            **build_kwargs_from_config(kwargs, EfficientViTBackbone),
        )
        return backbone


    def efficientvit_backbone_b3(**kwargs) -> EfficientViTBackbone:
        backbone = EfficientViTBackbone(
            width_list=[3, 64, 128, 256, 512],
            depth_list=[1, 4, 6, 6, 9],
            dim=32,
            **build_kwargs_from_config(kwargs, EfficientViTBackbone),
        )
        return backbone
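As a quick sanity check on what the backbone returns, the expected output shapes can be worked out by hand. The sketch below is a plain-Python estimate, not a run of the model. It assumes the forward pass as written in this post (only `self.stages` is applied, and every stage begins with one stride-2 block with "same" padding). The helper name `expected_output_shapes` is made up for illustration.

```python
import math

# width_list values copied from the four factory functions in this post.
WIDTHS = {
    "b0": [3, 16, 32, 64, 128],
    "b1": [3, 32, 64, 128, 256],
    "b2": [3, 48, 96, 192, 384],
    "b3": [3, 64, 128, 256, 512],
}

def expected_output_shapes(variant, height=640, width=640):
    """Return (channels, H, W) for each of the four stage outputs."""
    shapes = []
    h, w = height, width
    for channels in WIDTHS[variant][1:]:
        # A stride-2 conv with kernel 3 and padding 1 maps size -> ceil(size / 2).
        h, w = math.ceil(h / 2), math.ceil(w / 2)
        shapes.append((channels, h, w))
    return shapes

for c, h, w in expected_output_shapes("b0"):
    print(c, h, w)
```

Under these assumptions a 640 x 640 input to the b0 variant gives four feature maps at 1/2, 1/4, 1/8, and 1/16 of the input resolution, and the last three are the ones the yaml file in section 5 routes into the detection head.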

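4. Step by Step: Adding the EfficientViT Network Structure

Adding this backbone is about the most tedious of all the improvements. Some network structures can be assembled from a yaml file, but others contain details that simply cannot be expressed in yaml, and building them that way loses those details. Because each architecture involves many details that all need different changes, editing them one by one also invites mistakes. So here the backbone function returns the whole network directly, and we modify part of the YOLO code to accept it. From now on most of the network models I present will be added this way, and I will also make some improvements to model details, producing new structures that you can use directly. The tutorial starts here ->

(Code is provided after each step. Copy and paste it over the original, but be careful not to replace more than is shown.)

Modification 1

Create a new .py file under the "ultralytics/nn/modules" directory and paste the network code into it. I named mine EfficientV2.

Modification 2

Open the file "ultralytics/nn/tasks.py" and import our model near the top, as shown below.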

    from .modules.EfficientV2 import (
        efficientvit_backbone_b0,
        efficientvit_backbone_b1,
        efficientvit_backbone_b2,
        efficientvit_backbone_b3,
    )

Modification 3

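Add the following line of code. The later modifications read a `backbone` flag, so it has to be defined before the layer loop in parse_model; the line in question is presumably `backbone = False`.

Modification 4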

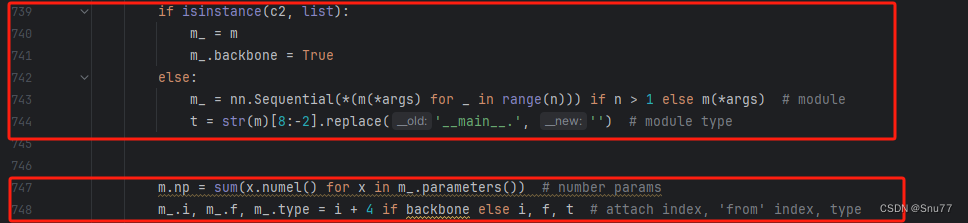

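Go to roughly line 700 (see the image for the exact location) and edit it to match the image by adding the part inside the red box. Note that there are no parentheses here, only the function names.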
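Why the parentheses must be left off can be shown with a small standalone sketch. The two factory functions and the `build` helper below are hypothetical stand-ins, not ultralytics code. The point is that the set holds function objects, the `in` test compares the function itself, and the backbone is only constructed once the branch calls it.

```python
def backbone_a():
    """Hypothetical factory standing in for efficientvit_backbone_b0."""
    return {"width_list": [3, 16, 32, 64, 128]}

def backbone_b():
    """Hypothetical factory standing in for efficientvit_backbone_b1."""
    return {"width_list": [3, 32, 64, 128, 256]}

REGISTERED = {backbone_a, backbone_b}  # function objects, no parentheses

def build(m):
    if m in REGISTERED:        # membership test on the function object itself
        model = m()            # the factory is only called inside the branch
        return model["width_list"], True
    return None, False

channels, is_backbone = build(backbone_a)
print(channels, is_backbone)
```

Writing `backbone_a()` inside the set would build a model immediately and test membership against the result rather than the function name, so the branch would never match the name resolved from the yaml file.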

    elif m in {efficientvit_backbone_b0, efficientvit_backbone_b1, efficientvit_backbone_b2, efficientvit_backbone_b3}:
        m = m()
        c2 = m.width_list  # returns the list of channels
        backbone = True

Modification 5

Both red boxes below need to be changed.

    if isinstance(c2, list):
        m_ = m
        m_.backbone = True
    else:
        m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args)  # module
    t = str(m)[8:-2].replace('__main__.', '')  # module type (kept outside the branch so it is always defined)
    m.np = sum(x.numel() for x in m_.parameters())  # number params
    m_.i, m_.f, m_.type = i + 4 if backbone else i, f, t  # attach index, 'from' index, type

Modification 6

The following part also needs to be changed. Follow my version exactly.

The code is below. Replace the original code with it.

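    save.extend(x % (i + 4 if backbone else i) for x in ([f] if isinstance(f, int) else f) if x != -1)  # append to savelist
    layers.append(m_)
    if i == 0:
        ch = []
    if isinstance(c2, list):
        ch.extend(c2)
        if len(c2) != 5:
            ch.insert(0, 0)
    else:
        ch.append(c2)

Modification 7

Modification 7 differs from the earlier ones: it changes part of the forward pass, so we are no longer inside the parse_model method.

You can see the line numbers in the image. We are still in the same tasks.py file. Several forward-pass methods in this file look very similar, so make sure you edit the right one: it is the one at around line 70. I provide the code below, so you can simply copy and paste it. When I have time I will make a video covering this step.

The code is as follows ->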

    def _predict_once(self, x, profile=False, visualize=False):
        """
        Perform a forward pass through the network.

        Args:
            x (torch.Tensor): The input tensor to the model.
            profile (bool): Print the computation time of each layer if True, defaults to False.
            visualize (bool): Save the feature maps of the model if True, defaults to False.

        Returns:
            (torch.Tensor): The last output of the model.
        """
        y, dt = [], []  # outputs
        for m in self.model:
            if m.f != -1:  # if not from previous layer
                x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]  # from earlier layers
            if profile:
                self._profile_one_layer(m, x, dt)
            if hasattr(m, 'backbone'):
                x = m(x)
                if len(x) != 5:  # 0 - 5
                    x.insert(0, None)
                for index, i in enumerate(x):
                    if index in self.save:
                        y.append(i)
                    else:
                        y.append(None)
                x = x[-1]  # pass the last output on to the next layer
            else:
                x = m(x)  # run
                y.append(x if m.i in self.save else None)  # save output
            if visualize:
                feature_visualization(x, m.type, m.i, save_dir=visualize)
        return x

That completes the modifications. There are many details here, so be very careful not to replace extra code, and do not skip any step. Either mistake will make the run fail, and the resulting errors are very hard to track down.
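To see why the backbone is padded to five outputs and why later layers start at index 5, the bookkeeping in the modified forward pass can be imitated with plain strings instead of tensors. This is a toy simulation only: the `run_layers` helper and the layer dictionaries are invented for illustration and are not ultralytics objects.

```python
def run_layers(layers, save, x):
    """Mimic the output bookkeeping of the modified forward pass."""
    y = []
    for layer in layers:
        f = layer["f"]
        if f != -1:  # input comes from earlier layers
            x = y[f] if isinstance(f, int) else [x if j == -1 else y[j] for j in f]
        if layer.get("backbone"):
            outs = list(layer["fn"](x))
            if len(outs) != 5:
                outs.insert(0, None)      # pad so the backbone fills slots 0-4
            for index, out in enumerate(outs):
                y.append(out if index in save else None)
            x = outs[-1]                  # the last feature map feeds the next layer
        else:
            x = layer["fn"](x)
            y.append(x if layer["i"] in save else None)
    return x, y

layers = [
    {"i": 4, "f": -1, "backbone": True,
     "fn": lambda x: ["s1", "s2", "s3", "s4"]},          # four stage outputs
    {"i": 5, "f": -1, "fn": lambda x: "sppf(" + x + ")"},
    {"i": 6, "f": -1, "fn": lambda x: "up(" + x + ")"},
    {"i": 7, "f": [-1, 3], "fn": lambda xs: "cat(" + ",".join(xs) + ")"},
]
save = {2, 3, 5}  # indices that later layers refer back to

final, y = run_layers(layers, save, "image")
print(final)
print(y)
```

Because the backbone occupies slots 0 to 4 of `y`, a reference such as `[-1, 3]` in the yaml file picks up the third stage output, which is the reason Modification 5 shifts every later layer index by 4.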

5. EfficientViT 2023 yaml File

Copy the following yaml file and run it.

    # Ultralytics YOLO 🚀, AGPL-3.0 license
    # YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

    # Parameters
    nc: 80  # number of classes
    scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
      # [depth, width, max_channels]
      n: [0.33, 0.25, 1024]  # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
      s: [0.33, 0.50, 1024]  # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
      m: [0.67, 0.75, 768]  # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
      l: [1.00, 1.00, 512]  # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
      x: [1.00, 1.25, 512]  # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

    # YOLOv8.0n backbone
    backbone:
      # [from, repeats, module, args]
      - [-1, 1, efficientvit_backbone_b0, []]  # 4
      - [-1, 1, SPPF, [1024, 5]]  # 5

    # YOLOv8.0n head
    head:
      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]  # 6
      - [[-1, 3], 1, Concat, [1]]  # 7 cat backbone P4
      - [-1, 3, C2f, [512]]  # 8

      - [-1, 1, nn.Upsample, [None, 2, 'nearest']]  # 9
      - [[-1, 2], 1, Concat, [1]]  # 10 cat backbone P3
      - [-1, 3, C2f, [256]]  # 11 (P3/8-small)

      - [-1, 1, Conv, [256, 3, 2]]  # 12
      - [[-1, 8], 1, Concat, [1]]  # 13 cat head P4
      - [-1, 3, C2f, [512]]  # 14 (P4/16-medium)

      - [-1, 1, Conv, [512, 3, 2]]  # 15
      - [[-1, 5], 1, Concat, [1]]  # 16 cat head P5
      - [-1, 3, C2f, [1024]]  # 17 (P5/32-large)

      - [[11, 14, 17], 1, Detect, [nc]]  # Detect(P3, P4, P5)
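The index comments in the yaml file (`# 4`, `# 5`, ...) can be cross-checked by tracing channel counts through the layers. The sketch below is a hand calculation under stated assumptions, not a call into ultralytics. It assumes the `n` scale (width multiplier 0.25, max_channels 1024), the b0 channel list from section 3, and that output channels are scaled as `make_divisible(min(c2, max_channels) * width, 8)`. The helper `trace_channels` is hypothetical.

```python
import math

def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor

def trace_channels(backbone_widths, width=0.25, max_channels=1024):
    """Return the channel count stored at every layer index of the yaml."""
    def scaled(c2):
        return make_divisible(min(c2, max_channels) * width)

    ch = list(backbone_widths)  # indices 0-4 are filled by the backbone
    layers_after_backbone = [
        ("SPPF", -1, 1024),          # 5
        ("Upsample", -1, None),      # 6
        ("Concat", [-1, 3], None),   # 7
        ("C2f", -1, 512),            # 8
        ("Upsample", -1, None),      # 9
        ("Concat", [-1, 2], None),   # 10
        ("C2f", -1, 256),            # 11
        ("Conv", -1, 256),           # 12
        ("Concat", [-1, 8], None),   # 13
        ("C2f", -1, 512),            # 14
        ("Conv", -1, 512),           # 15
        ("Concat", [-1, 5], None),   # 16
        ("C2f", -1, 1024),           # 17
    ]
    for name, src, c2 in layers_after_backbone:
        if name == "Concat":
            ch.append(sum(ch[j] for j in src))   # concatenation adds channels
        elif name == "Upsample":
            ch.append(ch[src])                   # channel count unchanged
        else:
            ch.append(scaled(c2))
    return ch

ch = trace_channels([3, 16, 32, 64, 128])
detect_inputs = [ch[i] for i in (11, 14, 17)]
print(ch[7], ch[10], ch[13], ch[16], detect_inputs)
```

If a different backbone variant or scale is used, only the arguments to `trace_channels` change. The layer indices stay the same because every variant returns the same number of feature maps.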

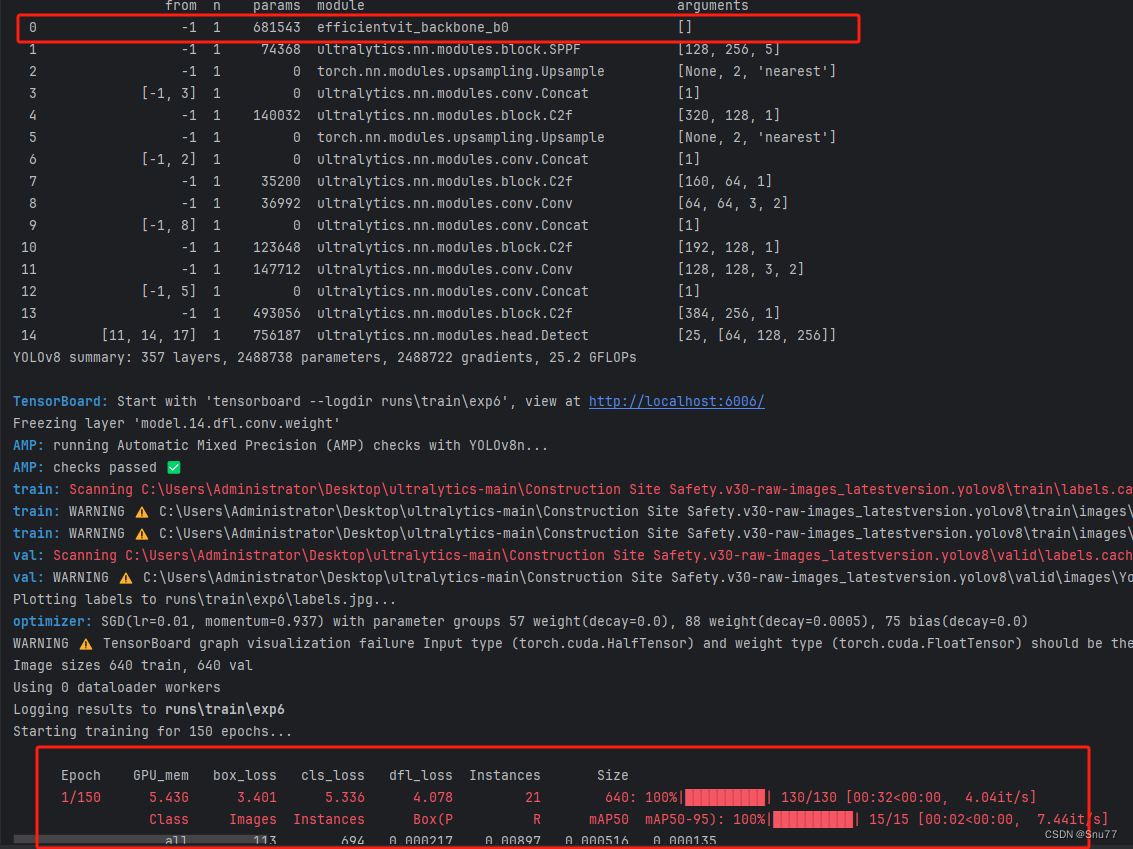

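6. Successful Run Record

Below is a screenshot of a successful run. One epoch of training has completed. The image was too large to capture the second epoch.

7. Summary

That concludes the main content of this post. I recommend my YOLOv8 improvement column on effective accuracy gains. It is newly launched and currently has an average quality score of 98. Going forward I will reproduce papers from the latest top conferences and also fill in some older improvement mechanisms. The column is free to read for now, so follow early. If this post helped you, subscribe to the column and follow for more updates.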
