目錄

一、語音喚醒部分

1、首先在科大訊飛官網注冊開發者賬號

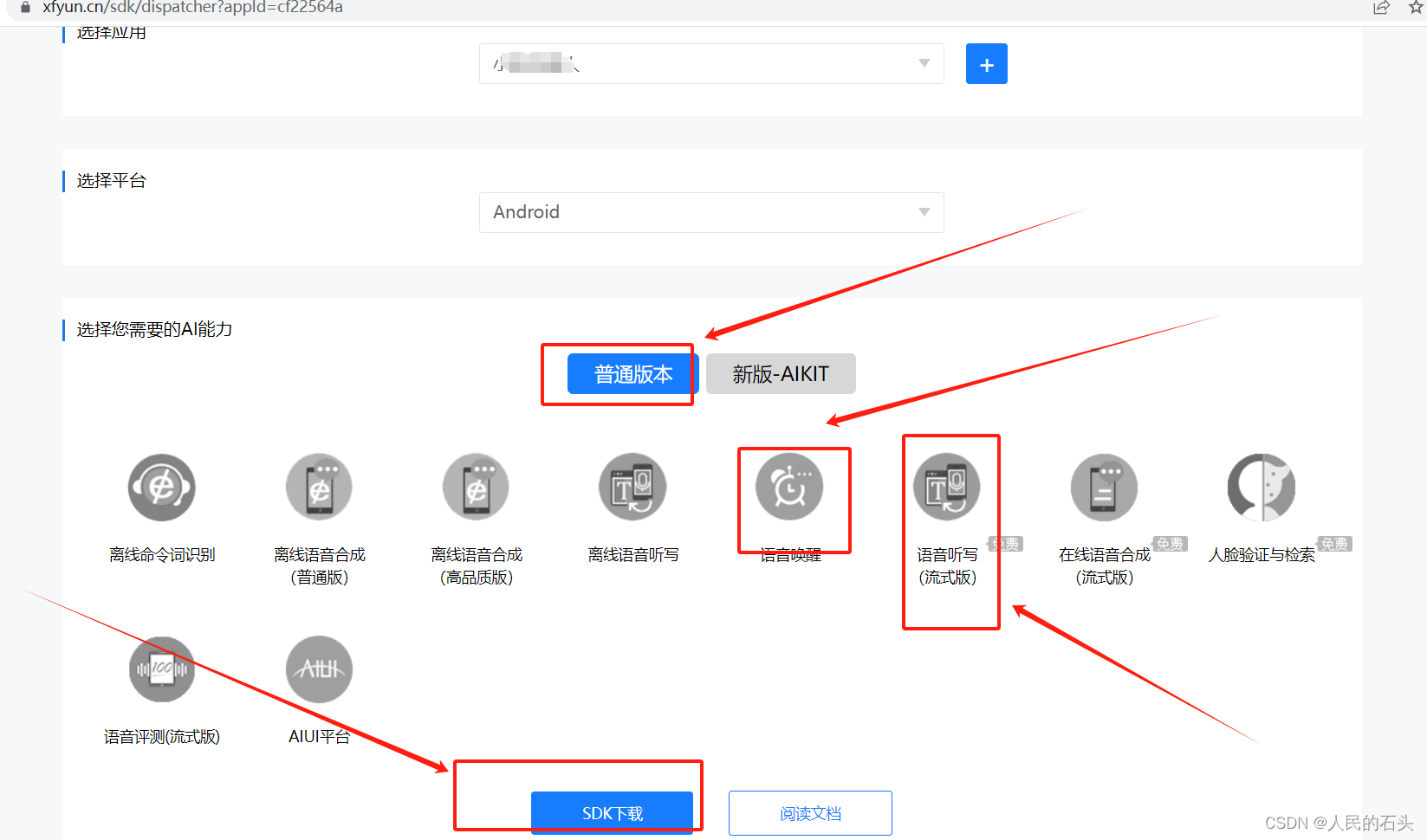

2、配置喚醒詞然后下載sdk

3、選擇對應功能下載

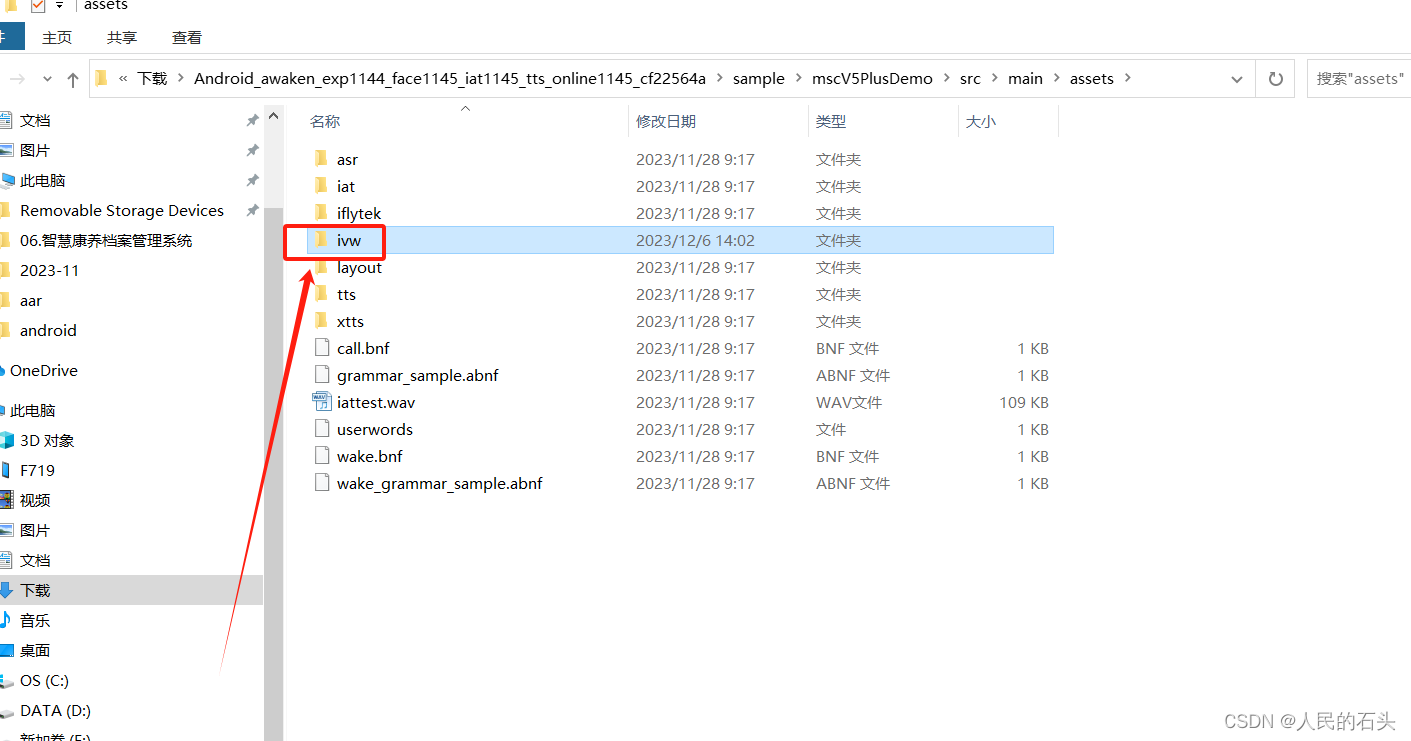

4、語音喚醒lib包全部復制到工程目錄下

5、把語音喚醒詞文件復制到工程的assets目錄

6、復制對應權限到AndroidManifest.xml中

7、喚醒工具類封裝

二、語音識別

1、工具類

2、使用

一、語音喚醒部分

1、首先在科大訊飛官網注冊開發者賬號

控制臺-訊飛開放平臺

2、配置喚醒詞然后下載sdk

3、選擇對應功能下載

4、語音喚醒lib包全部復制到工程目錄下

5、把語音喚醒詞文件復制到工程的assets目錄

6、復制對應權限到AndroidManifest.xml中

These entries go inside the `<manifest>` element. The `tools:ignore` attributes require the tools namespace on the root element (`xmlns:tools="http://schemas.android.com/tools"`). RECORD_AUDIO is a dangerous permission, so on Android 6.0 (API 23) and later it must also be granted at runtime; if it is denied, recognition fails, which can show up as error 10118 ("you didn't speak").

```xml
<uses-permission android:name="android.permission.INTERNET" />
<uses-permission android:name="android.permission.RECORD_AUDIO" />
<uses-permission android:name="android.permission.READ_PHONE_STATE" />
<!-- Other permissions the app needs -->
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
<!-- iFlytek -->
<uses-permission
    android:name="android.permission.MOUNT_UNMOUNT_FILESYSTEMS"
    tools:ignore="ProtectedPermissions" />
<uses-permission
    android:name="android.permission.READ_PRIVILEGED_PHONE_STATE"
    tools:ignore="ProtectedPermissions" />
<uses-permission
    android:name="android.permission.MANAGE_EXTERNAL_STORAGE"
    tools:ignore="ProtectedPermissions" />
<uses-permission
    android:name="android.permission.READ_PHONE_NUMBERS"
    tools:ignore="ProtectedPermissions" />
```

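7. Wrapping wakeup in a utility class

In the code below, IflytekAPP_id is a string resource holding the application ID (appid) from the iFlytek platform.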

```java
import android.content.Context;
import android.os.Bundle;
import android.util.Log;

import com.iflytek.cloud.SpeechConstant;
import com.iflytek.cloud.SpeechError;
import com.iflytek.cloud.SpeechUtility;
import com.iflytek.cloud.VoiceWakeuper;
import com.iflytek.cloud.WakeuperListener;
import com.iflytek.cloud.WakeuperResult;
import com.iflytek.cloud.util.ResourceUtil;
import com.iflytek.cloud.util.ResourceUtil.RESOURCE_TYPE;

import org.json.JSONException;
import org.json.JSONObject;

// AutoTouch, MyEventManager and R belong to the author's app, not to the iFlytek SDK
public abstract class WakeUpUtil {
    private static final String TAG = "WakeUpUtil";
    // App-specific helper that simulates a tap on the screen
    private static final AutoTouch autoTouch = new AutoTouch();
    // Application context (avoids leaking an Activity through a static field)
    private static Context mContext;
    // Wakeup threshold: the higher it is, the closer the match must be to wake.
    // Range [0, 3000], default 1450
    private static int curThresh = 1450;
    // Voice wakeup object
    private VoiceWakeuper mIvw;
    private String resultString = "";

    /** Called after the wakeup word is detected. */
    public abstract void wakeUp(String resultString);

    public WakeUpUtil(Context context) {
        mContext = context.getApplicationContext();
        initKedaXun(mContext);
        // Create the wakeup object
        mIvw = VoiceWakeuper.createWakeuper(mContext, null);
        Log.d(TAG, "Wakeup utility created");
    }

    /** Path of the wakeup-word resource in assets. */
    private static String getResource() {
        // "cf22564a" is the author's appid; the .jet file is named after your own appid
        return ResourceUtil.generateResourcePath(mContext, RESOURCE_TYPE.assets, "ivw/" + "cf22564a" + ".jet");
    }

    /** Starts listening for the wakeup word. */
    public void wake() {
        mIvw = VoiceWakeuper.getWakeuper();
        // Null check so an uninitialized wakeup object doesn't crash the app
        if (mIvw == null) {
            Log.d(TAG, "Wakeup not initialized (wake)");
            return;
        }
        // Clear parameters
        mIvw.setParameter(SpeechConstant.PARAMS, null);
        // Wakeup resource path
        mIvw.setParameter(SpeechConstant.IVW_RES_PATH, getResource());
        // Threshold per wakeup word in the resource, formatted "id:threshold;id:threshold"
        mIvw.setParameter(SpeechConstant.IVW_THRESHOLD, "0:" + curThresh);
        // Wakeup mode
        mIvw.setParameter(SpeechConstant.IVW_SST, "wakeup");
        // Keep listening continuously
        mIvw.setParameter(SpeechConstant.KEEP_ALIVE, "1");
        mIvw.startListening(mWakeuperListener);
        Log.d(TAG, "Listening for the wakeup word");
    }

    public void stopWake() {
        mIvw = VoiceWakeuper.getWakeuper();
        if (mIvw != null) {
            mIvw.stopListening();
        } else {
            Log.d(TAG, "Wakeup not initialized (stopWake)");
        }
    }

    private final WakeuperListener mWakeuperListener = new WakeuperListener() {
        @Override
        public void onResult(WakeuperResult result) {
            try {
                String text = result.getResultString();
                JSONObject object = new JSONObject(text);
                StringBuilder buffer = new StringBuilder();
                buffer.append("[RAW] ").append(text).append("\n")
                        .append("[Operation type] ").append(object.optString("sst")).append("\n")
                        .append("[Wakeup word id] ").append(object.optString("id")).append("\n")
                        .append("[Score] ").append(object.optString("score")).append("\n")
                        .append("[Begin of speech] ").append(object.optString("bos")).append("\n")
                        .append("[End of speech] ").append(object.optString("eos"));
                resultString = buffer.toString();
                stopWake();
                autoTouch.autoClickPos(0.1, 0.1);
                wakeUp(resultString);
            } catch (JSONException e) {
                MyEventManager.postMsg("Failed to parse result", "voicesWakeListener");
                resultString = "Failed to parse result";
                wakeUp(resultString);
                Log.e(TAG, "Failed to parse wakeup result", e);
            }
        }

        @Override
        public void onError(SpeechError error) {
            Log.e(TAG, "Wakeup error: " + error.getPlainDescription(true));
            MyEventManager.postMsg("Wakeup error", "voicesWakeListener");
        }

        @Override
        public void onBeginOfSpeech() {
            Log.d(TAG, "Wakeup onBeginOfSpeech");
        }

        @Override
        public void onEvent(int eventType, int isLast, int arg2, Bundle obj) {
        }

        @Override
        public void onVolumeChanged(int volume) {
        }
    };

    /** Initializes the iFlytek speech SDK; once is enough, ideally in Application.onCreate(). */
    public void initKedaXun(Context context) {
        // IflytekAPP_id is the appid we applied for on the iFlytek platform
        String param = "appid=" + context.getString(R.string.IflytekAPP_id)
                + "," + SpeechConstant.ENGINE_MODE + "=" + SpeechConstant.MODE_MSC;
        SpeechUtility.createUtility(context, param);
        Log.d(TAG, "iFlytek SDK initialized");
    }
}
```
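wake() passes a single threshold, `"0:" + curThresh`, i.e. wakeup word 0 at 1450. Per the comment in that method, a resource with several wakeup words takes `"id:threshold;id:threshold"`. A minimal plain-Java sketch for building that string, assuming you keep your thresholds in a map (IvwThreshold and build are hypothetical names, not part of the iFlytek SDK); it also rejects values outside the [0, 3000] range noted in the class:

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

public class IvwThreshold {
    // Range given in WakeUpUtil's comments
    static final int MIN_THRESHOLD = 0;
    static final int MAX_THRESHOLD = 3000;

    // Builds "id:threshold;id:threshold" from (wakeup word id -> threshold), in map iteration order
    static String build(Map<Integer, Integer> thresholds) {
        StringJoiner joiner = new StringJoiner(";");
        for (Map.Entry<Integer, Integer> e : thresholds.entrySet()) {
            int threshold = e.getValue();
            if (threshold < MIN_THRESHOLD || threshold > MAX_THRESHOLD) {
                throw new IllegalArgumentException(
                        "threshold for id " + e.getKey() + " out of range [0, 3000]: " + threshold);
            }
            joiner.add(e.getKey() + ":" + threshold);
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps insertion order, so ids appear in the order they were added
        Map<Integer, Integer> thresholds = new LinkedHashMap<>();
        thresholds.put(0, 1450);
        thresholds.put(1, 1600);
        System.out.println(build(thresholds)); // prints 0:1450;1:1600
    }
}
```

Inside wake() the result would then be passed as `mIvw.setParameter(SpeechConstant.IVW_THRESHOLD, IvwThreshold.build(thresholds));`.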

To use it, just instantiate it and call it directly:

```java
/** iFlytek voice wakeup object */
private WakeUpUtil wakeUpUtil;

private void voiceWake() {
    Log.d("initLogData", "Starting wakeup service");
    wakeUpUtil = new WakeUpUtil(this) {
        @Override
        public void wakeUp(String result) {
            MyEventManager.postMsg("Wakeup succeeded", "voicesWakeListener");
            Log.d("initLogData", "Wakeup succeeded: " + result);
            // WakeUpUtil stops listening after each hit, so start listening again
            wakeUpUtil.wake();
        }
    };
    wakeUpUtil.wake();
}
```

That completes the voice wakeup integration. Next comes speech recognition.

II. Speech Recognition

1. Utility class

```java
import android.content.Context;
import android.os.Bundle;
import android.util.Log;
import android.view.LayoutInflater;
import android.view.View;

import com.alibaba.fastjson.JSON;
import com.iflytek.cloud.ErrorCode;
import com.iflytek.cloud.InitListener;
import com.iflytek.cloud.RecognizerListener;
import com.iflytek.cloud.RecognizerResult;
import com.iflytek.cloud.SpeechConstant;
import com.iflytek.cloud.SpeechError;
import com.iflytek.cloud.SpeechEvent;
import com.iflytek.cloud.SpeechRecognizer;
import com.iflytek.cloud.SpeechUtility;

import org.json.JSONArray;
import org.json.JSONObject;
import org.json.JSONTokener;

import java.util.concurrent.atomic.AtomicReference;

/**
 * iFlytek speech recognition utility.
 * VoiceDialog, VoiceRecognizeResult, MyEventManager and R.layout.donghua_layout
 * belong to the author's app, not to the iFlytek SDK.
 */
public class KDVoiceRegUtils {
    private static final String TAG = "initLogData";
    // Status codes sent to listeners: 100 = recognition started, 200 = success, 300 = error
    private static final int CODE_START = 100;
    private static final int CODE_SUCCESS = 200;
    private static final int CODE_ERROR = 300;

    private static final AtomicReference<KDVoiceRegUtils> INSTANCE = new AtomicReference<>();

    private SpeechRecognizer mIat;
    private RecognizerListener mRecognizerListener;
    private InitListener mInitListener;
    // Text accumulated across the partial results of one session
    private final StringBuilder result = new StringBuilder();
    // Dialog showing the recording state and the recognized text
    private VoiceDialog boxDialog;

    private KDVoiceRegUtils() {
    }

    /** Lazy singleton; compareAndSet keeps it thread-safe without locking. */
    public static KDVoiceRegUtils getInstance() {
        for (; ; ) {
            KDVoiceRegUtils current = INSTANCE.get();
            if (current != null) {
                return current;
            }
            current = new KDVoiceRegUtils();
            if (INSTANCE.compareAndSet(null, current)) {
                return current;
            }
            // Another thread set the instance first; loop and return that one
        }
    }

    /** Creates the recognizer and its listeners (only once). */
    public void initVoiceRecorgnise(Context ct) {
        if (mIat != null) {
            return;
        }
        mInitListener = new InitListener() {
            @Override
            public void onInit(int code) {
                Log.d(TAG, "SpeechRecognizer init code = " + code);
                if (code != ErrorCode.SUCCESS) {
                    // Look up the code at https://www.xfyun.cn/document/error-code
                    Log.e(TAG, "Recognizer initialization failed, error code: " + code);
                }
            }
        };
        mRecognizerListener = new RecognizerListener() {
            @Override
            public void onBeginOfSpeech() {
                // The SDK's internal recorder is ready; the user can start speaking
                Log.d(TAG, "Speech started");
            }

            @Override
            public void onError(SpeechError error) {
                // Error 10118 ("you didn't speak") may mean the recording permission is denied;
                // prompt the user to enable it
                Log.d(TAG, "Recognition error: " + error.getPlainDescription(true));
                senVoicesMsg(CODE_ERROR, "Recognition error");
                mIat.stopListening();
                hideDialog();
            }

            @Override
            public void onEndOfSpeech() {
                // End point detected: recognition is running and no more audio is accepted
                mIat.stopListening();
                hideDialog();
            }

            @Override
            public void onResult(RecognizerResult results, boolean isLast) {
                String text = parseIatResult(results.getResultString());
                result.append(text);
                if (!text.trim().isEmpty() && boxDialog != null) {
                    senVoicesMsg(CODE_SUCCESS, "Recognition succeeded");
                    boxDialog.showTxtContent(result.toString());
                    senVoicesMsg(CODE_SUCCESS, result.toString());
                }
                if (isLast) {
                    // Session finished: reset for the next one
                    result.setLength(0);
                }
            }

            @Override
            public void onVolumeChanged(int volume, byte[] data) {
                if (volume > 0 && boxDialog != null) {
                    boxDialog.showTxtContent("Recording...");
                }
            }

            @Override
            public void onEvent(int eventType, int arg1, int arg2, Bundle obj) {
                // Cloud session id: give it to technical support to look up session logs when
                // something fails. It is null when using local capabilities
                if (SpeechEvent.EVENT_SESSION_ID == eventType) {
                    String sid = obj.getString(SpeechEvent.KEY_EVENT_SESSION_ID);
                    Log.d(TAG, "Session id: " + sid);
                }
            }
        };
        // Recognizer without built-in UI, so the callbacks can drive a custom dialog
        mIat = SpeechRecognizer.createRecognizer(ct, mInitListener);
        if (mIat != null) {
            setIatParam();
        }
    }

    /** Starts recognition. A Dialog generally needs an Activity context, not the application context. */
    public void startVoice(Context context) {
        senVoicesMsg(CODE_START, "Speech recognition started");
        if (mIat != null) {
            // Drop leftovers from a session that ended with an error
            result.setLength(0);
            showDialog(context);
            mIat.startListening(mRecognizerListener);
        }
    }

    /** Recognition parameters. */
    private void setIatParam() {
        // Clear parameters
        mIat.setParameter(SpeechConstant.PARAMS, null);
        // Cloud dictation engine
        mIat.setParameter(SpeechConstant.ENGINE_TYPE, SpeechConstant.TYPE_CLOUD);
        // Result format
        mIat.setParameter(SpeechConstant.RESULT_TYPE, "json");
        // Language and accent
        mIat.setParameter(SpeechConstant.LANGUAGE, "zh_cn");
        mIat.setParameter(SpeechConstant.ACCENT, "mandarin");
        // Front end point (ms): how long the user may stay silent before it counts as a timeout
        mIat.setParameter(SpeechConstant.VAD_BOS, "4000");
        // Back end point (ms): silence after speech that ends input and stops recording automatically
        mIat.setParameter(SpeechConstant.VAD_EOS, "500");
        // Punctuation: "0" returns results without punctuation, "1" with punctuation
        mIat.setParameter(SpeechConstant.ASR_PTT, "0");
        // To save the audio (pcm or wav), uncomment below; an SD card path needs WRITE_EXTERNAL_STORAGE.
        // Note: AUDIO_FORMAT only takes effect with an updated version of 語記
        // mIat.setParameter(SpeechConstant.AUDIO_FORMAT, "wav");
        // mIat.setParameter(SpeechConstant.ASR_AUDIO_PATH, Environment.getExternalStorageDirectory() + "/MyApplication/" + filename + ".wav");
        Log.d(TAG, "Recognition parameters set");
    }

    /** Concatenates the first candidate (cw[0].w) of every word in "ws". */
    public static String parseIatResult(String json) {
        StringBuilder ret = new StringBuilder();
        try {
            JSONObject joResult = new JSONObject(new JSONTokener(json));
            JSONArray words = joResult.getJSONArray("ws");
            for (int i = 0; i < words.length(); i++) {
                // Use the first candidate for each word
                JSONArray items = words.getJSONObject(i).getJSONArray("cw");
                JSONObject obj = items.getJSONObject(0);
                ret.append(obj.getString("w"));
            }
        } catch (Exception e) {
            Log.e(TAG, "Failed to parse recognition result", e);
        }
        return ret.toString();
    }

    private void showDialog(Context context) {
        View inflate = LayoutInflater.from(context).inflate(R.layout.donghua_layout, null, false);
        boxDialog = new VoiceDialog(context, inflate, VoiceDialog.LocationView.BOTTOM);
        boxDialog.show();
    }

    private void hideDialog() {
        if (boxDialog != null) {
            boxDialog.dismiss();
        }
    }

    /** Posts a VoiceRecognizeResult as JSON on the "VoiceRecognizeResult" channel. */
    private void senVoicesMsg(int code, String conn) {
        VoiceRecognizeResult voiceRecognizeResult = new VoiceRecognizeResult();
        voiceRecognizeResult.setCode(code);
        voiceRecognizeResult.setMsg("" + conn);
        MyEventManager.postMsg(JSON.toJSONString(voiceRecognizeResult), "VoiceRecognizeResult");
    }

    /** Initializes the iFlytek speech SDK; once is enough, ideally in Application.onCreate(). */
    public void initKedaXun(Context context) {
        // IflytekAPP_id is the appid we applied for on the iFlytek platform
        String param = "appid=" + context.getString(R.string.IflytekAPP_id)
                + "," + SpeechConstant.ENGINE_MODE + "=" + SpeechConstant.MODE_MSC;
        SpeechUtility.createUtility(context, param);
        Log.d(TAG, "iFlytek SDK initialized");
    }
}
```
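parseIatResult() keeps only the first candidate (`cw[0].w`) of each word in the `ws` array, and onResult() appends each partial result until `isLast` arrives, then resets. org.json ships with Android but not with the standard JDK, so the sketch below models an already-parsed result as a list of candidate lists (a simplification; IatAccumulator and its methods are hypothetical names) so the selection and accumulation logic can be run on a plain JVM:

```java
import java.util.List;

public class IatAccumulator {
    // Text collected so far in the current session
    private final StringBuilder result = new StringBuilder();

    // Each inner list holds the candidate spellings for one word, best first (ws[i].cw[*].w)
    static String firstCandidates(List<List<String>> words) {
        StringBuilder sb = new StringBuilder();
        for (List<String> candidates : words) {
            if (!candidates.isEmpty()) {
                sb.append(candidates.get(0));
            }
        }
        return sb.toString();
    }

    // Appends one partial result, returns the text so far, and resets when isLast is true
    String onResult(List<List<String>> words, boolean isLast) {
        result.append(firstCandidates(words));
        String soFar = result.toString();
        if (isLast) {
            result.setLength(0);
        }
        return soFar;
    }

    public static void main(String[] args) {
        IatAccumulator acc = new IatAccumulator();
        System.out.println(acc.onResult(List.of(List.of("good", "gold"), List.of(" morning")), false)); // good morning
        System.out.println(acc.onResult(List.of(List.of(" everyone")), true)); // good morning everyone
        System.out.println(acc.onResult(List.of(List.of("hi")), true)); // hi
    }
}
```

One difference from the Android code: parseIatResult() stops at the first word with no candidates (getJSONObject(0) throws and the catch returns what was parsed so far), while this sketch skips such words.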

2. Usage

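```java
// SDK initialization (only needed once)
KDVoiceRegUtils.getInstance().initKedaXun(mUniSDKInstance.getContext());
// Speech recognition initialization
KDVoiceRegUtils.getInstance().initVoiceRecorgnise(mUniSDKInstance.getContext());
KDVoiceRegUtils.getInstance().startVoice(mUniSDKInstance.getContext());
```

Note: the code could still be optimized. Because of our company's business needs it isn't wrapped very thoroughly, so feel free to build further abstractions on top of it.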
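The usage code calls KDVoiceRegUtils.getInstance() three times and relies on getting the same object each time. Below is the same CAS-based lazy singleton with the SDK parts stripped out (CasSingleton and distinctInstances are illustrative names), plus a small check that many threads racing on the first call still end up sharing one instance:

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

public class CasSingleton {
    private static final AtomicReference<CasSingleton> INSTANCE = new AtomicReference<>();

    private CasSingleton() {
    }

    // Same loop as KDVoiceRegUtils.getInstance()
    public static CasSingleton getInstance() {
        for (; ; ) {
            CasSingleton current = INSTANCE.get();
            if (current != null) {
                return current;
            }
            current = new CasSingleton();
            // Only one compareAndSet(null, ...) can succeed; the losers drop their object and loop
            if (INSTANCE.compareAndSet(null, current)) {
                return current;
            }
        }
    }

    // Calls getInstance() from threads released at the same moment and counts distinct results
    static int distinctInstances(int threads) {
        // No equals/hashCode override, so the set compares by identity
        Set<CasSingleton> seen = ConcurrentHashMap.newKeySet();
        CountDownLatch start = new CountDownLatch(1);
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < threads; i++) {
            pool.execute(() -> {
                try {
                    start.await();
                    seen.add(getInstance());
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        start.countDown();
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen.size();
    }

    public static void main(String[] args) {
        System.out.println(distinctInstances(16)); // prints 1
    }
}
```

Under contention, losing threads construct an object that is immediately discarded; that is harmless here because the constructor is empty. If construction ever becomes expensive, the initialization-on-demand holder idiom (a private static nested class holding a `static final` instance) gives the same guarantee without the retry loop.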
