Monitoring Kubernetes Cluster Resources with Prometheus

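- Part 1: Monitoring Pod resource usage with cAdvisor
- 1.1 Authorizing external access for Prometheus
- 1.2 Obtaining and saving the token
- 1.3 Configuring prometheus.yml, starting Prometheus, and checking its status
- 1.4 Importing the Grafana dashboard
- Part 2: Monitoring Kubernetes resource state with kube-state-metrics
- 2.1 Deploying kube-state-metrics
- 2.2 Configuring prometheus.yml
- 2.3 Importing the Grafana dashboard
- 2.4 No data in Grafana: adding a route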

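This walkthrough uses a Prometheus instance installed from the binary release, running outside the cluster, to monitor a Kubernetes cluster.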

| Metric category | Collected by | Examples |
|---|---|---|
| Pod resource utilization | cAdvisor | Container CPU and memory utilization |
| Kubernetes resource state | kube-state-metrics | Controllers, Node, Namespace, Pod, ReplicaSet, Service, etc. |

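Part 1: Monitoring Pod resource usage with cAdvisor

1.1 Authorizing external access for Prometheus

Because Prometheus runs outside the cluster, it needs a ServiceAccount whose token allows it to read the relevant API resources.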

Save the following as rbac.yaml:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: kube-system
    ---
    # rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 is used here
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      - services
      - endpoints
      - pods
      - nodes/proxy
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      resources:
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - configmaps
      - nodes/metrics
      verbs:
      - get
    - nonResourceURLs:
      - /metrics
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: kube-system

Apply it:

    kubectl apply -f rbac.yaml
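Once the RBAC objects are applied, it can help to confirm that the ServiceAccount really has the permissions the scrape jobs depend on. The sketch below is one way to do that with `kubectl auth can-i` and impersonation; the function names `sa_subject` and `check_prometheus_rbac` are made up for this example, and the checks assume your own kubectl user is allowed to impersonate ServiceAccounts.

```shell
# Build the user name that Kubernetes assigns to a ServiceAccount.
# Arguments: namespace, ServiceAccount name.
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the ServiceAccount may perform the requests
# used later by the scrape jobs. Needs kubectl and access to the cluster.
# Each call prints "yes" or "no".
check_prometheus_rbac() {
  subject=$(sa_subject kube-system prometheus)
  kubectl auth can-i list nodes --as="$subject"
  kubectl auth can-i get nodes --subresource=proxy --as="$subject"
  kubectl auth can-i get /metrics --as="$subject"
}
```

Running `check_prometheus_rbac` on a machine with cluster access should print `yes` three times if the ClusterRole and ClusterRoleBinding are in effect.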

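1.2 Obtaining and saving the token

    # Find the name of the token secret
    kubectl get secrets -n kube-system | grep prometheus
    # Here the secret is named prometheus-token-vgxhc
    kubectl describe secret prometheus-token-vgxhc -n kube-system > token.k8s
    # Alternative: kubectl get secrets -n kube-system -o yaml prometheus-token-vgxhc | grep token
    # Copy the file into the Prometheus directory on the Prometheus server (host "prometheus")
    scp token.k8s prometheus:/opt/monitor/prometheus/

`bearer_token_file` expects a file that holds only the token string, so trim token.k8s down to the value of the `token:` field before using it. The value shown by `-o yaml` is base64-encoded and would have to be decoded first. In this setup the token is stored at /opt/monitor/prometheus/token.k8s.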
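Since the output of `kubectl describe secret` contains more than the token, a small filter avoids editing the file by hand. This is a minimal sketch, assuming the usual `token:      <value>` line in the describe output; `extract_token` is a name chosen for this example.

```shell
# Print only the token value from `kubectl describe secret` output on stdin.
# Lines such as "ca.crt:" and "namespace:" are ignored.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Usage on a machine with cluster access (not executed here):
#   kubectl describe secret prometheus-token-vgxhc -n kube-system | extract_token > token.k8s
```

An equivalent route is `kubectl get secret prometheus-token-vgxhc -n kube-system -o jsonpath='{.data.token}' | base64 -d`, which decodes the value stored in the secret.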

1.3 Configuring prometheus.yml, starting Prometheus, and checking its status

Edit prometheus.yml and add the following job under `scrape_configs`:

    - job_name: kubernetes-nodes-cadvisor
      metrics_path: /metrics
      scheme: https
      kubernetes_sd_configs:
      - role: node
        api_server: https://172.18.0.0:6443
        # Credentials used for service discovery against the API server
        bearer_token_file: /opt/monitor/prometheus/token.k8s
        tls_config:
          insecure_skip_verify: true
      # Credentials used for the scrape requests themselves
      bearer_token_file: /opt/monitor/prometheus/token.k8s
      tls_config:
        insecure_skip_verify: true
      relabel_configs:
      # Copy each node label (.*) to a label of the same name; values are unchanged
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.*)
      # Rewrite NodeIP:10250 to APIServerIP:6443
      - action: replace
        regex: (.*)
        source_labels: ["__address__"]
        target_label: __address__
        replacement: 172.18.0.0:6443
      # The real endpoint is https://NodeIP:10250/metrics/cadvisor, which only the
      # API server can reach, so the path is relabeled to go through the API server proxy
      - action: replace
        source_labels: [__meta_kubernetes_node_name]
        target_label: __metrics_path__
        regex: (.*)
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

Validate the configuration:

    ./promtool check config prometheus.yml

Then restart Prometheus, or reload it with `kill -HUP <Prometheus PID>`.
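To see what the last relabel rule produces, and to test the proxied endpoint before Prometheus scrapes it, something like the following can be used. It is a sketch only: `cadvisor_proxy_path` and `fetch_cadvisor` are names invented here, and the node name passed in is whatever `kubectl get nodes` reports in your cluster.

```shell
# Mirror the relabel rule: regex (.*) captures the node name and the
# replacement inserts it into the API server proxy path.
cadvisor_proxy_path() {
  printf '/api/v1/nodes/%s/proxy/metrics/cadvisor\n' "$1"
}

# Fetch cAdvisor metrics for one node through the API server.
# Arguments: API server address (host:port), node name, token file.
# -k skips certificate verification, matching insecure_skip_verify above.
fetch_cadvisor() {
  curl -sk -H "Authorization: Bearer $(cat "$3")" \
    "https://$1$(cadvisor_proxy_path "$2")"
}
```

For example, `fetch_cadvisor 172.18.0.0:6443 <node-name> /opt/monitor/prometheus/token.k8s | head` should print metric lines if the token and RBAC rules are correct.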

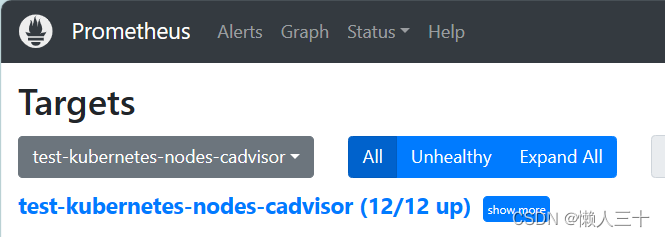

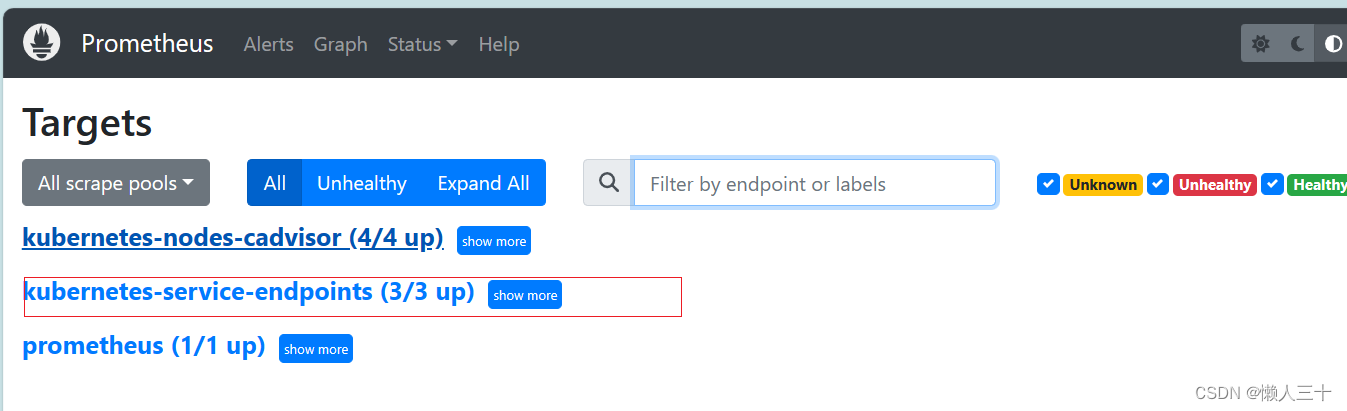

Check the result on the Prometheus Targets page:

http://172.18.0.0:9090

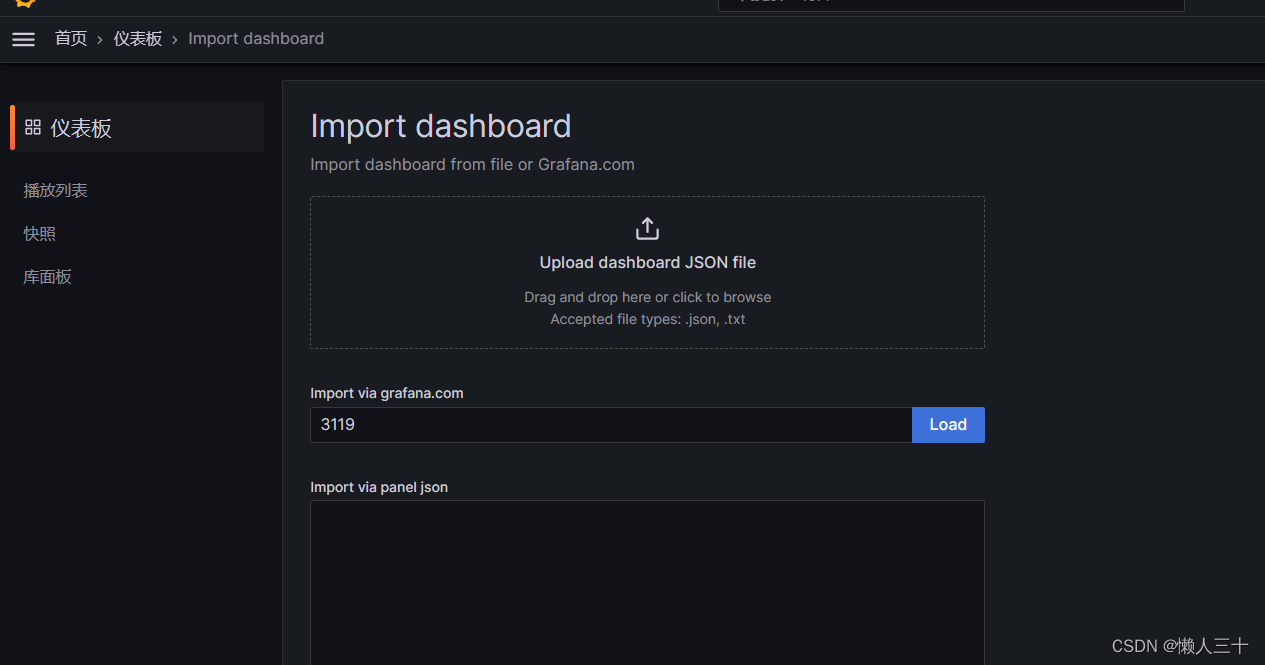
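The same check can be done from the command line through the Prometheus HTTP API endpoint `/api/v1/targets`. The helper below is a rough sketch that counts targets by health using plain text matching on the compact JSON response; `count_targets_by_health` is a name chosen for this example, and a real JSON parser would be more robust.

```shell
# Count targets with the given health ("up" or "down") in the JSON
# returned by /api/v1/targets, read from stdin.
count_targets_by_health() {
  grep -o "\"health\":\"$1\"" | wc -l | tr -d ' '
}

# Usage against the Prometheus server from this article (not executed here):
#   curl -s http://172.18.0.0:9090/api/v1/targets | count_targets_by_health up
```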

1.4 Importing the Grafana dashboard

In Grafana, import dashboard ID 3119.

Pod resource monitoring is now in place.


Part 2: Monitoring Kubernetes resource state with kube-state-metrics

2.1 Deploying kube-state-metrics

Save the following as kube-state-metrics.yaml:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: kube-state-metrics
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
    - apiGroups: [""]
      resources:
      - configmaps
      - secrets
      - nodes
      - pods
      - services
      - resourcequotas
      - replicationcontrollers
      - limitranges
      - persistentvolumeclaims
      - persistentvolumes
      - namespaces
      - endpoints
      verbs: ["list", "watch"]
    - apiGroups: ["apps"]
      resources:
      - statefulsets
      - daemonsets
      - deployments
      - replicasets
      verbs: ["list", "watch"]
    - apiGroups: ["batch"]
      resources:
      - cronjobs
      - jobs
      verbs: ["list", "watch"]
    - apiGroups: ["autoscaling"]
      resources:
      - horizontalpodautoscalers
      verbs: ["list", "watch"]
    - apiGroups: ["networking.k8s.io", "extensions"]
      resources:
      - ingresses
      verbs: ["list", "watch"]
    - apiGroups: ["storage.k8s.io"]
      resources:
      - storageclasses
      verbs: ["list", "watch"]
    - apiGroups: ["certificates.k8s.io"]
      resources:
      - certificatesigningrequests
      verbs: ["list", "watch"]
    - apiGroups: ["policy"]
      resources:
      - poddisruptionbudgets
      verbs: ["list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: kube-state-metrics-resizer
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
    - apiGroups: [""]
      resources:
      - pods
      verbs: ["get"]
    - apiGroups: ["extensions", "apps"]
      resources:
      - deployments
      resourceNames: ["kube-state-metrics"]
      verbs: ["get", "update"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kube-state-metrics
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kube-state-metrics
    subjects:
    - kind: ServiceAccount
      name: kube-state-metrics
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kube-state-metrics-resizer
    subjects:
    - kind: ServiceAccount
      name: kube-state-metrics
      namespace: kube-system
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        k8s-app: kube-state-metrics
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v1.3.0
    spec:
      selector:
        matchLabels:
          k8s-app: kube-state-metrics
          version: v1.3.0
      replicas: 1
      template:
        metadata:
          labels:
            k8s-app: kube-state-metrics
            version: v1.3.0
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: kube-state-metrics
          containers:
          - name: kube-state-metrics
            # The version label above says v1.3.0, but the image deployed is v1.8.0
            image: harbor.cpit.com.cn/monitor/kube-state-metrics:v1.8.0
            ports:
            - name: http-metrics
              containerPort: 8080
            - name: telemetry
              containerPort: 8081
            readinessProbe:
              httpGet:
                path: /healthz
                port: 8080
              initialDelaySeconds: 5
              timeoutSeconds: 5
          - name: addon-resizer
            image: harbor.cpit.com.cn/monitor/addon-resizer:1.8.6
            resources:
              limits:
                cpu: 1000m
                memory: 500Mi
              requests:
                cpu: 1000m
                memory: 500Mi
            env:
            - name: MY_POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: MY_POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            command:
            - /pod_nanny
            - --config-dir=/etc/config
            - --container=kube-state-metrics
            - --cpu=100m
            - --extra-cpu=1m
            - --memory=100Mi
            - --extra-memory=2Mi
            - --threshold=5
            - --deployment=kube-state-metrics
          volumes:
          - name: config-volume
            configMap:
              name: kube-state-metrics-config
    ---
    # Config map for resource configuration.
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: kube-state-metrics-config
      namespace: kube-system
      labels:
        k8s-app: kube-state-metrics
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    data:
      NannyConfiguration: |-
        apiVersion: nannyconfig/v1alpha1
        kind: NannyConfiguration
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-state-metrics
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "kube-state-metrics"
      annotations:
        prometheus.io/scrape: 'true'
    spec:
      ports:
      - name: http-metrics
        port: 8080
        targetPort: http-metrics
        protocol: TCP
      - name: telemetry
        port: 8081
        targetPort: telemetry
        protocol: TCP
      selector:
        k8s-app: kube-state-metrics

Deploy it:

    kubectl apply -f kube-state-metrics.yaml

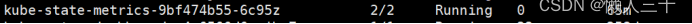

Check the pods:

    kubectl get pods -n kube-system

The kube-state-metrics pod should be in the Running state.
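The "is it running" check above can be scripted, for instance in a deployment pipeline. The following is a small sketch that reads the tabular output of `kubectl get pods` and succeeds only if every pod with a given name prefix is Running with all containers ready; `pods_ready` is a name made up for this example, and the default column layout (NAME, READY, STATUS) is assumed.

```shell
# Read `kubectl get pods` output on stdin. Exit 0 only if at least one pod
# whose name starts with the given prefix exists, and every such pod is
# Running with READY showing n/n.
pods_ready() {
  awk -v prefix="$1" '
    index($1, prefix) == 1 {
      seen = 1
      split($2, ready, "/")
      if ($3 != "Running" || ready[1] != ready[2]) bad = 1
    }
    END { exit ((seen && !bad) ? 0 : 1) }
  '
}

# Usage (not executed here):
#   kubectl get pods -n kube-system | pods_ready kube-state-metrics && echo ready
```

With the manifest above the pod has two containers (kube-state-metrics and addon-resizer), so a healthy pod shows `2/2` in the READY column.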

2.2 Configuring prometheus.yml

Add the following job under `scrape_configs`:

    - job_name: kubernetes-service-endpoints
      kubernetes_sd_configs:
      - role: endpoints
        api_server: https://192.168.0.0:6443
        bearer_token_file: /opt/monitor/prometheus/token.k8s
        tls_config:
          insecure_skip_verify: true
      bearer_token_file: /opt/monitor/prometheus/token.k8s
      tls_config:
        insecure_skip_verify: true
      relabel_configs:
      # Do not scrape Services that lack the prometheus.io/scrape annotation
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scrape
      # Set the scrape scheme from the prometheus.io/scheme annotation
      - action: replace
        regex: (https?)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scheme
        target_label: __scheme__
      # Set the metrics URL path from the prometheus.io/path annotation
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_path
        target_label: __metrics_path__
      # Set the target address using the prometheus.io/port annotation
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_service_annotation_prometheus_io_port
        target_label: __address__
      # Copy each Service label (.+) to a label of the same name; values are unchanged
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      # Add a namespace label
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      # Add a Service name label
      - action: replace
        source_labels:
        - __meta_kubernetes_service_name
        target_label: kubernetes_service_name

Validate the configuration:

    ./promtool check config prometheus.yml

Then restart Prometheus, or reload it with `kill -HUP <Prometheus PID>`.
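The address rewrite rule is the least obvious one in this job. Prometheus joins the two source labels with `;`, matches the result against the fully anchored regex, and leaves `__address__` untouched when there is no match. The sketch below imitates that behaviour with `grep -E` and `sed -E`; `rewrite_address` is a hypothetical helper, and the regex is translated to POSIX extended syntax because `sed` does not support `(?:...)` or `\d`.

```shell
# Imitate the __address__ relabel rule.
# Arguments: discovered address, value of the prometheus.io/port annotation
# (may be empty when the annotation is absent).
rewrite_address() {
  joined="$1;$2"
  if printf '%s\n' "$joined" | grep -Eq '^[^:]+(:[0-9]+)?;[0-9]+$'; then
    # Keep the host, drop any discovered port, append the annotated port
    printf '%s\n' "$joined" | sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/'
  else
    # No match: the label keeps its original value
    printf '%s\n' "$1"
  fi
}
```

The Service in section 2.1 only carries the `prometheus.io/scrape` annotation, so in this setup the rule does not match and each endpoint is scraped on the port that service discovery reports.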

Check the result on the Prometheus Targets page:

http://172.18.0.0:9090

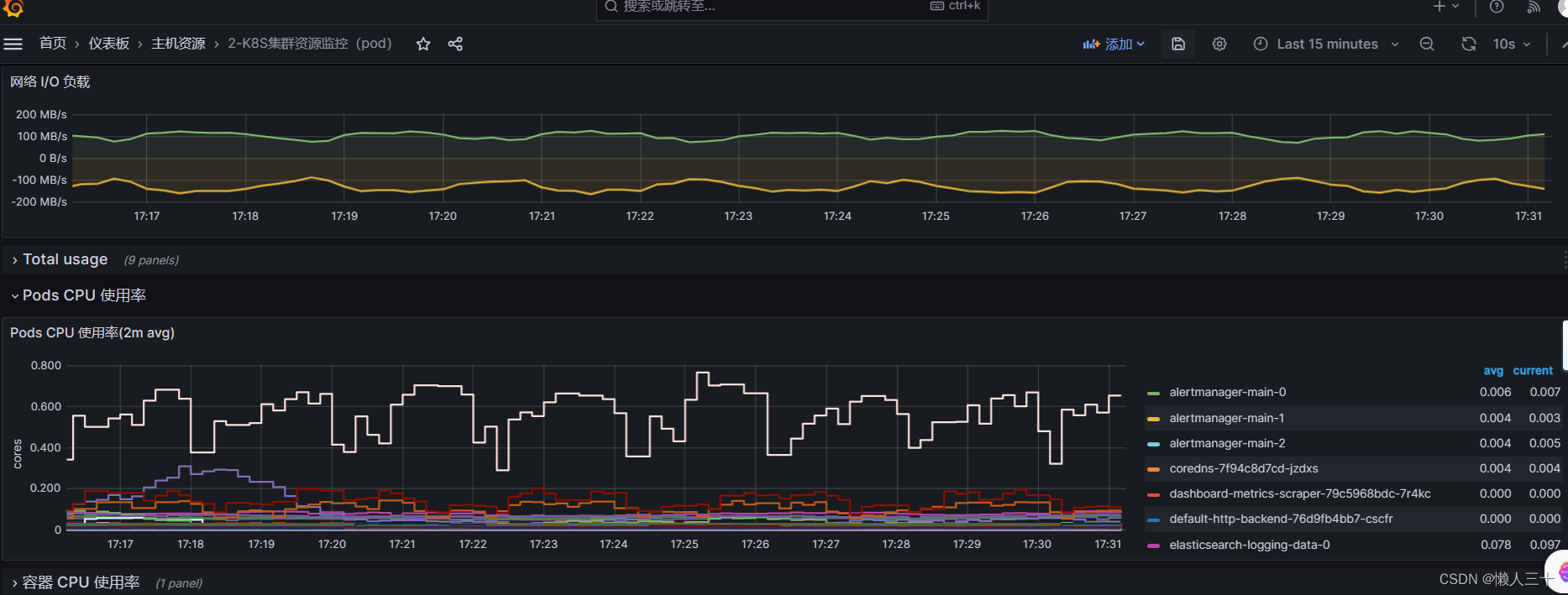

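2.3 Importing the Grafana dashboard

In Grafana, import dashboard ID 6417, which covers Kubernetes cluster resource objects.

Monitoring of the cluster's resource objects is now in place.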

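2.4 No data in Grafana: adding a route

If the dashboard stays empty, the Prometheus server probably cannot reach the pod network. Add a route to the pod subnet via a cluster node:

    ip route
    ip route add 172.40.0.0/16 via 172.18.2.30 dev eth0
    ip route
    # 172.40.1.208: cluster-internal IP of the kube-state-metrics pod
    # 172.18.2.30: IP of the k8s master node

Then check the Grafana dashboard again.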
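The route only helps if the pod IP actually falls inside the subnet it covers. As a quick sanity check, the sketch below tests whether an IPv4 address belongs to a CIDR block using shell arithmetic; `ip_to_int` and `ip_in_cidr` are names invented for this example, and 64-bit shell arithmetic is assumed.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if the address ($1) lies inside the CIDR block ($2).
ip_in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

Here `ip_in_cidr 172.40.1.208 172.40.0.0/16` succeeds, so the route added above covers the kube-state-metrics pod. On the Prometheus server, `ip route get 172.40.1.208` shows which next hop the kernel will actually use.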
