Overfitting: the model generalizes poorly to new data.

Ways to fix underfitting:

- Add more input features
- Increase the number of network parameters
- Reduce the regularization parameter

Ways to fix overfitting:

- Clean the data
- Enlarge the training set
- Apply regularization
- Increase the regularization parameter

Mitigating overfitting with regularization

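Regularization adds a model-complexity term to the loss function. It puts a weighted penalty on the parameters w, which weakens the influence of noise in the dataset:

`loss = loss(y, y_) + REGULARIZER * loss(w)`

Here `loss(y, y_)` is the ordinary loss of the model, such as cross-entropy or mean squared error. The hyperparameter REGULARIZER sets the share of the w-term in the total loss, i.e. the regularization weight, and w denotes the parameters to be regularized.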
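As a worked example of the formula above, the sketch below computes the total loss with NumPy, using mean squared error for `loss(y, y_)` and the same definition as `tf.nn.l2_loss` (`sum(w ** 2) / 2`) for `loss(w)`. The helper name `regularized_loss` and all of the numbers are hypothetical, chosen only to make the arithmetic easy to follow.

```python
import numpy as np

def regularized_loss(y_true, y_pred, weights, regularizer=0.03):
    """Hypothetical helper: MSE + regularizer * sum of L2 penalties of the weights."""
    loss_mse = np.mean(np.square(y_true - y_pred))
    # Same definition as tf.nn.l2_loss: sum(w ** 2) / 2 for each weight matrix
    loss_w = sum(np.sum(np.square(w)) / 2 for w in weights)
    return loss_mse + regularizer * loss_w

# Toy labels, predictions and weights (illustrative values only)
y_true = np.array([1.0, 0.0, 1.0, 0.0])
y_pred = np.array([0.9, 0.2, 0.6, 0.1])
w1 = np.array([[1.0, -2.0], [0.5, 0.0]])
w2 = np.array([[3.0], [-1.0]])

print(regularized_loss(y_true, y_pred, [w1, w2]))  # ≈ 0.28375 (0.055 MSE + 0.03 * 7.625)
```

Setting `regularizer=0.0` recovers the plain MSE of 0.055; raising it makes large weights cost more, which is why increasing the regularization parameter counters overfitting and decreasing it counters underfitting.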

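Choosing a regularization method

L1 regularization (`loss(w) = Σ|w_i|`) is very likely to drive many parameters to exactly 0, so it reduces model complexity by making the parameters sparse, which effectively reduces the number of parameters.

L2 regularization (`loss(w) = Σ w_i²`) drives parameters close to 0 but not exactly 0, so it reduces complexity by shrinking the magnitude of the parameters.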
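The difference between the two penalties can be seen on a one-dimensional toy problem. Assume (for illustration only; this is not the training setup of the script below) that each weight minimizes `0.5 * (w - a)**2` plus a penalty, where `a` is the weight's unregularized optimum. With an L1 penalty `lam * |w|` the minimizer is soft thresholding, which sets small weights exactly to 0; with an L2 penalty `lam * w**2 / 2` every weight is divided by `1 + lam`, so it shrinks but stays nonzero. The function names and values are hypothetical.

```python
import numpy as np

def l1_solution(a, lam):
    """argmin_w 0.5*(w - a)**2 + lam*|w|: soft thresholding."""
    return np.sign(a) * np.maximum(np.abs(a) - lam, 0.0)

def l2_solution(a, lam):
    """argmin_w 0.5*(w - a)**2 + lam*w**2/2: uniform shrinkage toward 0."""
    return a / (1.0 + lam)

a = np.array([2.0, 0.8, 0.3, -0.1, -1.5])  # unregularized optimum of each weight
lam = 0.5                                   # regularization strength

print("L1:", l1_solution(a, lam))  # weights with |a| <= lam become exactly 0
print("L2:", l2_solution(a, lam))  # every weight shrinks, none becomes 0
```

Here L1 keeps only 3 of the 5 weights, while L2 keeps all 5 at two thirds of their original size.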

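In TensorFlow, the L2 term is added to the loss inside the `GradientTape`:

```python
with tf.GradientTape() as tape:
    h1 = tf.matmul(x_train, w1) + b1
    h1 = tf.nn.relu(h1)
    y = tf.matmul(h1, w2) + b2
    loss_mse = tf.reduce_mean(tf.square(y_train - y))
    loss_regularization = []
    loss_regularization.append(tf.nn.l2_loss(w1))
    loss_regularization.append(tf.nn.l2_loss(w2))
    loss_regularization = tf.reduce_sum(loss_regularization)
    loss = loss_mse + 0.03 * loss_regularization

variables = [w1, b1, w2, b2]
grads = tape.gradient(loss, variables)
```

To visualize the result, generate a grid of points covering the data and predict a value for every grid coordinate. Connecting the points whose prediction equals 0.5 gives a line, and that line is the boundary between the red and blue points.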
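The grid prediction step can be checked in isolation. The sketch below uses NumPy and hypothetical random weights standing in for the trained `w1, b1, w2, b2` (same shapes as in the script), and a hypothetical `forward` helper for the two-layer network. It shows that feeding the grid point by point, as the script does, gives the same `probs` as one batched matrix multiplication over all grid points.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical stand-ins for the trained parameters (shapes match the script)
w1 = rng.normal(size=(2, 11)).astype(np.float32)
b1 = np.full(11, 0.01, dtype=np.float32)
w2 = rng.normal(size=(11, 1)).astype(np.float32)
b2 = np.full(1, 0.01, dtype=np.float32)

def forward(x):
    """Two-layer network: relu(x @ w1 + b1) @ w2 + b2."""
    h1 = np.maximum(x @ w1 + b1, 0.0)
    return h1 @ w2 + b2

# Grid covering [-3, 3) x [-3, 3) with step 0.1, flattened into (x1, x2) pairs
xx, yy = np.mgrid[-3:3:.1, -3:3:.1]
grid = np.c_[xx.ravel(), yy.ravel()].astype(np.float32)

# Point-by-point prediction, as in the script's loop
probs_loop = np.array([forward(p[None, :]) for p in grid]).reshape(xx.shape)
# A single batched forward pass over every grid point
probs_batch = forward(grid).reshape(xx.shape)

# Grid cells predicted >= 0.5 lie on the "red" (label 1) side of the boundary
red_side = probs_batch >= 0.5
```

Either array can be passed to `plt.contour(xx, yy, probs, levels=[.5])`; the batched version avoids thousands of tiny matrix multiplications.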

```python
# Read the red/blue points and draw the decision boundary (with L2 regularization)

# Import required modules
import tensorflow as tf
from matplotlib import pyplot as plt
import numpy as np
import pandas as pd

# Read the data/labels and build x_train, y_train
df = pd.read_csv('dot.csv')
x_data = np.array(df[['x1', 'x2']])
y_data = np.array(df['y_c'])

x_train = x_data
y_train = y_data.reshape(-1, 1)

Y_c = [['red' if y else 'blue'] for y in y_train]

# Cast to float32; otherwise the matrix multiplications below fail with a dtype mismatch
x_train = tf.cast(x_train, tf.float32)
y_train = tf.cast(y_train, tf.float32)

# from_tensor_slices splits along the first dimension, pairing each feature row with its label
train_db = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(32)

# Network parameters: 2 input features, one hidden layer of 11 neurons, 1 output neuron
# tf.Variable() marks the parameters as trainable
w1 = tf.Variable(tf.random.normal([2, 11]), dtype=tf.float32)
b1 = tf.Variable(tf.constant(0.01, shape=[11]))
w2 = tf.Variable(tf.random.normal([11, 1]), dtype=tf.float32)
b2 = tf.Variable(tf.constant(0.01, shape=[1]))

lr = 0.005          # learning rate
epochs = 800        # number of training epochs
REGULARIZER = 0.03  # regularization weight

# Training
for epoch in range(epochs):
    for step, (x_batch, y_batch) in enumerate(train_db):
        with tf.GradientTape() as tape:  # record operations for gradient computation
            h1 = tf.matmul(x_batch, w1) + b1  # hidden-layer multiply-add
            h1 = tf.nn.relu(h1)
            y = tf.matmul(h1, w2) + b2

            # Mean squared error: mse = mean((y_ - y)^2)
            loss_mse = tf.reduce_mean(tf.square(y_batch - y))

            # L2 regularization: tf.nn.l2_loss(w) = sum(w ** 2) / 2
            loss_regularization = []
            loss_regularization.append(tf.nn.l2_loss(w1))
            loss_regularization.append(tf.nn.l2_loss(w2))
            # Sum the terms, e.g. tf.reduce_sum(tf.constant([[1, 1, 1], [1, 1, 1]])) -> 6
            loss_regularization = tf.reduce_sum(loss_regularization)
            loss = loss_mse + REGULARIZER * loss_regularization

        # Gradients of the loss with respect to each parameter
        variables = [w1, b1, w2, b2]
        grads = tape.gradient(loss, variables)

        # Gradient descent update: w1 = w1 - lr * w1_grad
        w1.assign_sub(lr * grads[0])
        b1.assign_sub(lr * grads[1])
        w2.assign_sub(lr * grads[2])
        b2.assign_sub(lr * grads[3])

    # Print the loss every 20 epochs
    if epoch % 20 == 0:
        print('epoch:', epoch, 'loss:', float(loss))

# Prediction
print("*******predict*******")
# xx and yy run from -3 to 3 with step 0.1, producing evenly spaced points
xx, yy = np.mgrid[-3:3:.1, -3:3:.1]
# Flatten xx and yy and pair them into 2-D coordinate points
grid = np.c_[xx.ravel(), yy.ravel()]
grid = tf.cast(grid, tf.float32)

# Feed the grid points through the trained network; probs holds the outputs
probs = []
for x_predict in grid:
    h1 = tf.matmul([x_predict], w1) + b1
    h1 = tf.nn.relu(h1)
    y = tf.matmul(h1, w2) + b2  # y is the prediction
    probs.append(y)

# Column 0 is x1, column 1 is x2
x1 = x_data[:, 0]
x2 = x_data[:, 1]
# Reshape probs to the shape of xx
probs = np.array(probs).reshape(xx.shape)
plt.scatter(x1, x2, color=np.squeeze(Y_c))
# contour draws the level set probs == 0.5, which after plt.show() is the red/blue boundary
plt.contour(xx, yy, probs, levels=[.5])
plt.show()
# If any of the data is unclear, print it out to inspect it
```

Overfitting shows up as a boundary that is not smooth; L2 regularization is used to mitigate it.


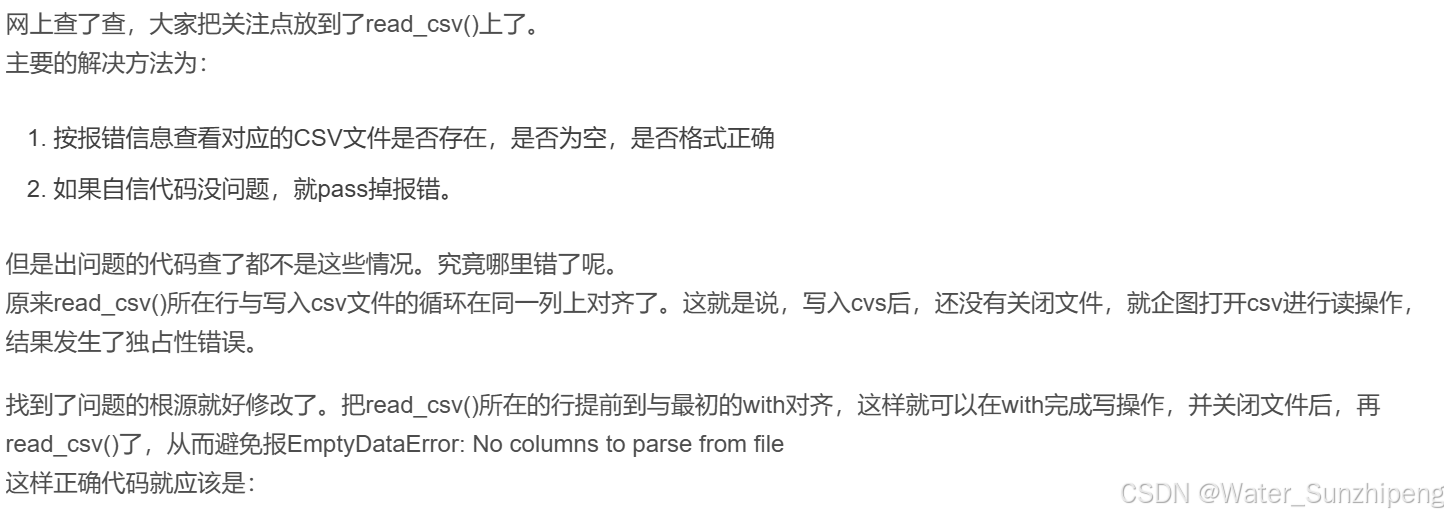
