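Abstract:

This article develops garbage-classification code with the MindSpore AI framework and the MobileNetV2 model. The code detects garbage objects in local images and saves the results to a file. It records the development steps: environment preparation, data download, loading and preprocessing, model construction, training, testing, and inference.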

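1. Objectives

Understand how the garbage-classification application code is written (in Python);

Understand basic use of the Linux operating system;

Learn the basic operation of converting a model with the atc command.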

2. Concepts

How the MobileNetV2 network works

    The MobileNet family was proposed by Google in 2017; MobileNetV2 followed in 2018

    A lightweight CNN aimed at

        mobile devices

        embedded or IoT devices

    Techniques

        Depthwise separable convolution

        Width multiplier α and resolution multiplier β (introduced with MobileNetV1)

        Inverted residual block

        Linear bottlenecks

    Advantages

        Fewer model parameters

        Less computation

        Higher accuracy, with a smaller optimized model
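As a rough illustration of why depthwise separable convolution cuts parameters and computation, the sketch below counts weights and multiply-accumulates for a single layer. The layer sizes (3x3 kernel, 32 to 64 channels, 112x112 output) are assumptions chosen for illustration, and biases and BatchNorm are ignored.

```python
def standard_conv_cost(c_in, c_out, k, h_out, w_out):
    """Weights and multiply-accumulates (MACs) of a standard k x k convolution."""
    params = k * k * c_in * c_out
    macs = params * h_out * w_out
    return params, macs


def depthwise_separable_cost(c_in, c_out, k, h_out, w_out):
    """Same layer split into a k x k depthwise conv plus a 1x1 pointwise conv."""
    depthwise = k * k * c_in          # one k x k filter per input channel
    pointwise = c_in * c_out          # the 1x1 conv mixes channels
    params = depthwise + pointwise
    macs = params * h_out * w_out
    return params, macs


# Illustrative layer sizes (assumed, not taken from the article).
std_params, std_macs = standard_conv_cost(32, 64, 3, 112, 112)
sep_params, sep_macs = depthwise_separable_cost(32, 64, 3, 112, 112)
print(std_params, sep_params)               # 18432 2336
print(round(std_params / sep_params, 2))    # 7.89
```

For a 3x3 kernel the saving approaches a factor of 9 as the number of output channels grows.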

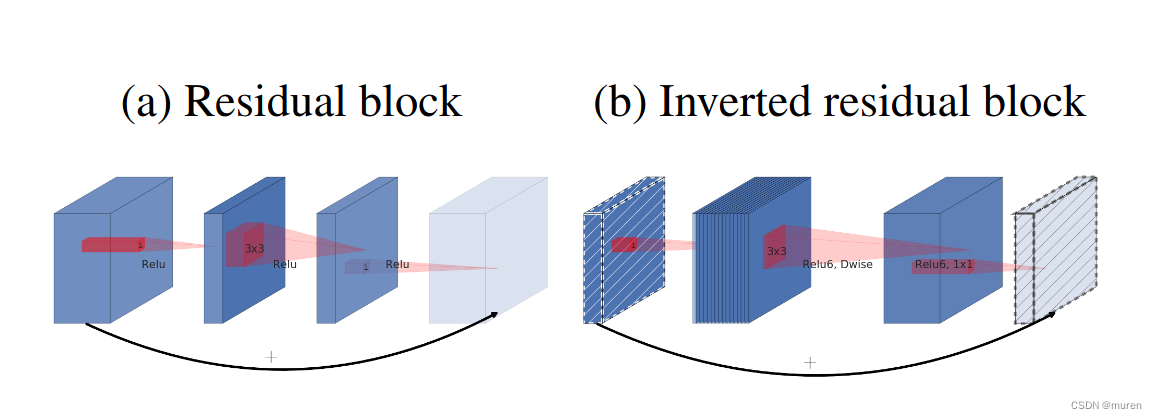

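Inverted residual block structure

    1x1 convolution to expand the channels

    3x3 depthwise convolution

    1x1 convolution to reduce the channels

Residual block structure

    1x1 convolution to reduce the channels

    3x3 convolution

    1x1 convolution to expand the channels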
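The contrast between the two layouts can be sketched by tracing channel counts through each block. The input widths (24 and 256 channels), the expansion ratio of 6, and the reduction factor of 4 are illustrative assumptions; the function names are hypothetical.

```python
def inverted_residual_channels(c_in, c_out, expand_ratio):
    """Channel counts: input -> 1x1 expand -> 3x3 depthwise -> 1x1 project."""
    hidden = c_in * expand_ratio
    return [c_in, hidden, hidden, c_out]


def residual_bottleneck_channels(c_in, reduction):
    """Channel counts: input -> 1x1 reduce -> 3x3 conv -> 1x1 expand."""
    hidden = c_in // reduction
    return [c_in, hidden, hidden, c_in]


print(inverted_residual_channels(24, 24, 6))   # [24, 144, 144, 24]
print(residual_bottleneck_channels(256, 4))    # [256, 64, 64, 256]
```

The inverted block is narrow at both ends and wide in the middle, which is the opposite of the classic bottleneck.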

3. Environment

Windows (x86) and Linux are supported; CPU, GPU, or Ascend all work.

See the "MindSpore Environment Setup Lab Manual".

%%capture captured_output
# mindspore==2.2.14 is preinstalled in the lab environment; to use another version, change the version number below
!pip uninstall mindspore -y
!pip install -i https://pypi.mirrors.ustc.edu.cn/simple mindspore==2.2.14

# Check the current mindspore version
!pip show mindspore

Output:

Name: mindspore

Version: 2.2.14

Summary: MindSpore is a new open source deep learning training/inference framework that could be used for mobile, edge and cloud scenarios.

Home-page: https://www.mindspore.cn

Author: The MindSpore Authors

Author-email: contact@mindspore.cn

License: Apache 2.0

Location: /home/nginx/miniconda/envs/jupyter/lib/python3.9/site-packages

Requires: asttokens, astunparse, numpy, packaging, pillow, protobuf, psutil, scipy

Required-by: mindnlp

4. Data processing

4.1 Dataset format

ImageFolder format: the images of each class are placed in their own folder.
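To make the ImageFolder convention concrete, here is a minimal sketch that scans one split directory and derives a class-to-index mapping from the folder names. The helper names and the tiny temporary tree are hypothetical, and the sorted ordering is an assumption for the demo; the article itself passes an explicit mapping (index_en) to the dataset.

```python
import os
import tempfile


def build_class_index(split_dir):
    """Map each class sub-folder name to an integer index, sorted by name."""
    classes = sorted(
        name for name in os.listdir(split_dir)
        if os.path.isdir(os.path.join(split_dir, name))
    )
    return {name: idx for idx, name in enumerate(classes)}


def count_images(split_dir):
    """Count the files found in each class folder."""
    return {
        name: len(os.listdir(os.path.join(split_dir, name)))
        for name in build_class_index(split_dir)
    }


# Build a tiny throwaway ImageFolder tree to demonstrate the layout.
with tempfile.TemporaryDirectory() as root:
    train_dir = os.path.join(root, "train")
    for cls, n_files in [("Battery", 2), ("Cardboard", 3)]:
        os.makedirs(os.path.join(train_dir, cls))
        for i in range(n_files):
            open(os.path.join(train_dir, cls, f"{i:05d}.jpg"), "wb").close()
    class_index = build_class_index(train_dir)
    image_counts = count_images(train_dir)

print(class_index)   # {'Battery': 0, 'Cardboard': 1}
print(image_counts)  # {'Battery': 2, 'Cardboard': 3}
```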

Dataset structure:

└─ImageFolder
    ├─train
    │   class1Folder
    │   ......
    └─eval
        class1Folder
        ......

4.2 Download the dataset

from download import download

# Download the data_en dataset
url = "https://ascend-professional-construction-dataset.obs.cn-north-4.myhuaweicloud.com:443/MindStudio-pc/data_en.zip"
path = download(url, "./", kind="zip", replace=True)

Output:

Downloading data from https://ascend-professional-construction-dataset.obs.cn-north-4.myhuaweicloud.com:443/MindStudio-pc/data_en.zip (21.3 MB)
file_sizes: 100%|███████████████████████████| 22.4M/22.4M [00:00<00:00, 123MB/s]
Extracting zip file...
Successfully downloaded / unzipped to ./

4.3 Download the pretrained weights

from download import download

# Download the pretrained weight file
url = "https://ascend-professional-construction-dataset.obs.cn-north-4.myhuaweicloud.com:443/ComputerVision/mobilenetV2-200_1067.zip"
path = download(url, "./", kind="zip", replace=True)

Output:

Downloading data from https://ascend-professional-construction-dataset.obs.cn-north-4.myhuaweicloud.com:443/ComputerVision/mobilenetV2-200_1067.zip (25.5 MB)
file_sizes: 100%|███████████████████████████| 26.7M/26.7M [00:00<00:00, 109MB/s]
Extracting zip file...
Successfully downloaded / unzipped to ./

4.4 Loading the data

Import the required modules:

import math
import os
import random

import numpy as np
from matplotlib import pyplot as plt
from easydict import EasyDict
from PIL import Image

import mindspore as ms
import mindspore.nn as nn
import mindspore.common.dtype as mstype
import mindspore.dataset as de
import mindspore.dataset.vision as C
import mindspore.dataset.transforms as C2
from mindspore import ops as P
from mindspore.ops import add
from mindspore import set_context, Tensor, load_checkpoint, save_checkpoint, export
from mindspore.train import Model
from mindspore.train import Callback, LossMonitor, ModelCheckpoint, CheckpointConfig

os.environ['GLOG_v'] = '3'  # Log level: 3 (ERROR), 2 (WARNING), 1 (INFO), 0 (DEBUG).
os.environ['GLOG_logtostderr'] = '0'  # 0: write logs to file, 1: print logs to the screen
os.environ['GLOG_log_dir'] = '../../log'  # Log directory
os.environ['GLOG_stderrthreshold'] = '2'  # When logging to file, also print logs at or above this level to the screen: 3 (ERROR), 2 (WARNING), 1 (INFO), 0 (DEBUG).
set_context(mode=ms.GRAPH_MODE, device_target="CPU", device_id=0)  # Run in graph mode; the device here is CPU (set device_target="Ascend" to run on Ascend)

Configure the parameters used later for training, validation, and inference:

# Garbage-classification labels and the dictionaries used for label mapping.
# Category keys: 干垃圾 = dry waste, 可回收物 = recyclables, 濕垃圾 = wet waste, 有害垃圾 = hazardous waste.
garbage_classes = {
    '干垃圾': ['貝殼', '打火機', '舊鏡子', '掃把', '陶瓷碗', '牙刷', '一次性筷子', '臟污衣服'],
    '可回收物': ['報紙', '玻璃制品', '籃球', '塑料瓶', '硬紙板', '玻璃瓶', '金屬制品', '帽子', '易拉罐', '紙張'],
    '濕垃圾': ['菜葉', '橙皮', '蛋殼', '香蕉皮'],
    '有害垃圾': ['電池', '藥片膠囊', '熒光燈', '油漆桶']
}

# class_cn and class_en list the same 26 classes in the same order.
class_cn = ['貝殼', '打火機', '舊鏡子', '掃把', '陶瓷碗', '牙刷', '一次性筷子', '臟污衣服',
            '報紙', '玻璃制品', '籃球', '塑料瓶', '硬紙板', '玻璃瓶', '金屬制品', '帽子', '易拉罐', '紙張',
            '菜葉', '橙皮', '蛋殼', '香蕉皮',
            '電池', '藥片膠囊', '熒光燈', '油漆桶']
class_en = ['Seashell', 'Lighter', 'Old Mirror', 'Broom', 'Ceramic Bowl', 'Toothbrush', 'Disposable Chopsticks', 'Dirty Cloth',
            'Newspaper', 'Glassware', 'Basketball', 'Plastic Bottle', 'Cardboard', 'Glass Bottle', 'Metalware', 'Hats', 'Cans', 'Paper',
            'Vegetable Leaf', 'Orange Peel', 'Eggshell', 'Banana Peel',
            'Battery', 'Tablet capsules', 'Fluorescent lamp', 'Paint bucket']

index_en = {'Seashell': 0, 'Lighter': 1, 'Old Mirror': 2, 'Broom': 3, 'Ceramic Bowl': 4, 'Toothbrush': 5, 'Disposable Chopsticks': 6, 'Dirty Cloth': 7,
            'Newspaper': 8, 'Glassware': 9, 'Basketball': 10, 'Plastic Bottle': 11, 'Cardboard': 12, 'Glass Bottle': 13, 'Metalware': 14, 'Hats': 15, 'Cans': 16, 'Paper': 17,
            'Vegetable Leaf': 18, 'Orange Peel': 19, 'Eggshell': 20, 'Banana Peel': 21,
            'Battery': 22, 'Tablet capsules': 23, 'Fluorescent lamp': 24, 'Paint bucket': 25}

# Training hyperparameters
config = EasyDict({
    "num_classes": 26,
    "image_height": 224,
    "image_width": 224,
    # "data_split": [0.9, 0.1],
    "backbone_out_channels": 1280,
    "batch_size": 16,
    "eval_batch_size": 8,
    "epochs": 10,
    "lr_max": 0.05,
    "momentum": 0.9,
    "weight_decay": 1e-4,
    "save_ckpt_epochs": 1,
    "dataset_path": "./data_en",
    "class_index": index_en,
    "pretrained_ckpt": "./mobilenetV2-200_1067.ckpt"  # mobilenetV2-200_1067.ckpt
})

4.5 Data preprocessing

Read the garbage-classification dataset

    ImageFolderDataset

        Specify the training set and the test set

Preprocessing

    Normalization

    Change the image channel layout (HWC to CHW)

    Training set: augmentation for more variety

        RandomCropDecodeResize

        RandomHorizontalFlip

        RandomColorAdjust

        Shuffle

    Test set

        Decode

        Resize

        CenterCrop
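The normalization and the test-set resize reduce to simple arithmetic, sketched below for a single RGB pixel. The mean and standard deviation values are the ones used in create_dataset in this section; the helper names are hypothetical.

```python
MEAN = [0.485 * 255, 0.456 * 255, 0.406 * 255]
STD = [0.229 * 255, 0.224 * 255, 0.225 * 255]


def normalize_pixel(rgb):
    """Apply per-channel (value - mean) / std to one RGB pixel in [0, 255]."""
    return [(v - m) / s for v, m, s in zip(rgb, MEAN, STD)]


def eval_resize_side(crop_size, crop_fraction=0.875):
    """Side length to resize to before taking a center crop of crop_size."""
    return int(crop_size / crop_fraction)


darkest = normalize_pixel([0, 0, 0])
brightest = normalize_pixel([255, 255, 255])
print([round(v, 3) for v in darkest])    # [-2.118, -2.036, -1.804]
print([round(v, 3) for v in brightest])  # [2.249, 2.429, 2.64]
print(eval_resize_side(224))             # 256
```

Normalized values therefore fall roughly in the range -2.12 to 2.64, and a test image is resized to 256x256 before the 224x224 center crop.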

def create_dataset(dataset_path, config, training=True, buffer_size=1000):"""create a train or eval dataset

?Args:dataset_path(string): the path of dataset.config(struct): the config of train and eval in diffirent platform.

?Returns:train_dataset, val_dataset"""data_path = os.path.join(dataset_path, 'train' if training else 'test')ds = de.ImageFolderDataset(data_path, num_parallel_workers=4, class_indexing=config.class_index)resize_height = config.image_heightresize_width = config.image_widthnormalize_op = C.Normalize(mean=[0.485*255, 0.456*255, 0.406*255], std=[0.229*255, 0.224*255, 0.225*255])change_swap_op = C.HWC2CHW()type_cast_op = C2.TypeCast(mstype.int32)

?if training:crop_decode_resize = C.RandomCropDecodeResize(resize_height, scale=(0.08, 1.0), ratio=(0.75, 1.333))horizontal_flip_op = C.RandomHorizontalFlip(prob=0.5)color_adjust = C.RandomColorAdjust(brightness=0.4, contrast=0.4, saturation=0.4)train_trans = [crop_decode_resize, horizontal_flip_op, color_adjust, normalize_op, change_swap_op]train_ds = ds.map(input_columns="image", operations=train_trans, num_parallel_workers=4)train_ds = train_ds.map(input_columns="label", operations=type_cast_op, num_parallel_workers=4)train_ds = train_ds.shuffle(buffer_size=buffer_size)ds = train_ds.batch(config.batch_size, drop_remainder=True)else:decode_op = C.Decode()resize_op = C.Resize((int(resize_width/0.875), int(resize_width/0.875)))center_crop = C.CenterCrop(resize_width)eval_trans = [decode_op, resize_op, center_crop, normalize_op, change_swap_op]eval_ds = ds.map(input_columns="image", operations=eval_trans, num_parallel_workers=4)eval_ds = eval_ds.map(input_columns="label", operations=type_cast_op, num_parallel_workers=4)ds = eval_ds.batch(config.eval_batch_size, drop_remainder=True)

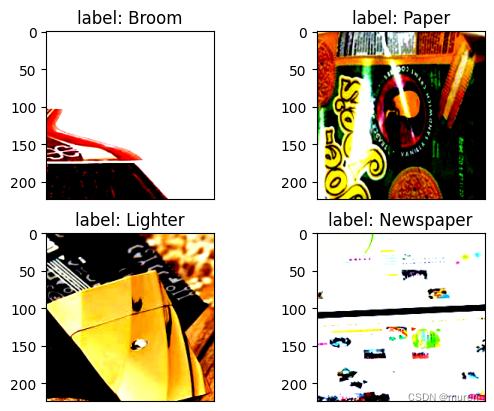

?return ds展示部分處理后的數據:

ds = create_dataset(dataset_path=config.dataset_path, config=config, training=False)
print(ds.get_dataset_size())
# Take the first batch through the public iterator protocol instead of the private _get_next().
data = next(ds.create_dict_iterator(output_numpy=True))
images = data['image']
labels = data['label']

for i in range(1, 5):
    plt.subplot(2, 2, i)
    plt.imshow(np.transpose(images[i], (1,2,0)))
    plt.title('label: %s' % class_en[labels[i]])
    plt.xticks([])
plt.show()

Output:

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers). Got range [-1.9831933..2.64].
Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers). Got range [-2.117904..2.4285715].
Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers). Got range [-2.0494049..2.273987].
Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers). Got range [-1.9809059..2.64].

32
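The clipping warnings appear because the images handed to imshow are still normalized. A minimal sketch of undoing the normalization before display is shown below; it works on nested Python lists so it runs without any dependencies, and the function name is hypothetical.

```python
MEAN = [0.485 * 255, 0.456 * 255, 0.406 * 255]
STD = [0.229 * 255, 0.224 * 255, 0.225 * 255]


def denormalize_chw(image):
    """Turn a normalized CHW image (nested lists) into HWC floats in [0, 1]."""
    channels, height, width = len(image), len(image[0]), len(image[0][0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            pixel = []
            for c in range(channels):
                value = image[c][y][x] * STD[c] + MEAN[c]   # back to 0..255
                pixel.append(min(max(value / 255.0, 0.0), 1.0))
            row.append(pixel)
        out.append(row)
    return out


# A 1x2 "image": one black pixel and one white pixel after normalization.
black = [(0 - m) / s for m, s in zip(MEAN, STD)]
white = [(255 - m) / s for m, s in zip(MEAN, STD)]
normalized = [[[black[c], white[c]]] for c in range(3)]
restored = denormalize_chw(normalized)
print(restored[0][0], restored[0][1])
```

With NumPy arrays the same idea is a broadcast multiply-add over the channel axis, applied before the transpose to HWC.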

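5. Building the MobileNetV2 model

Define the MobileNetV2 network

Inherit from mindspore.nn.Cell (the base class)

    __init__ method: defines the layers of the network

    construct method: defines the forward computation

    ReLU6: the activation function of the original model

    Pooling module: global average pooling layer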
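Before reading the network code, it can help to trace how channels and spatial size evolve through the backbone. The sketch below reuses the rounding rule of the _make_divisible helper and the default (t, c, n, s) settings from MobileNetV2Backbone in this section; trace_backbone is a hypothetical helper, and the 224x224 input size is taken from the config.

```python
def make_divisible(v, divisor, min_value=None):
    """Same rounding rule as the article's _make_divisible helper."""
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


# (t, c, n, s): expansion ratio, output channels, repeats, stride of first repeat.
CFGS = [
    (1, 16, 1, 1), (6, 24, 2, 2), (6, 32, 3, 2), (6, 64, 4, 2),
    (6, 96, 3, 1), (6, 160, 3, 2), (6, 320, 1, 1),
]


def trace_backbone(input_size=224, width_mult=1.0, round_nearest=8, last_channel=1280):
    """Return (channels, spatial size) after the stem, each stage, and the last conv."""
    size = input_size // 2                      # the stem conv has stride 2
    channels = make_divisible(32 * width_mult, round_nearest)
    trace = [(channels, size)]
    for _, c, _, s in CFGS:
        channels = make_divisible(c * width_mult, round_nearest)
        size //= s                              # only the first repeat uses stride s
        trace.append((channels, size))
    out_channels = make_divisible(last_channel * max(1.0, width_mult), round_nearest)
    trace.append((out_channels, size))          # the final 1x1 conv keeps the size
    return trace


for channels, size in trace_backbone():
    print(f"{channels:>5d} channels at {size}x{size}")
```

The last entry is 1280 channels at 7x7; global average pooling collapses the 7x7 grid, leaving a 1280-dimensional vector for the Dense head.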

__all__ = ['MobileNetV2', 'MobileNetV2Backbone', 'MobileNetV2Head', 'mobilenet_v2']


def _make_divisible(v, divisor, min_value=None):
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


class GlobalAvgPooling(nn.Cell):
    """
    Global avg pooling definition.

    Args:

    Returns:
        Tensor, output tensor.

    Examples:
        >>> GlobalAvgPooling()
    """

    def __init__(self):
        super(GlobalAvgPooling, self).__init__()

    def construct(self, x):
        x = P.mean(x, (2, 3))
        return x

class ConvBNReLU(nn.Cell):
    """
    Convolution/Depthwise fused with Batchnorm and ReLU block definition.

    Args:
        in_planes (int): Input channel.
        out_planes (int): Output channel.
        kernel_size (int): Input kernel size.
        stride (int): Stride size for the first convolutional layer. Default: 1.
        groups (int): channel group. Convolution is 1 while Depthwise is input channel. Default: 1.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> ConvBNReLU(16, 256, kernel_size=1, stride=1, groups=1)
    """

    def __init__(self, in_planes, out_planes, kernel_size=3, stride=1, groups=1):
        super(ConvBNReLU, self).__init__()
        padding = (kernel_size - 1) // 2
        in_channels = in_planes
        out_channels = out_planes
        if groups == 1:
            conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride,
                             pad_mode='pad', padding=padding)
        else:
            out_channels = in_planes
            conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad_mode='pad',
                             padding=padding, group=in_channels)

        layers = [conv, nn.BatchNorm2d(out_planes), nn.ReLU6()]
        self.features = nn.SequentialCell(layers)

    def construct(self, x):
        output = self.features(x)
        return output

class InvertedResidual(nn.Cell):
    """
    Mobilenetv2 residual block definition.

    Args:
        inp (int): Input channel.
        oup (int): Output channel.
        stride (int): Stride size for the first convolutional layer. Default: 1.
        expand_ratio (int): expand ratio of input channel

    Returns:
        Tensor, output tensor.

    Examples:
        >>> InvertedResidual(3, 256, 1, 1)
    """

    def __init__(self, inp, oup, stride, expand_ratio):
        super(InvertedResidual, self).__init__()
        assert stride in [1, 2]

        hidden_dim = int(round(inp * expand_ratio))
        # The shortcut is used only when input and output shapes match.
        self.use_res_connect = stride == 1 and inp == oup

        layers = []
        if expand_ratio != 1:
            # 1x1 convolution that expands the channels
            layers.append(ConvBNReLU(inp, hidden_dim, kernel_size=1))
        layers.extend([
            # 3x3 depthwise convolution
            ConvBNReLU(hidden_dim, hidden_dim, stride=stride, groups=hidden_dim),
            # 1x1 linear projection (no activation afterwards)
            nn.Conv2d(hidden_dim, oup, kernel_size=1, stride=1, has_bias=False),
            nn.BatchNorm2d(oup),
        ])
        self.conv = nn.SequentialCell(layers)
        self.cast = P.Cast()

    def construct(self, x):
        identity = x
        x = self.conv(x)
        if self.use_res_connect:
            return P.add(identity, x)
        return x

class MobileNetV2Backbone(nn.Cell):
    """
    MobileNetV2 architecture.

    Args:
        class_num (int): number of classes.
        width_mult (int): Channels multiplier for round to 8/16 and others. Default is 1.
        has_dropout (bool): Is dropout used. Default is false
        inverted_residual_setting (list): Inverted residual settings. Default is None
        round_nearest (list): Channel round to . Default is 8

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2(num_classes=1000)
    """

    def __init__(self, width_mult=1., inverted_residual_setting=None, round_nearest=8,
                 input_channel=32, last_channel=1280):
        super(MobileNetV2Backbone, self).__init__()
        block = InvertedResidual
        # setting of inverted residual blocks
        self.cfgs = inverted_residual_setting
        if inverted_residual_setting is None:
            self.cfgs = [
                # t, c, n, s
                [1, 16, 1, 1],
                [6, 24, 2, 2],
                [6, 32, 3, 2],
                [6, 64, 4, 2],
                [6, 96, 3, 1],
                [6, 160, 3, 2],
                [6, 320, 1, 1],
            ]

        # building first layer
        input_channel = _make_divisible(input_channel * width_mult, round_nearest)
        self.out_channels = _make_divisible(last_channel * max(1.0, width_mult), round_nearest)
        features = [ConvBNReLU(3, input_channel, stride=2)]
        # building inverted residual blocks
        for t, c, n, s in self.cfgs:
            output_channel = _make_divisible(c * width_mult, round_nearest)
            for i in range(n):
                stride = s if i == 0 else 1
                features.append(block(input_channel, output_channel, stride, expand_ratio=t))
                input_channel = output_channel
        features.append(ConvBNReLU(input_channel, self.out_channels, kernel_size=1))
        self.features = nn.SequentialCell(features)
        self._initialize_weights()

    def construct(self, x):
        x = self.features(x)
        return x

    def _initialize_weights(self):
        """
        Initialize weights.

        Args:

        Returns:
            None.

        Examples:
            >>> _initialize_weights()
        """
        self.init_parameters_data()
        for _, m in self.cells_and_names():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.set_data(Tensor(np.random.normal(0, np.sqrt(2. / n),
                                                          m.weight.data.shape).astype("float32")))
                if m.bias is not None:
                    m.bias.set_data(Tensor(np.zeros(m.bias.data.shape, dtype="float32")))
            elif isinstance(m, nn.BatchNorm2d):
                m.gamma.set_data(Tensor(np.ones(m.gamma.data.shape, dtype="float32")))
                m.beta.set_data(Tensor(np.zeros(m.beta.data.shape, dtype="float32")))

    @property
    def get_features(self):
        return self.features

class MobileNetV2Head(nn.Cell):
    """
    MobileNetV2 architecture.

    Args:
        class_num (int): Number of classes. Default is 1000.
        has_dropout (bool): Is dropout used. Default is false

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2(num_classes=1000)
    """

    def __init__(self, input_channel=1280, num_classes=1000,
                 has_dropout=False, activation="None"):
        super(MobileNetV2Head, self).__init__()
        # mobilenet head
        head = ([GlobalAvgPooling(), nn.Dense(input_channel, num_classes, has_bias=True)]
                if not has_dropout else
                [GlobalAvgPooling(), nn.Dropout(0.2), nn.Dense(input_channel, num_classes, has_bias=True)])
        self.head = nn.SequentialCell(head)
        self.need_activation = True
        if activation == "Sigmoid":
            self.activation = nn.Sigmoid()
        elif activation == "Softmax":
            self.activation = nn.Softmax()
        else:
            self.need_activation = False
        self._initialize_weights()

    def construct(self, x):
        x = self.head(x)
        if self.need_activation:
            x = self.activation(x)
        return x

    def _initialize_weights(self):
        """
        Initialize weights.

        Args:

        Returns:
            None.

        Examples:
            >>> _initialize_weights()
        """
        self.init_parameters_data()
        for _, m in self.cells_and_names():
            if isinstance(m, nn.Dense):
                m.weight.set_data(Tensor(np.random.normal(0, 0.01, m.weight.data.shape).astype("float32")))
                if m.bias is not None:
                    m.bias.set_data(Tensor(np.zeros(m.bias.data.shape, dtype="float32")))

    @property
    def get_head(self):
        return self.head

class MobileNetV2(nn.Cell):
    """
    MobileNetV2 architecture.

    Args:
        class_num (int): number of classes.
        width_mult (int): Channels multiplier for round to 8/16 and others. Default is 1.
        has_dropout (bool): Is dropout used. Default is false
        inverted_residual_setting (list): Inverted residual settings. Default is None
        round_nearest (list): Channel round to . Default is 8

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2(num_classes=1000)
    """

    def __init__(self, num_classes=1000, width_mult=1., has_dropout=False, inverted_residual_setting=None,
                 round_nearest=8, input_channel=32, last_channel=1280):
        super(MobileNetV2, self).__init__()
        # Keep the backbone object so that its out_channels attribute can size the head;
        # the SequentialCell returned by get_features has no such attribute.
        backbone = MobileNetV2Backbone(width_mult=width_mult,
                                       inverted_residual_setting=inverted_residual_setting,
                                       round_nearest=round_nearest, input_channel=input_channel,
                                       last_channel=last_channel)
        self.backbone = backbone.get_features
        self.head = MobileNetV2Head(input_channel=backbone.out_channels,
                                    num_classes=num_classes,
                                    has_dropout=has_dropout).get_head

    def construct(self, x):
        x = self.backbone(x)
        x = self.head(x)
        return x

class MobileNetV2Combine(nn.Cell):
    """
    MobileNetV2Combine architecture.

    Args:
        backbone (Cell): the features extract layers.
        head (Cell): the fully connected layers.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2Combine(backbone, head)
    """

    def __init__(self, backbone, head):
        super(MobileNetV2Combine, self).__init__(auto_prefix=False)
        self.backbone = backbone
        self.head = head

    def construct(self, x):
        x = self.backbone(x)
        x = self.head(x)
        return x


def mobilenet_v2(backbone, head):
    return MobileNetV2Combine(backbone, head)

6. Training and testing the MobileNetV2 model

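6.1 Training strategy

The simplest choice is a static learning rate, such as 0.01.

As the number of training steps grows and the model converges, the update magnitude of the weights should shrink to reduce training jitter, so the learning rate is usually decayed over time.

Common dynamic learning-rate decay strategies:

    polynomial decay / square decay;

    cosine decay (used in this example);

    exponential decay;

    stage decay.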
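For comparison, the four strategies can be sketched as plain functions of the step index. The cosine variant uses the same formula as the article's cosine_decay without warmup; the other three are generic textbook forms, and every function name and parameter value here is an assumption for illustration.

```python
import math


def polynomial_decay(step, total_steps, lr_max, lr_end=0.0, power=2.0):
    """Polynomial decay; power=2 gives the square decay variant."""
    frac = 1.0 - step / total_steps
    return (lr_max - lr_end) * frac ** power + lr_end


def cosine_decay_at(step, total_steps, lr_max, lr_end=0.0):
    """Cosine decay without warmup."""
    factor = 0.5 * (1 + math.cos(math.pi * step / total_steps))
    return (lr_max - lr_end) * factor + lr_end


def exponential_decay(step, lr_max, decay_rate, decay_steps):
    """Scale the rate by decay_rate every decay_steps steps (continuous form)."""
    return lr_max * decay_rate ** (step / decay_steps)


def stage_decay(step, lr_max, boundaries, factor=0.1):
    """Multiply the rate by `factor` each time a boundary step is passed."""
    passed = sum(1 for b in boundaries if step >= b)
    return lr_max * factor ** passed


total = 1000
for step in (0, 500, 999):
    print(step,
          round(polynomial_decay(step, total, 0.05), 5),
          round(cosine_decay_at(step, total, 0.05), 5),
          round(exponential_decay(step, 0.05, 0.5, 250), 5),
          round(stage_decay(step, 0.05, [300, 600]), 5))
```

All four start at lr_max; they differ in how quickly the rate falls and whether it changes smoothly or in jumps.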

def cosine_decay(total_steps, lr_init=0.0, lr_end=0.0, lr_max=0.1, warmup_steps=0):
    """
    Applies cosine decay to generate learning rate array.

    Args:
        total_steps(int): all steps in training.
        lr_init(float): init learning rate.
        lr_end(float): end learning rate
        lr_max(float): max learning rate.
        warmup_steps(int): all steps in warmup epochs.

    Returns:
        list, learning rate array.
    """
    lr_init, lr_end, lr_max = float(lr_init), float(lr_end), float(lr_max)
    decay_steps = total_steps - warmup_steps
    lr_all_steps = []
    inc_per_step = (lr_max - lr_init) / warmup_steps if warmup_steps else 0
    for i in range(total_steps):
        if i < warmup_steps:
            lr = lr_init + inc_per_step * (i + 1)
        else:
            cosine_decay = 0.5 * (1 + math.cos(math.pi * (i - warmup_steps) / decay_steps))
            lr = (lr_max - lr_end) * cosine_decay + lr_end
        lr_all_steps.append(lr)

    return lr_all_steps

6.2 Saving the trained model

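Checkpoints are added during training to save the model parameters.

Scenarios for saving a model:

    Inference after training

        Used for later inference or prediction.

        Save the parameters with the highest validation accuracy so far and use them for prediction.

    Resuming training

        For long training runs, continue from the point of interruption.

    Fine-tuning

        Fine-tune the model for another, similar task.

The example below loads a MobileNetV2 pretrained on ImageNet and fine-tunes it

    Only the modified final FC layer is trained

    Checkpoints are saved during training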
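The "keep the best parameters" scenario can be sketched with a small bookkeeping class. BestCheckpointTracker is a hypothetical helper, not part of MindSpore, and the accuracy values are made up for the demo.

```python
class BestCheckpointTracker:
    """Hypothetical helper: remembers the best accuracy and says when to save."""

    def __init__(self):
        self.best_accuracy = float("-inf")
        self.best_epoch = None

    def should_save(self, epoch, accuracy):
        """Return True when this epoch beats every earlier one."""
        if accuracy > self.best_accuracy:
            self.best_accuracy = accuracy
            self.best_epoch = epoch
            return True
        return False


tracker = BestCheckpointTracker()
# Made-up validation accuracies for four epochs.
history = [0.08, 0.17, 0.15, 0.21]
saved_epochs = [e for e, acc in enumerate(history, start=1) if tracker.should_save(e, acc)]
print(saved_epochs)         # [1, 2, 4]
print(tracker.best_epoch)   # 4
```

In a training loop, the checkpoint-saving call would be guarded by should_save so that the file on disk always holds the best parameters seen so far.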

def switch_precision(net, data_type):
    if ms.get_context('device_target') == "Ascend":
        net.to_float(data_type)
        for _, cell in net.cells_and_names():
            if isinstance(cell, nn.Dense):
                cell.to_float(ms.float32)

6.3 Model training and testing

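Before training

    Define the training function

    Read the data and instantiate the model

    Define the optimizer and the loss function

Concepts:

Loss function (objective function)

    Measures how far the predictions are from the true values.

    Deep learning improves the model by iteratively reducing the loss value.

Optimizer

    Minimizes the loss function and improves the model during training.

Gradient of the loss with respect to the weights

    Tells the optimizer which direction to move in

Parameter updates

    The MobileNetV2Backbone parameters are frozen and are not updated during training

    Only the MobileNetV2Head parameters are updated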
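The interaction between freezing and the optimizer can be sketched with scalar "parameters". This is a generic SGD-with-momentum rule written for illustration, not a line-by-line reproduction of nn.Momentum; the parameter names and the dictionary layout are assumptions.

```python
def momentum_step(params, grads, velocity, lr, momentum=0.9, weight_decay=0.0):
    """One SGD-with-momentum step over the trainable parameters only.

    params:   dict name -> {"value": float, "requires_grad": bool}
    grads:    dict name -> gradient of the loss w.r.t. that parameter
    velocity: dict name -> running momentum buffer (updated in place)
    """
    for name, p in params.items():
        if not p["requires_grad"]:
            continue                                   # frozen: left untouched
        g = grads[name] + weight_decay * p["value"]    # L2 weight decay
        velocity[name] = momentum * velocity.get(name, 0.0) + g
        p["value"] -= lr * velocity[name]


# Toy scalar parameters; the names are illustrative only.
params = {
    "backbone.w": {"value": 1.0, "requires_grad": False},
    "head.w": {"value": 1.0, "requires_grad": True},
}
grads = {"backbone.w": 0.5, "head.w": 0.5}
velocity = {}
momentum_step(params, grads, velocity, lr=0.1)
momentum_step(params, grads, velocity, lr=0.1)
print(params["backbone.w"]["value"])          # 1.0
print(round(params["head.w"]["value"], 4))    # 0.855
```

The frozen parameter keeps its pretrained value, while the trainable one moves further on the second step because the momentum buffer has accumulated.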

MindSpore loss functions

    SoftmaxCrossEntropyWithLogits (used in this example)

    L1Loss

    MSELoss
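What softmax cross-entropy computes for one sample with an integer label can be written out in a few lines. This is a pure-Python sketch of the formula rather than MindSpore's implementation, and the logits are made up.

```python
import math


def softmax_cross_entropy(logits, label):
    """Cross-entropy of softmax(logits) against an integer class label."""
    shift = max(logits)                               # for numerical stability
    log_sum_exp = shift + math.log(sum(math.exp(z - shift) for z in logits))
    return log_sum_exp - logits[label]


num_classes = 26
uniform_loss = softmax_cross_entropy([0.0] * num_classes, label=3)
print(round(uniform_loss, 4))   # 3.2581

confident = [0.0] * num_classes
confident[3] = 5.0
print(softmax_cross_entropy(confident, label=3) < uniform_loss)   # True
```

A classifier that spreads its probability evenly over 26 classes has a loss of ln(26), about 3.26, which is close to the first loss values printed in the training log of this section (about 3.24) and is what one would expect from a freshly initialized head.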

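The loss is printed during training and testing; its overall trend should be downward while the accuracy rises.

The test accuracy is computed after every epoch, which shows how the predictive ability of the MobileNetV2 model improves.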

from mindspore.amp import FixedLossScaleManager
import time
LOSS_SCALE = 1024

train_dataset = create_dataset(dataset_path=config.dataset_path, config=config)
# training=False selects the test split and the evaluation transforms;
# without it the "evaluation" would run on augmented training data.
eval_dataset = create_dataset(dataset_path=config.dataset_path, config=config, training=False)
step_size = train_dataset.get_dataset_size()

backbone = MobileNetV2Backbone() #last_channel=config.backbone_out_channels
# Freeze parameters of backbone. You can comment these two lines.
for param in backbone.get_parameters():
    param.requires_grad = False
# load parameters from pretrained model
load_checkpoint(config.pretrained_ckpt, backbone)

head = MobileNetV2Head(input_channel=backbone.out_channels, num_classes=config.num_classes)
network = mobilenet_v2(backbone, head)

# define loss, optimizer, and model
loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True, reduction='mean')
loss_scale = FixedLossScaleManager(LOSS_SCALE, drop_overflow_update=False)
lrs = cosine_decay(config.epochs * step_size, lr_max=config.lr_max)
opt = nn.Momentum(network.trainable_params(), lrs, config.momentum, config.weight_decay, loss_scale=LOSS_SCALE)

# Define the train_loop function used for training.
def train_loop(model, dataset, loss_fn, optimizer):
    # Define the forward function
    def forward_fn(data, label):
        logits = model(data)
        loss = loss_fn(logits, label)
        return loss

    # Use mindspore.value_and_grad to obtain grad_fn, which returns the loss and the gradients.
    # The gradients are taken with respect to the model parameters, so grad_position is None
    # and the trainable parameters are passed in.
    grad_fn = ms.value_and_grad(forward_fn, None, optimizer.parameters)

    # Define the one-step training function
    def train_step(data, label):
        loss, grads = grad_fn(data, label)
        optimizer(grads)
        return loss

    size = dataset.get_dataset_size()
    model.set_train()
    for batch, (data, label) in enumerate(dataset.create_tuple_iterator()):
        loss = train_step(data, label)

        if batch % 10 == 0:
            loss, current = loss.asnumpy(), batch
            print(f"loss: {loss:>7f} [{current:>3d}/{size:>3d}]")


# Define the test_loop function used for testing.
def test_loop(model, dataset, loss_fn):
    num_batches = dataset.get_dataset_size()
    model.set_train(False)
    total, test_loss, correct = 0, 0, 0
    for data, label in dataset.create_tuple_iterator():
        pred = model(data)
        total += len(data)
        test_loss += loss_fn(pred, label).asnumpy()
        correct += (pred.argmax(1) == label).asnumpy().sum()
    test_loss /= num_batches
    correct /= total
    print(f"Test: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")

print("============== Starting Training ==============")
# To save time, only 2 epochs are trained here; adjust as needed.
epoch_begin_time = time.time()
epochs = 2
for t in range(epochs):
    begin_time = time.time()
    print(f"Epoch {t+1}\n-------------------------------")
    train_loop(network, train_dataset, loss, opt)
    ms.save_checkpoint(network, "save_mobilenetV2_model.ckpt")
    end_time = time.time()
    times = end_time - begin_time
    print(f"per epoch time: {times}s")
    test_loop(network, eval_dataset, loss)
epoch_end_time = time.time()
times = epoch_end_time - epoch_begin_time
print(f"total time: {times}s")
print("============== Training Success ==============")

Output:

============== Starting Training ==============

Epoch 1

-------------------------------

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:11:59.680.056 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/1438112663.py]

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:11:59.680.141 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/1438112663.py]

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:11:59.680.195 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/1438112663.py]

loss: 3.241972 [ 0/162]

loss: 3.257221 [ 10/162]

loss: 3.279036 [ 20/162]

loss: 3.319016 [ 30/162]

loss: 3.203032 [ 40/162]

loss: 3.281466 [ 50/162]

loss: 3.279725 [ 60/162]

loss: 3.229515 [ 70/162]

loss: 3.267290 [ 80/162]

loss: 3.188127 [ 90/162]

loss: 3.212995 [100/162]

loss: 3.241497 [110/162]

loss: 3.209007 [120/162]

loss: 3.172501 [130/162]

loss: 3.197280 [140/162]

loss: 3.272911 [150/162]

loss: 3.216317 [160/162]

per epoch time: 76.58693552017212s

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:13:15.037.060 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/3136751602.py]

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:13:15.037.167 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/3136751602.py]

Test:
 Accuracy: 8.0%, Avg loss: 3.194478

Epoch 2

-------------------------------

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:14:30.208.773 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/1438112663.py]

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:14:30.208.853 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/1438112663.py]

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:14:30.208.907 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/1438112663.py]

loss: 3.188095 [ 0/162]

loss: 3.165776 [ 10/162]

loss: 3.191228 [ 20/162]

loss: 3.138081 [ 30/162]

loss: 3.074074 [ 40/162]

loss: 3.163727 [ 50/162]

loss: 3.165467 [ 60/162]

loss: 3.173247 [ 70/162]

loss: 3.159379 [ 80/162]

loss: 3.143544 [ 90/162]

loss: 3.200886 [100/162]

loss: 3.201387 [110/162]

loss: 3.165534 [120/162]

loss: 3.171612 [130/162]

loss: 3.203765 [140/162]

loss: 3.166876 [150/162]

loss: 3.125233 [160/162]

per epoch time: 76.00073027610779s

Test:
 Accuracy: 17.4%, Avg loss: 3.111141

total time: 295.01550674438477s

============== Training Success ==============

7. Model inference

Load the model checkpoint

    Load the saved parameters with the load_checkpoint interface

    Pass the parameters into the original network
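Once the network returns logits, the predicted class index can also be mapped to one of the four garbage categories. The sketch below relies on the label order used in this article (8 dry-waste classes, then 10 recyclables, 4 wet-waste and 4 hazardous classes); the English category names are translations, and the helper names and logits are made up.

```python
# Number of classes in each category, in the order the labels are listed.
CATEGORY_SIZES = [("Dry waste", 8), ("Recyclables", 10), ("Wet waste", 4), ("Hazardous waste", 4)]


def argmax(values):
    """Index of the largest value (the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def category_of(class_index):
    """Map a class index in [0, 26) to its garbage category."""
    upper = 0
    for name, size in CATEGORY_SIZES:
        upper += size
        if class_index < upper:
            return name
    raise ValueError(f"class index out of range: {class_index}")


# Made-up logits for one image: class 12 (Cardboard) scores highest.
logits = [0.0] * 26
logits[12] = 2.5
pred = argmax(logits)
print(pred, category_of(pred))   # 12 Recyclables
```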

CKPT = "save_mobilenetV2_model.ckpt"

def image_process(image):
    """
    Process one image at a time.

    Args:
        image: shape (H, W, C)
    """
    mean = [0.485*255, 0.456*255, 0.406*255]
    std = [0.229*255, 0.224*255, 0.225*255]
    image = (np.array(image) - mean) / std
    image = image.transpose((2,0,1))
    img_tensor = Tensor(np.array([image], np.float32))
    return img_tensor


def infer_one(network, image_path):
    image = Image.open(image_path).resize((config.image_height, config.image_width))
    logits = network(image_process(image))
    pred = np.argmax(logits.asnumpy(), axis=1)[0]
    print(image_path, class_en[pred])


def infer():
    backbone = MobileNetV2Backbone(last_channel=config.backbone_out_channels)
    head = MobileNetV2Head(input_channel=backbone.out_channels, num_classes=config.num_classes)
    network = mobilenet_v2(backbone, head)
    load_checkpoint(CKPT, network)
    for i in range(91, 100):
        infer_one(network, f'data_en/test/Cardboard/000{i}.jpg')

infer()

Output:

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:16:53.811.718 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/3136751602.py]

[ERROR] CORE(185595,ffff82d60930,python):2024-06-07-01:16:53.811.810 [mindspore/core/utils/file_utils.cc:253] GetRealPath] Get realpath failed, path[/tmp/ipykernel_185595/3136751602.py]

data_en/test/Cardboard/00091.jpg Lighter

data_en/test/Cardboard/00092.jpg Broom

data_en/test/Cardboard/00093.jpg Basketball

data_en/test/Cardboard/00094.jpg Basketball

data_en/test/Cardboard/00095.jpg Basketball

data_en/test/Cardboard/00096.jpg Glassware

data_en/test/Cardboard/00097.jpg Lighter

data_en/test/Cardboard/00098.jpg Basketball

data_en/test/Cardboard/00099.jpg Basketball

8. Exporting AIR/GEIR/ONNX model files

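Export an AIR model file for later model conversion and inference on the Atlas 200 DK.

AIR export is currently supported only in a MindSpore + Ascend environment, so the code below leaves the AIR and GEIR calls commented out and exports ONNX instead.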

backbone = MobileNetV2Backbone(last_channel=config.backbone_out_channels)
head = MobileNetV2Head(input_channel=backbone.out_channels, num_classes=config.num_classes)
network = mobilenet_v2(backbone, head)
load_checkpoint(CKPT, network)

input = np.random.uniform(0.0, 1.0, size=[1, 3, 224, 224]).astype(np.float32)
# export(network, Tensor(input), file_name='mobilenetv2.air', file_format='AIR')
# export(network, Tensor(input), file_name='mobilenetv2.pb', file_format='GEIR')
export(network, Tensor(input), file_name='mobilenetv2.onnx', file_format='ONNX')
