1 Docker簡介及部署方法

1.1 Docker簡介

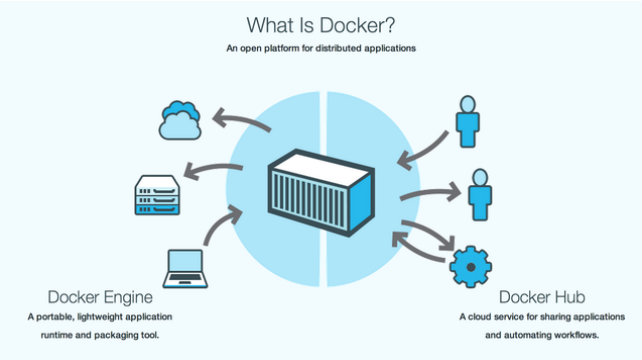

1.1.1 什么是docker?

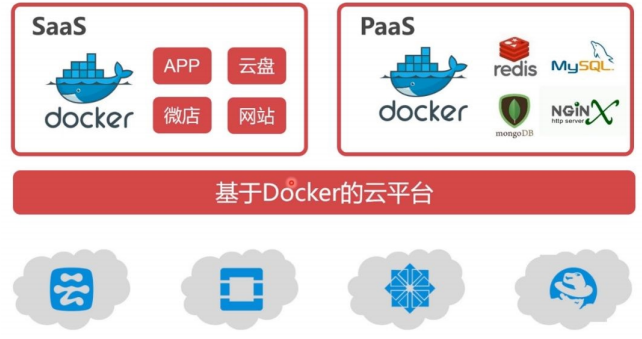

1.1.2 docker在企業中的應用場景

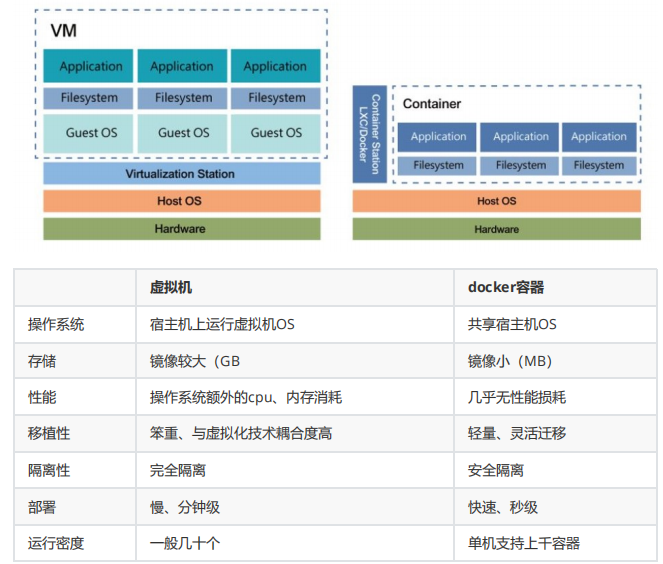

1.1.3 docker與虛擬化的對比

1.1.4 docker的優勢

- 對于開發人員:Build once、Run anywhere。

- 對于運維人員:Configure once、Run anything

- 容器技術大大提升了IT人員的幸福指數!

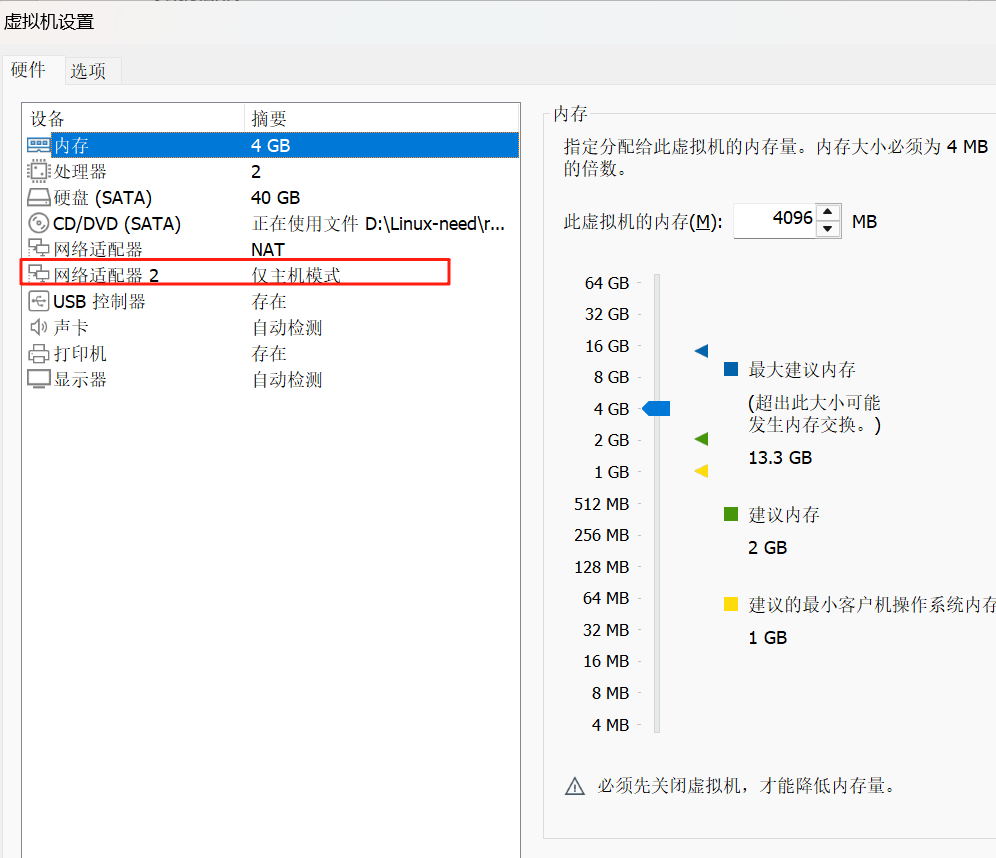

2 部署docker

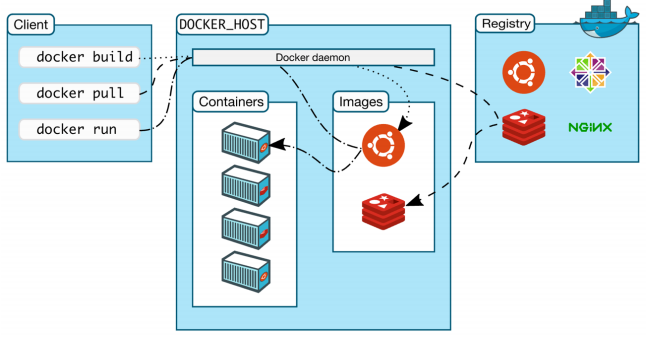

2.1 容器工作方法

2.2 部署第一個容器

2.3 安裝docker-ce

2.2.1 配置軟件倉庫

vim /etc/yum.repos.d/docker.repo編輯如下內容:?

[docker]

name=docker-ce

baseurl=https://mirrors.aliyun.com/docker-ce/linux/rhel/9/x86_64/stable

gpgcheck=0卸載podman:

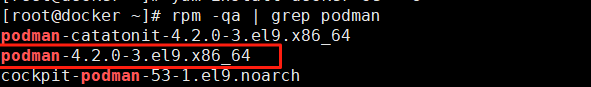

查詢podman包

rpm -qa | grep podman

進行卸載:?

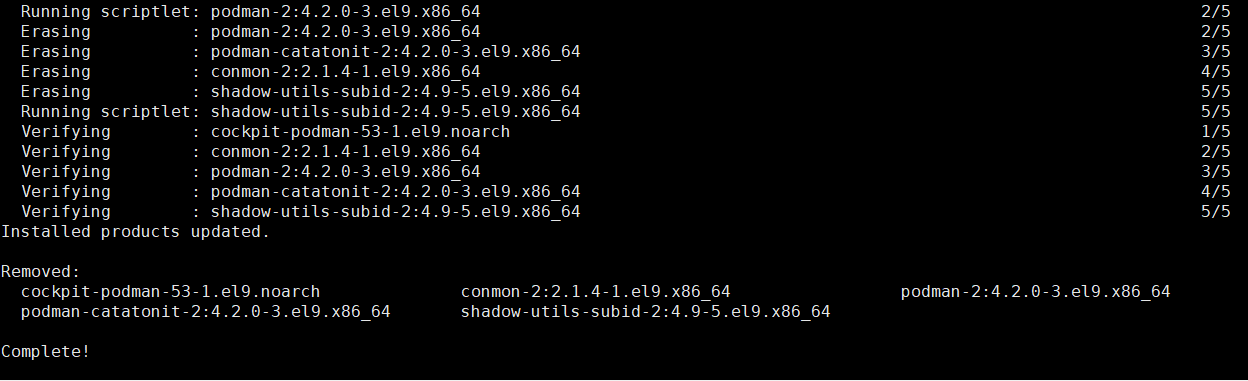

dnf remove podman-4.2.0-3.el9.x86_64 -y

再次查詢,確認卸載干凈:

rpm -qa | grep podman卸載沖突包:

sudo dnf remove -y buildah runc2.2.2 安裝docker-ce并啟動服務

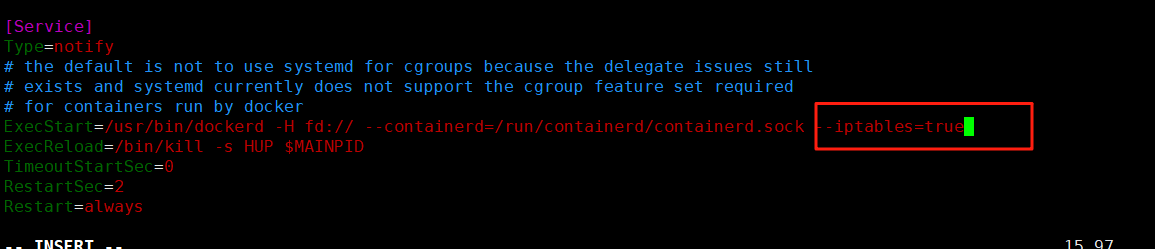

yum install -y docker-ce#編輯docker啟動文件,設定其使用iptables的網絡設定方式,默認使用nftables

vim /lib/systemd/system/docker.service ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

--iptables=true
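Editing the packaged unit file under /lib/systemd/system works, but a package update can overwrite it. As a minimal sketch, the same change can be kept in a systemd drop-in instead. The helper name `write_docker_dropin` and the file name `override.conf` are illustrative assumptions; the `ExecStart` line is the one shown above.

```shell
# Write a systemd drop-in that overrides dockerd's ExecStart.
# $1: drop-in directory (defaults to the standard location for docker.service)
write_docker_dropin() {
  dropin_dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dropin_dir" || return 1
  cat > "$dropin_dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the packaged command before the new one is set.
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --iptables=true
EOF
}

# Usage (as root):
#   write_docker_dropin
#   systemctl daemon-reload
#   systemctl restart docker
```

The drop-in survives package updates because systemd merges it on top of the packaged unit at load time.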

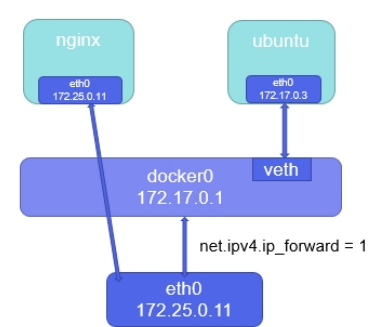

2.2.3 Enabling kernel network options

echo "net.ipv4.ip_forward = 1" > /etc/sysctl.d/docker.conf

Reload the kernel parameters and restart the Docker service:

sysctl --system

systemctl restart docker

Note:

# RHEL 7 additionally needs the two bridge settings below

vim /etc/sysctl.d/docker.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

sysctl --system

systemctl restart docker

3 Basic Docker operations

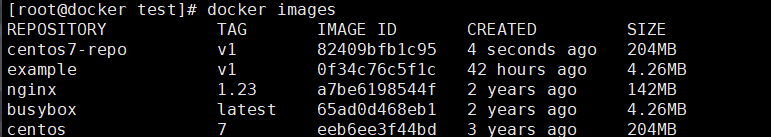

3.1 Docker鏡像管理:

3.1.1? 鏡像搜索:

docker search nginx查看本地鏡像:

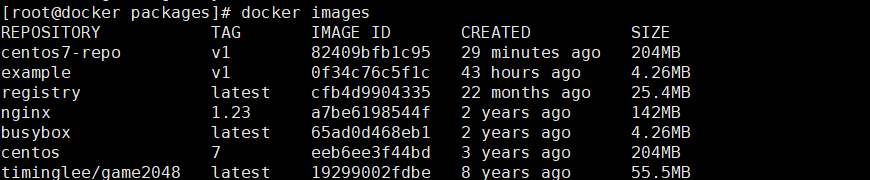

docker images[root@docker packages]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx 1.23 a7be6198544f 2 years ago 142MB3.1.2 拉取鏡像:

[root@Docker-node1 ~]# docker pull busybox

[root@Docker-node1 ~]# docker pull nginx:1.26-alpine

3.1.3 查看鏡像信息:

docker image inspect nginx:1.23[root@docker packages]# docker image inspect nginx:1.23

[{"Id": "sha256:a7be6198544f09a75b26e6376459b47c5b9972e7aa742af9f356b540fe852cd4","RepoTags": ["nginx:1.23"],"RepoDigests": [],"Parent": "","Comment": "","Created": "2023-05-23T08:51:38.011802405Z","DockerVersion": "20.10.23","Author": "","Architecture": "amd64","Os": "linux","Size": 142145851,"GraphDriver": {"Data": {"LowerDir": "/var/lib/docker/overlay2/e96e76939958426de535ea7d668c10ea0910a2bdafea9776271c0e2d37cf685c/diff:/var/lib/docker/overlay2/b716465abcc0d148b864758dff55bcc4c1ecaa7dab111ea8cedd12986cffdd3c/diff:/var/lib/docker/overlay2/d61e1a60886964ff9f407a0640b16941a0dddb80eb16446a73026364b5eafec9/diff:/var/lib/docker/overlay2/e89fafbf7412f392423c0d014ae9a9d7190ef256420db94746235c442cf466a2/diff:/var/lib/docker/overlay2/1a713b6c5dc96eef002a165325a805bde3b7de0cd00845b43b64b5b8aa90d8cf/diff","MergedDir": "/var/lib/docker/overlay2/181001765be769571578488be650304e134037bb0d4e5364fdd39facc541d91e/merged","UpperDir": "/var/lib/docker/overlay2/181001765be769571578488be650304e134037bb0d4e5364fdd39facc541d91e/diff","WorkDir": "/var/lib/docker/overlay2/181001765be769571578488be650304e134037bb0d4e5364fdd39facc541d91e/work"},"Name": "overlay2"},"RootFS": {"Type": "layers","Layers": ["sha256:8cbe4b54fa88d8fc0198ea0cc3a5432aea41573e6a0ee26eca8c79f9fbfa40e3","sha256:5dd6bfd241b4f4d0bb0a784cd7cefe00829edce2fccb2fcad71244df6344abff","sha256:043198f57be0cb6dd81abe9dd01531faa8dd2879239dc3b798870c0604e1bb3c","sha256:2731b5cfb6163ee5f1fe6126edb946ef11660de5a949404cc76207bf8a9c0e6e","sha256:6791458b394218a2c05b055f952309afa42ec238b74d5165cf1e2ebe9ffe6a33","sha256:4d33db9fdf22934a1c6007dcfbf84184739d590324c998520553d7559a172cfb"]},"Metadata": {"LastTagTime": "0001-01-01T00:00:00Z"},"Config": {"Cmd": ["nginx","-g","daemon off;"],"Entrypoint": ["/docker-entrypoint.sh"],"Env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin","NGINX_VERSION=1.23.4","NJS_VERSION=0.7.11","PKG_RELEASE=1~bullseye"],"ExposedPorts": {"80/tcp": {}},"Labels": {"maintainer": "NGINX Docker 
Maintainers \u003cdocker-maint@nginx.com\u003e"},"OnBuild": null,"StopSignal": "SIGQUIT","User": "","Volumes": null,"WorkingDir": ""}}

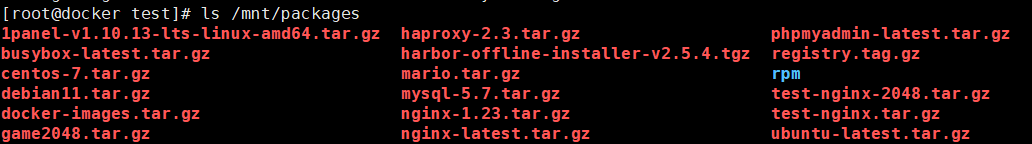

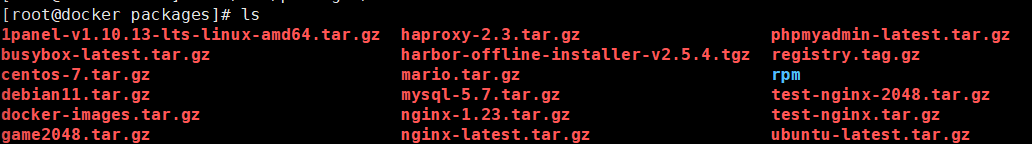

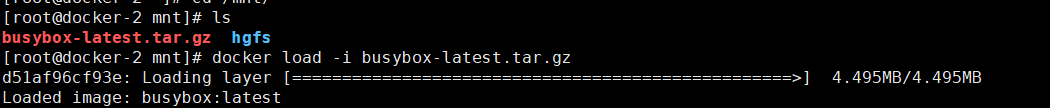

]

3.1.4 Loading images

[root@docker packages]# docker load -i game2048.tar.gz

011b303988d2: Loading layer [==================================================>] 5.05MB/5.05MB

36e9226e74f8: Loading layer [==================================================>] 51.46MB/51.46MB

192e9fad2abc: Loading layer [==================================================>] 3.584kB/3.584kB

6d7504772167: Loading layer [==================================================>] 4.608kB/4.608kB

88fca8ae768a: Loading layer [==================================================>] 629.8kB/629.8kB

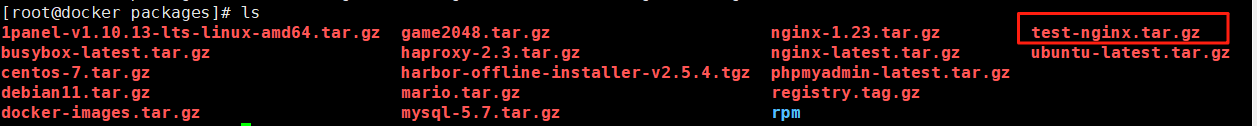

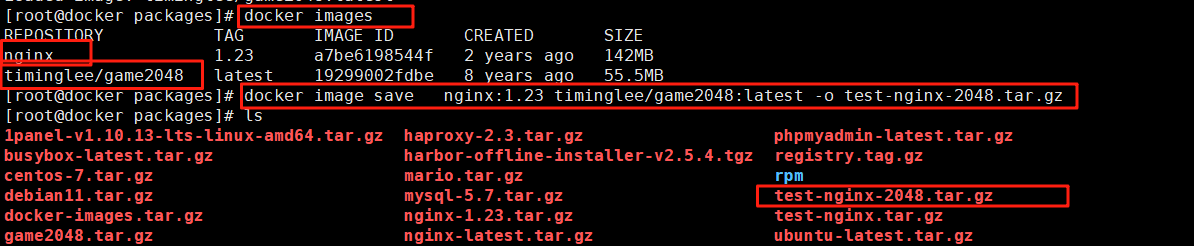

Loaded image: timinglee/game2048:latest

3.1.5 Exporting images

Save a single image:

docker image save NAME:TAG -o OUTPUT_PATH

docker image save nginx:1.23 -o test-nginx.tar.gz

Save several images into one archive:

docker image save NAME:TAG NAME:TAG -o OUTPUT_PATH

docker image save nginx:1.23 timinglee/game2048:latest -o test-nginx-2048.tar.gz

Save all images:

docker save `docker images | awk 'NR>1{print $1":"$2}'` -o images.tar.gz

3.1.6 Deleting images
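The save-all command above and the delete-all command later in this section both build their argument list with `docker images | awk`. Untagged images are listed as `<none>`, and a `<none>:<none>` argument makes `docker save` or `docker rmi` fail. The following is a small sketch of a filter that skips such rows; the function name `image_refs` is a hypothetical helper, not part of Docker.

```shell
# Print REPOSITORY:TAG for every tagged image listed on stdin.
# Input is expected to be the table printed by `docker images` (header included).
image_refs() {
  awk 'NR > 1 && $1 != "<none>" && $2 != "<none>" { print $1 ":" $2 }'
}

# Usage (requires a running Docker daemon; xargs -r is the GNU option
# that skips the command when there is no input):
#   docker images | image_refs | xargs -r docker save -o images.tar.gz
#   docker images | image_refs | xargs -r docker rmi
```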

[root@docker packages]# docker rmi nginx:1.23

Untagged: nginx:1.23

Deleted: sha256:a7be6198544f09a75b26e6376459b47c5b9972e7aa742af9f356b540fe852cd4

Deleted: sha256:b142903ff5d25e779c293678ce1bb71604778bc243cda8c26180675454fbf11c

Deleted: sha256:185fa8597cdc03f83ab9ec3fe21d8ac6fbe49fa65cf8422f05d1d07b06b25fce

Deleted: sha256:1e3de4dfc3a245258917d9cd0860bd30969e1b430ecba95b1eaf2666d8882d24

Deleted: sha256:95b14e2b8329c1fcec4e1df001aac4874bc2247281ae96cdfe355847faf4caa9

Deleted: sha256:8566a5cb57a5d27b0eba5d952429bc542c05853014dc2c8f362540e7533fbff9

Deleted: sha256:8cbe4b54fa88d8fc0198ea0cc3a5432aea41573e6a0ee26eca8c79f9fbfa40e3刪除全部鏡像:

docker rmi `docker images | awk 'NR>1{print $1":"$2}'`3.2 容器的常用操作

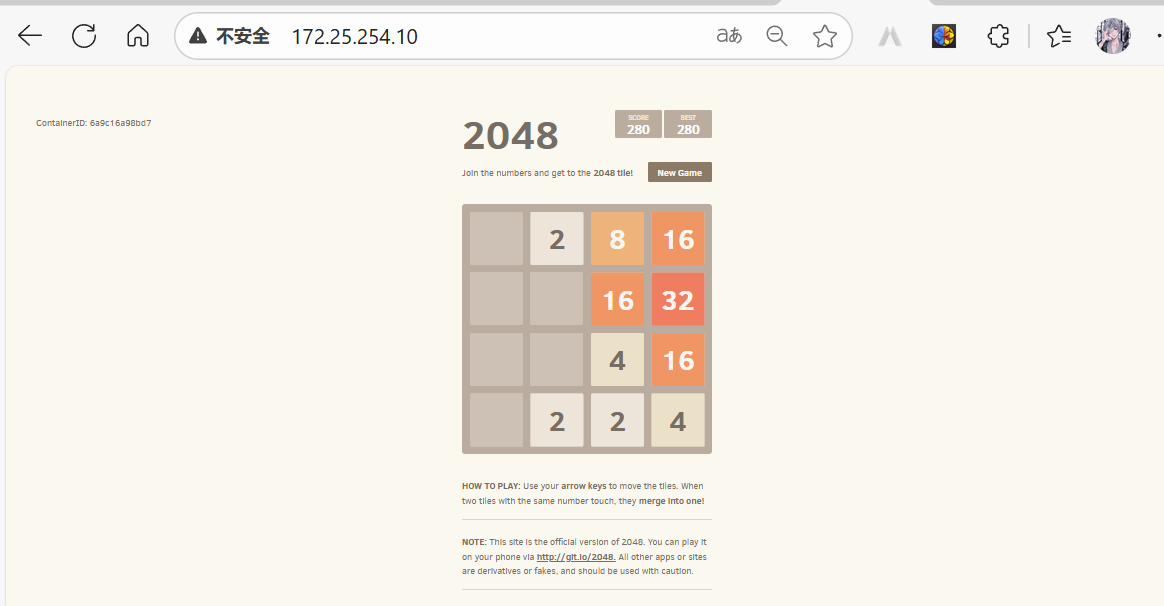

3.2.1 啟動容器:

docker run -d --name game2048 -p 80:80 timinglee/game2048查看進程:

docker ps訪問容器映射頁面:

172.25.254.10:80

-d #后臺運行-i #交互式運行-t #打開一個終端--name #指定容器名稱-p #端口映射 -p 80:8080 把容器8080端口映射到本機80端口--rm #容器停止自動刪除容器--network #指定容器使用的網絡
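The options above can be combined in one command. The sketch below wraps such a command in a hypothetical helper, `run_web`; the `DOCKER` variable is an assumption added only so that the command can be printed instead of executed.

```shell
# DOCKER can be set to "echo" for a dry run that only prints the arguments.
DOCKER="${DOCKER:-docker}"

# Start nginx:1.23 in the background, remove it when it stops,
# and publish container port 80 on the given host port.
# $1: container name, $2: host port
run_web() {
  "$DOCKER" run -d --rm --name "$1" -p "$2:80" nginx:1.23
}

# Usage:
#   run_web web 8080               # then open http://<host>:8080
#   DOCKER=echo run_web web 8080   # print the arguments without running anything
```

Note the order in `-p`: the host port comes first, the container port second.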

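3.2.2 Viewing container information

[root@Docker-node1 ~]# docker ps # list running containers

[root@Docker-node1 ~]# docker ps -a # list all containers

[root@Docker-node1 ~]# docker inspect busybox # show detailed information about a container

3.2.3 Stopping and starting containers

[root@Docker-node1 ~]# docker stop busybox # stop the container

[root@Docker-node1 ~]# docker kill busybox # kill the container; a signal can be specified

[root@Docker-node1 ~]# docker start busybox # start a stopped container

3.2.4 Deleting containers

[root@Docker-node1 ~]# docker rm centos7 # delete a stopped container

[root@Docker-node1 ~]# docker rm -f busybox # force-delete a running container

[root@Docker-node1 ~]# docker container prune -f # delete all stopped containers

3.2.5 Committing container changes

Everything written inside a container is lost when the container is deleted. To keep the changes, commit the container as a new image.

[root@Docker-node1 ~]# docker run -it --name test busybox

/ # touch leefile # create a file inside the container

/ # ls

bin etc leefile lib64 root tmp var

dev home lib proc sys usr

/ #

[root@Docker-node1 ~]# docker rm test # delete the container

test

[root@Docker-node1 ~]# docker run -it --name test busybox # a new container started after the deletion no longer has the file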

/ # ls

bin dev etc home lib lib64 proc root sys tmp usr var

/ #

[root@Docker-node1 ~]# docker commit -m "add leefile" test busybox:v1

sha256:c8ff62b7480c951635acb6064acdfeb25282bd0c19cbffee0e51f3902cbfa4bd

[root@Docker-node1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

busybox v1 c8ff62b7480c 12 seconds ago 4.26MB

[root@Docker-node1 ~]# docker image history busybox:v1

IMAGE CREATED CREATED BY SIZE COMMENT

c8ff62b7480c 2 minutes ago sh 17B add leefile

65ad0d468eb1 15 months ago BusyBox 1.36.1 (glibc), Debian 12 4.26MB

3.2.6 Copying files between the host and a container

[root@Docker-node1 ~]# docker cp test2:/leefile /mnt # copy a file from the container to the host

Successfully copied 1.54kB to /mnt

[root@Docker-node1 ~]# docker cp /etc/fstab test2:/fstab # copy a file from the host into the container

3.2.7 Viewing container logs

[root@Docker-node1 ~]# docker logs web

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to

perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-bydefault.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of

/etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in

/etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2024/08/14 07:50:01 [notice] 1#1: using the "epoll" event method

2024/08/14 07:50:01 [notice] 1#1: nginx/1.27.0

2024/08/14 07:50:01 [notice] 1#1: built by gcc 12.2.0 (Debian 12.2.0-14)

2024/08/14 07:50:01 [notice] 1#1: OS: Linux 5.14.0-427.13.1.el9_4.x86_64

2024/08/14 07:50:01 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1073741816:1073741816

2024/08/14 07:50:01 [notice] 1#1: start worker processes

2024/08/14 07:50:01 [notice] 1#1: start worker process 29

2024/08/14 07:50:01 [notice] 1#1: start worker process 30

172.17.0.1 - - [14/Aug/2024:07:50:20 +0000] "GET / HTTP/1.1" 200 615 "-"

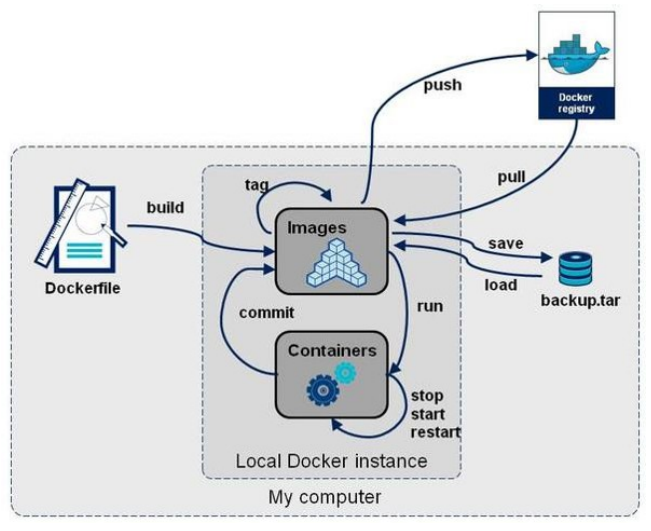

"curl/7.76.1" "-"4 鏡像構建

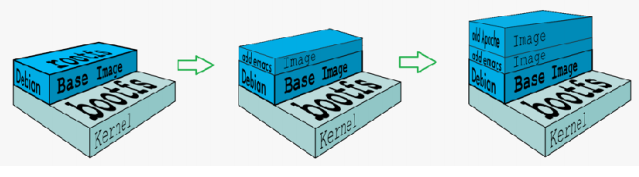

4.1 docker鏡像結構

- 共享宿主機的kernel

- base鏡像提供的是最小的Linux發行版

- 同一docker主機支持運行多種Linux發行版

- 采用分層結構的最大好處是:共享資源

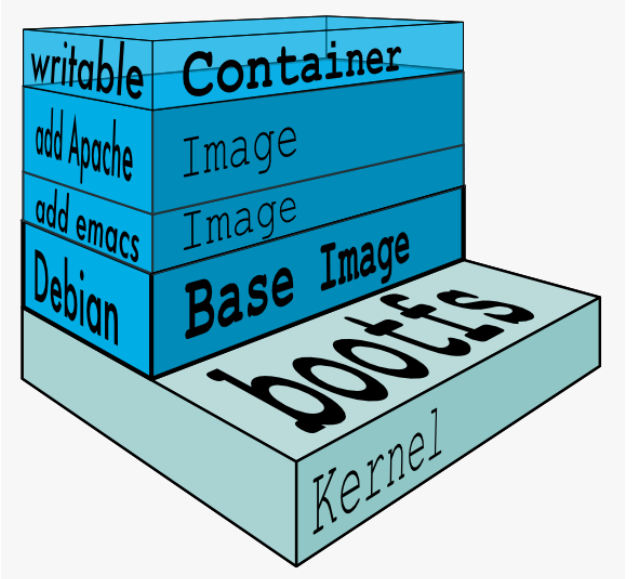

4.2?鏡像運行的基本原理

- Copy-on-Write 可寫容器層

- 容器層以下所有鏡像層都是只讀的

- docker從上往下依次查找文件

- 容器層保存鏡像變化的部分,并不會對鏡像本身進行任何修改

- 一個鏡像最多127層
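The top-down lookup and the copy-on-write behaviour in the list above can be imitated with plain directories. This is only a simulation under stated assumptions: each directory stands in for one layer, and `lookup_in_layers` is a hypothetical helper, not how the overlay2 driver is implemented (for example, it ignores deleted-file markers).

```shell
# Print the path of the first layer that contains the requested file.
# Layers are searched in the order given, so list the top layer first.
# $1: file path relative to the layer root; remaining arguments: layer directories
lookup_in_layers() {
  file="$1"
  shift
  for layer in "$@"; do
    if [ -e "$layer/$file" ]; then
      printf '%s\n' "$layer/$file"
      return 0
    fi
  done
  return 1
}

# Usage with three stand-in layers (container layer on top):
#   mkdir -p /tmp/demo/container /tmp/demo/image2 /tmp/demo/image1
#   echo base > /tmp/demo/image1/index.html
#   lookup_in_layers index.html /tmp/demo/container /tmp/demo/image2 /tmp/demo/image1
#   echo edited > /tmp/demo/container/index.html   # the "copy-on-write" copy
#   lookup_in_layers index.html /tmp/demo/container /tmp/demo/image2 /tmp/demo/image1
```

After the edited copy is written to the top directory, the lookup returns that copy while the file in the lower directory stays unchanged, which mirrors why the image itself is never modified.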

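4.3 How images are obtained

- Base images are usually provided by the software vendor

- Enterprise images can be generated from an official image plus a Dockerfile

- There are two ways to get an image onto the system:

- docker pull IMAGE_ADDRESS

- docker load -i LOCAL_IMAGE_ARCHIVE

4.4 Building images

4.4.1 Build instructions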

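| Instruction | Description |
|---|---|
| FROM | Specifies the base image, e.g. FROM busybox:version |
| COPY | Copies files, e.g. COPY file /file or COPY ["file","/"] |
| MAINTAINER | Author information such as an email address, e.g. MAINTAINER user@example.com. Recent Docker versions use LABEL KEY="VALUE" instead |
| ADD | Similar to COPY, but also accepts a tar archive or a URL, e.g. ADD test.tar /mnt or ADD http://ip/test.tar /mnt |
| ENV | Sets an environment variable, e.g. ENV FILENAME test |
| EXPOSE | Declares the port the container listens on, e.g. EXPOSE 80 |
| VOLUME | Declares a data volume, usually a mount point for data, e.g. VOLUME ["/var/www/html"] |
| WORKDIR | Changes the working directory, e.g. WORKDIR /mnt |
| RUN | Runs a command while the image is being built, e.g. RUN touch file |
| CMD | Default action run when the container starts; it can be overridden. The shell form, e.g. CMD echo $FILENAME, is run through a shell. The exec form, e.g. CMD ["/bin/sh","-c","echo $FILENAME"], is executed directly and Docker adds no shell of its own |
| ENTRYPOINT | Similar to CMD in function and usage, but arguments given to docker run do not override it; only the --entrypoint option replaces it |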
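The difference between CMD and ENTRYPOINT in the table is easiest to see with a two-instruction image. The sketch below writes such a Dockerfile; the helper `write_entrypoint_demo`, the directory name and the image tag `entrypoint-demo:v1` are illustrative assumptions.

```shell
# Write a small demo Dockerfile into the given directory.
# $1: build directory (created if missing)
write_entrypoint_demo() {
  mkdir -p "$1" || return 1
  cat > "$1/Dockerfile" <<'EOF'
FROM busybox:latest
# ENTRYPOINT stays fixed; arguments given to "docker run" replace only CMD.
ENTRYPOINT ["echo"]
CMD ["hello"]
EOF
}

# Usage (requires Docker):
#   write_entrypoint_demo ./entrypoint-demo
#   docker build -t entrypoint-demo:v1 ./entrypoint-demo
#   docker run --rm entrypoint-demo:v1                    # runs: echo hello
#   docker run --rm entrypoint-demo:v1 world              # runs: echo world
#   docker run --rm --entrypoint ls entrypoint-demo:v1 /  # runs: ls /
```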

Instruction examples and usage

FROM, COPY and MAINTAINER

Load the base image for the build:

docker load -i busybox-latest.tar.gz

[root@docker packages]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

busybox latest 65ad0d468eb1 2 years ago 4.26MB

timinglee/game2048 latest 19299002fdbe 8 years ago 55.5MB

Create a build directory:

mkdir ~/docker

cd ~/docker/

Edit the build file:

touch rin_file

vim rin_file

# base image to build from

FROM busybox:latest

# author information

MAINTAINER rin@rin.com

# copy a file from the build context into the image; rin_file must be in the current directory

COPY rin_file /

Build the image:

docker build -t example:v1 -f rin_file .

Check the build result:

[root@docker docker]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

example v1 0f34c76c5f1c 9 minutes ago 4.26MB

busybox latest 65ad0d468eb1 2 years ago 4.26MB

timinglee/game2048 latest 19299002fdbe 8 years ago 55.5MB

Run a container to check that the instructions took effect:

[root@docker docker]# docker run -it --rm example:v1 sh

/ # ls

bin etc lib proc root tmp var

dev home lib64 rin_file sys usr

/ # cat rin_file

FROM busybox:latest

MAINTAINER rin@rin.com

COPY rin_file /

/ # exit

4.4.2 Image build example

The software repositories configured in the centos:7 image no longer work, so build a new CentOS 7 image with a usable repository.

Load the original image:

docker load -i centos-7.tar.gz

Create a build directory and change into it:

mkdir -p /docker/centos7-test

cd /docker/centos7-test

Write the replacement repo file:

vim rhel7.repo

[base]

name = rhel7

baseurl = https://mirrors.aliyun.com/centos/7/os/x86_64/

gpgcheck = 0

Write the Dockerfile:

vim Dockerfile

FROM centos:7

RUN rm -f /etc/yum.repos.d/*.repo

COPY rhel7.repo /etc/yum.repos.d/

Build the CentOS 7 image with the working repository:

docker build -t centos7-repo:v1 .

Test:

Start a container and try installing a package:

docker run -it --rm centos7-repo:v1 bash

[root@f30a4e3588a0 /]# yum install gcc

Loaded plugins: fastestmirror, ovl

Determining fastest mirrors

base | 3.6 kB 00:00:00

(1/2): base/group_gz | 153 kB 00:00:06

(2/2): base/primary_db | 6.1 MB 00:00:11

Resolving Dependencies

--> Running transaction check

---> Package gcc.x86_64 0:4.8.5-44.el7 will be installed

--> Processing Dependency: libgomp = 4.8.5-44.el7 for package: gcc-4.8.5-44.el7.x86_64

--> Processing Dependency: cpp = 4.8.5-44.el7 for package: gcc-4.8.5-44.el7.x86_64

--> Processing Dependency: glibc-devel >= 2.2.90-12 for package: gcc-4.8.5-44.el7.x86_64

--> Processing Dependency: libmpfr.so.4()(64bit) for package: gcc-4.8.5-44.el7.x86_64

--> Processing Dependency: libmpc.so.3()(64bit) for package: gcc-4.8.5-44.el7.x86_64

--> Processing Dependency: libgomp.so.1()(64bit) for package: gcc-4.8.5-44.el7.x86_64

--> Running transaction check

---> Package cpp.x86_64 0:4.8.5-44.el7 will be installed

---> Package glibc-devel.x86_64 0:2.17-317.el7 will be installed

--> Processing Dependency: glibc-headers = 2.17-317.el7 for package: glibc-devel-2.17-317.el7.x86_64

--> Processing Dependency: glibc-headers for package: glibc-devel-2.17-317.el7.x86_64

---> Package libgomp.x86_64 0:4.8.5-44.el7 will be installed

---> Package libmpc.x86_64 0:1.0.1-3.el7 will be installed

---> Package mpfr.x86_64 0:3.1.1-4.el7 will be installed

--> Running transaction check

---> Package glibc-headers.x86_64 0:2.17-317.el7 will be installed

--> Processing Dependency: kernel-headers >= 2.2.1 for package: glibc-headers-2.17-317.el7.x86_64

--> Processing Dependency: kernel-headers for package: glibc-headers-2.17-317.el7.x86_64

--> Running transaction check

---> Package kernel-headers.x86_64 0:3.10.0-1160.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

============================================================================================================================

Package Arch Version Repository Size

============================================================================================================================

Installing:

gcc x86_64 4.8.5-44.el7 base 16 M

Installing for dependencies:

cpp x86_64 4.8.5-44.el7 base 5.9 M

glibc-devel x86_64 2.17-317.el7 base 1.1 M

glibc-headers x86_64 2.17-317.el7 base 690 k

kernel-headers x86_64 3.10.0-1160.el7 base 9.0 M

libgomp x86_64 4.8.5-44.el7 base 159 k

libmpc x86_64 1.0.1-3.el7 base 51 k

mpfr x86_64 3.1.1-4.el7 base 203 k

Transaction Summary

============================================================================================================================

Install 1 Package (+7 Dependent packages)

Total download size: 33 M

Installed size: 60 M

Is this ok [y/d/N]: n

4.5 Image optimization

- Choose the smallest suitable base image

- Reduce the number of image layers

- Clean up intermediate build artifacts

4.5.2 Image optimization examples

[root@server1 docker]# vim Dockerfile

FROM centos:7 as build

ADD nginx-1.23.3.tar.gz /mnt

WORKDIR /mnt/nginx-1.23.3

RUN yum install -y gcc make pcre-devel openssl-devel && sed -i 's/CFLAGS="$CFLAGS -g"/#CFLAGS="$CFLAGS -g"/g' auto/cc/gcc && ./configure --with-http_ssl_module --with-http_stub_status_module && make && make install && cd .. && rm -fr nginx-1.23.3 && yum clean all

EXPOSE 80

VOLUME ["/usr/local/nginx/html"]

CMD ["/usr/local/nginx/sbin/nginx", "-g", "daemon off;"]

[root@server1 docker]# docker build -t webserver:v2 .

[root@server1 docker]# docker images webserver

REPOSITORY TAG IMAGE ID CREATED SIZE

webserver v2 caf0f80f2332 4 seconds ago 317MB

webserver v1 bfd6774cc216 About an hour ago 494MB

[root@server1 docker]# vim Dockerfile

FROM centos:7 as build

ADD nginx-1.23.3.tar.gz /mnt

WORKDIR /mnt/nginx-1.23.3

RUN yum install -y gcc make pcre-devel openssl-devel && sed -i 's/CFLAGS="$CFLAGS -g"/#CFLAGS="$CFLAGS -g"/g' auto/cc/gcc && ./configure --with-http_ssl_module --with-http_stub_status_module && make && make install && cd .. && rm -fr nginx-1.23.3 && yum clean all

FROM centos:7

COPY --from=build /usr/local/nginx /usr/local/nginx

EXPOSE 80

VOLUME ["/usr/local/nginx/html"]

CMD ["/usr/local/nginx/sbin/nginx", "-g", "daemon off;"]

[root@server1 docker]# docker build -t webserver:v3 .

[root@server1 docker]# docker images webserver

REPOSITORY TAG IMAGE ID CREATED SIZE

webserver v3 1ac964f2cefe 29 seconds ago 205MB

webserver v2 caf0f80f2332 3 minutes ago 317MB

webserver v1 bfd6774cc216 About an hour ago 494MB

docker pull gcr.io/distroless/base

[root@server1 ~]# mkdir new

[root@server1 ~]# cd new/

[root@server1 new]# vim Dockerfile

FROM nginx:latest as base

# https://en.wikipedia.org/wiki/List_of_tz_database_time_zones

ARG TIME_ZONE

RUN mkdir -p /opt/var/cache/nginx && \

cp -a --parents /usr/lib/nginx /opt && \

cp -a --parents /usr/share/nginx /opt && \

cp -a --parents /var/log/nginx /opt && \

cp -aL --parents /var/run /opt && \

cp -a --parents /etc/nginx /opt && \

cp -a --parents /etc/passwd /opt && \

cp -a --parents /etc/group /opt && \

cp -a --parents /usr/sbin/nginx /opt && \

cp -a --parents /usr/sbin/nginx-debug /opt && \

cp -a --parents /lib/x86_64-linux-gnu/ld-* /opt && \

cp -a --parents /usr/lib/x86_64-linux-gnu/libpcre* /opt && \

cp -a --parents /lib/x86_64-linux-gnu/libz.so.* /opt && \

cp -a --parents /lib/x86_64-linux-gnu/libc* /opt && \

cp -a --parents /lib/x86_64-linux-gnu/libdl* /opt && \

cp -a --parents /lib/x86_64-linux-gnu/libpthread* /opt && \

cp -a --parents /lib/x86_64-linux-gnu/libcrypt* /opt && \

cp -a --parents /usr/lib/x86_64-linux-gnu/libssl.so.* /opt && \

cp -a --parents /usr/lib/x86_64-linux-gnu/libcrypto.so.* /opt && \

cp /usr/share/zoneinfo/${TIME_ZONE:-ROC} /opt/etc/localtime

FROM gcr.io/distroless/base-debian11

COPY --from=base /opt /

EXPOSE 80 443

ENTRYPOINT ["nginx", "-g", "daemon off;"]

[root@server1 new]# docker build -t webserver:v4 .

[root@server1 new]# docker images webserver

REPOSITORY TAG IMAGE ID CREATED SIZE

webserver v4 c0c4e1d49f3d 4 seconds ago 34MB

webserver v3 1ac964f2cefe 12 minutes ago 205MB

webserver v2 caf0f80f2332 15 minutes ago 317MB

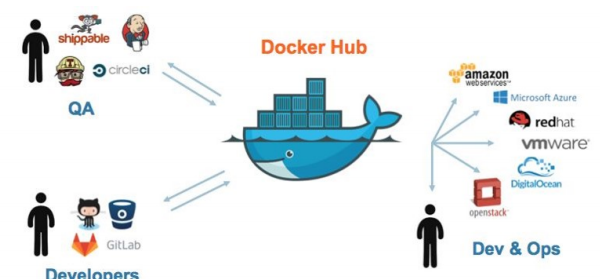

webserver v1 bfd6774cc216 About an hour ago 494MB5 Docker 鏡像倉庫的管理

5.1什么是docker倉庫

- 公共倉庫,如 Docker Hub,任何人都可以訪問和使用其中的鏡像。許多常用的軟件和應用都有在

- Docker Hub 上提供的鏡像,方便用戶直接獲取和使用。

- 例如,您想要部署一個 Nginx 服務器,就可以從 Docker Hub 上拉取 Nginx 的鏡像。

- 私有倉庫則是由組織或個人自己搭建和管理的,用于存儲內部使用的、不希望公開的鏡像。

- 比如,一家企業為其特定的業務應用創建了定制化的鏡像,并將其存儲在自己的私有倉庫中, 以保證安全性和控制訪問權限。

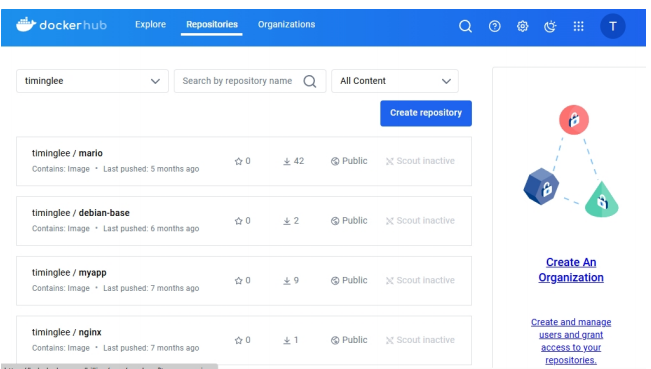

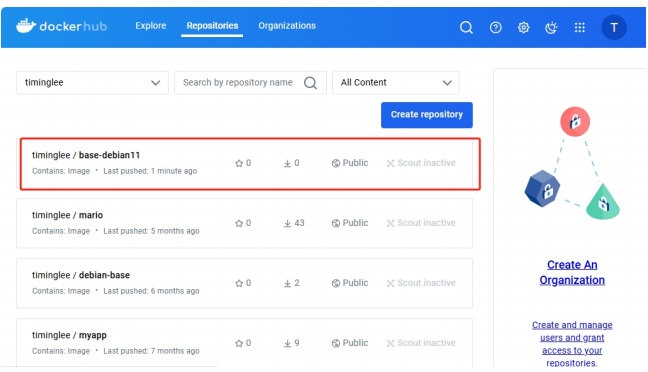

5.2 docker hub

- 例如,您可以輕松找到 Ubuntu、CentOS 等操作系統的鏡像,以及 MySQL、Redis 等數據庫 的鏡像。

5.2.1?docker hub的使用方法

# Log in to the official registry

[root@docker ~]# docker login

Log in with your Docker ID or email address to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com/ to create one.

You can log in with your password or a Personal Access Token (PAT). Using a limited-scope PAT grants better security and is required for organizations using SSO. Learn more at https://docs.docker.com/go/access-tokens/

Username: timinglee

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credential-stores

Login Succeeded

# Where the login credentials are stored

[root@docker ~]# cd .docker/

[root@docker .docker]# ls

config.json

[root@docker .docker]# cat config.json

{

"auths": {

"https://index.docker.io/v1/": {

"auth": "dGltaW5nbGVlOjY3NTE1MTVtaW5nemxu"

}

}

}
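The warning in the login output is worth taking literally: the `auth` value in config.json is only the Base64 encoding of `username:password`. A minimal sketch that shows this with a made-up credential follows; `decode_docker_auth` is a hypothetical helper, and `base64 -d` assumes the GNU coreutils version of the tool.

```shell
# Decode an "auth" value as stored in ~/.docker/config.json.
# $1: the Base64 string; prints the decoded "username:password" pair.
decode_docker_auth() {
  printf '%s' "$1" | base64 -d
}

# Usage with a made-up credential:
#   encoded=$(printf 'user:secret' | base64)
#   decode_docker_auth "$encoded"
```

Anyone who can read the file can recover the password this way, which is why a credential helper or an access token is the safer choice on shared machines.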

[root@docker ~]# docker tag gcr.io/distroless/base-debian11:latest timinglee/base-debian11:latest

[root@docker ~]# docker push timinglee/base-debian11:latest

The push refers to repository [docker.io/timinglee/base-debian11]

6835249f577a: Pushed

24aacbf97031: Pushed

8451c71f8c1e: Pushed

2388d21e8e2b: Pushed

c048279a7d9f: Pushed

1a73b54f556b: Pushed

2a92d6ac9e4f: Pushed

bbb6cacb8c82: Pushed

ac805962e479: Pushed

af5aa97ebe6c: Pushed

4d049f83d9cf: Pushed

9ed498e122b2: Pushed

577c8ee06f39: Pushed

5342a2647e87: Pushed

latest: digest: sha256:f8179c20f1f2b1168665003412197549bd4faab5ccc1b140c666f9b8aa958042 size: 3234

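5.3 How a Docker registry works

5.3.1 How pull works

- Pulling an image involves the following steps:

- The Docker client sends the pull request to the index and authenticates with it

- The index returns an authentication token and the image location to the Docker client

- The Docker client connects to the registry at the location given by the index and presents the token

- The registry asks the index to verify the client's token

- The index confirms that the token is valid

- The registry sends the requested image to the client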
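The token exchange in the steps above can be tried by hand with curl. This is a rough sketch under several assumptions: it targets Docker Hub's public endpoints (`auth.docker.io` as the token service playing the role of the index, `registry-1.docker.io` as the registry), the helper names are hypothetical, and the `sed` extraction assumes the token response arrives as single-line JSON.

```shell
# Build the token URL for pulling one Docker Hub repository.
# $1: repository, e.g. library/busybox
hub_token_url() {
  printf 'https://auth.docker.io/token?service=registry.docker.io&scope=repository:%s:pull\n' "$1"
}

# Fetch a pull token, then present it to the registry to list the tags.
# Needs curl and network access.
# $1: repository
hub_list_tags() {
  token=$(curl -fsS "$(hub_token_url "$1")" | sed -n 's/.*"token" *: *"\([^"]*\)".*/\1/p')
  curl -fsS -H "Authorization: Bearer $token" "https://registry-1.docker.io/v2/$1/tags/list"
}

# Usage:
#   hub_list_tags library/busybox
```

A request to the registry without the `Authorization` header is rejected, which corresponds to the registry checking the token before it serves anything.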

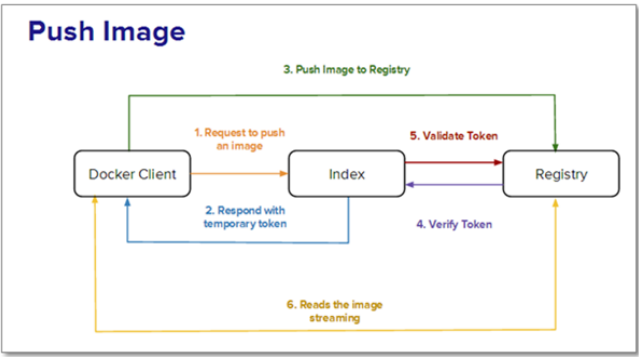

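5.3.2 How push works

- The client sends the upload request to the index and authenticates

- The index issues a token to the client as proof of its identity

- The client connects to the registry with the token provided by the index

- The registry asks the index to verify the token

- The index confirms that the token is valid

- The registry starts receiving the image uploaded by the client

5.4 Setting up a private Docker registry

5.4.1 Why set up a private registry

Docker Hub is convenient, but it has drawbacks:

- It requires an Internet connection and is slow

- Anyone can access it

- For security reasons, companies do not allow their images to be placed on the public Internet

5.4.2 Setting up a simple Registry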

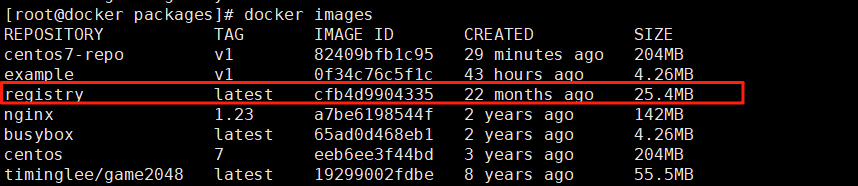

docker load -i registry.tag.gz

docker run -d -p 5000:5000 --restart=always --name registry registry:latest查看進程:

[root@docker packages]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3cb75b901caa registry:latest "/entrypoint.sh /etc…" 39 seconds ago Up 38 seconds 0.0.0.0:5000->5000/tcp, [::]:5000->5000/tcp registry5.4.3上傳鏡像到倉庫:

5.4.3.1免密上傳:

vim /etc/docker/daemon.json{

"insecure-registries" : ["http://172.25.254.10:5000"]

}重啟docker:

?

systemctl restart docker上傳鏡像:

給要傳的鏡像打標簽:

docker tag busybox:latest 172.25.254.10:5000/busybox:latest上傳:

docker push 172.25.254.10:5000/busybox:latest查看上傳好的鏡像:

[root@docker packages]# curl 172.25.254.10:5000/v2/_catalog

{"repositories":["busybox"]}測試:

將鏡像目錄的busybox刪除:

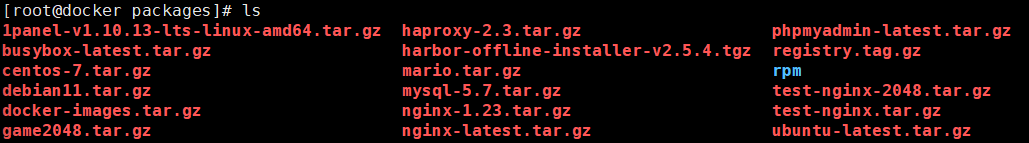

docker rmi busybox:latest[root@docker packages]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos7-repo v1 82409bfb1c95 43 minutes ago 204MB

example v1 0f34c76c5f1c 43 hours ago 4.26MB

registry latest cfb4d9904335 22 months ago 25.4MB

nginx 1.23 a7be6198544f 2 years ago 142MB

172.25.254.100:5000/busybox latest 65ad0d468eb1 2 years ago 4.26MB

172.25.254.10:5000/busybox latest 65ad0d468eb1 2 years ago 4.26MB

centos 7 eeb6ee3f44bd 3 years ago 204MB

timinglee/game2048 latest 19299002fdbe 8 years ago 55.5MB

Pull it again from the local registry:

docker pull 172.25.254.10:5000/busybox:latest

[root@docker packages]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

centos7-repo v1 82409bfb1c95 46 minutes ago 204MB

example v1 0f34c76c5f1c 43 hours ago 4.26MB

registry latest cfb4d9904335 22 months ago 25.4MB

nginx 1.23 a7be6198544f 2 years ago 142MB

172.25.254.10:5000/busybox latest 65ad0d468eb1 2 years ago 4.26MB

centos 7 eeb6ee3f44bd 3 years ago 204MB

timinglee/game2048 latest 19299002fdbe 8 years ago 55.5MB

5.4.3.2 Encrypted transfer (TLS)

Create a directory for the certificates:

mkdir -p /root/certs

cd /root

openssl req -newkey rsa:4096 \
-nodes -sha256 -keyout certs/rin.key \
-addext "subjectAltName = DNS:www.rin.com" \
-x509 -days 365 -out certs/rin.crt

Country Name (2 letter code) [XX]:CN

State or Province Name (full name) []:guangxi

Locality Name (eg, city) [Default City]:nanning

Organization Name (eg, company) [Default Company Ltd]:rin

Organizational Unit Name (eg, section) []:docker

Common Name (eg, your name or your server's hostname) []:www.rin.com # the other fields can be anything, but this one must match the DNS name given above

Email Address []:rin@rin.com
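The interactive prompts can be skipped by passing the subject on the command line. The sketch below is one way to do that, assuming an OpenSSL version that supports `-addext` (the same requirement as the command above); the helper name `make_registry_cert` and its argument order are illustrative assumptions.

```shell
# Generate a self-signed certificate whose CN and subjectAltName both
# name the registry host, without any interactive prompts.
# $1: output directory, $2: file base name, $3: host name, $4: RSA key size (default 4096)
make_registry_cert() {
  cert_dir="$1"
  base="$2"
  host="$3"
  bits="${4:-4096}"
  mkdir -p "$cert_dir" || return 1
  openssl req -newkey "rsa:$bits" -nodes -sha256 \
    -keyout "$cert_dir/$base.key" \
    -subj "/CN=$host" \
    -addext "subjectAltName = DNS:$host" \
    -x509 -days 365 -out "$cert_dir/$base.crt"
}

# Usage:
#   make_registry_cert /root/certs rin www.rin.com
#   openssl x509 -in /root/certs/rin.crt -noout -text | grep -A1 'Subject Alternative Name'
```

The second usage line prints the alternative name stored in the certificate, which is a quick check that it matches the host name clients will use.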

docker run -d -p 443:443 --restart=always --name registry \
-v /opt/registry:/var/lib/registry \
-v /root/certs:/certs \
-e REGISTRY_HTTP_ADDR=0.0.0.0:443 \
-e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/rin.crt \
-e REGISTRY_HTTP_TLS_KEY=/certs/rin.key registry

Copy the certificate into Docker's trusted certificate directory:

# 1. Create the certificate directory (replace www.rin.com with your own domain)

sudo mkdir -p /etc/docker/certs.d/www.rin.com

# 2. Copy the certificate into that directory (assuming it is at /root/certs/rin.crt on this host)

cp /root/certs/rin.crt /etc/docker/certs.d/www.rin.com/ca.crt

# 3. Restart the Docker service to apply the change

sudo systemctl restart docker

Tag the busybox image and push it:

# Tag the busybox image (assuming busybox is the image you want to push)

docker tag busybox:latest www.rin.com/busybox:latest

# Push it

docker push www.rin.com/busybox:latest

Check the result of the push:

[root@docker ~]# curl -k https://www.rin.com/v2/_catalog

{"repositories":["busybox"]}

5.4.4 Adding login authentication to the registry

dnf install httpd-tools -y

mkdir auth

# -B forces bcrypt, the most secure hashing method available; the default is MD5

htpasswd -Bc auth/htpasswd rin

[root@docker ~]# htpasswd -Bc /root/auth/htpasswd rin

New password:

Re-type new password:

Adding password for user rin

docker run -d -p 443:443 \
--restart=always \
--name registry \
-v /opt/registry:/var/lib/registry \
-v /root/certs:/certs \
-e REGISTRY_HTTP_ADDR=0.0.0.0:443 \
-e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/rin.crt \
-e REGISTRY_HTTP_TLS_KEY=/certs/rin.key \
-v /root/auth:/auth \
-e "REGISTRY_AUTH=htpasswd" \
-e "REGISTRY_AUTH_HTPASSWD_REALM=Registry Realm" \
-e REGISTRY_AUTH_HTPASSWD_PATH=/auth/htpasswd registry

Test:

[root@docker ~]# curl -k https://www.rin.com/v2/_catalog -u rin:123

{"repositories":["busybox"]}

Login test:

[root@docker ~]# docker login www.rin.com

Username: rin

Password:

WARNING! Your credentials are stored unencrypted in '/root/.docker/config.json'.

Configure a credential helper to remove this warning. See

https://docs.docker.com/go/credential-store/

Login Succeeded

5.5 Building an enterprise-grade private registry

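Harbor is an open-source enterprise registry. Its main features are:

- Role-based access control (RBAC): different permissions can be assigned to different users and groups, which improves security and management flexibility.

- Image replication: images can be replicated between Harbor instances, which makes it easy to distribute them across data centers or environments.

- Graphical user interface (UI): an intuitive web interface for managing repositories, projects, users and more.

- Audit logging: operations on the registry are recorded, which helps with tracing and reviewing activity.

- Garbage collection: images that are no longer used can be cleaned up to save storage space.

5.5.1 Deploying Harbor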

tar xzf harbor-offline-installer-v2.5.4.tgz

Create a directory for the certificates:

mkdir -p /data/certs

cd /data

openssl req -newkey rsa:4096 \
-nodes -sha256 -keyout certs/rin.key \
-addext "subjectAltName = DNS:www.rin.com" \
-x509 -days 365 -out certs/rin.crt

Country Name (2 letter code) [XX]:CN

State or Province Name (full name) []:guangxi

Locality Name (eg, city) [Default City]:nanning

Organization Name (eg, company) [Default Company Ltd]:rin

Organizational Unit Name (eg, section) []:docker

Common Name (eg, your name or your server's hostname) []:www.rin.com # the other fields can be anything, but this one must match the DNS name given above

Email Address []:rin@rin.com

編輯文件:

?

cd harbor/

cp harbor.yml.tmpl harbor.yml

vim harbor.yml# Configuration file of Harbor# The IP address or hostname to access admin UI and registry service.

# DO NOT use localhost or 127.0.0.1, because Harbor needs to be accessed by external clients.

hostname: www.rin.com# https related config

https:# https port for harbor, default is 443port: 443# The path of cert and key files for nginxcertificate: /data/certs/rin.crtprivate_key: /data/certs/rin.key# The initial password of Harbor admin

# It only works in first time to install harbor

# Remember Change the admin password from UI after launching Harbor.

harbor_admin_password: rin# The default data volume

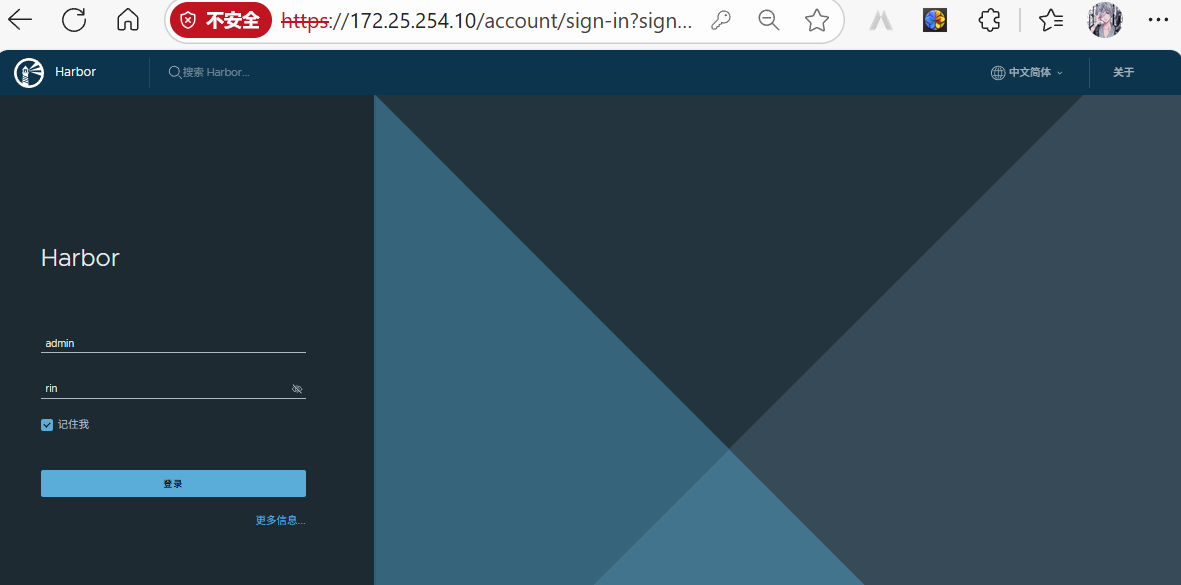

data_volume: /data執行安裝腳本:

執行前記得將同名鏡像容器刪除:

?

docker rm -f registry./install.sh --with-chartmuseumdocker compose stop

docker compose up -d[root@docker harbor]# docker compose stop

WARN[0000] /mnt/packages/harbor/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

[+] Stopping 10/10

✔ Container harbor-jobservice Stopped 0.6s

✔ Container registryctl Stopped 10.2s

✔ Container chartmuseum Stopped 0.6s

✔ Container nginx Stopped 0.5s

✔ Container harbor-portal Stopped 0.3s

✔ Container harbor-core Stopped 3.3s

✔ Container registry Stopped 0.2s

✔ Container redis Stopped 0.5s

✔ Container harbor-db Stopped 0.3s

✔ Container harbor-log Stopped 10.3s

[root@docker harbor]# docker compose up -d

WARN[0000] /mnt/packages/harbor/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

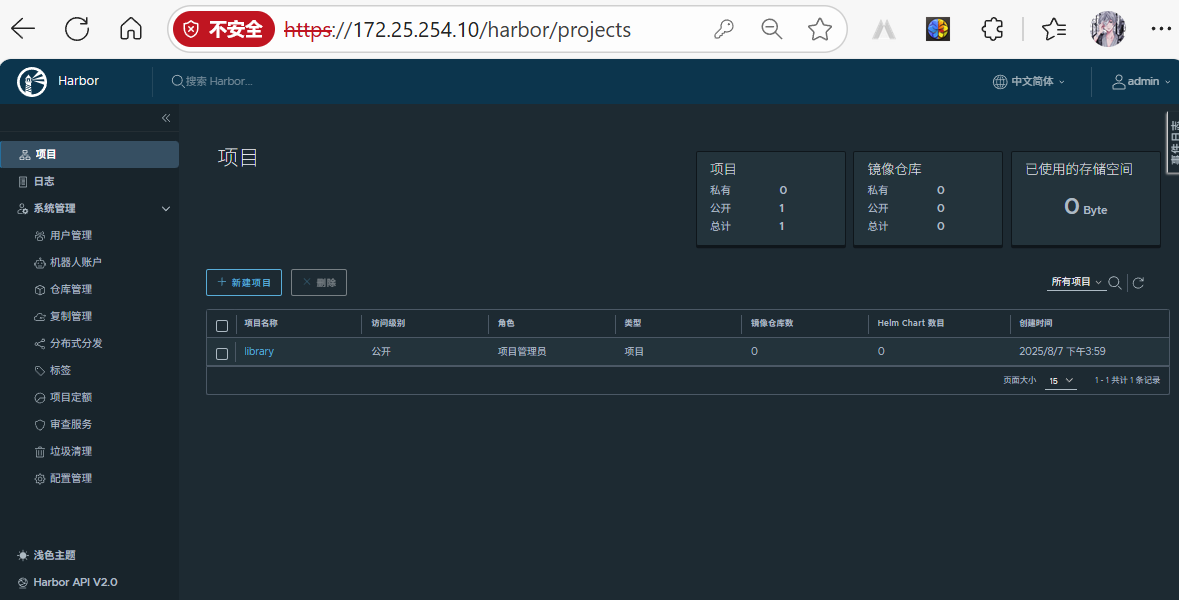

[+] Running 10/10

✔ Container harbor-log Started 0.5s

✔ Container harbor-portal Started 2.6s

✔ Container harbor-db Started 1.1s

✔ Container redis Started 2.8s

✔ Container registry Started 2.8s

✔ Container chartmuseum Started 2.7s

✔ Container registryctl Started 2.7s

✔ Container harbor-core Started 1.0s

✔ Container nginx Started 1.3s

✔ Container harbor-jobservice Started 0.7s

5.5.2 Managing the registry

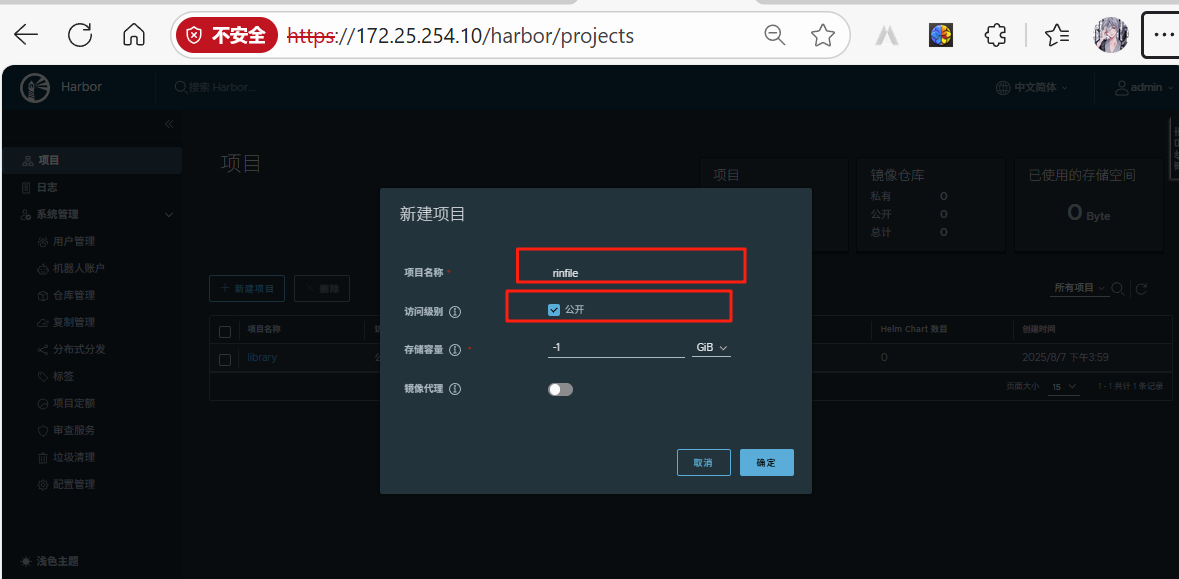

登錄私有倉庫:

建立倉庫項目:

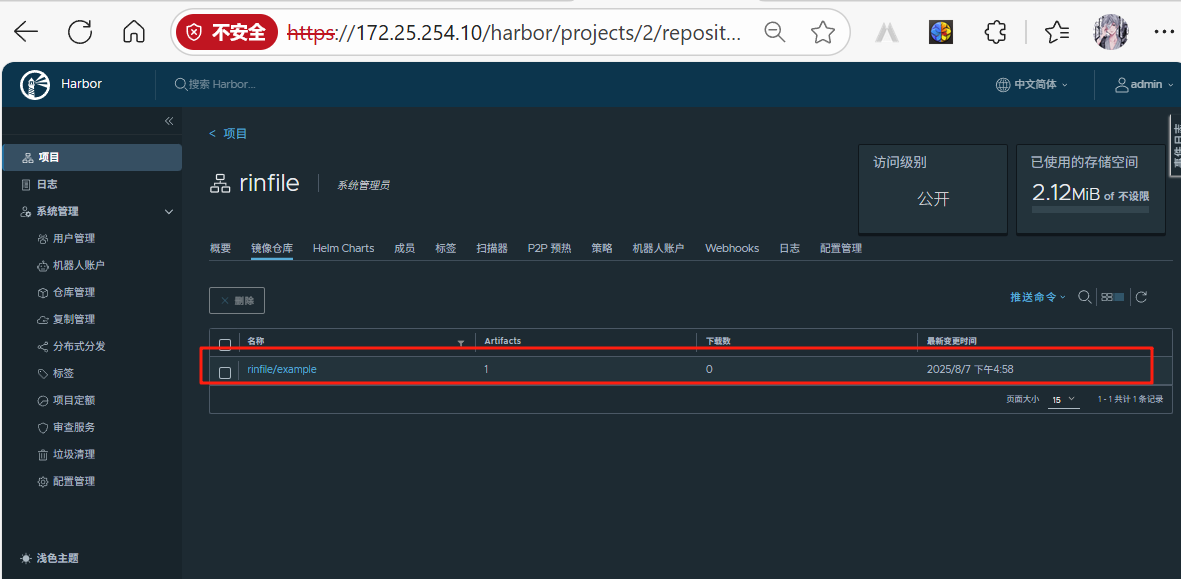

上傳鏡像:

[root@docker harbor]# docker push www.rin.com/rinfile/example:v1

The push refers to repository [www.rin.com/rinfile/example]

bcbeb1265327: Pushed

d51af96cf93e: Pushed

v1: digest: sha256:64743a2cdb84b0a21e7132de9bdc8d592cc3b90a035b93c05cf6941620b43d27 size: 734

?

添加標簽:

docker tag 本地鏡像 倉庫地址/項目名/鏡像名:標簽

docker tag example:v1 www.rin.com/rinfile/example:v1上傳鏡像:

docker push www.rin.com/rinfile/example:v1[root@docker harbor]# docker push www.rin.com/rinfile/example:v1

The push refers to repository [www.rin.com/rinfile/example]

bcbeb1265327: Pushed

d51af96cf93e: Pushed

v1: digest: sha256:64743a2cdb84b0a21e7132de9bdc8d592cc3b90a035b93c05cf6941620b43d27 size: 734查看上傳的鏡像:

6 Docker networking

[root@docker harbor]# docker network ls

NETWORK ID NAME DRIVER SCOPE

2a93d6859680 bridge bridge local

4d81ddd9ed10 host host local

8c8c95f16b68 none null local

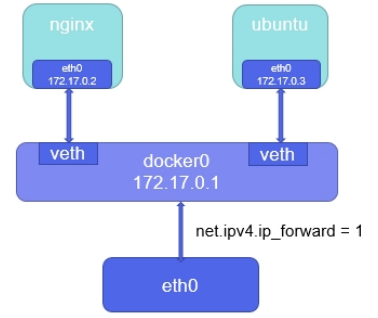

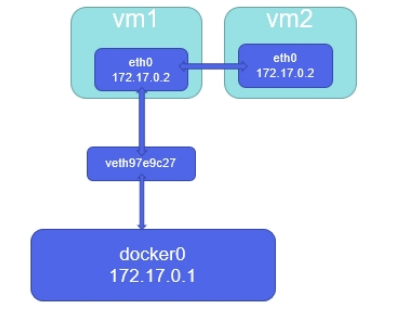

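6.1 Docker's native bridge network

[root@docker mnt]# ip link show type bridge

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:5f:e2:34:6c brd ff:ff:ff:ff:ff:ff

- In bridge mode a container has no public IP address; only the host can reach it directly, and it is invisible to external hosts.

- A container can reach external networks through the host's NAT rules.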

[root@docker mnt]# docker run -d --name web -p 80:80 nginx:1.23

defeba839af1b95bac2a200fd1e06a45e55416be19c7e9ce7e0c8daafa7dd470

[root@docker mnt]# ifconfig

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:5fff:fee2:346c prefixlen 64 scopeid 0x20<link>

ether 02:42:5f:e2:34:6c txqueuelen 0 (Ethernet)

RX packets 21264 bytes 1497364 (1.4 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 27358 bytes 215202237 (205.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.25.254.100 netmask 255.255.255.0 broadcast 172.25.254.255

inet6 fe80::30b2:327e:b13a:31cf prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:ec:fc:d3 txqueuelen 1000 (Ethernet)

RX packets 1867778 bytes 2163432019 (2.0 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 822980 bytes 848551940 (809.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 11819 bytes 1279944 (1.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 11819 bytes 1279944 (1.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

veth022a7c9: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::a013:5fff:fefc:c9e4 prefixlen 64 scopeid 0x20<link>

ether a2:13:5f:fc:c9:e4 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 15 bytes 2007 (1.9 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@docker mnt]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.02425fe2346c no veth022a7c9

6.2 Docker's native host network

[root@docker ~]# docker run -it --name test --network host busybox

/ # ifconfig

docker0 Link encap:Ethernet HWaddr 02:42:5F:E2:34:6C

inet addr:172.17.0.1 Bcast:172.17.255.255 Mask:255.255.0.0

inet6 addr: fe80::42:5fff:fee2:346c/64 Scope:Link

UP BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:21264 errors:0 dropped:0 overruns:0 frame:0

TX packets:27359 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1497364 (1.4 MiB) TX bytes:215202367 (205.2 MiB)

eth0 Link encap:Ethernet HWaddr 00:0C:29:EC:FC:D3

inet addr:172.25.254.100 Bcast:172.25.254.255 Mask:255.255.255.0

inet6 addr: fe80::30b2:327e:b13a:31cf/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1902507 errors:0 dropped:0 overruns:0 frame:0

TX packets:831640 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2202443300 (2.0 GiB) TX bytes:849412124 (810.0 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:11819 errors:0 dropped:0 overruns:0 frame:0

TX packets:11819 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1279944 (1.2 MiB) TX bytes:1279944 (1.2 MiB)

6.3 Docker native network: none

[root@docker ~]# docker run -it --name test --rm --network none busybox

/ # ifconfig

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

6.4 Docker custom networks

- bridge

- overlay

- macvlan

6.4.1 Custom bridge networks

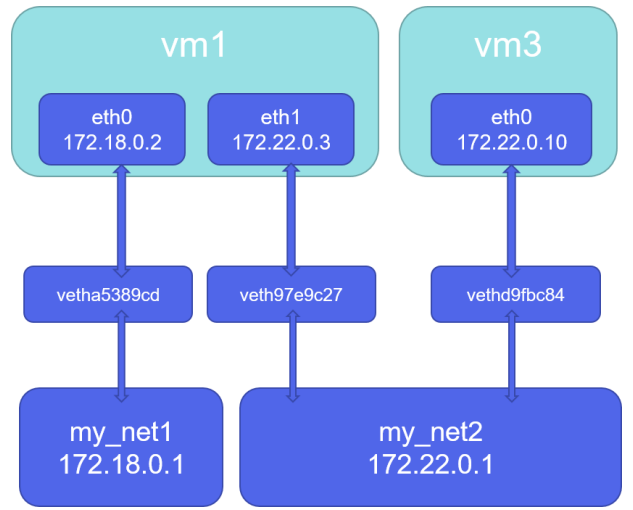

[root@docker ~]# docker network create my_net1

f2aae5ce8ce43e8d1ca80c2324d38483c2512d9fb17b6ba60d05561d6093f4c4

[root@docker ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

2a93d6859680 bridge bridge local

4d81ddd9ed10 host host local

f2aae5ce8ce4 my_net1 bridge local

8c8c95f16b68 none null local

[root@docker ~]# ifconfig

br-f2aae5ce8ce4: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.18.0.1 netmask 255.255.0.0 broadcast 172.18.255.255

ether 02:42:70:57:f2:82 txqueuelen 0 (Ethernet)

RX packets 21264 bytes 1497364 (1.4 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 27359 bytes 215202367 (205.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:5fff:fee2:346c prefixlen 64 scopeid 0x20<link>

ether 02:42:5f:e2:34:6c txqueuelen 0 (Ethernet)

RX packets 21264 bytes 1497364 (1.4 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 27359 bytes 215202367 (205.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@docker ~]# docker network create my_net2 --subnet 192.168.0.0/24 --gateway 192.168.0.100

7e77cd2e44c64ff3121a1f1e0395849453f8d524d24b915672da265615e0e4f9

[root@docker ~]# docker network inspect my_net2

[{"Name": "my_net2","Id": "7e77cd2e44c64ff3121a1f1e0395849453f8d524d24b915672da265615e0e4f9","Created": "2024-08-17T17:05:19.167808342+08:00","Scope": "local","Driver": "bridge","EnableIPv6": false,"IPAM": {"Driver": "default","Options": {},"Config": [{"Subnet": "192.168.0.0/24","Gateway": "192.168.0.100"}]},"Internal": false,"Attachable": false,"Ingress": false,"ConfigFrom": {"Network": ""},"ConfigOnly": false,"Containers": {},"Options": {},"Labels": {}}]

6.4.2 Why use a custom bridge?

[root@docker ~]# docker inspect web1

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"MacAddress": "02:42:ac:11:00:03",

"DriverOpts": null,

"NetworkID": "2a93d6859680b45eae97e5f6232c3b8e070b1ec3d01852b147d2e1385034bce5",

"EndpointID": "4d54b12aeb2d857a6e025ee220741cbb3ef1022848d58057b2aab544bd3a4685",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2", # note the IP address

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"DNSNames": null

[root@docker ~]# docker inspect web2

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"MacAddress": "02:42:ac:11:00:03",

"DriverOpts": null,

"NetworkID": "2a93d6859680b45eae97e5f6232c3b8e070b1ec3d01852b147d2e1385034bce5",

"EndpointID": "4d54b12aeb2d857a6e025ee220741cbb3ef1022848d58057b2aab544bd3a4685",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.3", # note the IP address

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"DNSNames": null

# Stop both containers, then start them again in the opposite order

[root@docker ~]# docker stop web1 web2

web1

web2

[root@docker ~]# docker start web2

web2

[root@docker ~]# docker start web1

web1

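# The two containers' IP addresses are now swapped: on the default bridge, addresses depend on start order

# On a user-defined bridge, Docker's embedded DNS resolves container names, so containers can be reached by name instead of by IP

[root@docker ~]# docker run -d --network my_net1 --name web nginx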

d9ed01850f7aae35eb1ca3e2c73ff2f83d13c255d4f68416a39949ebb8ec699f

[root@docker ~]# docker run -it --network my_net1 --name test busybox

/ # ping web

PING web (172.18.0.2): 56 data bytes

64 bytes from 172.18.0.2: seq=0 ttl=64 time=0.197 ms

64 bytes from 172.18.0.2: seq=1 ttl=64 time=0.096 ms

64 bytes from 172.18.0.2: seq=2 ttl=64 time=0.087 ms

# RHEL 7 uses iptables for network isolation; RHEL 9 uses nftables

[root@docker ~]# nft list ruleset

The output shows the network isolation rules.

6.4.3 How can different custom networks communicate?

[root@docker ~]# docker run -d --name web1 --network my_net1 nginx

[root@docker ~]# docker run -it --name test --network my_net2 busybox

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:C0:A8:00:01

inet addr:192.168.0.1 Bcast:192.168.0.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:36 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:5244 (5.1 KiB) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

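/ # ping 172.18.0.2

PING 172.18.0.2 (172.18.0.2): 56 data bytes

# No reply: a container on my_net2 cannot reach a container on my_net1

# From another terminal on the host, attach the test container to my_net1 as well

[root@docker ~]# docker network connect my_net1 test

# Back in the test container, a second interface, eth1, has been added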

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:C0:A8:00:01

inet addr:192.168.0.1 Bcast:192.168.0.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:45 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:5879 (5.7 KiB) TX bytes:602 (602.0 B)

eth1 Link encap:Ethernet HWaddr 02:42:AC:12:00:03

inet addr:172.18.0.3 Bcast:172.18.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:15 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2016 (1.9 KiB) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:4 errors:0 dropped:0 overruns:0 frame:0

TX packets:4 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

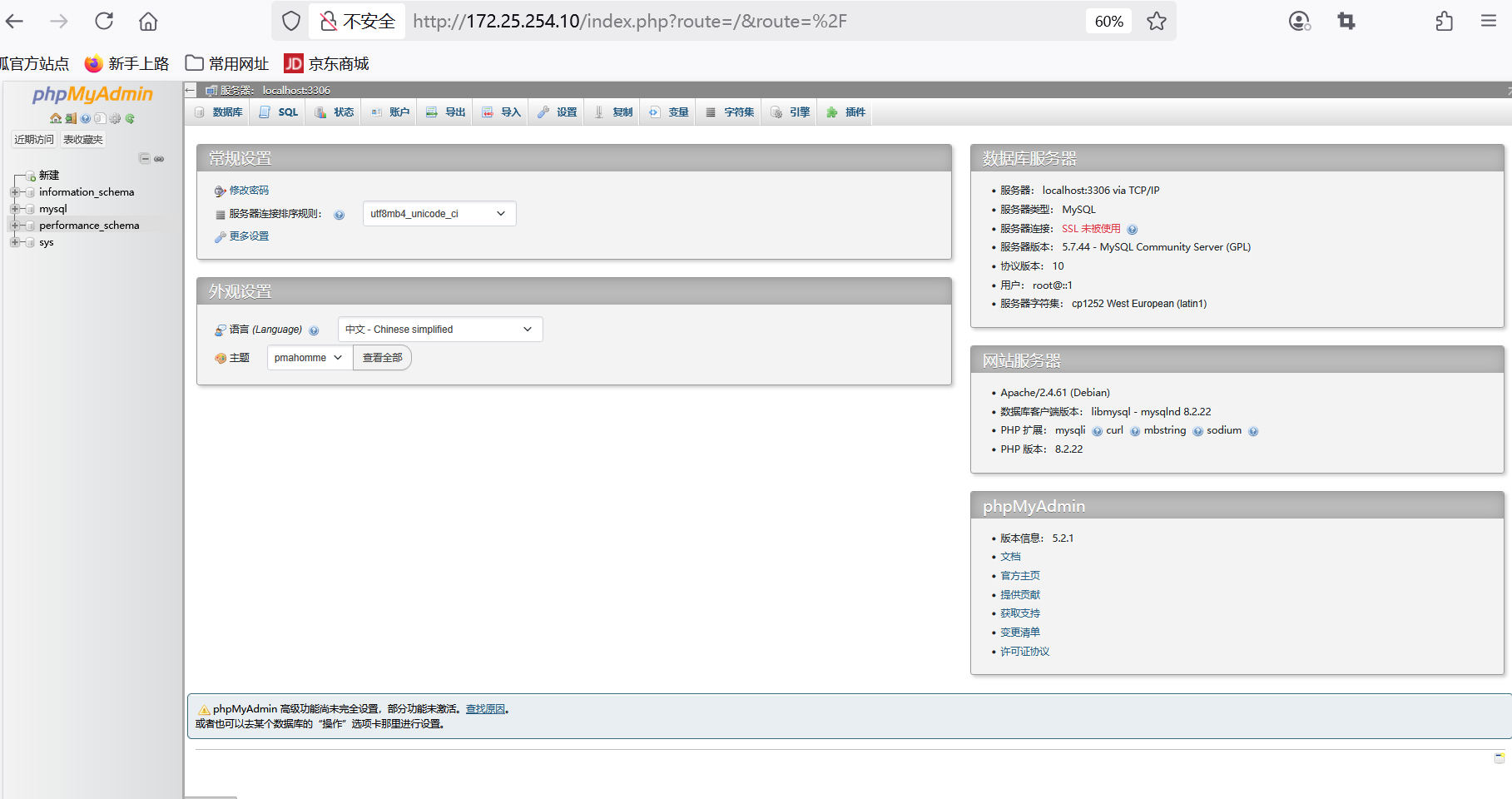

RX bytes:212 (212.0 B) TX bytes:212 (212.0 B)6.4.4 joined網絡示例演示

創建名為?my_net1?的網絡:

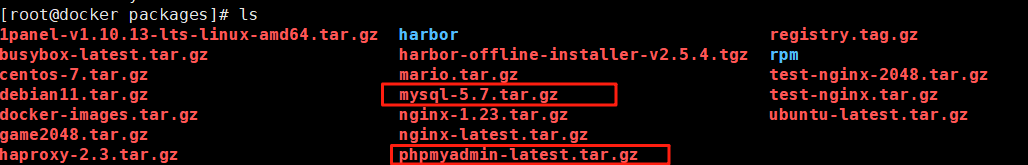

docker network create my_net1將phpmyadmin和mysq鏡像進行加載:

docker load -i phpmyadmin-latest.tar.gz docker load -i mysql-5.7.tar.gz docker run -d --name mysqladmin --network my_net1

-e PMA_ARBITRARY=1 #在web頁面中可以手動輸入數據庫地址和端口

-p 80:80 phpmyadmin:latestdocker run -d --name mysql

-e MYSQL_ROOT_PASSWORD='rin' #設定數據庫密碼

--network container:mysqladmin #把數據庫容器添加到phpmyadmin容器中

mysql:5.7在頁面進行訪問:

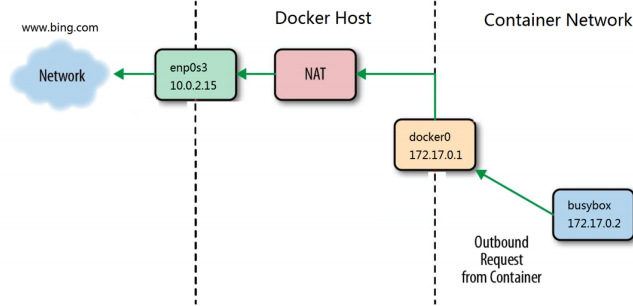
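Because the mysql container was started with --network container:mysqladmin, the two containers should share one network namespace, so phpMyAdmin can reach the database at 127.0.0.1. The following is a minimal sketch of how that could be checked, assuming both containers from this example still exist; the function names network_mode_of and is_joined are hypothetical helpers, not Docker commands.

```shell
# Hypothetical helper: print the network mode recorded for container $1.
# For a joined container the value is expected to start with "container:".
network_mode_of() {
    docker inspect -f '{{.HostConfig.NetworkMode}}' "$1"
}

# Hypothetical helper: succeed when container $1 was started inside
# another container's network namespace.
is_joined() {
    case "$(network_mode_of "$1")" in
        container:*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, `is_joined mysql && echo "mysql shares another container's network stack"` should print the message, while `is_joined mysqladmin` should fail because that container is attached to my_net1 directly.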

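6.5 Container access to and from external networks

6.5.1 Container access to external networks

- On RHEL 7, Docker adds an address masquerading (MASQUERADE) rule through iptables so that containers can reach external networks

- On releases after RHEL 7, the masquerading rule is added through nftables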
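On an nftables-based host, the masquerading rule and the forwarding switch it depends on can be looked up from the shell. This is only a sketch: the function names are hypothetical, both commands are assumed to run as root, and the exact rule text printed by nft varies between Docker versions.

```shell
# Hypothetical helper: list the masquerade rules in the nftables ruleset.
# Prints nothing and returns non-zero when no such rule is present.
show_masquerade_rules() {
    nft list ruleset | grep -i masquerade
}

# Hypothetical helper: succeed when IPv4 forwarding is enabled, which
# container-to-external traffic relies on.
forwarding_enabled() {
    [ "$(sysctl -n net.ipv4.ip_forward)" = "1" ]
}
```

A quick check could be `forwarding_enabled && show_masquerade_rules`.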

[root@docker ~]# iptables -t nat -nL

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE 6 -- 172.17.0.2 172.17.0.2 tcp dpt:80 #

內網訪問外網策略

Chain DOCKER (0 references)

target prot opt source destination

DNAT 6 -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:80

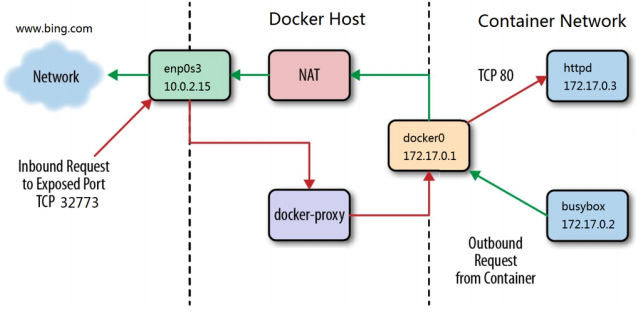

to:172.17.0.2:806.5.2?外網訪問docker容器

# docker-proxy forwards incoming packets to the container

[root@docker ~]# docker run -d --name webserver -p 80:80 nginx

[root@docker ~]# ps ax

133986 ? Sl 0:00 /usr/bin/docker-proxy -proto tcp -host-ip 0.0.0.0 -host-port 80 -container-ip 172.17.0.2 -container-port 80

133995 ? Sl 0:00 /usr/bin/docker-proxy -proto tcp -host-ip :: -host-port 80 -container-ip 172.17.0.2 -container-port 80

134031 ? Sl 0:00 /usr/bin/containerd-shim-runc-v2 -namespace moby -id cae79497a01c0b8c488c7597b43de4a43f361f21a398ff423b4504c0905db143 -address /run/containerd/containerd.sock

134059 ? Ss 0:00 nginx: master process nginx -g daemon off;

134099 ? S 0:00 nginx: worker process

134100 ? S 0:00 nginx: worker process

# A DNAT rule forwards the incoming traffic to the container

[root@docker ~]# iptables -t nat -nL

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE 6 -- 172.17.0.2 172.17.0.2 tcp dpt:80

Chain DOCKER (0 references)

target prot opt source destination

DNAT 6 -- 0.0.0.0/0 0.0.0.0/0 tcp dpt:80

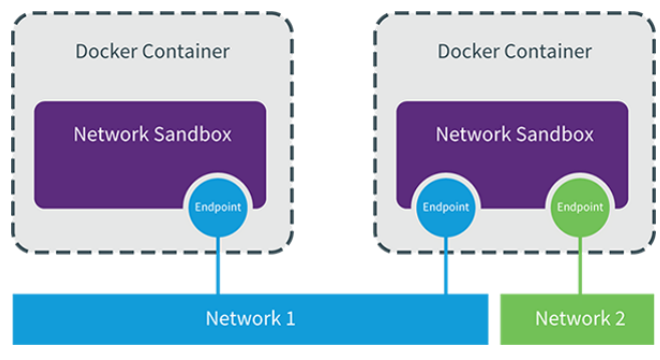

to:172.17.0.2:806.6 docker跨主機網絡

- 跨主機網絡解決方案

- docker原生的overlay和macvlan

- 第三方的flannel、weave、calico

- 眾多網絡方案是如何與docker集成在一起的

- libnetwork docker容器網絡庫

- CNM (Container Network Model)這個模型對容器網絡進行了抽象

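6.6.1 CNM (Container Network Model)

6.6.2 Cross-host communication with macvlan

- macvlan is a NIC virtualization technology provided by the Linux kernel.

- It needs no Linux bridge and uses the physical interface directly, so performance is very good.

- The container's interface connects directly to the host NIC, with no NAT or port mapping. A macvlan network takes exclusive use of the host NIC, but VLAN sub-interfaces make it possible to run several macvlan networks.

- VLANs can divide a physical layer-2 network into 4094 logical networks that are isolated from each other; VLAN IDs range from 1 to 4094.

- macvlan networks are isolated at layer 2, so containers on different macvlan networks cannot communicate with each other.

- macvlan networks can be connected at layer 3 through a gateway.

- Docker itself imposes no restrictions; manage them as you would traditional VLAN networks.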
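The VLAN sub-interface idea mentioned in the list can be sketched as follows. This is an illustration under assumptions, not part of the original walkthrough: the function name create_vlan_macvlan, the network name pattern macvlan<ID>, and the addresses in the usage line are all hypothetical. It relies on Docker treating a parent name that contains a dot (such as eth1.10) as a sub-interface of eth1 and creating it if it is missing.

```shell
# Hypothetical helper: create a macvlan network on a VLAN sub-interface.
# $1 = parent NIC (e.g. eth1), $2 = VLAN ID, $3 = subnet, $4 = gateway
create_vlan_macvlan() {
    parent=$1; vlan=$2; subnet=$3; gateway=$4
    # Reject anything that is not a plain number
    case "$vlan" in
        ''|*[!0-9]*) echo "invalid VLAN ID: $vlan" >&2; return 1 ;;
    esac
    # Valid VLAN IDs are 1 to 4094, as stated in the list above
    if [ "$vlan" -lt 1 ] || [ "$vlan" -gt 4094 ]; then
        echo "invalid VLAN ID: $vlan" >&2
        return 1
    fi
    # parent=eth1.10 selects the 802.1Q sub-interface for VLAN 10 on eth1
    docker network create -d macvlan \
        --subnet "$subnet" --gateway "$gateway" \
        -o parent="${parent}.${vlan}" "macvlan${vlan}"
}
```

A call such as `create_vlan_macvlan eth1 10 192.168.10.0/24 192.168.10.1` would then give VLAN 10 its own macvlan network while leaving eth1 free for further VLANs.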

ip link set eth1 promisc on

ip link set up eth1

ifconfig eth1

Add the macvlan network:

    docker network create \
        -d macvlan \
        --subnet 1.1.1.0/24 \
        --gateway 1.1.1.1 \
        -o parent=eth1 macvlan1

Load the image:

docker load -i busybox-latest.tar.gz

Test:

Host 1:

docker run -it --name busybox --network macvlan1 --ip 1.1.1.100 --rm busybox

Host 2:

docker run -it --name busybox --network macvlan1 --ip 1.1.1.200 --rm busybox

[root@docker packages]# docker run -it --name busybox --network macvlan1 --ip 1.1.1.100 --rm busybox

/ # ip a

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    13: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 66:6e:ba:48:ba:cc brd ff:ff:ff:ff:ff:ff
        inet 1.1.1.100/24 brd 1.1.1.255 scope global eth0
           valid_lft forever preferred_lft forever

/ # ping 1.1.1.200

PING 1.1.1.200 (1.1.1.200): 56 data bytes

64 bytes from 1.1.1.200: seq=0 ttl=64 time=1.086 ms

64 bytes from 1.1.1.200: seq=1 ttl=64 time=0.841 ms

^C

--- 1.1.1.200 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

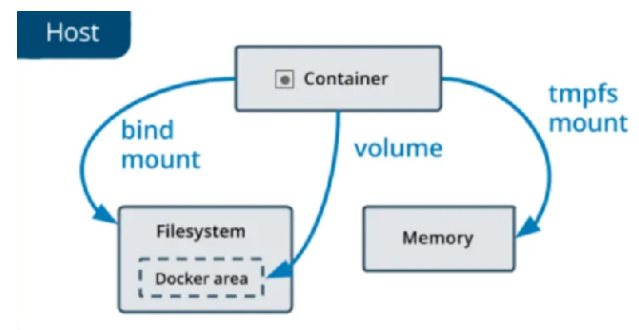

round-trip min/avg/max = 0.841/0.963/1.086 ms7 Docker 數據卷管理及優化

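- Data persistence: data in a volume survives even if the container is deleted or recreated.

- Data sharing: several containers can mount the same volume at once to share and exchange data.

- Independent of the container lifecycle: a volume's lifecycle is separate from the container's and is not affected by starting, stopping, or deleting the container.

7.1 Why use data volumes

- Docker's layered file system

  - Poor performance

  - Same lifecycle as the container

- Docker data volumes

  - Mounted from the host, bypassing the layered file system

  - Same performance as the host disk; data is kept after the container is deleted

  - Limited to local disks; cannot migrate with the container

- Docker provides two kinds of volumes

  - bind mount

  - docker managed volume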

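7.2 Bind mount data volumes

- A bind mount mounts a directory or file from the host into the container.

- It is intuitive, efficient, and easy to understand.

- Use the -v option to specify the paths, in the form <host path>:<container path>.

- If a path given with -v does not exist, it is created automatically at mount time.

In Docker, a bind mount mounts a file or directory from the host directly into the container and is one way to persist data. Unlike Docker-managed volumes, a bind mount depends entirely on the host's file system layout: the container accesses the specified host path directly.

Core characteristics of bind mounts

- Direct mapping: the path in the container is bound directly to the path on the host, and changes on either side are visible on the other immediately (whether or not the container is running).

- Depends on the host path: the host path must be given as an absolute path (or as a relative path, which Docker converts to an absolute one). If the path does not exist, Docker creates it automatically (as a directory).

- Access control: append :ro (read-only) to make the mount read-only; the default is read-write (rw).

- No named management: Docker does not name or track bind mounts; the host path is managed entirely by the user.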
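The same kind of mount can also be written with the more explicit --mount option. The sketch below assumes a busybox image is available locally; run_with_bind is a hypothetical helper name. Unlike -v, --mount does not create a missing host path, so the helper checks for it first.

```shell
# Hypothetical helper: run a throwaway busybox container with one bind mount
# written in --mount syntax.
# $1 = host path, $2 = container path, $3 = "ro" for read-only
run_with_bind() {
    src=$1; dst=$2; mode=$3
    # --mount refuses a host path that does not exist, so fail early
    if [ ! -e "$src" ]; then
        echo "host path does not exist: $src" >&2
        return 1
    fi
    spec="type=bind,source=$src,target=$dst"
    # "readonly" is the --mount counterpart of the :ro suffix used with -v
    [ "$mode" = "ro" ] && spec="$spec,readonly"
    docker run -it --rm --mount "$spec" busybox
}
```

For instance, `run_with_bind "$PWD/data2" /data2 ro` would correspond to `-v ./data2:/data2:ro`.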

Create the test files:

[root@docker test-2]# echo data1 data1 > data1

[root@docker test-2]# echo data2 data2 > data2

[root@docker test-2]# cat data2

data2 data2

[root@docker test-2]# cat data1

data1 data1

Mount them into a container:

    # 1. ./data1 is mounted read-write at /data1
    # 2. ./data2 is mounted read-only at /data2
    # 3. the host's /etc/passwd is mounted read-only at /data/passwd
    docker run -it --rm \
        -v ./data1:/data1:rw \
        -v ./data2:/data2:ro \
        -v /etc/passwd:/data/passwd:ro \
        busybox

Test:

data1 is read-write; data2 is read-only.

/ # ls

bin data data1 data2 dev etc home lib lib64 proc root sys tmp usr var

/ # cat data1

data1 data1

/ # cat data2

data2 data2

/ # echo docker 1 >> data1

/ # cat data1

data1 data1

docker 1

/ # echo docker 2 >> data2

sh: can't create data2: Read-only file system

/ # cat data2

data2 data2

/ # exit

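After exiting the container, check the mounted files on the host:

Because the files are bind-mounted, changes made inside the container also appear in the files on the host.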

[root@docker test-2]# cat data1

data1 data1

docker 1

[root@docker test-2]# cat data2

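data2 data2

7.3 Docker managed volumes

In Docker, a managed volume is persistent storage that the Docker engine creates and manages automatically. The user does not specify a path on the host; Docker alone decides where the data is stored and maintains that location. This simplifies working with volumes and suits cases where container data must persist but host paths should not be managed by hand.

Core characteristics of Docker managed volumes

Automatic creation and management

- There is no need to specify a host directory (as in -v /host/path:/container/path). Specify only the mount point inside the container when running it (for example -v /data), and Docker automatically creates the volume in a dedicated host directory (usually /var/lib/docker/volumes/<volume-id>/_data).

- The volume's lifecycle is independent of the container: it remains after the container is deleted unless it is removed manually.

Persistent storage

- Data written to /data inside the container (assuming /data is the mount point) is persisted to the Docker-managed host directory, so it is not lost when the container is deleted.

Decoupled from containers

- A volume can be shared and reused by several containers, which suits multi-container setups (for example, a database container and an application container sharing data).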
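A short sketch of the workflow described above, assuming a busybox image is available; the three function names are hypothetical helpers. It starts a container with an anonymous managed volume at /data, shows where Docker placed that volume on the host, and removes the container together with the volume.

```shell
# Hypothetical helper: start a background container named $1 with an
# anonymous Docker-managed volume mounted at /data.
start_with_managed_volume() {
    docker run -d --name "$1" -v /data busybox sleep 3600
}

# Hypothetical helper: print "<volume name> <host directory>" for every
# mount of container $1.
show_mounts() {
    docker inspect -f '{{range .Mounts}}{{.Name}} {{.Source}}{{"\n"}}{{end}}' "$1"
}

# Hypothetical helper: force-remove container $1 and its anonymous volumes.
remove_with_volumes() {
    docker rm -f -v "$1"
}
```

Running `start_with_managed_volume voltest`, then `show_mounts voltest`, should list a directory under /var/lib/docker/volumes. `docker volume ls` shows the same volume, and `remove_with_volumes voltest` cleans up; without -v the volume would be left behind.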

8 Docker security hardening

[root@docker packages]# free -m

total used free shared buff/cache available

Mem: 3627 721 2411 9 739 2906

Swap: 4011 0 4011

[root@docker packages]# docker run --rm --memory 200M -it ubuntu:latest

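root@e0d90039aafc:/# free -m

total used free shared buff/cache available

Mem: 3627 756 2377 9 739 2871

Swap: 4011 0 4011

Although the container was started with --memory 200M, free -m inside it still reports the host's totals, because /proc/meminfo is not isolated per container by default.

8.1 Addressing Docker's default isolation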
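Before turning to lxcfs, it may help to confirm that the --memory limit from the example above is enforced even though free does not show it. One possible check, sketched here with a hypothetical helper name, reads the limit from the container's cgroup. The two file paths are assumptions about the host: memory.max applies to cgroup v2 and memory.limit_in_bytes to cgroup v1.

```shell
# Hypothetical helper: print the memory limit in bytes that the kernel
# enforces for a container started with --memory $1.
container_memory_limit() {
    docker run --rm --memory "$1" busybox sh -c \
        'cat /sys/fs/cgroup/memory.max 2>/dev/null ||
         cat /sys/fs/cgroup/memory/memory.limit_in_bytes'
}
```

For `container_memory_limit 200M` the value to look for is 209715200, which is 200 x 1024 x 1024 bytes.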

Install lxcfs:

[root@docker rpm]# ls

bridge-utils-1.7.1-3.el9.x86_64.rpm libcgroup-tools-0.41-19.el8.x86_64.rpm lxc-templates-4.0.12-1.el9.x86_64.rpm

docker.tar.gz lxcfs-5.0.4-1.el9.x86_64.rpm

libcgroup-0.41-19.el8.x86_64.rpm lxc-libs-4.0.12-1.el9.x86_64.rpm

dnf install lxcfs-5.0.4-1.el9.x86_64.rpm lxc-libs-4.0.12-1.el9.x86_64.rpm lxc-templates-4.0.12-1.el9.x86_64.rpm -y

Run lxcfs and mount its files into the container so that the container sees its own limits:

[root@docker rpm]# lxcfs /var/lib/lxcfs &

    [root@docker rpm]# docker run -it -m 256m \
        -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
        -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
        -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
        -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
        -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
        -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
        ubuntu

root@cc08b2ff703b:/# free -m

total used free shared buff/cache available

Mem: 256 1 254 0 0 254

Swap: 0 0 0

8.2 Container privileges

By default, even the root user inside a container cannot change certain system settings, such as the network configuration.

[root@docker rpm]# docker run --rm -it busybox

/ # whoami

root

/ # ip a

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 9a:f8:5a:46:af:fd brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

/ # ip a a 172.25.254.111/24 dev eth0

ip: RTNETLINK answers: Operation not permitted

This is because many of the resources a container uses are shared with the real host. If containers were allowed to modify these critical resources, system stability would suffer badly.

Some workloads, however, do need to control resources that are off limits by default. In that case the container can be given privileges:

[root@docker rpm]# docker run --rm -it --privileged busybox

/ # whoami

root

/ # ip a a 172.25.254.111/24 dev eth0

/ # ip a

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0@if14: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 7e:d4:04:1d:99:1a brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet 172.25.254.111/24 scope global eth0
           valid_lft forever preferred_lft forever

# A container started with --privileged has privileges close to those of the root user on the host

8.3 Capability whitelist for containers

--privileged=true grants very broad privileges, close to those of the host. To prevent abuse, the container should be restricted to only the privileges it needs. Docker provides a capability whitelist for this purpose: use --cap-add to add just the required capabilities.

Capabilities manual: capabilities(7) - Linux manual page
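One way to see what a whitelist grants is to compare the effective capability mask of a container under different options. The helper below is a hypothetical sketch that assumes a busybox image; it prints the CapEff line from /proc/self/status, a hexadecimal bitmask that can be decoded against capabilities(7).

```shell
# Hypothetical helper: print the effective capability mask (CapEff) of a
# busybox container started with the given extra "docker run" options.
effective_caps() {
    docker run --rm "$@" busybox grep CapEff /proc/self/status
}
```

Comparing `effective_caps`, `effective_caps --cap-drop ALL --cap-add NET_ADMIN`, and `effective_caps --privileged` should show the default set, a set containing only NET_ADMIN, and the full set respectively. Dropping everything first and adding back single capabilities is a stricter form of the whitelist described above.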

# Grant the container root-level privileges over networking only

[root@docker rpm]# docker run --rm -it --cap-add NET_ADMIN busybox

/ # ip a

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0@if16: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether e2:87:85:ef:35:9e brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

/ # ip a a 172.25.254.111/24 dev eth0 # network settings can now be changed

/ # ip a

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0@if16: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether e2:87:85:ef:35:9e brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet 172.25.254.111/24 scope global eth0
           valid_lft forever preferred_lft forever

/ # fdisk -l # disks still cannot be managed

9 Container orchestration with Docker Compose

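9.1 Docker Compose overview

- Docker Compose uses a YAML configuration file to define a group of related container services. Each service can specify its image, port mappings, environment variables, volumes, and other parameters.

- For example, the configuration file can define a web service and a database service, together with how they connect.

- A single command starts or stops every container in the application, which greatly simplifies deploying and managing multi-container applications.

- For example, docker-compose up starts all services defined in the configuration file, and docker-compose down stops and removes them.

- Dependencies between containers can be declared so that services start and stop in the right order. For example, the database service can be required to start before the web service.

- Network configuration is supported so that containers of different services can communicate. A custom network can be defined and all related containers attached to it.

- Environment variables can be defined in the configuration file and passed to containers at startup, which makes it easier to use different settings in different environments (development, testing, and production).

- For example, a database connection string can be defined as an environment variable and given a different value in each environment.

- Docker Compose reads the YAML configuration file and parses the services and parameters defined in it.

- Based on those definitions, Docker Compose calls the Docker engine to create the containers. It pulls any required image that is missing locally and sets each container's parameters.

- Docker Compose monitors container state and starts, stops, or restarts containers as needed.

- It can also handle failure recovery, for example by automatically restarting failed containers.

- Service: one application's container; in practice a service can comprise several container instances running the same image.

- Project: a complete business unit made up of a group of related application containers, defined in the docker-compose.yml file.

- Container: a concrete instance of a service. Each service can have one or more containers, which are running instances created from the image named in the service definition.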
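The points about dependencies, environment variables, and custom networks can be put together in one small file. The sketch below writes such a file from the shell; write_compose_example, the DB_PASSWORD variable, its default value, and the backend network name are all hypothetical and chosen only for illustration.

```shell
# Hypothetical helper: write a small example compose file into directory $1.
write_compose_example() {
    mkdir -p "$1" || return 1
    # The quoted 'EOF' stops the shell from expanding ${...}; Compose
    # substitutes the variable itself when it reads the file.
    cat > "$1/docker-compose.yml" <<'EOF'
services:
  db:
    image: mysql:5.7
    environment:
      MYSQL_ROOT_PASSWORD: ${DB_PASSWORD:-example}
    networks:
      - backend
  web:
    image: nginx:1.23
    ports:
      - "80:80"
    depends_on:
      - db
    networks:
      - backend
networks:
  backend:
    driver: bridge
EOF
}
```

After `write_compose_example ./demo`, running `docker compose config` inside ./demo prints the resolved configuration and is a convenient way to validate it. Note that depends_on only orders container startup; it does not wait for the database to be ready to accept connections.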

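9.2 Common Docker Compose commands and options

[root@docker packages]# cat docker-compose.yml

    services:
      web:
        image: nginx:1.23
        ports:
          - "80:80"
      db:
        image: mysql:5.7
        environment:
          MYSQL_ROOT_PASSWORD: rin

Some commonly used Docker Compose commands:

1. Service management

- docker-compose up:

  - Starts all services defined in the configuration file.

  - Use the -d option to start the services in the background.

  - Use -f to specify the YAML file.

  - For example: docker-compose up -d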
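The options above fit into a typical start, inspect, and tear down cycle. The wrapper below is a hypothetical convenience, not part of Compose; it uses the docker compose plugin form of the command, and the older standalone docker-compose binary accepts the same subcommands.

```shell
# Hypothetical wrapper: run a Compose subcommand against an explicit file.
# $1 = path to the compose file, remaining arguments = subcommand and options
compose() {
    file=$1
    shift
    docker compose -f "$file" "$@"
}
```

With it, `compose docker-compose.yml up -d` starts every service in the background, `compose docker-compose.yml ps` lists them, `compose docker-compose.yml logs web` shows the web service's output, and `compose docker-compose.yml down` stops and removes the containers and networks.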

9.3 Enterprise example

Use container orchestration to deploy a load-balancing architecture with haproxy and nginx.

Edit the docker-compose.yml file:

[root@docker retest]# cat docker-compose.yml

    services:
      web1:
        image: nginx:latest
        container_name: web1
        networks:
          - mynet1
        expose:
          - 80
        volumes:
          - /docker/web/html1:/usr/share/nginx/html
      web2:
        image: nginx:latest
        container_name: web2
        networks:
          - mynet1
        expose:
          - 80
        volumes:
          - /docker/web/html2:/usr/share/nginx/html
      haproxy:
        image: haproxy:2.3
        container_name: haproxy
        restart: always
        networks:
          - mynet1
          - mynet2
        volumes:
          - /docker/conf/haproxy/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg
        ports:
          - 80:80
    networks:
      mynet1:
        driver: bridge
      mynet2:
        driver: bridge

Prepare the default document roots of the two web servers. The compose file maps them to /docker/web/html1 and /docker/web/html2 on the host:

Create the document root directories:

mkdir /docker/web/{html1,html2} -p

Write the content:

echo web1 > /docker/web/html1/index.html

echo web2 > /docker/web/html2/index.html

Edit the haproxy configuration file and place it in the directory that is mapped between the host and the container:

Create the configuration directory:

mkdir -p /docker/conf/haproxy

Edit the configuration file:

[root@docker retest]# vim /docker/conf/haproxy/haproxy.cfg

[root@docker retest]# cat /docker/conf/haproxy/haproxy.cfg

    global
        log 127.0.0.1 local0 info
        maxconn 4096
        daemon

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        retries 3
        timeout connect 5s
        timeout client 30s
        timeout server 30s
        timeout check 5s

    listen http_load_balancer
        bind *:80
        balance roundrobin
        option httpchk GET /
        server web1 web1:80 weight 1 maxconn 200 check
        server web2 web2:80 weight 1 maxconn 200 check

Make sure the required images are loaded:

[root@docker retest]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx latest 5ef79149e0ec 12 months ago 188MB

haproxy 2.3 7ecd3fda00f4 3 years ago 99.4MB

Start the service stack:

[root@docker retest]# docker compose up -d

[+] Running 5/5

✔ Network retest_mynet1 Created 0.5s

✔ Network retest_mynet2 Created 0.5s

✔ Container web2 Started 1.0s

✔ Container haproxy Started 2.4s

✔ Container web1 Started 1.7s

[root@docker retest]# docker compose ps

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

haproxy haproxy:2.3 "docker-entrypoint.s…" haproxy 11 seconds ago Up 11 seconds 0.0.0.0:80->80/tcp, [::]:80->80/tcp

web1 nginx:latest "/docker-entrypoint.…" web1 11 seconds ago Up 11 seconds 80/tcp

web2 nginx:latest "/docker-entrypoint.…" web2 11 seconds ago Up 11 seconds 80/tcp

Test:

[root@docker retest]# curl 172.25.254.10

web1

[root@docker retest]# curl 172.25.254.10

web2

[root@docker retest]# curl 172.25.254.10

web1

[root@docker retest]# curl 172.25.254.10

web2
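The alternating responses above are what balance roundrobin with equal weights should produce. To check the distribution over more requests, a small counting loop can help; count_backends is a hypothetical helper, and the address in the usage line is the one used in this test.

```shell
# Hypothetical helper: send $2 requests to URL $1 and count how many
# responses came from each backend.
count_backends() {
    url=$1; total=$2
    i=0
    while [ "$i" -lt "$total" ]; do
        curl -s "$url"
        i=$((i + 1))
    done | sort | uniq -c
}
```

For example, `count_backends http://172.25.254.10 100` prints one line per backend with its request count; with both servers healthy the two counts should be close to equal.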
