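Quantizing, Running, and Deploying LLMs with llama.cpp

- Format Conversion, Quantization, Inference, and Deployment of Large Models

- Overview

- Cloning and Building

- Environment Setup

- Model Format Conversion

- GGUF Format

- bin Format

- Model Quantization

- Model Loading and Inference

- Model API Service

- Model API Service (Third-Party)

- GPU Inference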

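Format Conversion, Quantization, Inference, and Deployment of Large Models

Overview

The main goal of llama.cpp is to enable LLM inference on a wide variety of hardware with minimal setup and state-of-the-art performance. It provides 1.5-bit, 2-bit, 3-bit, 4-bit, 5-bit, 6-bit, and 8-bit integer quantization to speed up inference and reduce memory use.

GitHub: https://github.com/ggerganov/llama.cpp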

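Cloning and Building

Clone the latest llama.cpp repository:

git clone https://github.com/ggerganov/llama.cpp

Build the llama.cpp project. The build produces a set of executables in the project directory, including:

- main: runs inference with a model
- quantize: quantizes a model
- server: serves a model over an HTTP API

1. Build for CPU. This is the simplest setup and suits machines without a GPU.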

cd llama.cpp
mkdir

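2. Build for GPU. This requires the CUDA toolkit and suits machines with a GPU.

If CUDA is set up correctly, nvidia-smi and nvcc --version both run without errors.

make clean && make LLAMA_CUDA=1

3. If the build fails or you need to rebuild, clean the build output and build again until it succeeds:

make clean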

環境準備

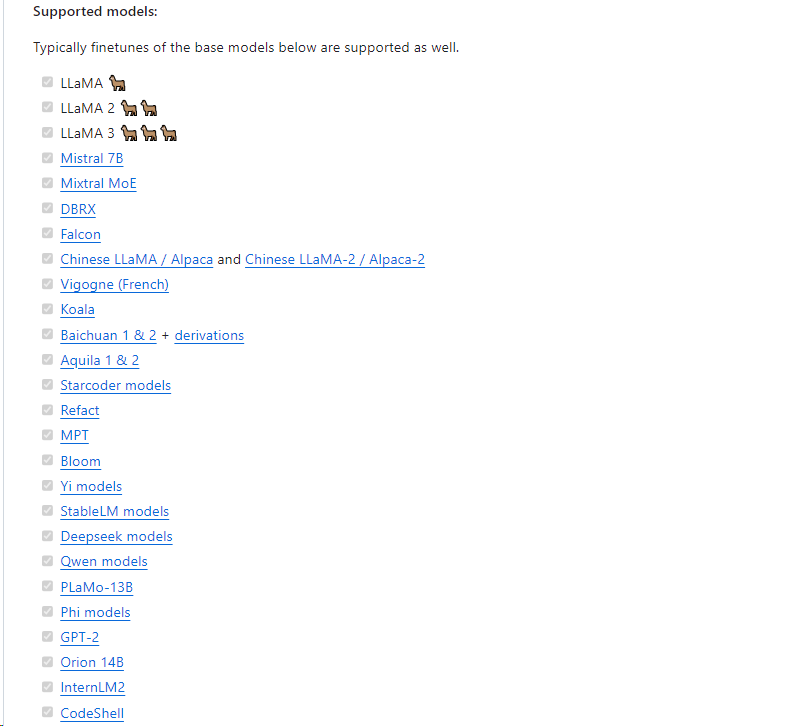

1.下載受支持的模型

要使用llamma.cpp,首先需要準備它支持的模型。在官方文檔中給出了說明,這里僅僅截取其中一部分

2.安裝依賴

llama.cpp項目下帶有requirements.txt 文件,直接安裝依賴即可。

pip install -r requirements.txt

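Model Format Conversion

Depending on the model architecture, use either convert.py or convert-hf-to-gguf.py.

The conversion script reads the model configuration, the tokenizer, and the tensor names and data, and converts them into GGUF metadata and tensors.

GGUF Format

Compared with the previous two generations, Llama-3 significantly enlarges the vocabulary, from 32K to 128K tokens, and switches to a BPE vocabulary. The --vocab-type parameter therefore has to name the tokenization algorithm: its default is spm, so bpe must be specified explicitly.

Note:

The official documentation says convert.py does not support LLaMA 3 and recommends convert-hf-to-gguf.py instead. However, convert-hf-to-gguf.py does not accept --vocab-type and fails with error: unrecognized arguments: --vocab-type bpe. convert.py was therefore used here, and it ran without problems.

Use the convert.py script in the llama.cpp project to convert the model to GGUF format: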

root@master:~/work/llama.cpp# python3 ./convert.py /root/work/models/Llama3-Chinese-8B-Instruct/ --outtype f16 --vocab-type bpe --outfile ./models/Llama3-FP16.gguf

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00001-of-00004.safetensors

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00001-of-00004.safetensors

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00002-of-00004.safetensors

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00003-of-00004.safetensors

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00004-of-00004.safetensors

INFO:convert:model parameters count : 8030261248 (8B)

INFO:convert:params = Params(n_vocab=128256, n_embd=4096, n_layer=32, n_ctx=8192, n_ff=14336, n_head=32, n_head_kv=8, n_experts=None, n_experts_used=None, f_norm_eps=1e-05, rope_scaling_type=None, f_rope_freq_base=500000.0, f_rope_scale=None, n_orig_ctx=None, rope_finetuned=None, ftype=<GGMLFileType.MostlyF16: 1>, path_model=PosixPath('/root/work/models/Llama3-Chinese-8B-Instruct'))

INFO:convert:Loaded vocab file PosixPath('/root/work/models/Llama3-Chinese-8B-Instruct/tokenizer.json'), type 'bpe'

INFO:convert:Vocab info: <BpeVocab with 128000 base tokens and 256 added tokens>

INFO:convert:Special vocab info: <SpecialVocab with 280147 merges, special tokens {'bos': 128000, 'eos': 128001}, add special tokens unset>

INFO:convert:Writing models/Llama3-FP16.gguf, format 1

WARNING:convert:Ignoring added_tokens.json since model matches vocab size without it.

INFO:gguf.gguf_writer:gguf: This GGUF file is for Little Endian only

INFO:gguf.vocab:Adding 280147 merge(s).

INFO:gguf.vocab:Setting special token type bos to 128000

INFO:gguf.vocab:Setting special token type eos to 128001

INFO:gguf.vocab:Setting chat_template to {% set loop_messages = messages %}{% for message in loop_messages %}{% set content = '<|start_header_id|>' + message['role'] + '<|end_header_id|>'+ message['content'] | trim + '<|eot_id|>' %}{% if loop.index0 == 0 %}{% set content = bos_token + content %}{% endif %}{{ content }}{% endfor %}{{ '<|start_header_id|>assistant<|end_header_id|>' }}

INFO:convert:[ 1/291] Writing tensor token_embd.weight | size 128256 x 4096 | type F16 | T+ 1

INFO:convert:[ 2/291] Writing tensor blk.0.attn_norm.weight | size 4096 | type F32 | T+ 2

INFO:convert:[ 3/291] Writing tensor blk.0.ffn_down.weight | size 4096 x 14336 | type F16 | T+ 2

INFO:convert:[ 4/291] Writing tensor blk.0.ffn_gate.weight | size 14336 x 4096 | type F16 | T+ 2

INFO:convert:[ 5/291] Writing tensor blk.0.ffn_up.weight | size 14336 x 4096 | type F16 | T+ 2

INFO:convert:[ 6/291] Writing tensor blk.0.ffn_norm.weight | size 4096 | type F32 | T+ 2

INFO:convert:[ 7/291] Writing tensor blk.0.attn_k.weight | size 1024 x 4096 | type F16 | T+ 2

INFO:convert:[ 8/291] Writing tensor blk.0.attn_output.weight | size 4096 x 4096 | type F16 | T+ 2

INFO:convert:[ 9/291] Writing tensor blk.0.attn_q.weight | size 4096 x 4096 | type F16 | T+ 3

INFO:convert:[ 10/291] Writing tensor blk.0.attn_v.weight | size 1024 x 4096 | type F16 | T+ 3

INFO:convert:[ 11/291] Writing tensor blk.1.attn_norm.weight | size 4096 | type F32 | T+ 3

After conversion to FP16 GGUF, the model file is roughly 15G.

root@master:~/work/llama.cpp# ll models -h

-rw-r--r-- 1 root root 15G May 17 07:47 Llama3-FP16.gguf
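The 15G figure follows from the parameter count in the conversion log: 8030261248 parameters at 2 bytes each. A quick check of that arithmetic is sketched below. The function name is illustrative, and the estimate ignores the small F32 norm tensors and the file metadata.

```python
def fp16_size_gib(n_params: int) -> float:
    """Approximate size of an FP16 model in GiB (2 bytes per parameter)."""
    bytes_per_param = 2  # FP16 stores each weight in 16 bits
    return n_params * bytes_per_param / 2**30


# Parameter count reported by convert.py in the log above.
size = fp16_size_gib(8030261248)
print(f"{size:.2f} GiB")  # prints "14.96 GiB", which the listing shows as 15G
```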

bin Format

root@master:~/work/llama.cpp# python3 ./convert.py /root/work/models/Llama3-Chinese-8B-Instruct/ --outtype f16 --vocab-type bpe --outfile ./models/Llama3-FP16.bin

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00001-of-00004.safetensors

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00001-of-00004.safetensors

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00002-of-00004.safetensors

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00003-of-00004.safetensors

INFO:convert:Loading model file /root/work/models/Llama3-Chinese-8B-Instruct/model-00004-of-00004.safetensors

INFO:convert:model parameters count : 8030261248 (8B)

INFO:convert:params = Params(n_vocab=128256, n_embd=4096, n_layer=32, n_ctx=8192, n_ff=14336, n_head=32, n_head_kv=8, n_experts=None, n_experts_used=None, f_norm_eps=1e-05, rope_scaling_type=None, f_rope_freq_base=500000.0, f_rope_scale=None, n_orig_ctx=None, rope_finetuned=None, ftype=<GGMLFileType.MostlyF16: 1>, path_model=PosixPath('/root/work/models/Llama3-Chinese-8B-Instruct'))

INFO:convert:Loaded vocab file PosixPath('/root/work/models/Llama3-Chinese-8B-Instruct/tokenizer.json'), type 'bpe'

INFO:convert:Vocab info: <BpeVocab with 128000 base tokens and 256 added tokens>

INFO:convert:Special vocab info: <SpecialVocab with 280147 merges, special tokens {'bos': 128000, 'eos': 128001}, add special tokens unset>

INFO:convert:Writing models/Llama3-FP16.bin, format 1

WARNING:convert:Ignoring added_tokens.json since model matches vocab size without it.

INFO:gguf.gguf_writer:gguf: This GGUF file is for Little Endian only

INFO:gguf.vocab:Adding 280147 merge(s).

INFO:gguf.vocab:Setting special token type bos to 128000

INFO:gguf.vocab:Setting special token type eos to 128001

INFO:gguf.vocab:Setting chat_template to {% set loop_messages = messages %}{% for message in loop_messages %}{% set content = '<|start_header_id|>' + message['role'] + '<|end_header_id|>'+ message['content'] | trim + '<|eot_id|>' %}{% if loop.index0 == 0 %}{% set content = bos_token + content %}{% endif %}{{ content }}{% endfor %}{{ '<|start_header_id|>assistant<|end_header_id|>' }}

INFO:convert:[ 1/291] Writing tensor token_embd.weight | size 128256 x 4096 | type F16 | T+ 4

INFO:convert:[ 2/291] Writing tensor blk.0.attn_norm.weight | size 4096 | type F32 | T+ 4

INFO:convert:[ 3/291] Writing tensor blk.0.ffn_down.weight | size 4096 x 14336 | type F16 | T+ 4

INFO:convert:[ 4/291] Writing tensor blk.0.ffn_gate.weight | size 14336 x 4096 | type F16 | T+ 5

INFO:convert:[ 5/291] Writing tensor blk.0.ffn_up.weight | size 14336 x 4096 | type F16 | T+ 5

INFO:convert:[ 6/291] Writing tensor blk.0.ffn_norm.weight | size 4096 | type F32 | T+ 5

INFO:convert:[ 7/291] Writing tensor blk.0.attn_k.weight | size 1024 x 4096 | type F16 | T+ 5

INFO:convert:[ 8/291] Writing tensor blk.0.attn_output.weight | size 4096 x 4096 | type F16 | T+ 5

INFO:convert:[ 9/291] Writing tensor blk.0.attn_q.weight | size 4096 x 4096 | type F16 | T+ 5

INFO:convert:[ 10/291] Writing tensor blk.0.attn_v.weight | size 1024 x 4096 | type F16 | T+ 5

INFO:convert:[ 11/291] Writing tensor blk.1.attn_norm.weight | size 4096 | type F32 | T+ 5

INFO:convert:[ 12/291] Writing tensor blk.1.ffn_down.weight | size 4096 x 14336 | type F16 | T+ 5

INFO:convert:[ 13/291] Writing tensor blk.1.ffn_gate.weight | size 14336 x 4096 | type F16 | T+ 5

root@master:~/work/llama.cpp# ll models -h

-rw-r--r-- 1 root root 15G May 17 07:47 Llama3-FP16.gguf

-rw-r--r-- 1 root root 15G May 17 08:02 Llama3-FP16.bin
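Both conversion runs log "gguf.gguf_writer: gguf: This GGUF file is for Little Endian only", which suggests the .bin file is the same GGUF container under a different extension. One way to check is to read the file header. The sketch below assumes the GGUF version 2 and later layout (4-byte magic, uint32 version, uint64 tensor count, uint64 metadata key-value count); the function name is illustrative.

```python
import struct

GGUF_MAGIC = b"GGUF"


def read_gguf_header(path):
    """Return (version, tensor_count, metadata_kv_count) from a GGUF file."""
    with open(path, "rb") as f:
        header = f.read(24)  # 4-byte magic + uint32 + two uint64 values
    if len(header) < 24 or header[:4] != GGUF_MAGIC:
        raise ValueError(f"{path} does not start with a GGUF header")
    # Little-endian fields, matching the "Little Endian only" log line.
    version, tensor_count, kv_count = struct.unpack("<IQQ", header[4:])
    return version, tensor_count, kv_count
```

Calling read_gguf_header("models/Llama3-FP16.bin") and read_gguf_header("models/Llama3-FP16.gguf") should return the same tuple if the two files really share one format.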

Model Quantization

Models are quantized with the quantize command. Its available parameters and allowed quantization types are as follows:

root@master:~/work/llama.cpp# ./quantize

usage: ./quantize [--help] [--allow-requantize] [--leave-output-tensor] [--pure] [--imatrix] [--include-weights] [--exclude-weights] [--output-tensor-type] [--token-embedding-type] [--override-kv] model-f32.gguf [model-quant.gguf] type [nthreads]

  --allow-requantize: Allows requantizing tensors that have already been quantized. Warning: This can severely reduce quality compared to quantizing from 16bit or 32bit
  --leave-output-tensor: Will leave output.weight un(re)quantized. Increases model size but may also increase quality, especially when requantizing
  --pure: Disable k-quant mixtures and quantize all tensors to the same type
  --imatrix file_name: use data in file_name as importance matrix for quant optimizations
  --include-weights tensor_name: use importance matrix for this/these tensor(s)
  --exclude-weights tensor_name: use importance matrix for this/these tensor(s)
  --output-tensor-type ggml_type: use this ggml_type for the output.weight tensor
  --token-embedding-type ggml_type: use this ggml_type for the token embeddings tensor
  --keep-split: will generate quatized model in the same shards as input
  --override-kv KEY=TYPE:VALUE
      Advanced option to override model metadata by key in the quantized model. May be specified multiple times.

Note: --include-weights and --exclude-weights cannot be used together

Allowed quantization types:
   2  or  Q4_0    :  3.56G, +0.2166 ppl @ LLaMA-v1-7B
   3  or  Q4_1    :  3.90G, +0.1585 ppl @ LLaMA-v1-7B
   8  or  Q5_0    :  4.33G, +0.0683 ppl @ LLaMA-v1-7B
   9  or  Q5_1    :  4.70G, +0.0349 ppl @ LLaMA-v1-7B
  19  or  IQ2_XXS :  2.06 bpw quantization
  20  or  IQ2_XS  :  2.31 bpw quantization
  28  or  IQ2_S   :  2.5 bpw quantization
  29  or  IQ2_M   :  2.7 bpw quantization
  24  or  IQ1_S   :  1.56 bpw quantization
  31  or  IQ1_M   :  1.75 bpw quantization
  10  or  Q2_K    :  2.63G, +0.6717 ppl @ LLaMA-v1-7B
  21  or  Q2_K_S  :  2.16G, +9.0634 ppl @ LLaMA-v1-7B
  23  or  IQ3_XXS :  3.06 bpw quantization
  26  or  IQ3_S   :  3.44 bpw quantization
  27  or  IQ3_M   :  3.66 bpw quantization mix
  12  or  Q3_K    :  alias for Q3_K_M
  22  or  IQ3_XS  :  3.3 bpw quantization
  11  or  Q3_K_S  :  2.75G, +0.5551 ppl @ LLaMA-v1-7B
  12  or  Q3_K_M  :  3.07G, +0.2496 ppl @ LLaMA-v1-7B
  13  or  Q3_K_L  :  3.35G, +0.1764 ppl @ LLaMA-v1-7B
  25  or  IQ4_NL  :  4.50 bpw non-linear quantization
  30  or  IQ4_XS  :  4.25 bpw non-linear quantization
  15  or  Q4_K    :  alias for Q4_K_M
  14  or  Q4_K_S  :  3.59G, +0.0992 ppl @ LLaMA-v1-7B
  15  or  Q4_K_M  :  3.80G, +0.0532 ppl @ LLaMA-v1-7B
  17  or  Q5_K    :  alias for Q5_K_M
  16  or  Q5_K_S  :  4.33G, +0.0400 ppl @ LLaMA-v1-7B
  17  or  Q5_K_M  :  4.45G, +0.0122 ppl @ LLaMA-v1-7B
  18  or  Q6_K    :  5.15G, +0.0008 ppl @ LLaMA-v1-7B
   7  or  Q8_0    :  6.70G, +0.0004 ppl @ LLaMA-v1-7B
   1  or  F16     : 14.00G, -0.0020 ppl @ Mistral-7B
  32  or  BF16    : 14.00G, -0.0050 ppl @ Mistral-7B
   0  or  F32     : 26.00G              @ 7B
          COPY    : only copy tensors, no quantizing

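quantize can produce models at a range of bit widths: Q2, Q3, Q4, Q5, Q6, Q8, and F16.

Quantized model names follow the pattern Q + number of bits + variant (for example, Q4_K_M). The fewer the bits, the lower the hardware requirements, but also the lower the model's accuracy.

After quantization to Q8_0, the model shrinks from 15G to 8G, at the cost of reducing the weights from 16-bit floating point to 8-bit integers.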
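The naming pattern can be made concrete with a small parser. This is only a sketch: the regular expression and function name are illustrative, and it covers the Q and IQ names from the help listing, not F16, BF16, F32, or COPY.

```python
import re

# Matches names such as Q8_0, Q4_K_M, or IQ2_XXS from the quantize help listing.
_QUANT_NAME = re.compile(r"^(I?Q)(\d+)(?:_(.+))?$")


def parse_quant_name(name):
    """Split a quantization type name into prefix, bit count, and variant."""
    match = _QUANT_NAME.match(name.upper())
    if match is None:
        raise ValueError(f"not a Q/IQ quantization name: {name}")
    prefix, bits, variant = match.groups()
    return {"prefix": prefix, "bits": int(bits), "variant": variant or ""}


print(parse_quant_name("Q4_K_M"))
print(parse_quant_name("q8_0"))
```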

root@master:~/work/llama.cpp# ./quantize ./models/Llama3-FP16.gguf ./models/Llama3-q8.gguf q8_0

main: build = 2908 (359cbe3f)

main: built with cc (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0 for x86_64-linux-gnu

main: quantizing '/root/work/models/Llama3-FP16.gguf' to '/root/work/models/Llama3-q8.gguf' as Q8_0

llama_model_loader: loaded meta data with 21 key-value pairs and 291 tensors from /root/work/models/Llama3-FP16.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = llama

llama_model_loader: - kv 1: general.name str = Llama3-Chinese-8B-Instruct

llama_model_loader: - kv 2: llama.vocab_size u32 = 128256

llama_model_loader: - kv 3: llama.context_length u32 = 8192

llama_model_loader: - kv 4: llama.embedding_length u32 = 4096

llama_model_loader: - kv 5: llama.block_count u32 = 32

llama_model_loader: - kv 6: llama.feed_forward_length u32 = 14336

llama_model_loader: - kv 7: llama.rope.dimension_count u32 = 128

llama_model_loader: - kv 8: llama.attention.head_count u32 = 32

llama_model_loader: - kv 9: llama.attention.head_count_kv u32 = 8

llama_model_loader: - kv 10: llama.attention.layer_norm_rms_epsilon f32 = 0.000010

llama_model_loader: - kv 11: llama.rope.freq_base f32 = 500000.000000

llama_model_loader: - kv 12: general.file_type u32 = 1

llama_model_loader: - kv 13: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 14: tokenizer.ggml.tokens arr[str,128256] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 15: tokenizer.ggml.scores arr[f32,128256] = [0.000000, 0.000000, 0.000000, 0.0000...

llama_model_loader: - kv 16: tokenizer.ggml.token_type arr[i32,128256] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 17: tokenizer.ggml.merges arr[str,280147] = ["? ?", "? ???", "?? ??", "...

llama_model_loader: - kv 18: tokenizer.ggml.bos_token_id u32 = 128000

llama_model_loader: - kv 19: tokenizer.ggml.eos_token_id u32 = 128001

llama_model_loader: - kv 20: tokenizer.chat_template str = {% set loop_messages = messages %}{% ...

llama_model_loader: - type f32: 65 tensors

llama_model_loader: - type f16: 226 tensors

[ 1/ 291] token_embd.weight - [ 4096, 128256, 1, 1], type = f16, converting to q8_0 .. size = 1002.00 MiB -> 532.31 MiB

[ 2/ 291] blk.0.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 3/ 291] blk.0.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q8_0 .. size = 112.00 MiB -> 59.50 MiB

[ 4/ 291] blk.0.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q8_0 .. size = 112.00 MiB -> 59.50 MiB

[ 5/ 291] blk.0.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q8_0 .. size = 112.00 MiB -> 59.50 MiB

[ 6/ 291] blk.0.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 7/ 291] blk.0.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q8_0 .. size = 8.00 MiB -> 4.25 MiB

[ 8/ 291] blk.0.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q8_0 .. size = 32.00 MiB -> 17.00 MiB

[ 9/ 291] blk.0.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q8_0 .. size = 32.00 MiB -> 17.00 MiB

[ 10/ 291] blk.0.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q8_0 .. size = 8.00 MiB -> 4.25 MiB

[ 11/ 291] blk.1.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 12/ 291] blk.1.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q8_0 .. size = 112.00 MiB -> 59.50 MiB

[ 13/ 291] blk.1.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q8_0 .. size = 112.00 MiB -> 59.50 MiB

[ 14/ 291] blk.1.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q8_0 .. size = 112.00 MiB -> 59.50 MiB

[ 15/ 291] blk.1.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 16/ 291] blk.1.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q8_0 .. size = 8.00 MiB -> 4.25 MiB

[ 17/ 291] blk.1.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q8_0 .. size = 32.00 MiB -> 17.00 MiB

[ 18/ 291] blk.1.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q8_0 .. size = 32.00 MiB -> 17.00 MiB

[ 19/ 291] blk.1.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q8_0 .. size = 8.00 MiB -> 4.25 MiB

root@master:~/work/llama.cpp# ll -h models/

-rw-r--r-- 1 root root 8.0G May 17 07:54 Llama3-q8.gguf
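The per-tensor sizes in the quantization log can be reproduced by hand. Q8_0 stores weights in blocks of 32 int8 values plus one FP16 scale, so each block takes 34 bytes, or 8.5 bits per weight. The sketch below (helper names are illustrative) recomputes the token_embd.weight line from the log.

```python
Q8_0_BLOCK_WEIGHTS = 32      # weights stored per Q8_0 block
Q8_0_BLOCK_BYTES = 2 + 32    # one FP16 scale plus 32 int8 values


def f16_mib(n_elements):
    """Size in MiB of a tensor stored as FP16 (2 bytes per element)."""
    return n_elements * 2 / 2**20


def q8_0_mib(n_elements):
    """Size in MiB of the same tensor stored as Q8_0 blocks."""
    n_blocks = n_elements // Q8_0_BLOCK_WEIGHTS
    return n_blocks * Q8_0_BLOCK_BYTES / 2**20


n = 4096 * 128256  # shape of token_embd.weight in the log
print(f"{f16_mib(n):.2f} MiB -> {q8_0_mib(n):.2f} MiB")  # 1002.00 MiB -> 532.31 MiB
```

Applying the same 8.5/16 ratio to the whole 15G FP16 file gives roughly 8G, which matches the listing above.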

Model Loading and Inference

Models are loaded and run with the main command, which supports the following parameters:

root@master:~/work/llama.cpp# ./main -h

usage: ./main [options]

options:
  -h, --help            show this help message and exit
  --version             show version and build info
  -i, --interactive     run in interactive mode
  --interactive-specials
                        allow special tokens in user text, in interactive mode
  --interactive-first   run in interactive mode and wait for input right away
  -cnv, --conversation  run in conversation mode (does not print special tokens and suffix/prefix)
  -ins, --instruct      run in instruction mode (use with Alpaca models)
  -cml, --chatml        run in chatml mode (use with ChatML-compatible models)
  --multiline-input     allows you to write or paste multiple lines without ending each in '\'
  -r PROMPT, --reverse-prompt PROMPT
                        halt generation at PROMPT, return control in interactive mode
                        (can be specified more than once for multiple prompts).
  --color               colorise output to distinguish prompt and user input from generations
  -s SEED, --seed SEED  RNG seed (default: -1, use random seed for < 0)
  -t N, --threads N     number of threads to use during generation (default: 30)
  -tb N, --threads-batch N
                        number of threads to use during batch and prompt processing (default: same as --threads)
  -td N, --threads-draft N
                        number of threads to use during generation (default: same as --threads)
  -tbd N, --threads-batch-draft N
                        number of threads to use during batch and prompt processing (default: same as --threads-draft)
  -p PROMPT, --prompt PROMPT
                        prompt to start generation with (default: empty)

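Either the pretrained model or a quantized model can be loaded; here the quantized model is used for inference.

From the root of the llama.cpp project, run the following command to load the model and start inference: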

root@master:~/work/llama.cpp# ./main -m models/Llama3-q8.gguf --color -f prompts/alpaca.txt -ins -c 2048 --temp 0.2 -n 256 --repeat_penalty 1.1

Log start

main: build = 2908 (359cbe3f)

main: built with cc (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0 for x86_64-linux-gnu

main: seed = 1715935175

llama_model_loader: loaded meta data with 22 key-value pairs and 291 tensors from models/Llama3-q8.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = llama

llama_model_loader: - kv 1: general.name str = Llama3-Chinese-8B-Instruct

llama_model_loader: - kv 2: llama.vocab_size u32 = 128256

llama_model_loader: - kv 3: llama.context_length u32 = 8192

llama_model_loader: - kv 4: llama.embedding_length u32 = 4096

llama_model_loader: - kv 5: llama.block_count u32 = 32

llama_model_loader: - kv 6: llama.feed_forward_length u32 = 14336

llama_model_loader: - kv 7: llama.rope.dimension_count u32 = 128

== Running in interactive mode. ==
 - Press Ctrl+C to interject at any time.
 - Press Return to return control to LLaMa.
 - To return control without starting a new line, end your input with '/'.
 - If you want to submit another line, end your input with '\'.

<|begin_of_text|>Below is an instruction that describes a task. Write a response that appropriately completes the request.

> hi

Hello! How can I help you today?<|eot_id|>

>

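Type a prompt after the > prompt. Press Ctrl+C to interrupt output, and end a line with \ to continue the input on the next line. Run ./main -h to see the help text and parameter descriptions. Some commonly used parameters:

| Parameter | Description |
|---|---|
| -m | Path to the LLaMA model file |
| -mu | Remote HTTP URL to download the model file from |
| -i | Run in interactive mode, so you can type input directly and receive responses in real time |
| -ins | Run in instruction mode, which is particularly useful with Alpaca models |
| -f | Prompt template file; for Alpaca models, load prompts/alpaca.txt |
| -n | Maximum length of the generated reply (default: 128) |
| -c | Size of the prompt context; larger values let the model take longer conversation history into account (default: 512) |
| -b | Batch size (default: 8); can be increased as appropriate |
| -t | Number of threads (default: 4); can be increased as appropriate |
| --repeat_penalty | How strongly repeated text in the reply is penalized |
| --temp | Temperature; lower values make replies less random, higher values more random |
| --top_p, --top_k | Parameters that control sampling during decoding |
| --color | Distinguish user input from generated text |

The defaults listed here vary between llama.cpp versions and machines; for instance, the help output above reports a default of 30 threads. Check ./main -h for the values in your build.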
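To illustrate what --temp does, the sketch below applies temperature scaling to a softmax over logits. It is the textbook formulation rather than llama.cpp's sampler code, and the logits are made-up values for three candidate tokens.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for temp in (0.2, 1.0):
    probs = softmax_with_temperature(logits, temp)
    print(temp, [round(p, 3) for p in probs])
```

At the lower temperature almost all probability mass lands on the highest-scoring token, which is why a setting such as --temp 0.2 gives less random replies.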

Model API Service

llama.cpp provides an HTTP API that is compatible with the OpenAI API. Start the service with the server executable produced by the build.

root@master:~/work/llama.cpp# ./server -m models/Llama3-q8.gguf --host 0.0.0.0 --port 8000

{"tid":"140018656950080","timestamp":1715936504,"level":"INFO","function":"main","line":2942,"msg":"build info","build":2908,"commit":"359cbe3f"}

{"tid":"140018656950080","timestamp":1715936504,"level":"INFO","function":"main","line":2947,"msg":"system info","n_threads":30,"n_threads_batch":-1,"total_threads":30,"system_info":"AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 1 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 | MATMUL_INT8 = 0 | LLAMAFILE = 1 | "}

llama_model_loader: loaded meta data with 22 key-value pairs and 291 tensors from models/Llama3-q8.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = llama

llama_model_loader: - kv 1: general.name str = Llama3-Chinese-8B-Instruct

llama_model_loader: - kv 2: llama.vocab_size u32 = 128256

llama_model_loader: - kv 3: llama.context_length u32 = 8192

llama_model_loader: - kv 4: llama.embedding_length u32 = 4096

llama_model_loader: - kv 5: llama.block_count u32 = 32

llama_model_loader: - kv 6: llama.feed_forward_length u32 = 14336

Once the API service is running, test it with curl:

curl --request POST \
    --url http://localhost:8000/completion \
    --header "Content-Type: application/json" \
    --data '{"prompt": "Hi"}'
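The same request can be sent from Python using only the standard library. This is a minimal sketch: the endpoint and payload come from the curl command, while the function names and the default base URL argument are illustrative.

```python
import json
import urllib.request


def build_completion_request(prompt, base_url="http://localhost:8000"):
    """Build the same POST request that the curl command sends."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/completion",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt, base_url="http://localhost:8000"):
    """Send the request and return the decoded JSON response."""
    request = build_completion_request(prompt, base_url)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server running, complete("Hi") returns the parsed JSON body of the response.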

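Model API Service (Third-Party)

The llama.cpp project lists third-party bindings written in various languages, and these can also be used to serve the API. This example uses Python with llama-cpp-python.

Install the dependency:

pip install llama-cpp-python

Or install it from the Aliyun PyPI mirror:

pip install llama-cpp-python -i https://mirrors.aliyun.com/pypi/simple/

Note: the following dependencies may also be missing. Install them as indicated by any errors at startup.

pip install sse_starlette starlette_context pydantic_settings

Start the API service, which runs at http://localhost:8000 by default:

python -m llama_cpp.server --model models/Llama3-q8.gguf

Install the openai dependency:

pip install openai

Call the API service with openai: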

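import os

from openai import OpenAI  # import the OpenAI client library

# Point the client at the local server
os.environ["OPENAI_BASE_URL"] = "http://localhost:8000/v1"
# The openai client will not start without an API key; this placeholder
# assumes the local server does not check it
os.environ.setdefault("OPENAI_API_KEY", "sk-no-key-required")

client = OpenAI()  # create the OpenAI client object

# Call the model
completion = client.chat.completions.create(
    model="llama3",  # any value works
    messages=[
        {"role": "system", "content": "你是一個樂于助人的助手。"},
        {"role": "user", "content": "你好!"},
    ],
)

# Print the model's reply
print(completion.choices[0].message)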

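GPU Inference

If llama.cpp was built with GPU support, the -ngl N (or --n-gpu-layers N) parameter sets how many layers are offloaded, so that inference runs on the GPU.

For example, -ngl 40 offloads 40 layers of model parameters to the GPU.

Without -ngl N or --n-gpu-layers N, the program runs on the CPU by default: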

root@master:~/work/llama.cpp# ./server -m models/Llama3-FP16.gguf --host 0.0.0.0 --port 8000

The key startup log lines below show that the model is not running on the GPU:

ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no

ggml_cuda_init: CUDA_USE_TENSOR_CORES: yes

ggml_cuda_init: found 1 CUDA devices:
  Device 0: Tesla V100S-PCIE-32GB, compute capability 7.0, VMM: yes

llm_load_tensors: ggml ctx size = 0.15 MiB

llm_load_tensors: offloading 0 repeating layers to GPU

llm_load_tensors: offloaded 0/33 layers to GPU

llm_load_tensors: CPU buffer size = 8137.64 MiB

.........................................................................................

llama_new_context_with_model: n_ctx = 2048

llama_new_context_with_model: n_batch = 2048

llama_new_context_with_model: n_ubatch = 512

With -ngl N or --n-gpu-layers N, the program offloads layers to the GPU:

root@master:~/work/llama.cpp# ./server -m models/Llama3-FP16.gguf --host 0.0.0.0 --port 8000 --n-gpu-layers 1000

The key startup log lines below show that the model is running on the GPU:

ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no

ggml_cuda_init: CUDA_USE_TENSOR_CORES: yes

ggml_cuda_init: found 1 CUDA devices:
  Device 0: Tesla V100S-PCIE-32GB, compute capability 7.0, VMM: yes

llm_load_tensors: ggml ctx size = 0.30 MiB

llm_load_tensors: offloading 32 repeating layers to GPU

llm_load_tensors: offloading non-repeating layers to GPU

llm_load_tensors: offloaded 33/33 layers to GPU

llm_load_tensors: CPU buffer size = 1002.00 MiB

llm_load_tensors: CUDA0 buffer size = 14315.02 MiB

.........................................................................................

llama_new_context_with_model: n_ctx = 512

llama_new_context_with_model: n_batch = 512

llama_new_context_with_model: n_ubatch = 512

llama_new_context_with_model: flash_attn = 0

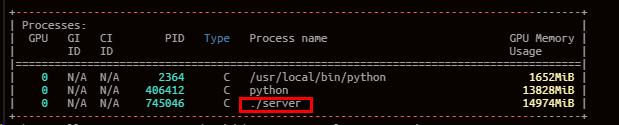

Running nvidia-smi further confirms that the model is running on the GPU.
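The "offloaded N/M layers to GPU" line in the startup logs can also be checked programmatically, for example in a deployment script. A minimal sketch follows; the function name is illustrative, and the sample lines are copied from the two logs above.

```python
import re

_OFFLOAD = re.compile(r"offloaded (\d+)/(\d+) layers to GPU")


def parse_offload(log_text):
    """Return (offloaded, total) from llama.cpp startup logs, or None."""
    match = _OFFLOAD.search(log_text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


cpu_log = "llm_load_tensors: offloaded 0/33 layers to GPU"
gpu_log = "llm_load_tensors: offloaded 33/33 layers to GPU"
print(parse_offload(cpu_log))  # (0, 33)
print(parse_offload(gpu_log))  # (33, 33)
```

The total of 33 corresponds to the 32 repeating layers plus the non-repeating layers, which the GPU log reports on separate lines.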
