Kafka Deployment (3.7)

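In production, the recommended way to run Kafka on Kubernetes is through an operator, and Strimzi is currently the most widely used one. For clusters with small data volumes, NFS shared storage is sufficient; for larger data volumes, use local PV storage.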

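Deploying the operator

The operator can be deployed with Helm or from YAML manifests. This guide uses Helm:

The operator must be deployed first. Its version has to be compatible with the target Kafka version, otherwise Kafka cannot be installed.

Note: Strimzi releases after 0.45 no longer support ZooKeeper.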
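The version constraint above can be checked before choosing a chart. The following is a minimal sketch, assuming 0.45 is the last Strimzi minor release that still supports ZooKeeper; `supports_zookeeper` is a hypothetical helper name, not part of Strimzi or Helm.

```shell
# Hypothetical helper: succeeds if the given Strimzi version still supports ZooKeeper.
# Assumption: 0.45.x is the last release line with ZooKeeper support.
supports_zookeeper() {
  version="$1"
  major="${version%%.*}"   # text before the first dot
  rest="${version#*.}"     # text after the first dot
  minor="${rest%%.*}"      # second version component
  [ "$major" -eq 0 ] && [ "$minor" -le 45 ]
}

STRIMZI_VERSION="0.42.0"
if supports_zookeeper "$STRIMZI_VERSION"; then
  echo "Strimzi $STRIMZI_VERSION: ZooKeeper-based examples can be used"
else
  echo "Strimzi $STRIMZI_VERSION: KRaft only, ZooKeeper-based examples will not work"
fi
```

For the 0.42.0 chart used in this guide the check passes, so the ZooKeeper-based example files remain applicable.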

helm repo add strimzi https://strimzi.io/charts/

helm pull strimzi/strimzi-kafka-operator --version 0.42.0

tar -zxf strimzi-kafka-operator-helm-3-chart-0.42.0.tgz

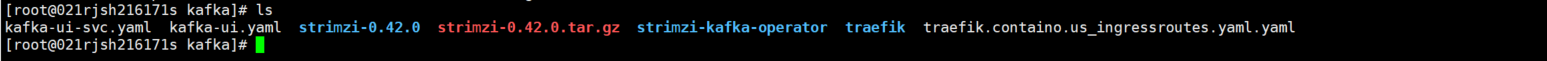

ls

helm install strimzi ./strimzi-kafka-operator -n kafka --create-namespace

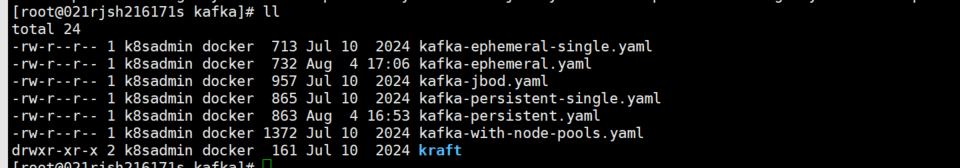

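Reviewing the example files

The official Strimzi repository provides example files for a variety of scenarios. The manifests can be downloaded from: https://github.com/strimzi/strimzi-kafka-operator/releases

Download strimzi-0.42.0.tar.gz and extract it with tar:

tar -zxf strimzi-0.42.0.tar.gz

The Kafka examples are then located in:

/root/lq-service/kafka/strimzi-0.42.0/examples/kafka

kafka-persistent.yaml: deploys a persistent cluster with three ZooKeeper nodes and three Kafka nodes. (Recommended)

kafka-jbod.yaml: deploys a persistent cluster with three ZooKeeper nodes and three Kafka nodes, each Kafka node using multiple persistent volumes.

kafka-persistent-single.yaml: deploys a persistent cluster with a single ZooKeeper node and a single Kafka node.

kafka-ephemeral.yaml: deploys an ephemeral cluster with three ZooKeeper nodes and three Kafka nodes.

kafka-ephemeral-single.yaml: deploys an ephemeral cluster with three ZooKeeper nodes and one Kafka node.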

Creating the PVC resources

[root@tiaoban kafka]# cat kafka-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-my-cluster-zookeeper-0
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-my-cluster-zookeeper-1
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-my-cluster-zookeeper-2
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-0-my-cluster-kafka-0
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-0-my-cluster-kafka-1
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: data-0-my-cluster-kafka-2
  namespace: kafka
spec:
  storageClassName: nfs-client
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi
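The six claims above differ only in their names, so the same file could also be generated with a loop. This is a sketch rather than part of the original procedure; `gen_pvcs` and its parameter order are hypothetical, and the naming pattern is simply copied from the manifest above.

```shell
# Hypothetical generator: emit one PVC manifest per ZooKeeper and Kafka replica.
# Arguments: namespace, cluster name, replica count, storage class, size.
gen_pvcs() {
  namespace="$1"; cluster="$2"; replicas="$3"; storage_class="$4"; size="$5"
  first=1
  # Name prefixes follow the pattern used in kafka-pvc.yaml above.
  for prefix in "data-${cluster}-zookeeper" "data-0-${cluster}-kafka"; do
    i=0
    while [ "$i" -lt "$replicas" ]; do
      # Separate documents with '---', but not before the first one.
      [ "$first" -eq 1 ] || echo "---"
      first=0
      cat <<EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ${prefix}-${i}
  namespace: ${namespace}
spec:
  storageClassName: ${storage_class}
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
      i=$((i + 1))
    done
  done
}

gen_pvcs kafka my-cluster 3 nfs-client 100Gi
```

Redirecting the output to kafka-pvc.yaml gives a file equivalent to the one shown, which can then be applied with kubectl apply -f kafka-pvc.yaml.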

Deploying Kafka and ZooKeeper

Based on the kafka-persistent.yaml example from the official repository, deploy a persistent cluster with three ZooKeeper nodes and three Kafka nodes. The contents of kafka-persistent.yaml:

apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    version: 3.7.1
    replicas: 3
    listeners:
      - name: plain
        port: 9092
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
    config:
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 2
      default.replication.factor: 3
      min.insync.replicas: 2
      inter.broker.protocol.version: "3.7"
    storage:
      type: jbod
      volumes:
        - id: 0
          type: persistent-claim
          size: 10Gi
          deleteClaim: false
  zookeeper:
    replicas: 3
    storage:
      type: persistent-claim
      size: 10Gi
      deleteClaim: false
  entityOperator:
    topicOperator: {}
    userOperator: {}

kubectl apply -f kafka-persistent.yaml -n kafka
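The replication settings in this manifest depend on each other: the replication factor cannot exceed the number of brokers, and min.insync.replicas should stay below the replication factor so that producers using acks=all can keep writing when a broker is down. The following sketch checks those relationships for the values used here; `check_replication` is a hypothetical helper, not a Kafka or Strimzi tool.

```shell
# Hypothetical pre-flight check for the replication settings in kafka-persistent.yaml.
# Arguments: broker count, replication factor, min.insync.replicas.
check_replication() {
  brokers="$1"; replication_factor="$2"; min_isr="$3"
  if [ "$replication_factor" -gt "$brokers" ]; then
    echo "replication factor $replication_factor exceeds broker count $brokers"
    return 1
  fi
  if [ "$min_isr" -ge "$replication_factor" ]; then
    echo "min.insync.replicas $min_isr leaves no room for a broker outage"
    return 1
  fi
  echo "ok: tolerates $((replication_factor - min_isr)) unavailable replica(s) for acks=all producers"
}

# Values taken from the manifest: replicas: 3, default.replication.factor: 3, min.insync.replicas: 2
check_replication 3 3 2
```

With three brokers, a replication factor of 3 and min.insync.replicas of 2, one broker can be unavailable without blocking acks=all writes.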

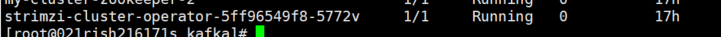

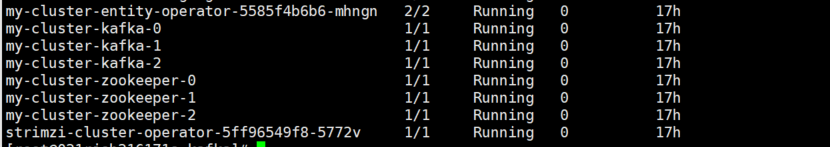

kubectl get po -n kafka

Verifying access

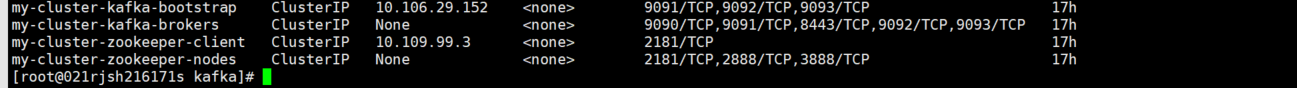

Check the resources: the related pods and Services have been created successfully.

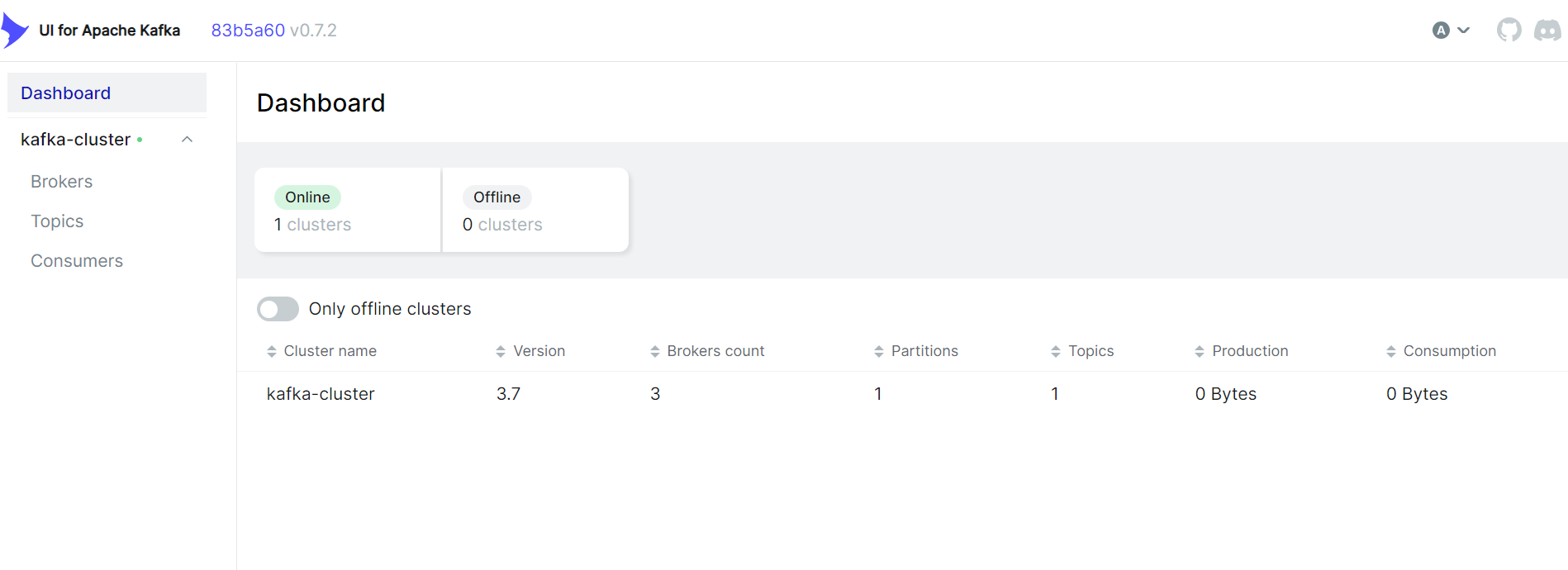

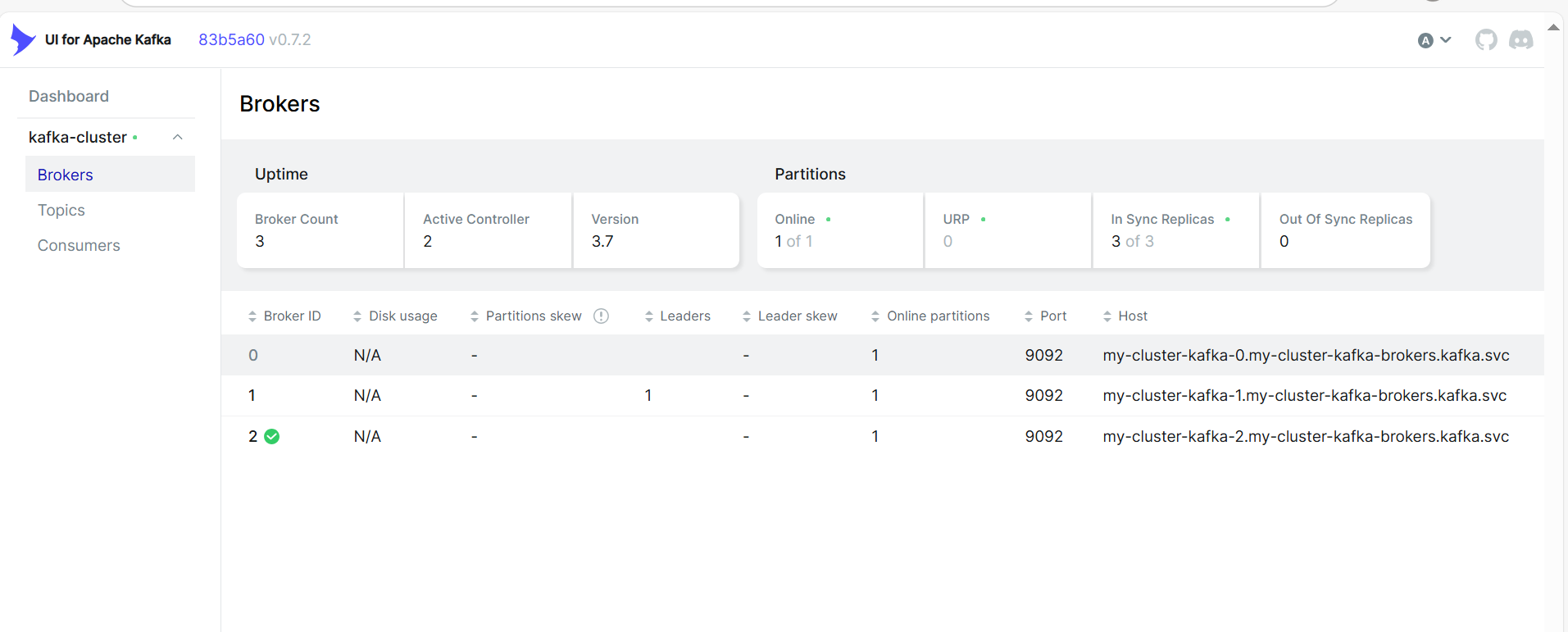

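Deploying kafka-ui

Create a ConfigMap and an ingress resource, and set the Kafka connection address in the ConfigMap. This guide uses Traefik as the example and creates an IngressRoute so that the UI can be reached by domain name.

The Traefik IngressRoute CRD has to be applied first (traefik.containo.us_ingressroutes.yaml):

URL: https://raw.githubusercontent.com/traefik/traefik/v2.6/docs/content/reference/dynamic-configuration/traefik.containo.us_ingressroutes.yaml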

[root@021rjsh216171s kafka]# cat kafka-ui.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: kafka-ui-helm-values
  namespace: kafka
data:
  KAFKA_CLUSTERS_0_NAME: "kafka-cluster"
  KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: "my-cluster-kafka-brokers.kafka.svc:9092"
  AUTH_TYPE: "DISABLED"
  MANAGEMENT_HEALTH_LDAP_ENABLED: "FALSE"
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: kafka-ui
  namespace: kafka
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`kafka-ui.local.com`)  # domain name
      kind: Rule
      services:
        - name: kafka-ui
          port: 80
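The bootstrap address in the ConfigMap is built from the Kafka cluster name, the namespace and the listener port. As a rough sketch, assuming Strimzi's usual Service naming (a `<cluster>-kafka-bootstrap` Service plus a headless `<cluster>-kafka-brokers` Service), the candidate addresses can be printed like this; `kafka_service_addresses` is a hypothetical helper.

```shell
# Hypothetical helper: print the in-cluster addresses of the Services
# that Strimzi is assumed to create for a Kafka cluster.
# Arguments: cluster name, namespace, listener port.
kafka_service_addresses() {
  cluster="$1"; namespace="$2"; port="$3"
  # Service intended for client bootstrap connections
  printf '%s-kafka-bootstrap.%s.svc:%s\n' "$cluster" "$namespace" "$port"
  # Headless Service that resolves to the individual broker pods
  printf '%s-kafka-brokers.%s.svc:%s\n' "$cluster" "$namespace" "$port"
}

kafka_service_addresses my-cluster kafka 9092
```

The second line matches the value used in the ConfigMap above. Running kubectl get svc -n kafka shows which of these Services actually exist in the cluster.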

Deploy kafka-ui with Helm and point it at the ConfigMap:

helm repo add kafka-ui https://provectus.github.io/kafka-ui

helm install kafka-ui kafka-ui/kafka-ui -n kafka --set existingConfigMap="kafka-ui-helm-values"
