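Contents

Google Colab notebook (optional)

Prepare the data: parsing data from a text file

Write the algorithm: implementing kNN

Analyze the data: creating scatter plots with Matplotlib

Prepare the data: normalizing numeric values

Test the algorithm: validating the classifier as a complete program

Use the algorithm: building a complete, usable system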

Google Colab notebook (optional)

    from google.colab import drive

    drive.mount("/content/drive")

Output:

    Mounted at /content/drive

Prepare the data: parsing data from a text file

    from numpy import zeros

    def file2matrix(filename):
        # Read every line first so the matrix can be sized before it is filled.
        with open(filename) as fr:
            arrayOfLines = fr.readlines()
        numberOfLines = len(arrayOfLines)
        returnMat = zeros((numberOfLines, 3))
        classLabelVector = []
        index = 0
        for line in arrayOfLines:
            line = line.strip()
            listFromLine = line.split('\t')
            # The first three columns are features; the last one is the integer class label.
            returnMat[index, :] = listFromLine[0:3]
            classLabelVector.append(int(listFromLine[-1]))
            index += 1
        return returnMat, classLabelVector

    datingDataMat, datingLabels = file2matrix('/content/drive/MyDrive/MachineLearning/機器學習/k-近鄰算法/使用k-近鄰算法改進約會網站的配對效果/datingTestSet2.txt')

    datingDataMat

Output:

    array([[4.0920000e+04, 8.3269760e+00, 9.5395200e-01],
           [1.4488000e+04, 7.1534690e+00, 1.6739040e+00],
           [2.6052000e+04, 1.4418710e+00, 8.0512400e-01],
           ...,
           [2.6575000e+04, 1.0650102e+01, 8.6662700e-01],
           [4.8111000e+04, 9.1345280e+00, 7.2804500e-01],
           [4.3757000e+04, 7.8826010e+00, 1.3324460e+00]])

    datingLabels[:10]

Output:

    [3, 2, 1, 1, 1, 1, 3, 3, 1, 3]
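As a cross-check on the parser, the same split can be sketched with `numpy.loadtxt`. This is an alternative, not the method used above. It assumes the file is tab-separated with three numeric feature columns followed by an integer label, which is what `file2matrix` implies. The three sample rows reuse values from the printed `datingDataMat` and `datingLabels`; the temporary file and the name `load_dating_file` are illustrative only.

```python
import os
import tempfile

import numpy as np


def load_dating_file(path):
    """Hypothetical helper: split a tab-separated file into features and labels."""
    data = np.loadtxt(path, delimiter="\t", ndmin=2)
    features = data[:, :3]                      # first three columns
    labels = data[:, -1].astype(int).tolist()   # last column as Python ints
    return features, labels


# Three rows in the assumed layout, using values printed above.
sample = (
    "40920\t8.326976\t0.953952\t3\n"
    "14488\t7.153469\t1.673904\t2\n"
    "26052\t1.441871\t0.805124\t1\n"
)

with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
    tmp.write(sample)
    sample_path = tmp.name

features, labels = load_dating_file(sample_path)
os.remove(sample_path)

print(features.shape)
print(labels)
```

`loadtxt` converts the text to floats in one pass, so the explicit loop and index counter are not needed in this variant.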

Write the algorithm: implementing kNN
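The classifier in this section labels a query point by majority vote among its k nearest training rows, measured by Euclidean distance. The following is a minimal sketch of that idea on toy data, written with NumPy broadcasting and `collections.Counter` rather than `tile` and a hand-rolled dictionary. The name `knn_classify` and the four toy points are assumptions made for illustration.

```python
from collections import Counter

import numpy as np


def knn_classify(query, data, labels, k):
    """Sketch of kNN: majority label among the k nearest rows (Euclidean)."""
    # Broadcasting subtracts the query from every row; no tile() needed.
    distances = np.sqrt(((data - query) ** 2).sum(axis=1))
    nearest = distances.argsort()[:k]
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two points near (1, 1) and two near (0, 0).
group = np.array([[1.0, 1.1], [1.0, 1.0], [0.0, 0.0], [0.0, 0.1]])
group_labels = ["A", "A", "B", "B"]

print(knn_classify(np.array([0.0, 0.0]), group, group_labels, k=3))  # B
print(knn_classify(np.array([0.9, 1.0]), group, group_labels, k=3))  # A
```

With k=3, each query has two neighbors from its own cluster and one from the other, so the vote is 2 to 1 in both cases.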

    from numpy import *
    import operator

    def classify0(inX, dataSet, labels, k):
        dataSetSize = dataSet.shape[0]
        # Euclidean distance from inX to every row of dataSet.
        diffMat = tile(inX, (dataSetSize, 1)) - dataSet
        sqDiffMat = diffMat ** 2
        sqDistances = sqDiffMat.sum(axis=1)
        distances = sqDistances ** 0.5
        # Row indices ordered from nearest to farthest.
        sortedDistIndicies = distances.argsort()
        classCount = {}
        # Tally the labels of the k nearest rows.
        for i in range(k):
            voteIlabel = labels[sortedDistIndicies[i]]
            classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1
        # Return the label with the most votes.
        sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
        return sortedClassCount[0][0]

Analyze the data: creating scatter plots with Matplotlib

import?matplotlib

import?matplotlib.pyplot?as?plt

fig?=?plt.figure()

ax?=?fig.add_subplot(111)

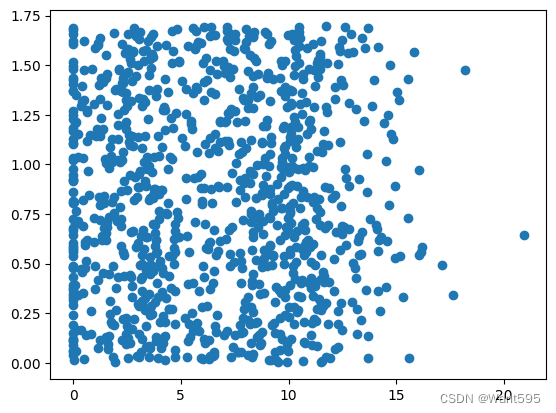

ax.scatter(datingDataMat[:,?1],?datingDataMat[:,?2])

plt.show()

?

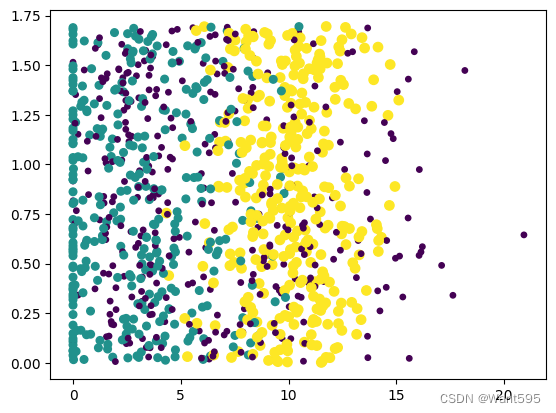

    import matplotlib
    import matplotlib.pyplot as plt

    fig = plt.figure()
    ax = fig.add_subplot(111)
    # The third and fourth arguments set marker size and color, both scaled by class label.
    ax.scatter(datingDataMat[:, 1], datingDataMat[:, 2], 15.0*array(datingLabels), 15.0*array(datingLabels))
    plt.show()

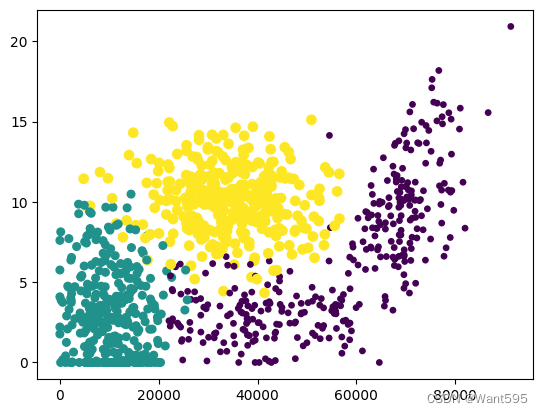

    import matplotlib
    import matplotlib.pyplot as plt

    fig = plt.figure()
    ax = fig.add_subplot(111)
    # Same size and color encoding, now plotting columns 0 and 1.
    ax.scatter(datingDataMat[:, 0], datingDataMat[:, 1], 15.0*array(datingLabels), 15.0*array(datingLabels))
    plt.show()

Prepare the data: normalizing numeric values

    def autoNorm(dataSet):
        # Column-wise minimum, maximum, and range.
        minVals = dataSet.min(0)
        maxVals = dataSet.max(0)
        ranges = maxVals - minVals
        normDataSet = zeros(shape(dataSet))
        m = dataSet.shape[0]
        # newValue = (oldValue - min) / (max - min), applied to every row.
        normDataSet = dataSet - tile(minVals, (m, 1))
        normDataSet = normDataSet/tile(ranges, (m, 1))
        return normDataSet, ranges, minVals

    normMat, ranges, minVals = autoNorm(datingDataMat)

    normMat

Output:

    array([[0.44832535, 0.39805139, 0.56233353],
           [0.15873259, 0.34195467, 0.98724416],
           [0.28542943, 0.06892523, 0.47449629],
           ...,
           [0.29115949, 0.50910294, 0.51079493],
           [0.52711097, 0.43665451, 0.4290048 ],
           [0.47940793, 0.3768091 , 0.78571804]])

    ranges

Output:

    array([9.1273000e+04, 2.0919349e+01, 1.6943610e+00])

    minVals

Output:

    array([0.      , 0.      , 0.001156])
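The normalization above maps each column to the range 0 to 1 with (value - min) / (max - min). The following is a minimal sketch of the same formula using NumPy broadcasting instead of `tile`. The name `min_max_normalize` and the toy matrix are illustrative assumptions, and the sketch assumes no column is constant (a zero range would divide by zero).

```python
import numpy as np


def min_max_normalize(data):
    """Scale each column to [0, 1] via (value - min) / (max - min)."""
    min_vals = data.min(axis=0)
    ranges = data.max(axis=0) - min_vals
    # Broadcasting applies the per-column statistics to every row.
    return (data - min_vals) / ranges, ranges, min_vals


# Hypothetical toy matrix with columns on very different scales.
toy = np.array([[1000.0, 2.0], [3000.0, 4.0], [5000.0, 10.0]])
normalized, toy_ranges, toy_mins = min_max_normalize(toy)
print(normalized)
```

After scaling, both columns contribute comparably to a Euclidean distance, whereas in the raw data the first column would dominate.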

Test the algorithm: validating the classifier as a complete program

    def datingClassTest():
        hoRatio = 0.1  # hold out the first 10% of the rows as the test set
        datingDataMat, datingLabels = file2matrix('/content/drive/MyDrive/MachineLearning/機器學習/k-近鄰算法/使用k-近鄰算法改進約會網站的配對效果/datingTestSet2.txt')
        normMat, ranges, minVals = autoNorm(datingDataMat)
        m = normMat.shape[0]
        numTestVecs = int(m*hoRatio)
        errorCount = 0
        for i in range(numTestVecs):
            # Classify each held-out row against the remaining rows with k = 3.
            classifierResult = classify0(normMat[i, :], normMat[numTestVecs:m, :], datingLabels[numTestVecs:m], 3)
            print("the classifierResult came back with: %d, the real answer is: %d" % (classifierResult, datingLabels[i]))
            if (classifierResult != datingLabels[i]):
                errorCount += 1
        print("the total error rate is: %f" % (errorCount/float(numTestVecs)))

    datingClassTest()

Output:

    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 3, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 3
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 3, the real answer is: 3
    the classifierResult came back with: 2, the real answer is: 2
    the classifierResult came back with: 1, the real answer is: 1
    the classifierResult came back with: 3, the real answer is: 1
    the total error rate is: 0.050000
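The reported error rate is the number of misclassified test vectors divided by the number tested; in the run above, 5 of the 100 predictions differ from the true label, giving 0.05. A minimal sketch of that calculation, which also reports which positions were wrong, might look like the following. The name `error_rate` and the short example lists are assumptions for illustration and are not taken from the run.

```python
def error_rate(predicted, actual):
    """Return the misclassified fraction and the positions of the mistakes."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    wrong = [i for i, (p, a) in enumerate(zip(predicted, actual)) if p != a]
    return len(wrong) / len(actual), wrong


# Hypothetical predictions and labels, not taken from the run above.
rate, wrong = error_rate([3, 2, 1, 3, 1], [3, 2, 1, 2, 1])
print(rate, wrong)
```

Keeping the indices of the mistakes makes it easier to inspect the rows the classifier gets wrong, for example when trying different values of k.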

Use the algorithm: building a complete, usable system

    def classifyPerson():
        resultList = ['not at all', 'in small doses', 'in large doses']
        percentTats = float(input("percentage of time spent playing video games?"))
        ffMiles = float(input("frequent flier miles earned per year?"))
        iceCream = float(input("liters of ice cream consumed per year?"))
        datingDataMat, datingLabels = file2matrix('/content/drive/MyDrive/MachineLearning/機器學習/k-近鄰算法/使用k-近鄰算法改進約會網站的配對效果/datingTestSet2.txt')
        normMat, ranges, minVals = autoNorm(datingDataMat)
        # Feature order must match the columns of the training data.
        inArr = array([ffMiles, percentTats, iceCream])
        # Normalize the query with the training set's minVals and ranges before classifying.
        classifierResult = classify0((inArr - minVals)/ranges, normMat, datingLabels, 3)
        print("You will probably like this person:", resultList[classifierResult - 1])

    classifyPerson()

Output:

    percentage of time spent playing video games?10
    frequent flier miles earned per year?10000
    liters of ice cream consumed per year?0.5
    You will probably like this person: in small doses
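A new query has to be scaled with the training set's `minVals` and `ranges`, not with statistics of its own, so that it lands in the same coordinate system as `normMat`. The following sketch applies that step to the inputs from the session above (10000 miles, 10 percent, 0.5 liters), using the values printed earlier for `ranges` and `minVals`. The variable names are illustrative, and the classification step itself is omitted because it needs the full data file.

```python
import numpy as np

# Statistics copied from the printed ranges and minVals.
ranges = np.array([9.1273e+04, 2.0919349e+01, 1.694361e+00])
min_vals = np.array([0.0, 0.0, 0.001156])

# Feature order must match the training columns:
# flier miles, percentage of time gaming, liters of ice cream.
query = np.array([10000.0, 10.0, 0.5])
normalized_query = (query - min_vals) / ranges
print(normalized_query)
```

All three components fall between 0 and 1, as expected for a query that lies inside the range of the training data.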
