Contents

1. Introduction

2. Background

3. Preparation

4. Code changes

5. Results

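1. Introduction

I had been busy writing documentation for a while, and until now my work was all classification, detection, and segmentation. After coming across tracking algorithms, I spent a few days finding and debugging code. The results look noticeably more impressive than plain detection.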

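2. Background

DeepSORT (Simple Online and Realtime Tracking with a Deep Association Metric) is a deep-learning-based multi-object tracking algorithm. It combines deep-learning object detection with traditional trajectory tracking to track multiple moving targets accurately and stably in complex scenes. The following introduces DeepSORT's core idea and characteristics:

Core idea: DeepSORT combines deep-learning object detection with trajectory tracking to achieve multi-object tracking. First, a deep-learning detector (such as YOLO or Faster R-CNN) detects all objects in each frame, and appearance features are extracted for them. Then traditional tracking techniques (a Kalman filter and track association) link the targets across consecutive frames, producing a motion trajectory for each target.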
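The association step described above can be sketched in a few lines. This is a minimal illustration, not DeepSORT's actual matching code: it scores track/detection pairs by IoU only and finds the best one-to-one assignment by brute force over permutations, which is the same optimum the Hungarian algorithm finds efficiently. The names `iou`, `associate`, and `min_iou` are hypothetical, and the sketch assumes there are at least as many detections as tracks and only a handful of objects.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over union of two boxes in (x1, y1, x2, y2) format."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Return {track_index: detection_index} maximizing the total IoU.

    Brute force over all assignments; assumes len(tracks) <= len(detections).
    Pairs whose IoU is below min_iou are treated as unmatched.
    """
    best, best_score = (), -1.0
    for perm in permutations(range(len(detections)), len(tracks)):
        score = sum(iou(tracks[t], detections[d]) for t, d in enumerate(perm))
        if score > best_score:
            best, best_score = perm, score
    return {t: d for t, d in enumerate(best)
            if iou(tracks[t], detections[d]) >= min_iou}


# Boxes from the previous frame (tracks) and the current frame (detections).
tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (100, 100, 110, 110)]
matches = associate(tracks, detections)
print(matches)
```

The unmatched third detection would start a new track in a real tracker. DeepSORT additionally mixes appearance features into the cost, which this sketch leaves out.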

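Characteristics:

- Multi-object tracking: DeepSORT focuses on tracking several targets at once, which suits scenarios that need to monitor and follow multiple objects simultaneously, such as traffic surveillance and crowd management.

- Deep features: by using a deep-learning model to extract each target's features, DeepSORT represents targets more accurately, which improves tracking accuracy and robustness.

- Track association: DeepSORT uses traditional track association techniques to link targets across frames, so that tracking stays accurate when objects appear, disappear, or overlap.

- Real-time performance: DeepSORT is designed for real-time use and can track targets efficiently in a video stream, which suits applications with real-time requirements.

Algorithm details worth knowing: detailed introduction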

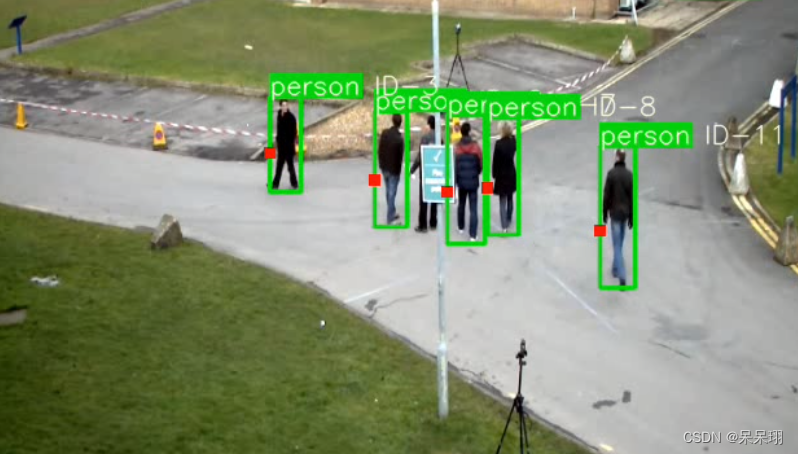

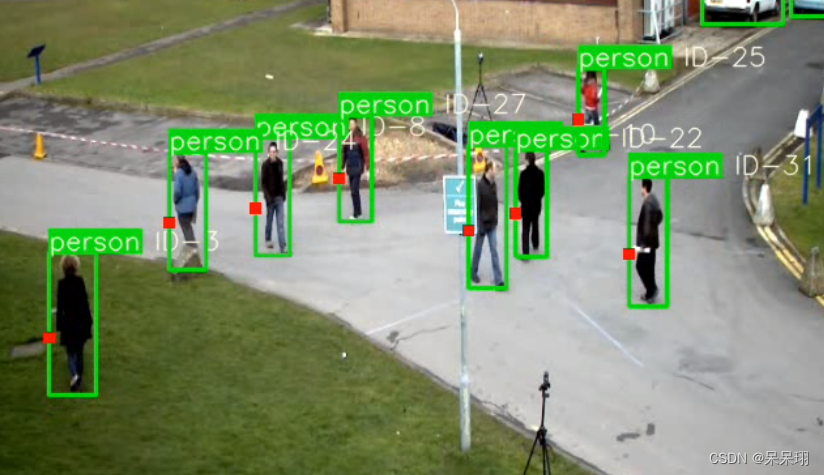

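- Today's mainstream object tracking algorithms are based on the Tracking-by-Detection strategy, that is, tracking is built on top of object detection results. DeepSORT uses this strategy. The video above shows DeepSORT tracking a crowd; the number at the top-left corner of each bbox is the unique ID that identifies a person.

This raises a question: the same person appears at different positions at different moments in the video, so how are those appearances linked together? The answer is the Hungarian algorithm and the Kalman filter.

The Hungarian algorithm solves the matching problem: it tells us whether a target in the current frame is the same as a target in the previous frame. The Kalman filter predicts a target's current position from its state at the previous moment, and by combining that prediction with the measurement it can estimate the position more accurately than the sensor alone (in object tracking the sensor is the detector, for example YOLO).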
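To make the Kalman filter's predict and update steps concrete, here is a minimal one-dimensional constant-velocity sketch in NumPy. It is a simplification under stated assumptions: DeepSORT's own filter tracks an 8-dimensional box state, while this example tracks a single coordinate, and the noise values `Q` and `R` as well as the measurements are made up for illustration.

```python
import numpy as np


def kf_predict(x, P, F, Q):
    """Propagate the state mean x and covariance P one step forward."""
    x = F @ x
    P = F @ P @ F.T + Q
    return x, P


def kf_update(x, P, z, H, R):
    """Correct the prediction with a measurement z."""
    y = z - H @ x                    # innovation (measurement residual)
    S = H @ P @ H.T + R              # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)   # Kalman gain
    x = x + K @ y
    P = (np.eye(len(x)) - K @ H) @ P
    return x, P


dt = 1.0
F = np.array([[1.0, dt], [0.0, 1.0]])  # state is [position, velocity]
H = np.array([[1.0, 0.0]])             # the detector only measures position
Q = 0.01 * np.eye(2)                   # process noise (assumed value)
R = np.array([[1.0]])                  # measurement noise (assumed value)

x = np.array([0.0, 0.0])               # initial guess
P = 10.0 * np.eye(2)                   # large initial uncertainty
for z in [1.0, 2.1, 2.9, 4.2, 5.0]:    # noisy positions, one per frame
    x, P = kf_predict(x, P, F, Q)
    x, P = kf_update(x, P, np.array([z]), H, R)

print("position, velocity:", x)
print("position variance:", P[0, 0], "vs. measurement variance:", R[0, 0])
```

After a few frames the filtered position variance drops below the measurement variance, which is the sense in which the filter estimates position better than the detector alone.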

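3. Preparation

Base code: Teacher Huang's GitHub repository. I followed this blogger's version and made the corresponding changes.

4. Code changes

Two .py files need to be changed.

(1) main.py: the YOLO detector runs inference with ONNX. For the ONNX model, see my post on converting yolov5 to rknn (you are smart enough to figure it out).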

    import cv2
    import torch
    import numpy as np
    import onnxruntime as rt


    def sigmoid(x):
        return 1 / (1 + np.exp(-x))


    def nms_boxes(boxes, scores):
        """Suppress non-maximal boxes.

        # Arguments
            boxes: ndarray, boxes of objects.
            scores: ndarray, scores of objects.

        # Returns
            keep: ndarray, index of effective boxes.
        """
        x = boxes[:, 0]
        y = boxes[:, 1]
        w = boxes[:, 2] - boxes[:, 0]
        h = boxes[:, 3] - boxes[:, 1]

        areas = w * h
        order = scores.argsort()[::-1]

        keep = []
        while order.size > 0:
            i = order[0]
            keep.append(i)

            xx1 = np.maximum(x[i], x[order[1:]])
            yy1 = np.maximum(y[i], y[order[1:]])
            xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
            yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

            w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
            h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
            inter = w1 * h1

            ovr = inter / (areas[i] + areas[order[1:]] - inter)
            inds = np.where(ovr <= 0.45)[0]  # keep boxes below the IoU threshold
            order = order[inds + 1]
        keep = np.array(keep)
        return keep


    def process(input, mask, anchors):
        # Decode one YOLOv5 output head of shape (grid_h, grid_w, 3, 85).
        anchors = [anchors[i] for i in mask]
        grid_h, grid_w = map(int, input.shape[0:2])

        box_confidence = sigmoid(input[..., 4])
        box_confidence = np.expand_dims(box_confidence, axis=-1)

        box_class_probs = sigmoid(input[..., 5:])

        box_xy = sigmoid(input[..., :2])*2 - 0.5

        col = np.tile(np.arange(0, grid_w), grid_w).reshape(-1, grid_w)
        row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_h)
        col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
        row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
        grid = np.concatenate((col, row), axis=-1)
        box_xy += grid
        # img_size is the module-level value set in the __main__ block below
        box_xy *= int(img_size/grid_h)

        box_wh = pow(sigmoid(input[..., 2:4])*2, 2)
        box_wh = box_wh * anchors

        box = np.concatenate((box_xy, box_wh), axis=-1)

        return box, box_confidence, box_class_probs


    def filter_boxes(boxes, box_confidences, box_class_probs):
        """Filter boxes with box threshold. It's a bit different with origin yolov5 post process!

        # Arguments
            boxes: ndarray, boxes of objects.
            box_confidences: ndarray, confidences of objects.
            box_class_probs: ndarray, class_probs of objects.

        # Returns
            boxes: ndarray, filtered boxes.
            classes: ndarray, classes for boxes.
            scores: ndarray, scores for boxes.
        """
        box_classes = np.argmax(box_class_probs, axis=-1)
        box_class_scores = np.max(box_class_probs, axis=-1)
        pos = np.where(box_confidences[..., 0] >= 0.5)

        boxes = boxes[pos]
        classes = box_classes[pos]
        scores = box_class_scores[pos]

        return boxes, classes, scores


    def yolov5_post_process(input_data):
        masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
        anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],
                   [59, 119], [116, 90], [156, 198], [373, 326]]

        boxes, classes, scores = [], [], []
        for input, mask in zip(input_data, masks):
            b, c, s = process(input, mask, anchors)
            b, c, s = filter_boxes(b, c, s)
            boxes.append(b)
            classes.append(c)
            scores.append(s)

        boxes = np.concatenate(boxes)
        boxes = xywh2xyxy(boxes)
        classes = np.concatenate(classes)
        scores = np.concatenate(scores)

        # run NMS separately for each class
        nboxes, nclasses, nscores = [], [], []
        for c in set(classes):
            inds = np.where(classes == c)
            b = boxes[inds]
            c = classes[inds]
            s = scores[inds]

            keep = nms_boxes(b, s)

            nboxes.append(b[keep])
            nclasses.append(c[keep])
            nscores.append(s[keep])

        if not nclasses and not nscores:
            return None, None, None

        boxes = np.concatenate(nboxes)
        classes = np.concatenate(nclasses)
        scores = np.concatenate(nscores)

        return boxes, classes, scores


    def letterbox(img, new_shape=(640, 640), color=(114, 114, 114), auto=True, scaleFill=False, scaleup=True, stride=32):
        # Resize and pad image while meeting stride-multiple constraints
        shape = img.shape[:2]  # current shape [height, width]
        if isinstance(new_shape, int):
            new_shape = (new_shape, new_shape)

        # Scale ratio (new / old)
        r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])
        if not scaleup:  # only scale down, do not scale up (for better test mAP)
            r = min(r, 1.0)

        # Compute padding
        ratio = r, r  # width, height ratios
        new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
        dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]  # wh padding
        if auto:  # minimum rectangle
            dw, dh = np.mod(dw, stride), np.mod(dh, stride)  # wh padding
        elif scaleFill:  # stretch
            dw, dh = 0.0, 0.0
            new_unpad = (new_shape[1], new_shape[0])
            ratio = new_shape[1] / shape[1], new_shape[0] / shape[0]  # width, height ratios

        dw /= 2  # divide padding into 2 sides
        dh /= 2

        if shape[::-1] != new_unpad:  # resize
            img = cv2.resize(img, new_unpad, interpolation=cv2.INTER_LINEAR)
        top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
        left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
        img = cv2.copyMakeBorder(img, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
        return img, ratio, (dw, dh)


    def clip_coords(boxes, img_shape):
        # Clip bounding xyxy bounding boxes to image shape (height, width)
        boxes[:, 0].clamp_(0, img_shape[1])  # x1
        boxes[:, 1].clamp_(0, img_shape[0])  # y1
        boxes[:, 2].clamp_(0, img_shape[1])  # x2
        boxes[:, 3].clamp_(0, img_shape[0])  # y2


    def xywh2xyxy(x):
        # Convert nx4 boxes from [x, y, w, h] to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right
        y = x.clone() if isinstance(x, torch.Tensor) else np.copy(x)
        y[:, 0] = x[:, 0] - x[:, 2] / 2  # top left x
        y[:, 1] = x[:, 1] - x[:, 3] / 2  # top left y
        y[:, 2] = x[:, 0] + x[:, 2] / 2  # bottom right x
        y[:, 3] = x[:, 1] + x[:, 3] / 2  # bottom right y
        return y


    CLASSES = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',
               'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',
               'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',
               'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',
               'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple', 'sandwich',
               'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed',
               'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone', 'microwave', 'oven', 'toaster', 'sink',
               'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush']


    def preprocess(img, img_size):
        img0 = img.copy()
        img = letterbox(img, new_shape=img_size)[0]
        img = img[:, :, ::-1].transpose(2, 0, 1)  # BGR -> RGB, HWC -> CHW
        img = np.ascontiguousarray(img).astype(np.float32)
        img = torch.from_numpy(img)
        img /= 255.0
        if img.ndimension() == 3:
            img = img.unsqueeze(0)
        return img0, img


    def draw(image, boxes, scores, classes):
        """Draw the boxes on the image.

        # Argument:
            image: original image.
            boxes: ndarray, boxes of objects in (x1, y1, x2, y2) format.
            scores: ndarray, scores of objects.
            classes: ndarray, classes of objects.
        """
        for box, score, cl in zip(boxes, scores, classes):
            x1, y1, x2, y2 = box
            # print('class: {}, score: {}'.format(CLASSES[cl], score))
            # print('box coordinate x1,y1,x2,y2: [{}, {}, {}, {}]'.format(x1, y1, x2, y2))
            x1 = int(x1)
            y1 = int(y1)
            x2 = int(x2)
            y2 = int(y2)

            cv2.rectangle(image, (x1, y1), (x2, y2), (255, 0, 0), 2)
            cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),
                        (x1, y1 - 6),
                        cv2.FONT_HERSHEY_SIMPLEX,
                        0.6, (0, 0, 255), 2)


    def detect(im, img_size, sess, input_name, outputs_name):
        im0, img = preprocess(im, img_size)
        input_data = onnx_inference(img.numpy(), sess, input_name, outputs_name)
        boxes, classes, scores = yolov5_post_process(input_data)
        if boxes is not None:
            draw(im, boxes, scores, classes)
        cv2.imshow('demo', im)
        cv2.waitKey(1)


    def onnx_inference(img, sess, input_name, outputs_name):
        # Model inference: output node names, input node name, input data
        # (pay attention to the format of the node names!)
        outputs = sess.run(outputs_name, {input_name: img})
        input0_data = outputs[0]
        input1_data = outputs[1]
        input2_data = outputs[2]

        input0_data = input0_data.reshape([3, 80, 80, 85])
        input1_data = input1_data.reshape([3, 40, 40, 85])
        input2_data = input2_data.reshape([3, 20, 20, 85])

        input_data = list()
        input_data.append(np.transpose(input0_data, (1, 2, 0, 3)))
        input_data.append(np.transpose(input1_data, (1, 2, 0, 3)))
        input_data.append(np.transpose(input2_data, (1, 2, 0, 3)))

        return input_data


    def load_onnx_model():
        # forward inference with the ONNX model
        sess = rt.InferenceSession('./weights/modified_yolov5s.onnx')
        # input and output node names of the model; they can be inspected with netron
        input_name = 'images'
        outputs_name = ['396', '440', '484']
        return sess, input_name, outputs_name


    if __name__ == '__main__':
        # create onnx_model
        sess, input_name, outputs_name = load_onnx_model()
        # input_model_size
        img_size = 640
        # read video
        video = cv2.VideoCapture('./video/cut3.avi')
        print("Loaded video ...")
        frame_interval = 2  # process one frame out of every frame_interval frames
        frame_count = 0
        while True:
            # read one frame; stop when the video ends
            _, im = video.read()
            if im is None:
                break
            if frame_count % frame_interval == 0:
                # resize to the 640x640 model input (aspect ratio is not preserved)
                im = cv2.resize(im, (640, 640))
                # det_object
                detect(im, img_size, sess, input_name, outputs_name)
            frame_count += 1
        video.release()
        cv2.destroyAllWindows()

(2) Changes to feature_extractor.py:

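There are four inference cases here. ckpt.t7 is the ReID (re-identification) model. ReID is the technique of using an algorithm to find a queried target in an image gallery, so it is a sub-problem of image retrieval.

(1) Inference with a dynamic batch_size: several objects are usually detected, and in this project's ReID inference code the crops are joined with torch.cat into a batch of shape (n, 3, 128, 64), that is, n crops of 64x128 pixels. This requires an ONNX model with a dynamic batch dimension.

(2) What if I want a static model? No problem. The idea is simply to split the concatenated batch back apart, so each input has shape (1, 3, 128, 64), run inference on each crop separately, and then concatenate the results. Easy.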
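The split-then-concatenate idea for a fixed-batch model can be sketched as follows. This is an illustration only: `run_static` and `fake_model` are hypothetical names, and `fake_model` is a stand-in that averages each channel so the example runs without an ONNX file. In the real extractor the per-crop call would be the inference session's `run`.

```python
import numpy as np


def run_static(batch, infer_one):
    """Feed an (n, 3, 128, 64) batch to a model that only accepts batch size 1.

    infer_one takes a (1, 3, 128, 64) array and returns a (1, d) feature array.
    """
    outputs = [infer_one(batch[i:i + 1]) for i in range(batch.shape[0])]
    return np.concatenate(outputs, axis=0)  # stack back into (n, d)


def fake_model(x):
    """Hypothetical fixed-shape model: rejects anything but batch size 1."""
    assert x.shape == (1, 3, 128, 64)
    return x.mean(axis=(2, 3))  # (1, 3) "feature" per crop


crops = np.random.rand(5, 3, 128, 64).astype(np.float32)
features = run_static(crops, fake_model)
print(features.shape)
```

Slicing with `batch[i:i + 1]` keeps the leading batch dimension, so no reshape is needed before each call. The trade-off is one inference call per detected object instead of one call per frame.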

Important! Code for converting the ckpt file to ONNX:

    import os
    import cv2
    import time
    import argparse
    import torch
    import numpy as np
    from deep_sort import build_tracker
    from utils.draw import draw_boxes
    from utils.parser import get_config
    from tqdm import tqdm

    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument("--config_deepsort", type=str, default="./configs/deep_sort.yaml", help='Configure tracker')
        parser.add_argument("--cpu", dest="use_cuda", action="store_false", default=True, help='Run in CPU')
        args = parser.parse_args()
        cfg = get_config()
        cfg.merge_from_file(args.config_deepsort)
        use_cuda = args.use_cuda and torch.cuda.is_available()
        torch.set_grad_enabled(False)

        # the export below always runs on the CPU, regardless of use_cuda
        model = build_tracker(cfg, use_cuda=False)
        model.reid = True
        model.extractor.net.eval()

        device = 'cpu'
        output_onnx = 'deepsort.onnx'

        # ------------------------ export -----------------------------
        print("==> Exporting model to ONNX format at '{}'".format(output_onnx))
        input_names = ['input']
        output_names = ['output']

        # fixed input shape: batch 1, 3 channels, height 128, width 64
        input_tensor = torch.randn(1, 3, 128, 64, device=device)
        torch.onnx.export(model.extractor.net, input_tensor, output_onnx, export_params=True, verbose=False,
                          input_names=input_names, output_names=output_names, opset_version=13,
                          do_constant_folding=True)

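(3) What about converting to rknn? After ckpt.t7 is converted to ONNX, the graph contains a ReduceL2 node, which does not support quantization, so I converted to fp16 (this works on the RK3588; I do not know whether it works on the rk1808). I also tried deleting the last two nodes, and it did not seem to affect the results (inference used the cut ONNX model). If anyone understands why, please explain!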
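One possible reading of this observation, offered as an assumption rather than a confirmed explanation: if the two removed nodes are the network's final L2 normalization (a ReduceL2 followed by a division), and the tracker compares features with a cosine distance that normalizes its inputs itself, then removing the normalization from the network changes nothing downstream. The sketch below checks that property with NumPy on random vectors. The 512-dimensional feature size and the `cosine_distance` helper are illustrative and not taken from the project.

```python
import numpy as np


def cosine_distance(a, b):
    """Pairwise cosine distance; normalizes both inputs before comparing."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return 1.0 - a @ b.T


rng = np.random.default_rng(0)
raw = rng.normal(size=(4, 512))  # features without the final normalization
normalized = raw / np.linalg.norm(raw, axis=1, keepdims=True)  # with it

d_raw = cosine_distance(raw, raw)
d_norm = cosine_distance(normalized, normalized)
print(np.allclose(d_raw, d_norm))
```

If the matching step instead used a plain Euclidean distance on unnormalized features, the cut model would not be equivalent, so it is worth checking which metric the tracker is configured with.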

(4) rknn inference itself. I will not show it here; message me privately if you need it.

    import torch
    import torchvision.transforms as transforms
    import numpy as np
    import cv2
    # import onnxruntime as rt
    # from rknnlite.api import RKNNLite


    class Extractor(object):
        def __init__(self, model_path):
            self.model_path = model_path
            self.device = "cpu"
            self.size = (64, 128)
            self.norm = transforms.Compose([
                transforms.ToTensor(),
                transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
            ])

        def _preprocess(self, im_crops):
            """
            TODO:
                1. to float with scale from 0 to 1
                2. resize to (64, 128) as Market1501 dataset did
                3. concatenate to a numpy array
                4. to torch Tensor
                5. normalize
            """
            def _resize(im, size):
                return cv2.resize(im.astype(np.float32) / 255., size)

            im_batch = torch.cat([self.norm(_resize(im, self.size)).unsqueeze(0) for im in im_crops], dim=0).float()
            return im_batch

        def __call__(self, im_crops):
            im_batch = self._preprocess(im_crops)

            # All four variants are commented out as posted; uncomment exactly one.

            # sess = rt.InferenceSession(self.model_path)
            # input and output node names of the model; they can be inspected with netron
            # input_name = 'input'
            # outputs_name = ['output']

            # (1) dynamic batch output
            # features = sess.run(outputs_name, {input_name: im_batch.numpy()})
            # print('features:', np.array(features)[0, :, :].shape)
            # return np.array(features)[0, :, :]

            # (2) static batch output
            # sort_results = []
            # n = im_batch.numpy().shape[0]
            # for i in range(n):
            #     img = im_batch.numpy()[i, :, :].reshape(1, 3, 128, 64)
            #     feature = sess.run(outputs_name, {input_name: img})
            #     feature = np.array(feature)
            #     sort_results.append(feature)
            # features = np.concatenate(sort_results, axis=1)[0, :, :]
            # print(features.shape)
            # return np.array(features)

            # (3) static model output with the last two ONNX nodes removed
            # input_name = 'input'
            # outputs_name = ['204']
            # sort_results = []
            # n = im_batch.numpy().shape[0]
            # for i in range(n):
            #     img = im_batch.numpy()[i, :, :].reshape(1, 3, 128, 64)
            #     feature = sess.run(outputs_name, {input_name: img})
            #     feature = np.array(feature)
            #     sort_results.append(feature)
            # features = np.concatenate(sort_results, axis=1)[0, :, :]
            # print(features.shape)
            # return np.array(features)

            # (4) rknn model variant
            # rknn_lite = RKNNLite()
            # rknn_lite.load_rknn('./weights/ckpt_fp16.rknn')
            # ret = rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_0_1_2)
            # if ret != 0:
            #     print('Init runtime environment failed')
            #     exit(ret)
            # print('done')
            # sort_results = []
            # n = im_batch.numpy().shape[0]
            # for i in range(n):
            #     img = im_batch.numpy()[i, :, :].reshape(1, 3, 128, 64)
            #     # as posted, inference is called on self.model_path rather than rknn_lite
            #     feature = self.model_path.inference(inputs=[img])
            #     feature = np.array(feature)
            #     sort_results.append(feature)
            # features = np.concatenate(sort_results, axis=1)[0, :, :]
            # print(features.shape)
            # return np.array(features)

5. Results

ONNX conversion result (test video link)


Detection result
