1. Project showcase

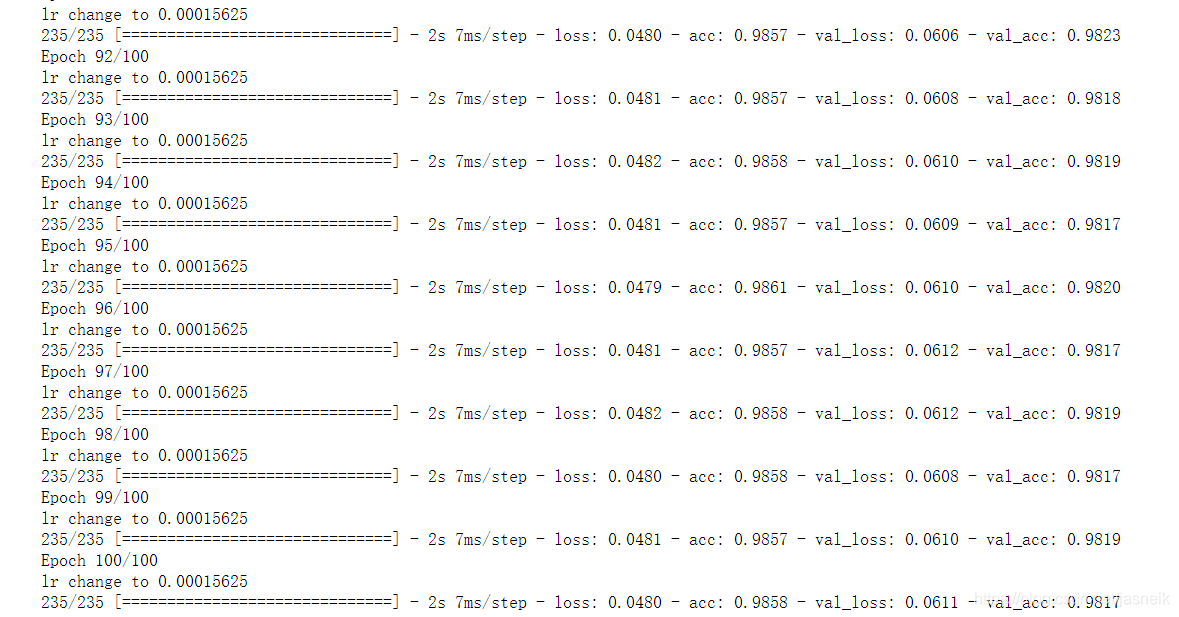

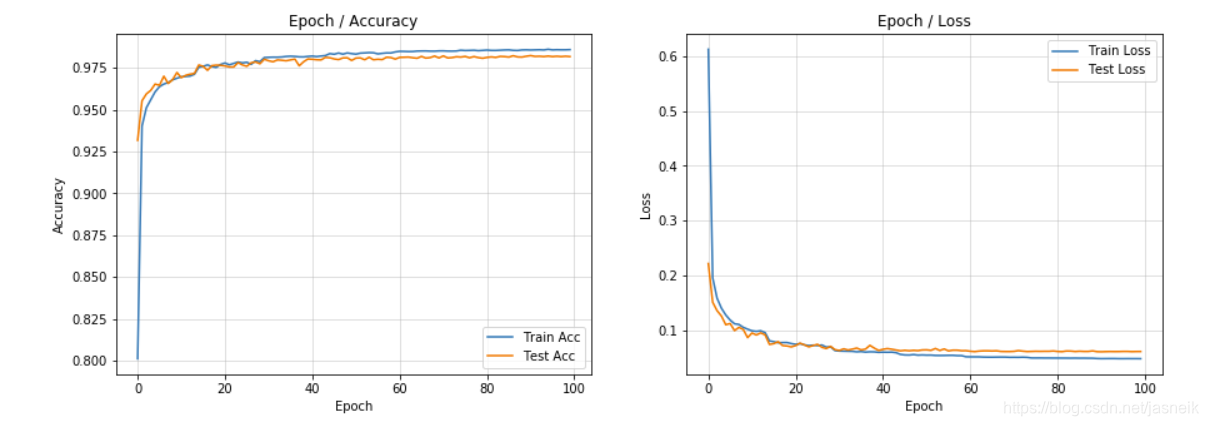

Here the validation accuracy reaches 0.9823. In fact, another training run, whose log appears further below, reaches 0.9839; I conservatively report 0.982.

2. Model parameters

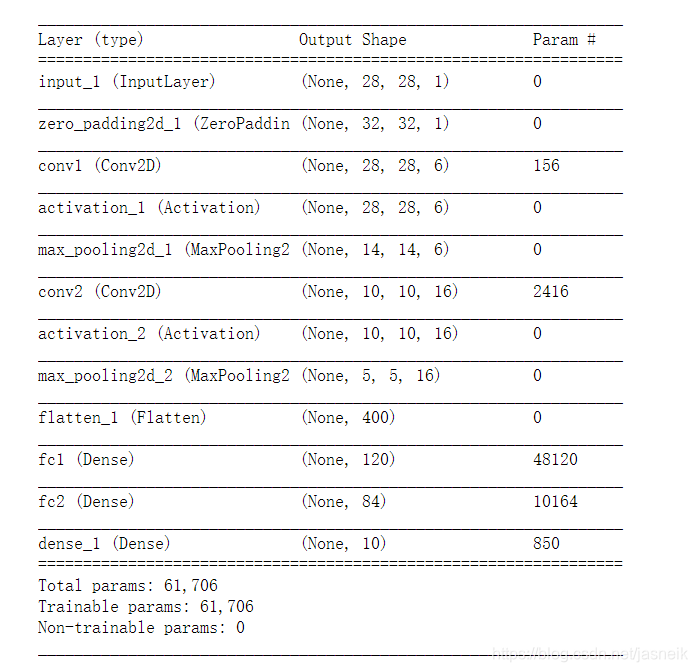

Let's first look at the structure and parameters of LeNet-5: it has 61,706 parameters.

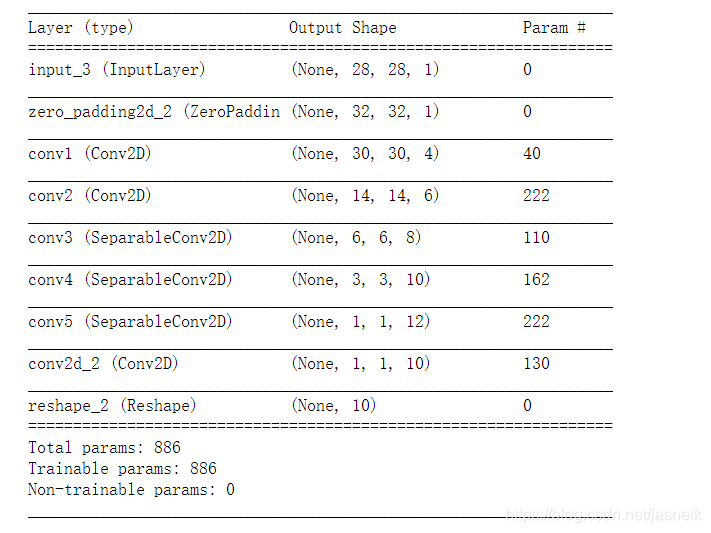

This is the model I wrote in Keras; as you can see, it has only 886 parameters.

Project code

Let's look at the model code. The model replaces conventional convolutions with depthwise separable convolutions.
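To make the saving concrete, here is a minimal sketch in plain Python (no Keras needed) that compares the parameter count of a standard 3x3 convolution with a depthwise separable one, using the channel sizes of conv3 to conv5 from the model code in this section. The helper names `conv_params` and `sep_conv_params` are made up for illustration, and the formulas assume one bias per output channel and a depth multiplier of 1, which are the Keras defaults.

```python
def conv_params(k, c_in, c_out):
    """Standard convolution: one k x k x c_in kernel per output channel, plus biases."""
    return k * k * c_in * c_out + c_out


def sep_conv_params(k, c_in, c_out):
    """Depthwise separable convolution: one k x k filter per input channel,
    then a 1 x 1 pointwise convolution that mixes channels, plus biases."""
    return k * k * c_in + c_in * c_out + c_out


# (name, input channels, output channels) for the three separable layers
layers = [("conv3", 6, 8), ("conv4", 8, 10), ("conv5", 10, 12)]

for name, c_in, c_out in layers:
    std = conv_params(3, c_in, c_out)
    sep = sep_conv_params(3, c_in, c_out)
    print(f"{name}: standard={std}, separable={sep}")

std_total = sum(conv_params(3, c_in, c_out) for _, c_in, c_out in layers)
sep_total = sum(sep_conv_params(3, c_in, c_out) for _, c_in, c_out in layers)
print(f"total: standard={std_total}, separable={sep_total}")
```

For these three layers the separable form needs 494 parameters where standard convolutions would need 2,262.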

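The padding here is mainly there to make the later downsampling work out evenly.

I use five convolution layers because with fewer layers the receptive field is small; adding convolution layers enlarges the receptive field (I think the receptive field matters more than model complexity in this case),

because the MNIST digits occupy roughly 70-85% of the image area.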
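The receptive-field argument can be checked with a little arithmetic. The sketch below uses the standard recurrence (at each layer the receptive field grows by kernel size minus one, multiplied by the accumulated stride) with the kernel sizes and strides from the model code in this section. The function name `receptive_field` is only illustrative.

```python
def receptive_field(layers):
    """Return the receptive field (in input pixels) after each layer.

    layers: list of (kernel_size, stride) tuples, in network order.
    """
    rf, jump = 1, 1  # start from one input pixel; jump = accumulated stride
    sizes = []
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
        sizes.append(rf)
    return sizes


# conv1..conv5: all 3x3 kernels, with strides 1, 2, 2, 2, 2
five_layers = [(3, 1), (3, 2), (3, 2), (3, 2), (3, 2)]
print(receptive_field(five_layers))      # [3, 5, 9, 17, 33]
print(receptive_field(five_layers[:3]))  # [3, 5, 9]
```

After five layers a single output unit sees 33 input pixels, enough to cover the whole 32x32 padded image, whereas three layers would only see a 9x9 patch.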

The code is commented fairly heavily, so you can study it on your own.

# I trained on a GPU. If training raises an error, change SeparableConv2D to Conv2D,
# train for 1 epoch first, then switch back to SeparableConv2D as shown below.

import keras
from keras.layers import Input, ZeroPadding2D, Conv2D, SeparableConv2D
from keras.models import Model

def my_network(input_shape=(32, 32, 1), classes=10):
    X_input = Input(input_shape)
    # The padding is mainly there to make the later downsampling work out evenly.
    # Five conv layers are used: with fewer layers the receptive field is small,
    # and adding conv layers enlarges it,
    # because the MNIST digits occupy roughly 70-85% of the image area.
    X = ZeroPadding2D((2, 2))(X_input)
    X = Conv2D(4, (3, 3), strides=(1, 1), padding='valid', activation='elu', name='conv1')(X)
    X = Conv2D(6, (3, 3), strides=(2, 2), padding='valid', activation='elu', name='conv2')(X)
    X = SeparableConv2D(8, (3, 3), strides=(2, 2), padding='valid', activation='elu', name='conv3')(X)
    X = SeparableConv2D(10, (3, 3), strides=(2, 2), padding='same', activation='elu', name='conv4')(X)
    X = SeparableConv2D(12, (3, 3), strides=(2, 2), padding='valid', activation='elu', name='conv5')(X)
    # Use a 1x1 (pointwise) convolution as the output layer; note the softmax activation.
    X = Conv2D(classes, (1, 1), strides=(1, 1), padding='same', activation='softmax')(X)
    X = keras.layers.Reshape((classes,))(X)
    model = Model(inputs=X_input, outputs=X, name='my_network')
    return model

model_con = my_network(input_shape=(28, 28, 1), classes=10)

# The lr set here can be omitted if a learning-rate decay schedule is used.
# Newer Keras versions name this argument learning_rate instead of lr.
optimizer = keras.optimizers.Adam(lr=0.001)
# categorical_crossentropy expects one-hot encoded labels.
model_con.compile(optimizer=optimizer, loss='categorical_crossentropy', metrics=['accuracy'])
model_con.summary()
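As a sanity check on the 886 figure, the parameter count and the feature-map sizes can be reproduced by hand. The sketch below is plain Python and does not call Keras. It assumes the usual output-size rules for 'valid' and 'same' padding and the default biases, and the helper names `out_size` and `n_params` are made up for illustration.

```python
import math


def out_size(n, k, stride, padding):
    """Spatial output size of a convolution along one dimension."""
    if padding == "same":
        return math.ceil(n / stride)
    return (n - k) // stride + 1  # 'valid' padding


def n_params(kind, k, c_in, c_out):
    """Trainable parameters of a standard ('conv') or separable ('sep') layer."""
    if kind == "sep":  # depthwise k x k filters + 1 x 1 pointwise + biases
        return k * k * c_in + c_in * c_out + c_out
    return k * k * c_in * c_out + c_out  # standard convolution + biases


# (name, kind, filters, kernel, stride, padding), following my_network
spec = [
    ("conv1", "conv", 4, 3, 1, "valid"),
    ("conv2", "conv", 6, 3, 2, "valid"),
    ("conv3", "sep", 8, 3, 2, "valid"),
    ("conv4", "sep", 10, 3, 2, "same"),
    ("conv5", "sep", 12, 3, 2, "valid"),
    ("output", "conv", 10, 1, 1, "same"),
]

size, channels = 28 + 2 * 2, 1  # 28x28x1 input after ZeroPadding2D((2, 2))
total = 0
for name, kind, filters, k, stride, padding in spec:
    params = n_params(kind, k, channels, filters)
    size = out_size(size, k, stride, padding)
    channels = filters
    total += params
    print(f"{name:7s} -> {size}x{size}x{channels}, params={params}")

print("total params:", total)  # total params: 886
```

The spatial size shrinks 32, 30, 14, 6, 3, 1, so the last layer produces a 1x1x10 map, which the Reshape layer turns into the 10 class probabilities.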

Training process

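Training uses the Adam optimizer with a learning-rate decay strategy: start with a large learning rate to speed up training, then switch to progressively smaller rates. Training slowly this way gives more stable results.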
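The scheduling code itself is not listed in this post, so the following is only a sketch of a step-decay rule that matches the values printed in the training log in this section: starting from 0.01, the rate halves every 12 epochs and does not go below 6.25e-05. The function name `step_decay`, its parameters, and the floor value are assumptions inferred from that log rather than a copy of the notebook's callback.

```python
def step_decay(epoch, current_lr=None, *, initial_lr=0.01, drop=0.5,
               epochs_per_drop=12, min_lr=6.25e-05):
    """Learning rate for a zero-based epoch index.

    current_lr is accepted but ignored, so the function also works with
    schedulers that pass the current rate as a second argument.
    """
    n_drops = (epoch + 1) // epochs_per_drop  # how many halvings so far
    return max(initial_lr * drop ** n_drops, min_lr)


# Epochs (zero-based) at which the rate in the log changes, plus the first one
for epoch in (0, 11, 23, 35, 83, 95):
    print(f"Epoch {epoch + 1}: lr = {step_decay(epoch)}")
```

With Keras, a function like this can be wrapped in `keras.callbacks.LearningRateScheduler(step_decay)` and passed to `fit` through the `callbacks` argument.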

Below is the complete output for all 100 epochs. Note that in this run the initial lr was 0.01, not 0.001.

Epoch 1/100

lr change to 0.01

235/235 [==============================] - 2s 7ms/step - loss: 0.1065 - acc: 0.9673 - val_loss: 0.0792 - val_acc: 0.9755

Epoch 2/100

lr change to 0.01

235/235 [==============================] - 1s 6ms/step - loss: 0.0886 - acc: 0.9726 - val_loss: 0.1013 - val_acc: 0.9686

Epoch 3/100

lr change to 0.01

235/235 [==============================] - 1s 6ms/step - loss: 0.0884 - acc: 0.9732 - val_loss: 0.0744 - val_acc: 0.9768

Epoch 4/100

lr change to 0.01

235/235 [==============================] - 2s 6ms/step - loss: 0.0873 - acc: 0.9730 - val_loss: 0.0815 - val_acc: 0.9740

Epoch 5/100

lr change to 0.01

235/235 [==============================] - 1s 6ms/step - loss: 0.0851 - acc: 0.9733 - val_loss: 0.0724 - val_acc: 0.9768

Epoch 6/100

lr change to 0.01

235/235 [==============================] - 2s 6ms/step - loss: 0.0817 - acc: 0.9752 - val_loss: 0.0826 - val_acc: 0.9745

Epoch 7/100

lr change to 0.01

235/235 [==============================] - 1s 6ms/step - loss: 0.0855 - acc: 0.9741 - val_loss: 0.0830 - val_acc: 0.9740

Epoch 8/100

lr change to 0.01

235/235 [==============================] - 2s 6ms/step - loss: 0.0870 - acc: 0.9728 - val_loss: 0.0847 - val_acc: 0.9729

Epoch 9/100

lr change to 0.01

235/235 [==============================] - 2s 7ms/step - loss: 0.0813 - acc: 0.9752 - val_loss: 0.0701 - val_acc: 0.9787

Epoch 10/100

lr change to 0.01

235/235 [==============================] - 2s 7ms/step - loss: 0.0815 - acc: 0.9748 - val_loss: 0.0742 - val_acc: 0.9765

Epoch 11/100

lr change to 0.01

235/235 [==============================] - 2s 7ms/step - loss: 0.0809 - acc: 0.9749 - val_loss: 0.0721 - val_acc: 0.9770

Epoch 12/100

lr change to 0.005

235/235 [==============================] - 1s 6ms/step - loss: 0.0672 - acc: 0.9796 - val_loss: 0.0635 - val_acc: 0.9788

Epoch 13/100

lr change to 0.005

235/235 [==============================] - 2s 6ms/step - loss: 0.0662 - acc: 0.9800 - val_loss: 0.0575 - val_acc: 0.9808

Epoch 14/100

lr change to 0.005

235/235 [==============================] - 2s 6ms/step - loss: 0.0659 - acc: 0.9796 - val_loss: 0.0624 - val_acc: 0.9803

Epoch 15/100

lr change to 0.005

235/235 [==============================] - 1s 6ms/step - loss: 0.0662 - acc: 0.9793 - val_loss: 0.0631 - val_acc: 0.9791

Epoch 16/100

lr change to 0.005

235/235 [==============================] - 2s 6ms/step - loss: 0.0655 - acc: 0.9802 - val_loss: 0.0561 - val_acc: 0.9816

Epoch 17/100

lr change to 0.005

235/235 [==============================] - 2s 7ms/step - loss: 0.0659 - acc: 0.9799 - val_loss: 0.0653 - val_acc: 0.9808

Epoch 18/100

lr change to 0.005

235/235 [==============================] - 2s 6ms/step - loss: 0.0653 - acc: 0.9798 - val_loss: 0.0765 - val_acc: 0.9748

Epoch 19/100

lr change to 0.005

235/235 [==============================] - 2s 7ms/step - loss: 0.0656 - acc: 0.9792 - val_loss: 0.0565 - val_acc: 0.9811

Epoch 20/100

lr change to 0.005

235/235 [==============================] - 2s 7ms/step - loss: 0.0645 - acc: 0.9800 - val_loss: 0.0569 - val_acc: 0.9814

Epoch 21/100

lr change to 0.005

235/235 [==============================] - 2s 7ms/step - loss: 0.0639 - acc: 0.9804 - val_loss: 0.0566 - val_acc: 0.9814

Epoch 22/100

lr change to 0.005

235/235 [==============================] - 2s 7ms/step - loss: 0.0637 - acc: 0.9801 - val_loss: 0.0672 - val_acc: 0.9778

Epoch 23/100

lr change to 0.005

235/235 [==============================] - 2s 7ms/step - loss: 0.0631 - acc: 0.9807 - val_loss: 0.0607 - val_acc: 0.9804

Epoch 24/100

lr change to 0.0025

235/235 [==============================] - 2s 6ms/step - loss: 0.0562 - acc: 0.9825 - val_loss: 0.0587 - val_acc: 0.9804

Epoch 25/100

lr change to 0.0025

235/235 [==============================] - 2s 6ms/step - loss: 0.0550 - acc: 0.9831 - val_loss: 0.0542 - val_acc: 0.9819

Epoch 26/100

lr change to 0.0025

235/235 [==============================] - 2s 7ms/step - loss: 0.0541 - acc: 0.9833 - val_loss: 0.0541 - val_acc: 0.9818

Epoch 27/100

lr change to 0.0025

235/235 [==============================] - 2s 7ms/step - loss: 0.0544 - acc: 0.9829 - val_loss: 0.0545 - val_acc: 0.9825

Epoch 28/100

lr change to 0.0025

235/235 [==============================] - 2s 7ms/step - loss: 0.0548 - acc: 0.9829 - val_loss: 0.0514 - val_acc: 0.9827

Epoch 29/100

lr change to 0.0025

235/235 [==============================] - 2s 7ms/step - loss: 0.0536 - acc: 0.9833 - val_loss: 0.0587 - val_acc: 0.9805

Epoch 30/100

lr change to 0.0025

235/235 [==============================] - 2s 7ms/step - loss: 0.0534 - acc: 0.9831 - val_loss: 0.0683 - val_acc: 0.9783

Epoch 31/100

lr change to 0.0025

235/235 [==============================] - 2s 7ms/step - loss: 0.0535 - acc: 0.9839 - val_loss: 0.0517 - val_acc: 0.9831

Epoch 32/100

lr change to 0.0025

235/235 [==============================] - 2s 7ms/step - loss: 0.0537 - acc: 0.9831 - val_loss: 0.0524 - val_acc: 0.9821

Epoch 33/100

lr change to 0.0025

235/235 [==============================] - 2s 8ms/step - loss: 0.0533 - acc: 0.9833 - val_loss: 0.0543 - val_acc: 0.9820

Epoch 34/100

lr change to 0.0025

235/235 [==============================] - 2s 8ms/step - loss: 0.0527 - acc: 0.9836 - val_loss: 0.0529 - val_acc: 0.9814

Epoch 35/100

lr change to 0.0025

235/235 [==============================] - 2s 7ms/step - loss: 0.0534 - acc: 0.9835 - val_loss: 0.0558 - val_acc: 0.9814

Epoch 36/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0487 - acc: 0.9852 - val_loss: 0.0523 - val_acc: 0.9823

Epoch 37/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0478 - acc: 0.9854 - val_loss: 0.0500 - val_acc: 0.9837

Epoch 38/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0482 - acc: 0.9851 - val_loss: 0.0532 - val_acc: 0.9823

Epoch 39/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0480 - acc: 0.9849 - val_loss: 0.0509 - val_acc: 0.9832

Epoch 40/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0477 - acc: 0.9850 - val_loss: 0.0509 - val_acc: 0.9824

Epoch 41/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0481 - acc: 0.9850 - val_loss: 0.0509 - val_acc: 0.9831

Epoch 42/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0482 - acc: 0.9847 - val_loss: 0.0511 - val_acc: 0.9827

Epoch 43/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0480 - acc: 0.9851 - val_loss: 0.0513 - val_acc: 0.9838

Epoch 44/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0474 - acc: 0.9850 - val_loss: 0.0530 - val_acc: 0.9831

Epoch 45/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0476 - acc: 0.9852 - val_loss: 0.0513 - val_acc: 0.9831

Epoch 46/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0470 - acc: 0.9855 - val_loss: 0.0554 - val_acc: 0.9817

Epoch 47/100

lr change to 0.00125

235/235 [==============================] - 2s 7ms/step - loss: 0.0471 - acc: 0.9850 - val_loss: 0.0527 - val_acc: 0.9817

Epoch 48/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0451 - acc: 0.9857 - val_loss: 0.0506 - val_acc: 0.9828

Epoch 49/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0448 - acc: 0.9861 - val_loss: 0.0501 - val_acc: 0.9838

Epoch 50/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0448 - acc: 0.9859 - val_loss: 0.0490 - val_acc: 0.9829

Epoch 51/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0447 - acc: 0.9865 - val_loss: 0.0501 - val_acc: 0.9834

Epoch 52/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0444 - acc: 0.9864 - val_loss: 0.0488 - val_acc: 0.9826

Epoch 53/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0446 - acc: 0.9860 - val_loss: 0.0507 - val_acc: 0.9826

Epoch 54/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0446 - acc: 0.9856 - val_loss: 0.0511 - val_acc: 0.9827

Epoch 55/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0446 - acc: 0.9862 - val_loss: 0.0506 - val_acc: 0.9830

Epoch 56/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0446 - acc: 0.9858 - val_loss: 0.0500 - val_acc: 0.9830

Epoch 57/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0443 - acc: 0.9861 - val_loss: 0.0517 - val_acc: 0.9831

Epoch 58/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0442 - acc: 0.9860 - val_loss: 0.0507 - val_acc: 0.9828

Epoch 59/100

lr change to 0.000625

235/235 [==============================] - 2s 7ms/step - loss: 0.0445 - acc: 0.9864 - val_loss: 0.0504 - val_acc: 0.9833

Epoch 60/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0430 - acc: 0.9868 - val_loss: 0.0499 - val_acc: 0.9825

Epoch 61/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0429 - acc: 0.9865 - val_loss: 0.0507 - val_acc: 0.9834

Epoch 62/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0430 - acc: 0.9866 - val_loss: 0.0494 - val_acc: 0.9830

Epoch 63/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0432 - acc: 0.9865 - val_loss: 0.0501 - val_acc: 0.9836

Epoch 64/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0426 - acc: 0.9866 - val_loss: 0.0518 - val_acc: 0.9830

Epoch 65/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0428 - acc: 0.9865 - val_loss: 0.0500 - val_acc: 0.9824

Epoch 66/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0427 - acc: 0.9866 - val_loss: 0.0506 - val_acc: 0.9824

Epoch 67/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0430 - acc: 0.9866 - val_loss: 0.0504 - val_acc: 0.9826

Epoch 68/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0427 - acc: 0.9868 - val_loss: 0.0501 - val_acc: 0.9832

Epoch 69/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0425 - acc: 0.9866 - val_loss: 0.0505 - val_acc: 0.9823

Epoch 70/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0427 - acc: 0.9869 - val_loss: 0.0505 - val_acc: 0.9839

Epoch 71/100

lr change to 0.0003125

235/235 [==============================] - 2s 7ms/step - loss: 0.0432 - acc: 0.9868 - val_loss: 0.0494 - val_acc: 0.9838

Epoch 72/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0419 - acc: 0.9870 - val_loss: 0.0493 - val_acc: 0.9833

Epoch 73/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0419 - acc: 0.9871 - val_loss: 0.0498 - val_acc: 0.9835

Epoch 74/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0419 - acc: 0.9871 - val_loss: 0.0496 - val_acc: 0.9831

Epoch 75/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0419 - acc: 0.9868 - val_loss: 0.0499 - val_acc: 0.9834

Epoch 76/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0419 - acc: 0.9868 - val_loss: 0.0496 - val_acc: 0.9835

Epoch 77/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0420 - acc: 0.9870 - val_loss: 0.0500 - val_acc: 0.9834

Epoch 78/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0419 - acc: 0.9871 - val_loss: 0.0496 - val_acc: 0.9833

Epoch 79/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0419 - acc: 0.9868 - val_loss: 0.0497 - val_acc: 0.9835

Epoch 80/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0419 - acc: 0.9869 - val_loss: 0.0499 - val_acc: 0.9835

Epoch 81/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0420 - acc: 0.9866 - val_loss: 0.0499 - val_acc: 0.9830

Epoch 82/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0418 - acc: 0.9870 - val_loss: 0.0503 - val_acc: 0.9839

Epoch 83/100

lr change to 0.00015625

235/235 [==============================] - 2s 7ms/step - loss: 0.0420 - acc: 0.9868 - val_loss: 0.0494 - val_acc: 0.9832

Epoch 84/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0414 - acc: 0.9873 - val_loss: 0.0499 - val_acc: 0.9837

Epoch 85/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0416 - acc: 0.9871 - val_loss: 0.0496 - val_acc: 0.9833

Epoch 86/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0414 - acc: 0.9872 - val_loss: 0.0501 - val_acc: 0.9837

Epoch 87/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0415 - acc: 0.9871 - val_loss: 0.0502 - val_acc: 0.9836

Epoch 88/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0415 - acc: 0.9871 - val_loss: 0.0501 - val_acc: 0.9834

Epoch 89/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0414 - acc: 0.9870 - val_loss: 0.0496 - val_acc: 0.9835

Epoch 90/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0414 - acc: 0.9872 - val_loss: 0.0498 - val_acc: 0.9837

Epoch 91/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0416 - acc: 0.9870 - val_loss: 0.0502 - val_acc: 0.9839

Epoch 92/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0414 - acc: 0.9872 - val_loss: 0.0503 - val_acc: 0.9834

Epoch 93/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0415 - acc: 0.9870 - val_loss: 0.0500 - val_acc: 0.9836

Epoch 94/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0415 - acc: 0.9869 - val_loss: 0.0499 - val_acc: 0.9832

Epoch 95/100

lr change to 7.8125e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0415 - acc: 0.9871 - val_loss: 0.0502 - val_acc: 0.9836

Epoch 96/100

lr change to 6.25e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0413 - acc: 0.9871 - val_loss: 0.0501 - val_acc: 0.9838

Epoch 97/100

lr change to 6.25e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0413 - acc: 0.9872 - val_loss: 0.0501 - val_acc: 0.9837

Epoch 98/100

lr change to 6.25e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0413 - acc: 0.9872 - val_loss: 0.0503 - val_acc: 0.9834

Epoch 99/100

lr change to 6.25e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0413 - acc: 0.9870 - val_loss: 0.0499 - val_acc: 0.9833

Epoch 100/100

lr change to 6.25e-05

235/235 [==============================] - 2s 7ms/step - loss: 0.0417 - acc: 0.9870 - val_loss: 0.0500 - val_acc: 0.9834

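Summary

From this project I learned that the receptive field is sometimes more important than model complexity, and that the learning-rate decay strategy also has a considerable effect on the model.

Even if you train on a CPU, training is fast with so few parameters.

The Jupyter notebook with this project's code is attached below.

Link: https://pan.baidu.com/s/1EEzfRDD_PAgSeS999Eq9-Q Extraction code: 3131

If you find this useful, please give me a star. If you have any questions, feel free to contact me.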
