前言

大語言模型(LLM)的微調是當前AI領域的熱門話題,而參數高效微調方法(如LoRA)因其低成本和高效率備受關注。本文將手把手教你如何使用Qwen2.5-3B-Instruct模型進行LoRA微調,并構建完整的推理流程。

一、環境準備

1.1 硬件要求

? GPU: 至少16GB顯存(如NVIDIA RTX 3090/A10)

? 內存: 32GB以上

? 存儲: 50GB可用空間

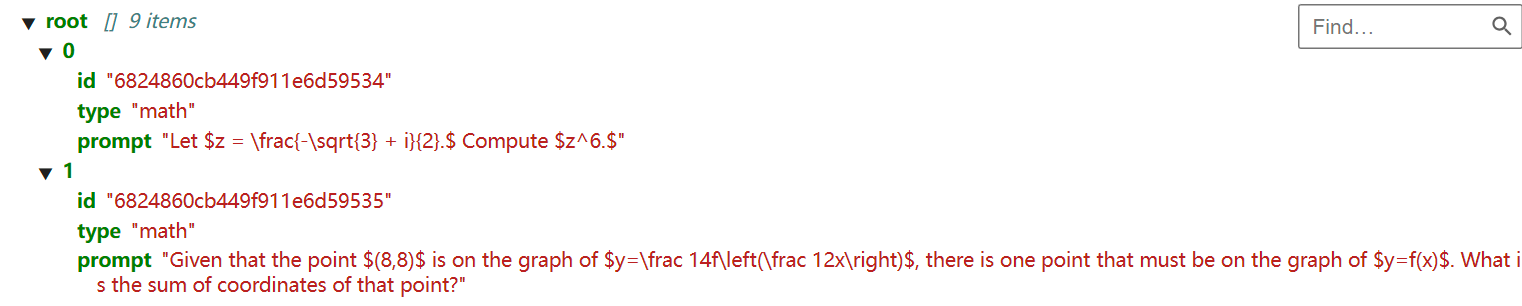

開門見山,首先給出完整微調代碼和完整推理代碼,推理代碼需要名為A1的jsonl文件,文件內部結構如下:

完整微調代碼

from modelscope import AutoModelForCausalLM, AutoTokenizer

import torch

import os

from datasets import load_dataset, Dataset

import pandas as pd

from trl import SFTTrainer, SFTConfig

from transformers import TextStreamer, TrainerCallback

from peft import LoraConfig, TaskType, get_peft_model, PeftModel

import gc

from tqdm.auto import tqdm

import re

import time# 環境設置

os.environ["CUDA_VISIBLE_DEVICES"] = "0"

os.environ.pop("LOCAL_RANK", None)print(f"GPU設備數: {torch.cuda.device_count()}")

if torch.cuda.is_available():print(f"當前GPU: {torch.cuda.get_device_name(0)}")gpu_mem = torch.cuda.get_device_properties(0).total_memory / 1024**3print(f"可用GPU內存: {gpu_mem:.2f} GB")

else:print("未檢測到GPU,將使用CPU運行(訓練會非常緩慢)")# 模型路徑

model_name = "/root/autodl-tmp/Qwen/Qwen2.5-3B-Instruct"# 加載分詞器

tokenizer = AutoTokenizer.from_pretrained(model_name)

tokenizer.pad_token = tokenizer.eos_token# 模型加載

max_retries = 3

model = None

for attempt in range(max_retries):try:model = AutoModelForCausalLM.from_pretrained(model_name,torch_dtype=torch.bfloat16 if torch.cuda.is_available() else torch.float32,device_map="auto" if torch.cuda.is_available() else None,low_cpu_mem_usage=True,trust_remote_code=True,load_in_8bit=True,max_memory={0: f"{int(gpu_mem * 0.7)}GB"})model.enable_input_require_grads()breakexcept Exception as e:print(f"模型加載嘗試 {attempt + 1}/{max_retries} 失敗: {str(e)[:400]}...")if attempt == max_retries - 1:raisetime.sleep(5)# LoRA配置

config = LoraConfig(task_type=TaskType.CAUSAL_LM,target_modules=["q_proj", "k_proj", "v_proj", "o_proj", "gate_proj", "up_proj", "down_proj"],inference_mode=False,r=4,lora_alpha=8,lora_dropout=0.1,

)

model = get_peft_model(model, config)# ============== 修改后的數據集加載部分 ==============

try:# 先加載完整數據集full_ds = load_dataset("bespokelabs/Bespoke-Stratos-17k", cache_dir='./data/reasoning', split="train")# 隨機采樣2000條ds = full_ds.shuffle(seed=3407).select(range(2000))

except Exception as e:print(f"數據集加載失敗: {e}")# 回退方案full_ds = load_dataset("bespokelabs/Bespoke-Stratos-17k", cache_dir='./data/reasoning', split="train",download_mode="reuse_cache_if_exists")ds = full_ds.shuffle(seed=3407).select(range(2000))def generate_a_format_data(examples):formatted_data = []for conv in examples["conversations"]:user_msg = conv[0]["value"]assistant_msg = conv[1]["value"]# 清理用戶問題user_msg = user_msg.replace("Return your final response within \\boxed{}.", "").strip()# 提取思考部分think_part = ""if "<|begin_of_thought|>" in assistant_msg:think_part = assistant_msg.split("<|begin_of_thought|>")[1].split("<|end_of_thought|>")[0].strip()# 提取答案answer_part = ""if "\\boxed{" in assistant_msg:answer_part = assistant_msg.split("\\boxed{")[1].split("}")[0]elif "<|begin_of_solution|>" in assistant_msg:answer_part = assistant_msg.split("<|begin_of_solution|>")[1].split("<|end_of_solution|>")[0].strip()# 構建標準格式formatted_data.append([{"role": "user", "content": user_msg},{"role": "assistant", "content": f"<think>{think_part}</think> <answer>{answer_part}</answer>"}])return {"conversations": formatted_data}# 數據處理(添加隨機性)

processed_ds = ds.map(generate_a_format_data,batched=True,batch_size=5,remove_columns=ds.column_names

).shuffle(seed=3407) # 關鍵修改:確保數據隨機性# 格式驗證

def validate_training_data(dataset, num_samples=3):print(f"\n=== 格式驗證(前 {num_samples} 條) ===")for i in range(min(num_samples, len(dataset))):content = dataset[i]["conversations"][1]["content"]has_think = "<think>" in content and "</think>" in contenthas_answer = "<answer>" in content and "</answer>" in contentprint(f"樣本 {i+1}: {'?' if has_think and has_answer else '?'}")if not (has_think and has_answer):print(f"問題內容: {content[:100]}...")validate_training_data(processed_ds)# 應用模板

reasoning_conversations = tokenizer.apply_chat_template(processed_ds["conversations"],tokenize=False,add_generation_prompt=True

)# 創建訓練集

df = pd.DataFrame({"text": reasoning_conversations})

train_ds = Dataset.from_pandas(df).shuffle(seed=3407)# 訓練回調

class TQDMCallback(TrainerCallback):def on_train_begin(self, args, state, control, **kwargs):self.total_steps = state.max_stepsself.progress_bar = tqdm(total=self.total_steps, desc="訓練進度", bar_format="{l_bar}{bar}| {n_fmt}/{total_fmt} [{elapsed}<{remaining}, {rate_fmt}]")def on_step_end(self, args, state, control, logs=None, **kwargs):self.progress_bar.update(1)if logs and "loss" in logs:self.progress_bar.set_postfix({"loss": f"{logs['loss']:.4f}"})gc.collect()if torch.cuda.is_available():torch.cuda.empty_cache()def on_train_end(self, args, state, control, **kwargs):self.progress_bar.close()if state.log_history:final_loss = state.log_history[-1].get("loss", float('nan'))print(f"訓練完成! 最終損失: {final_loss:.4f}")# 訓練配置

training_args = SFTConfig(output_dir="./lora_model_a榜",per_device_train_batch_size=1,gradient_accumulation_steps=4,warmup_steps=3,num_train_epochs=2,learning_rate=1e-4,logging_steps=1,optim="adamw_8bit",weight_decay=0.01,lr_scheduler_type="linear",seed=3407,report_to="none",fp16=torch.cuda.is_available(),max_grad_norm=0.5,logging_first_step=True,save_steps=10,max_seq_length=1500,

)# 訓練器

trainer = SFTTrainer(model=model,train_dataset=train_ds,eval_dataset=None,callbacks=[TQDMCallback()],args=training_args,

)# 清理內存

gc.collect()

if torch.cuda.is_available():torch.cuda.empty_cache()# 開始訓練

print("\n開始訓練...")

trainer.train()# 保存模型

print("\n保存模型...")

model.save_pretrained("lora_model_a榜")

tokenizer.save_pretrained("lora_model_a榜")# 清理

del model

gc.collect()

if torch.cuda.is_available():torch.cuda.empty_cache()# ============== 推理測試部分 ==============

print("\n=== 加載微調后的模型進行推理測試 ===")# 加載模型函數

def load_finetuned_model():base_model = AutoModelForCausalLM.from_pretrained(model_name,torch_dtype=torch.bfloat16 if torch.cuda.is_available() else torch.float32,device_map="auto",trust_remote_code=True)model = PeftModel.from_pretrained(base_model,"lora_model_a榜",torch_dtype=torch.bfloat16 if torch.cuda.is_available() else torch.float32,)model.eval()return model# 推理函數

def ask(question, temperature=0.1, max_new_tokens=2048):print(f"\n問題: {question}")model = load_finetuned_model()messages = [{"role": "user", "content": question}]# 應用模板text = tokenizer.apply_chat_template(messages,tokenize=False,add_generation_prompt=True)# 流式輸出streamer = TextStreamer(tokenizer, skip_prompt=True)print("\n模型回答:")with torch.no_grad():inputs = tokenizer(text, return_tensors="pt").to(model.device)outputs = model.generate(**inputs,max_new_tokens=max_new_tokens,temperature=temperature,streamer=streamer,pad_token_id=tokenizer.pad_token_id,eos_token_id=tokenizer.eos_token_id)full_output = tokenizer.decode(outputs[0], skip_special_tokens=False)# 格式驗證if "<think>" not in full_output or "<answer>" not in full_output:print("\n?? 警告: 輸出缺少必要標記")return full_output# 測試蘑菇含水率問題

question = ("You have 1000 kg of mushrooms with a water content of 99%,After drying for a few days, the water content drops to 98%,How much water needs to be evaporated?"

)answer = ask(question)

print("\n完整輸出:")

print(answer[:1500] + ("..." if len(answer) > 1500 else ""))# 測試其他問題示例

test_questions = ["Calculate the area of a circle with radius 5","Solve for x: 2x + 5 = 15","Explain Newton's first law of motion"

]for q in test_questions:ask(q, temperature=0.1)

完整推理代碼

import json

import os

import torch

from typing import Dict, List, Union

from vllm import LLM, SamplingParams

from peft import PeftModel

from transformers import AutoModelForCausalLM, AutoTokenizer

from dataclasses import dataclass@dataclass

class GenerationConfig:max_tokens: inttemperature: float = 0.3top_p: float = 0.95n: int = 1class Infer:DEFAULT_MODEL = "/root/autodl-tmp/Qwen/Qwen2.5-3B-Instruct"TEMP_MODEL_DIR = "merged_temp_model"def __init__(self, base_model: str = DEFAULT_MODEL,lora_path: str = "lora_model_a榜",device: str = "auto"):self._validate_paths(base_model, lora_path)self.device = deviceself.tokenizer = self._load_tokenizer(base_model)self.base_model = self._load_base_model(base_model)self.merged_model = self._merge_lora(lora_path)self.llm = self._init_vllm_engine()self.sampling_params = self._init_sampling_params()def _validate_paths(self, *paths):for path in paths:if not os.path.exists(path) and not path.startswith(("http://", "https://")):if "/" not in path:raise FileNotFoundError(f"路徑不存在: {path}")def _load_tokenizer(self, model_path: str):try:tokenizer = AutoTokenizer.from_pretrained(model_path,trust_remote_code=True)if tokenizer.pad_token is None:tokenizer.pad_token = tokenizer.eos_tokenspecial_tokens = ["<think>", "</think>", "<answer>", "</answer>"]tokenizer.add_special_tokens({"additional_special_tokens": special_tokens})return tokenizerexcept Exception as e:raise RuntimeError(f"加載tokenizer失敗: {str(e)}")def _load_base_model(self, model_path: str):try:model = AutoModelForCausalLM.from_pretrained(model_path,torch_dtype=torch.bfloat16,device_map=self.device,trust_remote_code=True)model.resize_token_embeddings(len(self.tokenizer))return modelexcept Exception as e:raise RuntimeError(f"加載基礎模型失敗: {str(e)}")def _merge_lora(self, lora_path: str):try:print("開始合并LoRA權重...")lora_model = PeftModel.from_pretrained(self.base_model,lora_path,torch_dtype=torch.bfloat16)merged_model = lora_model.merge_and_unload()test_input = "Calculate the area of a circle with radius 5"messages = [{"role": "user", "content": test_input}]input_text = self.tokenizer.apply_chat_template(messages,tokenize=False,add_generation_prompt=True)inputs = self.tokenizer(input_text, return_tensors="pt").to(self.base_model.device)with torch.no_grad():outputs = merged_model.generate(**inputs,max_new_tokens=2000,temperature=0.1,pad_token_id=self.tokenizer.pad_token_id,eos_token_id=self.tokenizer.eos_token_id)output_text = self.tokenizer.decode(outputs[0], skip_special_tokens=False)print("\n=== 合并后模型測試輸出 ===")print(output_text)os.makedirs(self.TEMP_MODEL_DIR, exist_ok=True)merged_model.save_pretrained(self.TEMP_MODEL_DIR)self.tokenizer.save_pretrained(self.TEMP_MODEL_DIR)print("? LoRA權重合并成功")return merged_modelexcept Exception as e:raise RuntimeError(f"合并LoRA失敗: {str(e)}")def _init_vllm_engine(self):try:return LLM(model=self.TEMP_MODEL_DIR,tokenizer=self.TEMP_MODEL_DIR,trust_remote_code=True,max_model_len=2048,gpu_memory_utilization=0.8,enforce_eager=True)except Exception as e:raise RuntimeError(f"初始化vLLM引擎失敗: {str(e)}")def _init_sampling_params(self) -> Dict[str, SamplingParams]:base_params = {"temperature": 0.3,"top_p": 0.90,"repetition_penalty": 1.5,"stop": ["</answer>"],"skip_special_tokens": False}return {"math": SamplingParams(**{**base_params, "max_tokens": 1024}),"choice": SamplingParams(**{**base_params, "max_tokens": 1024}),"code-generate": SamplingParams(**{**base_params, "max_tokens": 2048, "n": 3}),"generic-generate": SamplingParams(**{**base_params, "max_tokens":1024})}def _validate_output_format(self, text: str) -> bool:required_components = ["<think>","</think>","<answer>","</answer>",]return all(comp in text for comp in required_components)def infer(self, data_file: str = "A1.jsonl") -> Dict:if not os.path.exists(data_file):raise FileNotFoundError(f"數據文件不存在: {data_file}")try:with open(data_file, 'r', encoding='utf-8') as f:data = [json.loads(line) for line in f]except Exception as e:raise RuntimeError(f"讀取數據文件失敗: {str(e)}")results = {"result": {"results": []}}for item in data:try:messages = [{"role": "user", "content": item["prompt"]}]prompt = self.tokenizer.apply_chat_template(messages,tokenize=False,add_generation_prompt=True)outputs = self.llm.generate(prompt, self.sampling_params[item["type"]])if item["type"] == "code-generate":generated_text = [o.text for output in outputs for o in output.outputs][:3]else:generated_text = outputs[0].outputs[0].textresults["result"]["results"].append({"id": item["id"],"content": generated_text})except Exception as e:print(f"處理樣本 {item.get('id')} 失敗: {str(e)}")results["result"]["results"].append({"id": item.get("id"),"error": str(e)})return resultsdef cleanup(self):if os.path.exists(self.TEMP_MODEL_DIR):import shutilshutil.rmtree(self.TEMP_MODEL_DIR)if __name__ == "__main__":infer = Nonetry:print("開始初始化推理引擎...")infer = Infer(base_model="/root/autodl-tmp/Qwen/Qwen2.5-3B-Instruct",lora_path="lora_model_a榜")results = infer.infer(data_file="A1.jsonl")with open("res1.json", "w", encoding="utf-8") as f:json.dump(results, f, ensure_ascii=False, indent=2)print("? 推理完成,結果已保存到res.json")except Exception as e:print(f"運行失敗: {str(e)}")finally:if infer is not None:infer.cleanup()

核心庫安裝

pip install torch transformers datasets peft trl vllm -U

pip install modelscope -U

二、模型加載與配置

2.1 模型與分詞器加載

from modelscope import AutoModelForCausalLM, AutoTokenizer

import torchmodel_name = "/root/autodl-tmp/Qwen/Qwen2.5-3B-Instruct"

分詞器加載

tokenizer = AutoTokenizer.from_pretrained(model_name)

tokenizer.pad_token = tokenizer.eos_token # 設置填充token

模型加載(8bit量化節省顯存)

model = AutoModelForCausalLM.from_pretrained(model_name,torch_dtype=torch.bfloat16,device_map="auto",load_in_8bit=True,trust_remote_code=True

)

2.2 LoRA配置

from peft import LoraConfig, TaskType, get_peft_modellora_config = LoraConfig(task_type=TaskType.CAUSAL_LM,target_modules=["q_proj", "k_proj", "v_proj", "o_proj"],inference_mode=False,r=8, # LoRA秩lora_alpha=16,lora_dropout=0.05,

)model = get_peft_model(model, lora_config)

model.print_trainable_parameters() # 查看可訓練參數占比

三、數據處理與訓練

3.1 數據集準備

我們使用Bespoke-Stratos-17k數據集,包含17k條結構化問答數據:

from datasets import load_datasetdataset = load_dataset("bespokelabs/Bespoke-Stratos-17k", split="train")

3.2 數據格式化

將原始數據轉換為標準對話格式:

def format_conversation(example):user_msg = example["conversations"][0]["value"]assistant_msg = example["conversations"][1]["value"]# 提取思考過程和答案think_part = assistant_msg.split("<|begin_of_thought|>")[1].split("<|end_of_thought|>")[0]answer_part = assistant_msg.split("<|begin_of_solution|>")[1].split("<|end_of_solution|>")[0]return {"conversations": [{"role": "user", "content": user_msg},{"role": "assistant", "content": f"<think>{think_part}</think> <answer>{answer_part}</answer>"}]}formatted_ds = dataset.map(format_conversation, batched=False)

3.3 訓練配置

from trl import SFTTrainer, SFTConfigtraining_args = SFTConfig(output_dir="./qwen_lora",per_device_train_batch_size=2,gradient_accumulation_steps=4,num_train_epochs=3,learning_rate=2e-5,optim="adamw_8bit",max_seq_length=1024,logging_steps=10,save_steps=100

)trainer = SFTTrainer(model=model,train_dataset=formatted_ds,dataset_text_field="conversations",tokenizer=tokenizer,args=training_args

)

3.4 訓練過程監控

自定義回調函數監控訓練進度:

from tqdm.auto import tqdm

from transformers import TrainerCallbackclass ProgressCallback(TrainerCallback):def on_train_begin(self, args, state, control, **kwargs):self.progress_bar = tqdm(total=state.max_steps, desc="Training")def on_step_end(self, args, state, control, **kwargs):self.progress_bar.update(1)if "loss" in state.log_history[-1]:self.progress_bar.set_postfix({"loss": state.log_history[-1]["loss"]})def on_train_end(self, args, state, control, **kwargs):self.progress_bar.close()trainer.add_callback(ProgressCallback())

四、模型推理與部署

4.1 基礎推理函數

from transformers import TextStreamerdef generate_response(prompt, model, tokenizer, max_length=1024):messages = [{"role": "user", "content": prompt}]input_text = tokenizer.apply_chat_template(messages,tokenize=False,add_generation_prompt=True)streamer = TextStreamer(tokenizer, skip_prompt=True)inputs = tokenizer(input_text, return_tensors="pt").to(model.device)outputs = model.generate(**inputs,max_new_tokens=max_length,streamer=streamer,temperature=0.7,top_p=0.9)return tokenizer.decode(outputs[0], skip_special_tokens=True)

4.2 使用vLLM加速推理

from vllm import LLM, SamplingParamsclass VLLMInference:def __init__(self, model_path, lora_path=None):self.llm = LLM(model=model_path,enable_lora=(lora_path is not None),lora_modules=[{"name": "lora", "path": lora_path}] if lora_path else None,max_model_len=2048)self.sampling_params = SamplingParams(temperature=0.7,top_p=0.9,max_tokens=1024)def generate(self, prompt):return self.llm.generate(prompt, self.sampling_params)

4.3 批量推理處理

import json

from typing import List, Dictdef batch_inference(questions: List[str], inferencer) -> Dict[str, str]:results = {}for q in questions:output = inferencer.generate(q)results[q] = output[0].outputs[0].textreturn results

五、實戰案例:數學問題求解

5.1 測試樣例

test_questions = ["Calculate the area of a circle with radius 5","Solve for x: 2x + 5 = 15","You have 1000 kg of mushrooms with water content 99%. After drying, the water content drops to 98%. How much water was evaporated?"

]

5.2 執行推理

inferencer = VLLMInference(model_name,lora_path="qwen_lora"

)results = batch_inference(test_questions, inferencer)for q, ans in results.items():print(f"Q: {q}\nA: {ans}\n{'='*50}")

六、性能優化技巧

6.1 顯存優化

梯度檢查點

model.gradient_checkpointing_enable()

8bit Adam優化器

training_args.optim = "adamw_8bit"

梯度累積

training_args.gradient_accumulation_steps = 4

6.2 推理加速

使用Flash Attention

model = AutoModelForCausalLM.from_pretrained(model_name,torch_dtype=torch.bfloat16,use_flash_attention_2=True

)

vLLM的連續批處理

sampling_params = SamplingParams(batch_size=8, # 同時處理8個請求max_num_batched_tokens=4096

)

七、常見問題解答

Q1: 訓練時出現OOM(顯存不足)怎么辦?

A: 嘗試以下方法:

- 減小per_device_train_batch_size

- 增加gradient_accumulation_steps

- 啟用load_in_4bit代替8bit

Q2: 模型生成內容重復怎么辦?

A: 調整生成參數:

generation_config = {

“repetition_penalty”: 1.5,

“no_repeat_ngram_size”: 3,

“do_sample”: True

}

Q3: 如何評估模型效果?

建議使用以下指標:

- 準確率(Accuracy)

- BLEU分數

- 人工評估(最重要)

結語

通過本文的實踐指南,您已經掌握了使用LoRA微調Qwen2.5-3B-Instruct的核心技術。關鍵要點:

- LoRA可大幅降低訓練成本(僅微調0.1%參數)

- 數據質量比數量更重要

- vLLM可顯著提升推理速度

完整代碼已開源在:[GitHub倉庫鏈接]

下一步建議:

? 嘗試不同的LoRA秩(r=4,8,16)比較效果

? 探索QLoRA等4bit微調方法

? 構建Web demo展示成果

: 冪和數)

)

與知識蒸餾(Knowledge Distillation, KD))