com編程創建快捷方式中文

Recently, I was in need of an image for our blog and wanted it to have some wow effect or at least a better fit than anything typical we’ve been using. Pondering over ideas for a while, word cloud flashed in my mind. 💡Usually, you would just need a long string of text to generate one, but I thought of parsing our entire blog data to see if anything interesting pops out and to also get the holistic view of the keywords our blog uses in its entirety. So, I took this as a weekend fun project for myself.

最近,我在博客中需要一個圖片,希望它具有一定的效果或至少比我們一直在使用的典型圖片更適合 。 思考了一會兒,詞云在我腦海中閃過。 💡通常,您只需要一個長文本字符串即可生成一個文本字符串,但是我想解析整個博客數據,以查看是否彈出了一些有趣的東西,并獲得我們博客整體使用的關鍵字的整體視圖。 因此,我將此作為自己的周末娛樂項目。

PS: Images have a lot of importance in marketing. Give it quality!👀

PS:圖像在營銷中非常重要。 給它質量!👀

弄臟您的手: (Getting your hands dirty:)

Our blog is hosted on Ghost and it allows us to export all the posts and settings into a single, glorious JSON file. And, we have in-built json package in python for parsing JSON data. Our stage is set. 🤞

我們的博客托管在Ghost上 并且它允許我們將所有帖子和設置導出到一個光榮的JSON文件中。 并且,我們在python中有內置的json包,用于解析JSON數據。 我們的舞臺已經準備好了。 🤞

For other popular platforms like WordPress, Blogger, Substack, etc. it could be one or many XML files, you might need to switch the packages and do the groundwork in python accordingly.

對于其他流行的平臺,例如WordPress,Blogger,Substack等,它可能是一個或多個XML文件,您可能需要切換軟件包并相應地在python中做基礎。

Before you read into that JSON in python, you should get the idea of how it’s structured, what you need to read, what you need to filter out, etc. For that, use some JSON processor to pretty print your json file, I’d used jqplay.org and it helped me figure out where my posts are located ? data['db'][0]['data']['post']

在用python閱讀JSON之前,您應該了解它的結構,需要閱讀的內容,需要過濾的內容等。為此,使用一些JSON處理器漂亮地打印JSON文件,我d使用了jqplay.org ,它幫助我弄清楚了我的帖子所在的位置?data data['db'][0]['data']['post']

Next, you’d like to call upon pd.json.normalize() to convert your data into a flat table and save it as a data frame.

接下來,您想調用pd.json.normalize()將數據轉換為平面表并將其保存為數據框。

👉 Note: You should have updated version of pandas installed for

pd.json.normalize()to work as it has tweaked names in older versions.Also, keep the encoding as UTF-8, as otherwise, you’re likely to run into UnicodeDecodeErrors. (We have these bad guys: ‘\xa0’ , ‘\n’, and ‘\t’ etc.)👉注意:您應該已安裝pandas的更新版本,以便

pd.json.normalize()可以正常運行,因為它已對舊版本中的名稱進行了調整。此外,請保持編碼為UTF-8,否則可能會遇到UnicodeDecodeErrors。 (我們有這些壞家伙:'\ xa0','\ n'和'\ t'等)

import pandas as pd

import jsonwith open('fleetx.ghost.2020-07-28-20-18-49.json', encoding='utf-8') as file:

data = json.load(file)

posts_df = pd.json_normalize(data['db'][0]['data']['posts'])

posts_df.head()Looking at the dataframe you can see that ghost is keeping three formats of the posts we created, mobiledoc (simple and fast renderer without an HTML parser), HTML and plaintext, and range of other attributes of the post. I choose to work with the plaintext version as it would require the least cleaning.

查看數據框,您可以看到ghost保留了我們創建的帖子的三種格式, mobiledoc (沒有HTML解析器的簡單快速渲染器),HTML和純文本以及帖子的其他屬性范圍。 我選擇使用純文本版本,因為它需要最少的清理。

清潔工作: (The Cleaning Job:)

- Drop missing values (any blank post you might have) to not handicap your analysis while charting at some point later. We had one blog post in drafts with nothing in it. 🤷?♂? 刪除丟失的值(您可能有任何空白的帖子),以便稍后在進行圖表繪制時不會影響您的分析。 我們的草稿中只有一篇博文,沒有任何內容。 ♂?♂?

The plaintext of the posts had almost every possible unwanted character from spacing and tabs (\n, \xao, \t), to 14 marks from grammar punctuations (dot, comma, semicolon, colon, dash, hyphen,s etc.) and even bullet points. Replace all of them with whitespace.

帖子的純文本幾乎包含從空格和制表符(\ n,\ xao,\ t)到語法標點的14個標記(點,逗號,分號,冒號,破折號,連字符等)以及幾乎所有可能不需要的字符,并且甚至是要點。 將它們全部替換為空格。

Next, I split up the words in each blog post under the plaintext column and then joined the resulting lists from each cell to have a really long list of words. This resulted in 34000 words; we have around 45 published blogs each having 700 words on average and a few more in drafts, so this works out 45*700=31500 words. Consistent!🤜

接下來,我將每個博客帖子中的單詞都以純文本形式拆分 列,然后將每個單元格的結果列表加入其中,以獲得非常長的單詞列表。 結果是34000個單詞; 我們大約有45個已發布的博客,每個博客平均包含700個單詞,草稿中還有幾個單詞,因此得出的結果是45 * 700 = 31500個單詞。 一致!🤜

posts_df.dropna(subset=['plaintext'], axis=0, inplace=True)posts_df.plaintext = posts_df.plaintext.str.replace('\n', ' ')

.str.replace('\xa0',' ').str.replace('.',' ').str.replace('·', ' ')

.str.replace('?',' ').str.replace('\t', ' ').str.replace(',',' ')

.str.replace('-', ' ').str.replace(':', ' ').str.replace('/',' ')

.str.replace('*',' ')posts_df.plaintext = posts_df.plaintext.apply(lambda x: x.split())

words_list =[]

for i in range(0,posts_df.shape[0]):

words_list.extend(posts_df.iloc[i].plaintext)If you’re eager for results now, you can run collections.Counter on that words_list and get the frequency of each word to get an idea of how your wordcloud might look like.

如果您現在渴望獲得結果,則可以在words_list上運行collections.Counter并獲取每個單詞的出現頻率,以了解單詞云的外觀。

import collectionsword_freq = collections.Counter(words_list)

word_freq.most_common(200)Any guesses on what could be the most used word for a blog? 🤞If you said ‘the’, you’re right. For really long texts, the article ‘the’ is going to take precedence over any other word. And, not just ‘the’ there were several other prepositions, pronouns, conjunction, and action verbs in the top frequency list. We certainly don’t need them and, to remove them, we must first define them. Fortunately, wordcloud library that we will use to generate the wordcloud comes with default stopwords of its own but it’s rather conservative and has only 192 words. So, let’s head over to the libraries in Natural Language Processing (NLP) that do huge text processing and are dedicated to such tasks. 🔎

關于博客最常用的詞有什么猜想? 🤞如果您說“ the”,那是對的。 對于非常長的文本,文章“ the”將優先于其他任何單詞。 而且,不僅僅是“ the”,在最常見的頻率列表中還有其他幾個介詞,代詞,連詞和動作動詞。 我們當然不需要它們,并且要刪除它們,我們必須首先定義它們。 幸運的是,我們將用于生成wordcloud的wordcloud庫帶有自己的默認停用詞,但它相當保守,只有192個單詞。 因此,讓我們進入自然語言處理(NLP)中的庫,這些庫可以進行大量的文本處理并致力于此類任務。 🔎

- National Language Toolkit library (NLTK): It has 179 stopwords, that’s even lower than wordcloud stopwords collection. Don’t give it an evil eye for this reason alone, this is the leading NLP library in python. 國家語言工具包庫(NLTK):它有179個停用詞,甚至比wordcloud停用詞集合還低。 不要僅僅因為這個原因就對它視而不見,這是python中領先的NLP庫。

- Genism: It has 337 stopwords in its collection. Genism:它的集合中有337個停用詞。

- Sci-kit learn: They also have a stopword collection of 318 words. 科學工具學習:他們也有318個單詞的停用詞集合。

- And, there is Spacy: It has 326 stopwords. 而且,還有Spacy:它有326個停用詞。

I went ahead with the Spacy, you can choose your own based on your preferences.

我使用Spacy,您可以根據自己的喜好選擇自己的。

但…。 😓 (But…. 😓)

This wasn’t enough! Still, there were words that won’t look good from a marketing standpoint, also we didn’t do the best cleaning possible. So, I’d put them in a text file (each word on a new line) and then read it and joined with the spacy’s stopwords list.

這還不夠! 不過,從營銷的角度來看,有些話看起來不太好,而且我們也沒有做到最好的清潔效果。 因此,我將它們放在一個文本文件中(每個單詞都換行),然后閱讀并與spacy的停用詞列表一起加入。

Instructions on setting up Spacy.

有關設置Spacy的說明 。

import spacynlp=spacy.load('en_core_web_sm')

spacy_stopwords = nlp.Defaults.stop_wordswith open("more stopwords.txt") as file:

more_stopwords = {line.rstrip() for line in file}final_stopwords = spacy_stopwords | more_stopwords設置設計工作室: (Setting up the design shop:)

Now that we have our re-engineered stopwords list ready, we’re good to invoke the magic maker ? the wordcloud function. Install the wordcloud library with pip command via Jupyter/CLI/Conda.

現在我們已經準備好重新設計的停用詞列表,現在可以調用魔術制作器word wordcloud函數了。 通過Jupyter / CLI / Conda使用pip命令安裝wordcloud庫。

pip install wordcloudimport matplotlib.pyplot as plt

import wordcloud#Instantiate the wordcloud objectwc = wordcloud.WordCloud(background_color='white', max_words=300, stopwords=final_stopwords, collocations=False, max_font_size=40, random_state=42)# Generate word cloud

wc=wc.generate(" ".join(words_list).lower())# Show word cloud

plt.figure(figsize=(20,15))

plt.imshow(wc, interpolation='bilinear')

plt.axis("off")

plt.show()# save the wordcloud

wc.to_file('wordcloud.png');Much of the above code block would be self-explanatory for python users, though let’s do a brief round of introduction:

上面的許多代碼塊對于python用戶來說都是不言而喻的,盡管讓我們做一輪簡短的介紹:

background_color: background of your wordcloud, black and white is most common.background_color:您的wordcloud背景, 黑色和白色最為常見。max_words: maximum words you would like to show up in the wordcloud, default is 200.max_words:您想在wordcloud中顯示的最大單詞數,默認值為200。stopwords: set of stopwords to be eliminated from wordcloud.stopwords:從wordcloud中消除的停用stopwords集。collocations: Whether to include collocations (bigrams) of two words or not, default is True.collocations:是否包含兩個單詞的搭配詞(字母組合圖),默認值為True。

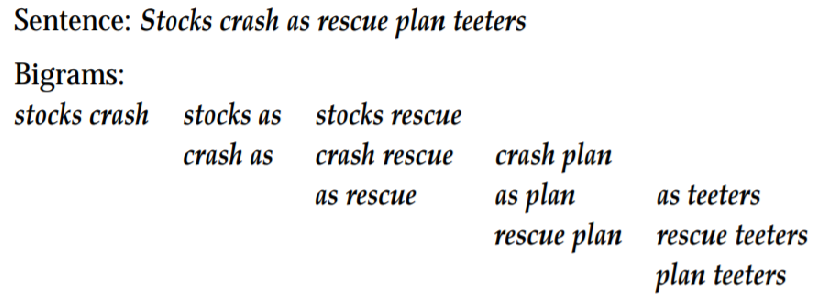

什么是二元組? (What are Bigrams?)

These are sequences of two adjacent words. Take a look at the below example.

這些是兩個相鄰單詞的序列。 看下面的例子。

Note: Parse all the text to wordcloud generator in lowercase, as all stopwords are defined in lowercase. It won’t elimiate uppercase stopwords.

注意:將所有文本以小寫形式解析到wordcloud生成器,因為所有停用詞均以小寫形式定義。 它不會消除大寫停用詞。

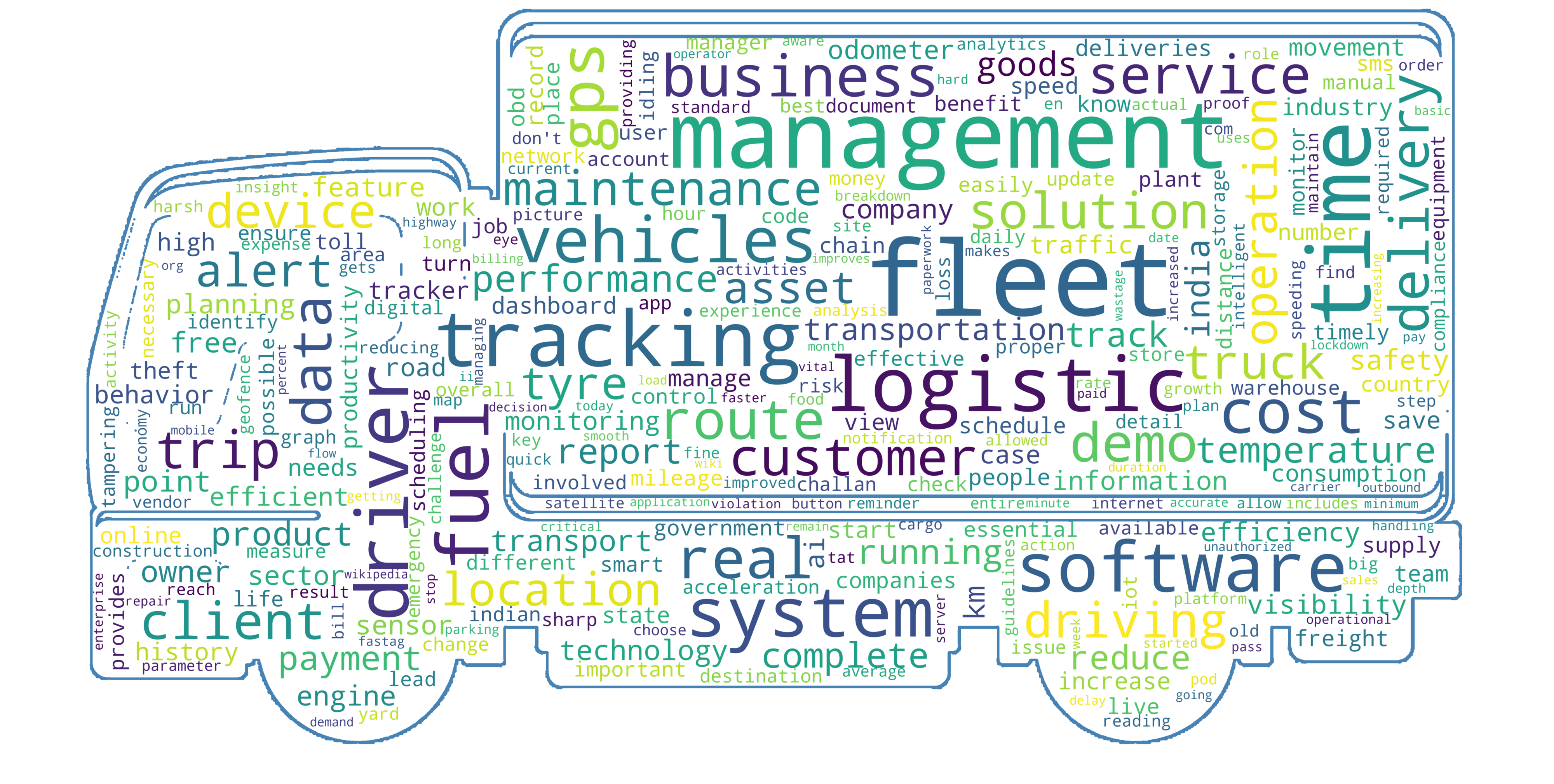

Alright, so the output is like this:

好了,所以輸出是這樣的:

For a company doing fleet management, it’s spot on! The keyword fleet management has heavy weightage than anything else.

對于進行車隊管理的公司而言,它就來了! 關鍵字“ 車隊管理”比其他任何東西都重要。

Though, the above image misses the very element all this is about: the vehicle. Fortunately, you can mask the above wordcloud on an image of your choice with the wordcloud library. So, let’s do this.

雖然,上面的圖像錯過了所有這一切的要素:車輛。 幸運的是,您可以使用wordcloud庫將上述wordcloud屏蔽在您選擇的映像上。 所以,讓我們這樣做。

Choose a vector image of your choice. I’d picked my image from Vecteezy.

選擇您想要的矢量圖像。 我是從Vecteezy挑選圖像的。

You would also need to import the

您還需要導入

Pillow and NumPy library this time to read and convert the image into a NumPy array.

這次使用Pillow和NumPy庫讀取圖像并將其轉換為NumPy數組。

- Below is the commented code block to generate the masked wordcloud, much of which is the same as before. 下面是注釋的代碼塊,用于生成被屏蔽的詞云,其中大部分與以前相同。

import matplotlib.pyplot as plt

from PIL import Image

import numpy as np

import os# Read your image and convert it to an numpy array.

truck_mask=np.array(Image.open("Truck2.png"))# Instantiate the word cloud object.

wc = wordcloud.WordCloud(background_color='white', max_words=500, stopwords=final_stopwords, mask= truck_mask, scale=3, width=640, height=480, collocations=False, contour_width=5, contour_color='steelblue')# Generate word cloud

wc=wc.generate(" ".join(words_list).lower())# Show word cloud

plt.figure(figsize=(18,12))

plt.imshow(wc, interpolation='bilinear')

plt.axis("off")

plt.show()# save the masked wordcloud

wc.to_file('masked_wsordcloud.png');這是輸出: (Here’s the output:)

Voila! We produced our wordcloud programmatically! 🚚💨

瞧! 我們以編程方式產生了wordcloud! 🚚💨

Thank you for reading this far! 🙌

感謝您閱讀本文! 🙌

參考: (Ref:)

https://amueller.github.io/word_cloud/generated/wordcloud.WordCloud.html

https://amueller.github.io/word_cloud/generated/wordcloud.WordCloud.html

https://nlp.stanford.edu/fsnlp/promo/colloc.pdf

https://nlp.stanford.edu/fsnlp/promo/colloc.pdf

翻譯自: https://towardsdatascience.com/how-to-make-a-wordcloud-of-your-blog-programmatically-6c2bad1baa4

com編程創建快捷方式中文

本文來自互聯網用戶投稿,該文觀點僅代表作者本人,不代表本站立場。本站僅提供信息存儲空間服務,不擁有所有權,不承擔相關法律責任。 如若轉載,請注明出處:http://www.pswp.cn/news/388168.shtml 繁體地址,請注明出處:http://hk.pswp.cn/news/388168.shtml 英文地址,請注明出處:http://en.pswp.cn/news/388168.shtml

如若內容造成侵權/違法違規/事實不符,請聯系多彩編程網進行投訴反饋email:809451989@qq.com,一經查實,立即刪除!

的內部運行機制分析)

![[UE4]刪除UI:Remove from Parent](http://pic.xiahunao.cn/[UE4]刪除UI:Remove from Parent)

)