python dash

📌 Learn how to deliver AI for Big Data using Dash & Databricks with this recorded webinar featuring Peter Kim of Plotly and Prasad Kona of Databricks.

We’re delighted to announce that Plotly and Databricks are partnering to bring cloud-distributed Artificial Intelligence (AI) and Machine Learning (ML) to a vastly wider audience of business users. By integrating the Plotly Dash frontend with the Databricks backend, we are offering a seamless process for turning AI and ML models into production-ready, dynamic, interactive web applications. This partnership with Databricks empowers Python developers to quickly and easily build Dash apps that are connected to a Databricks Spark cluster. The direct integration, databricks-dash, is distributed by Plotly and available with Plotly’s Dash Enterprise.

Plotly’s Dash is a Python framework that enables developers to build interactive, data-rich analytical web apps in pure Python, with no JavaScript required. Traditional “full-stack” app development is done in teams with some members specializing in backend/server technologies like Python, some specializing in front-end technologies like React, and some specializing in data science. Dash provides a tightly-integrated backend and front-end, entirely written in Python. This means that data science teams producing models, visualizations and complex analyses no longer need to rely on backend specialists to expose these models to the front-end via APIs, and no longer need to rely on front-end specialists to build user interfaces to connect to these APIs. If you’re interested in Dash’s architecture, please see our “Dash is React for Python” article.

Databricks’ unified platform for data and AI rests on top of Apache Spark, a distributed, general-purpose cluster computing framework originally developed by the Databricks founders. Given sufficient hardware and network capacity, Apache Spark scales horizontally thanks to its distributed architecture. Apache Spark has a rich collection of APIs, MLlib, and integration with popular Python scientific libraries (e.g., pandas and scikit-learn). The Databricks Data Science Workspace provides managed, optimized, and secure Spark clusters. This lets developers and data scientists focus on building and optimizing models and worry less about infrastructure concerns such as speed, reliability, and fault tolerance. Databricks also abstracts away many manual administrative duties (such as creating clusters, auto-scaling hardware, and managing users) and simplifies the development process by letting users create IPython-like notebooks.

Databricks is the industry-leading Spark platform, and Plotly’s Dash is the industry-leading library for building UIs and web apps in Python. By using Dash and Databricks together, data scientists can quickly deliver production-ready AI and ML apps, backed by Databricks Spark clusters, to business users. A typical Dash + Databricks app is fewer than a thousand lines of Python code (no JavaScript required). These Dash apps range from simple UIs for simulation models to complex dashboards that act as read/write interfaces to your Databricks Spark cluster and to large amounts of data stored in a data warehouse. With Dash apps connected to Databricks Spark clusters, Dash + Databricks gives business users the powerful magic of Python and PySpark.

Currently, there are two ways to integrate Dash with Databricks:

- databricks-dash supports a notebook-like approach meant for quick Dash app prototyping within the Databricks notebook environment.
- databricks-connect supports a development-style approach meant for taking apps to production.

More details on each integration method follow:

databricks-dash

databricks-dash is a closed-source, custom library that can be installed and imported on any Databricks notebook. With a simple import, developers can start building Dash applications in the Databricks notebook itself. Like regular Dash applications, Dash applications in Databricks notebooks are built from app layouts and callbacks. Any PySpark code written in Databricks notebooks, whether it handles complex models or simple ETL processes, can be integrated into Dash applications with minimal code changes. Once a Flask (Python) server runs, the generated Dash application is hosted on your Databricks instance at a unique URL. Note that these Dash applications in Databricks notebooks run on shared resources and lack a load balancer. Thus, databricks-dash is great for quick prototyping and iteration but is not recommended for production deployments. For data scientists or developers who want to take a databricks-dash application to production, Plotly’s Dash Enterprise documentation walks through all the steps for getting there with databricks-connect.

Here is a minimal, self-contained example of using databricks-dash to create a Dash app from the Databricks notebook interface. After installing the databricks-dash library, run the example by copying and pasting the following code block into a Databricks notebook cell. A video demo of how to use databricks-dash accompanies the code below.

```python
# Imports
import plotly.express as px
import dash_core_components as dcc
import dash_html_components as html
from dash.dependencies import Input, Output
from databricks_dash import DatabricksDash

# Load data
df = px.data.tips()

# Build app
app = DatabricksDash(__name__)
server = app.server

app.layout = html.Div([
    html.H1("DatabricksDash Demo"),
    dcc.Graph(id='graph'),
    html.Label([
        "colorscale",
        dcc.Dropdown(
            id='colorscale-dropdown', clearable=False,
            value='plasma', options=[
                {'label': c, 'value': c}
                for c in px.colors.named_colorscales()
            ])
    ]),
])

# Define callback to update graph
@app.callback(
    Output('graph', 'figure'),
    [Input("colorscale-dropdown", "value")]
)
def update_figure(colorscale):
    return px.scatter(
        df, x="total_bill", y="tip", color="size",
        color_continuous_scale=colorscale,
        render_mode="webgl", title="Tips"
    )

if __name__ == "__main__":
    app.run_server(mode='inline', debug=True)
```

The result of this code block is this app:

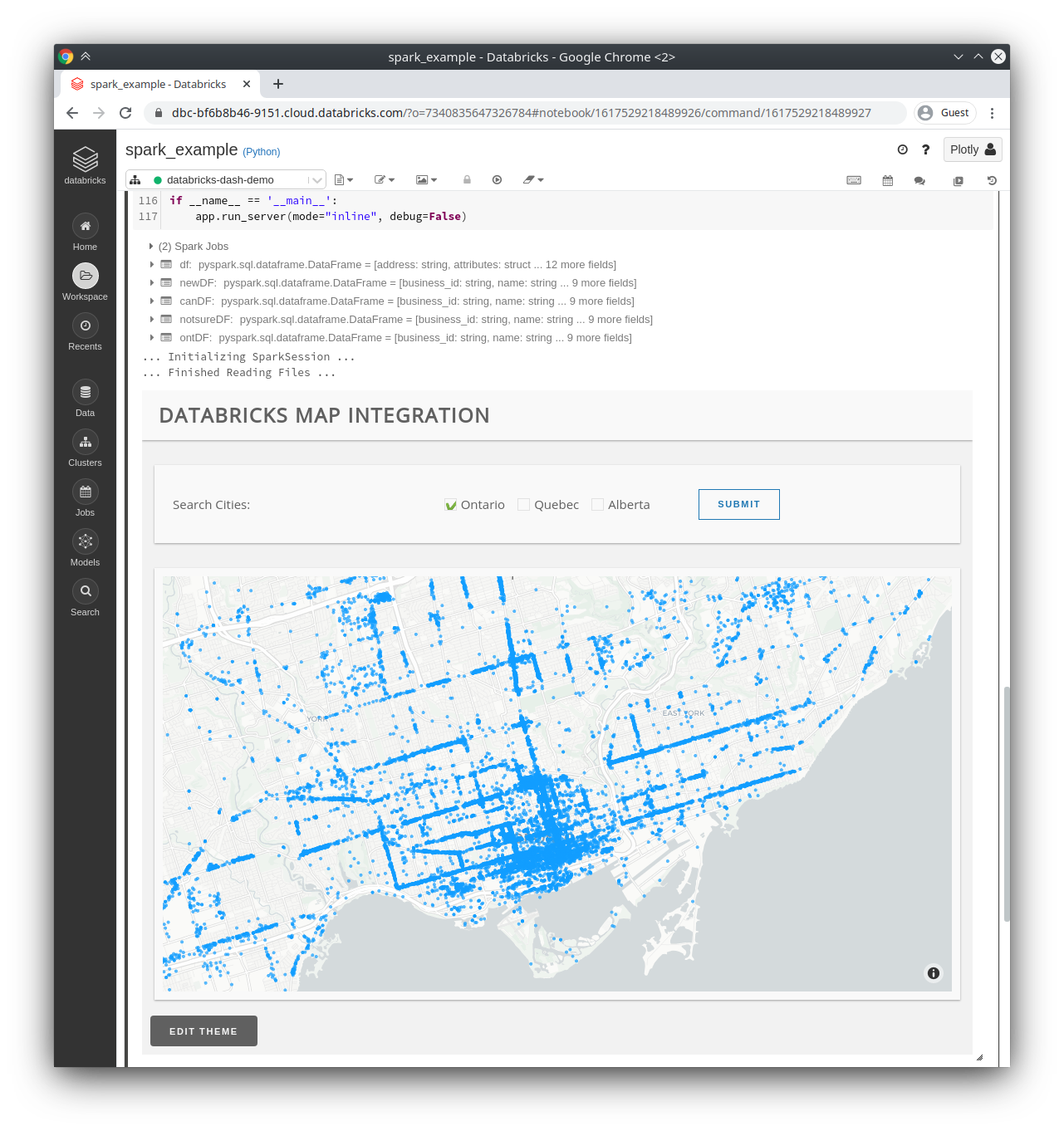

Here is a slightly larger example that uses PySpark to perform data pre-processing on the Databricks cluster. The dashboard itself is styled using Dash Design Kit, so the dash-design-kit package must be installed along with databricks-dash. This example is based on the databricks-connect application template but has been modified to use databricks_dash.DatabricksDash instead of dash.Dash.

databricks-connect

databricks-connect is the recommended way to take PySpark models and Dash applications from Databricks notebooks to production. databricks-connect is a Spark client library distributed by Databricks that allows locally written Spark jobs to run on a remote Databricks cluster. After installing and configuring databricks-connect and PySpark, developers and data scientists can run Dash and PySpark code in their favorite IDEs and no longer need to use Databricks notebooks. To make this happen, simply import PySpark as you would any other Python module, and write PySpark code alongside your Dash code base. We’ve made this video demo of how to use databricks-connect. The end result is a Dash application that can query our Databricks cluster for distributed processing, which is essential for big data use cases. This matters because using databricks-connect means our Dash application can be deployed to Plotly’s Dash Enterprise and be production-ready, which is the ideal workflow in Python!

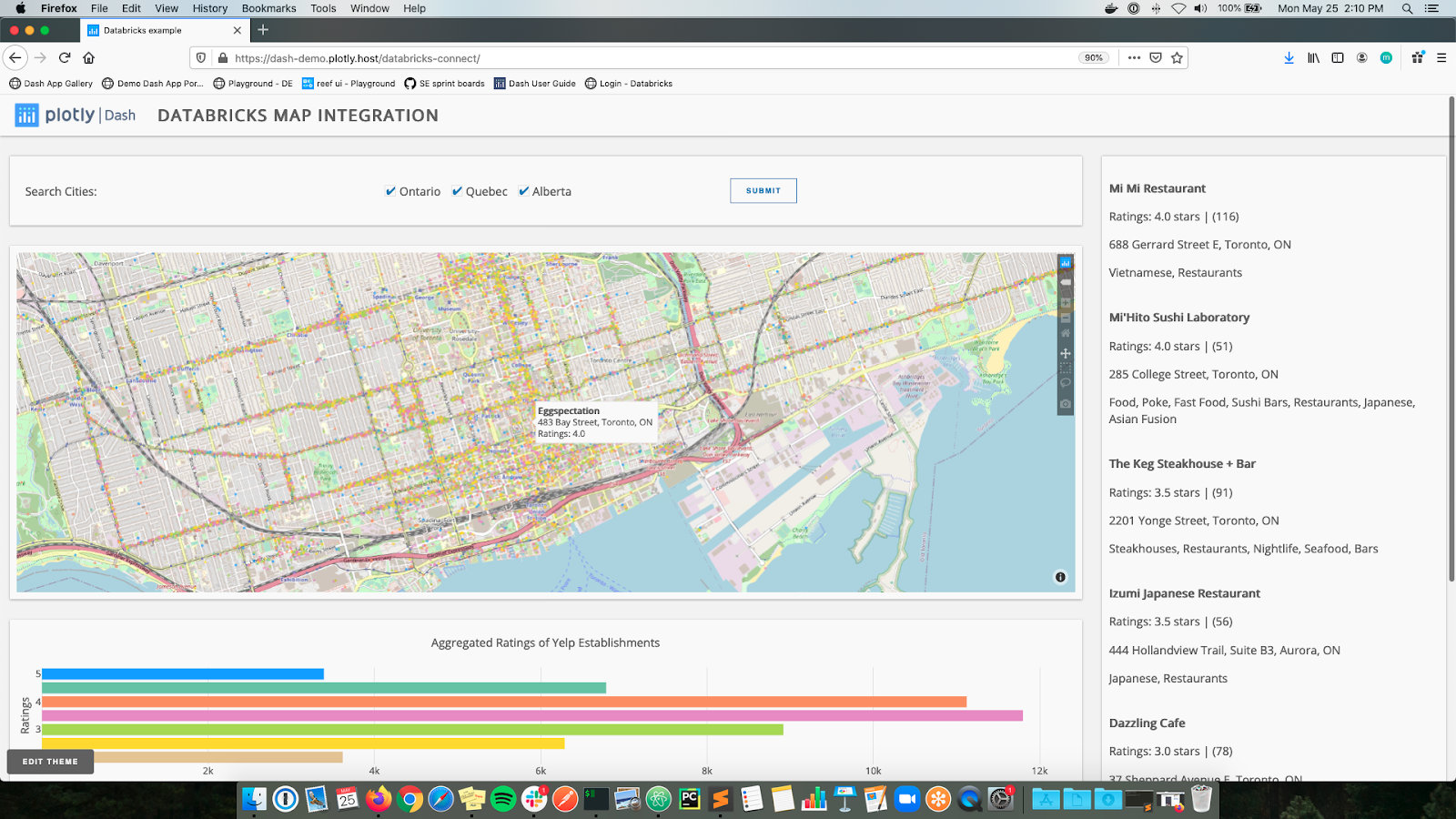

Here is an example of a Dash application with databricks-connect. This Dash application uses Yelp’s open dataset and plots restaurants in Toronto, Calgary, and Montreal on a map. Clicking Submit triggers a Spark job on our Databricks cluster that filters and matches restaurants based on the given criteria.

In summary, the two ways to integrate Dash with Databricks serve different needs: quick prototyping in a notebook-like fashion, or high-performance production deployment of analytical apps. Both methods provide a path to Plotly’s Dash Enterprise as the recommended solution for delivering AI/ML models and data directly to business users.

Databricks brings the best-in-class Python analytic processing backend, and Plotly’s Dash brings the best-in-class Python front end! The documentation for installing, creating, and deploying databricks-dash applications will be available in the next version, Dash Enterprise 4.0, in July 2020.

We’ll be posting some more info about our Databricks partnership in the coming weeks on our Twitter and LinkedIn, so stay tuned! If you have any questions or would like to learn more about Plotly Dash and Databricks integration, email info@plotly.com, and we’ll get you started!

Source: https://medium.com/plotly/dash-is-an-ideal-front-end-for-your-databricks-spark-backend-212ee3cae6cc
