YOLOv5 分類模型 預處理 OpenCV實現

flyfish

YOLOv5 分類模型 預處理 PIL 實現

YOLOv5 分類模型 OpenCV和PIL兩者實現預處理的差異

YOLOv5 分類模型 數據集加載 1 樣本處理

YOLOv5 分類模型 數據集加載 2 切片處理

YOLOv5 分類模型 數據集加載 3 自定義類別

YOLOv5 分類模型的預處理(1) Resize 和 CenterCrop

YOLOv5 分類模型的預處理(2)ToTensor 和 Normalize

YOLOv5 分類模型 Top 1和Top 5 指標說明

YOLOv5 分類模型 Top 1和Top 5 指標實現

判斷圖像是否是np.ndarray類型和維度

OpenCV讀取一張圖像時,類型類型就是<class 'numpy.ndarray'>,這里判斷圖像是否是np.ndarray類型

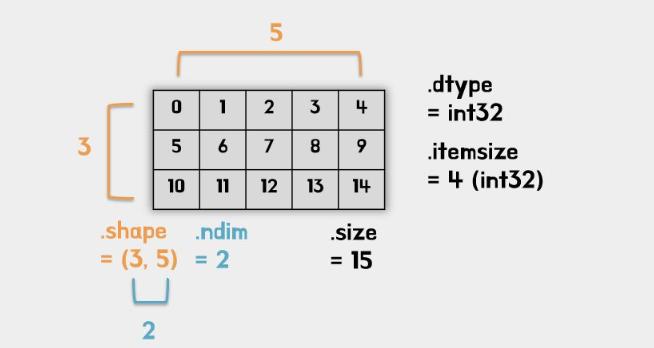

dim是dimension維度的縮寫,shape屬性的長度也是它的ndim

灰度圖的shape為HW,二個維度

RGB圖的shape為HWC,三個維度

def _is_numpy_image(img):return isinstance(img, np.ndarray) and (img.ndim in {2, 3})

實現ToTensor和Normalize

def totensor_normalize(img):print("preprocess:",img.shape)images = (img/255-mean)/stdimages = images.transpose((2, 0, 1))# HWC to CHWimages = np.ascontiguousarray(images)return images

實現Resize

插值可以是以下參數

# 'nearest': cv2.INTER_NEAREST,

# 'bilinear': cv2.INTER_LINEAR,

# 'area': cv2.INTER_AREA,

# 'bicubic': cv2.INTER_CUBIC,

# 'lanczos': cv2.INTER_LANCZOS4

def resize(img, size, interpolation=cv2.INTER_LINEAR):r"""Resize the input numpy ndarray to the given size.Args:img (numpy ndarray): Image to be resized.size: like pytroch about size interpretation flyfish.interpolation (int, optional): Desired interpolation. Default is``cv2.INTER_LINEAR`` Returns:numpy Image: Resized image.like opencv"""if not _is_numpy_image(img):raise TypeError('img should be numpy image. Got {}'.format(type(img)))if not (isinstance(size, int) or (isinstance(size, collections.abc.Iterable) and len(size) == 2)):raise TypeError('Got inappropriate size arg: {}'.format(size))h, w = img.shape[0], img.shape[1]if isinstance(size, int):if (w <= h and w == size) or (h <= w and h == size):return imgif w < h:ow = sizeoh = int(size * h / w)else:oh = sizeow = int(size * w / h)else:ow, oh = size[1], size[0]output = cv2.resize(img, dsize=(ow, oh), interpolation=interpolation)if img.shape[2] == 1:return output[:, :, np.newaxis]else:return output

實現CenterCrop

def crop(img, i, j, h, w):"""Crop the given Image flyfish.Args:img (numpy ndarray): Image to be cropped.i: Upper pixel coordinate.j: Left pixel coordinate.h: Height of the cropped image.w: Width of the cropped image.Returns:numpy ndarray: Cropped image."""if not _is_numpy_image(img):raise TypeError('img should be numpy image. Got {}'.format(type(img)))return img[i:i + h, j:j + w, :]def center_crop(img, output_size):if isinstance(output_size, numbers.Number):output_size = (int(output_size), int(output_size))h, w = img.shape[0:2]th, tw = output_sizei = int(round((h - th) / 2.))j = int(round((w - tw) / 2.))return crop(img, i, j, th, tw)

完整

import time

from models.common import DetectMultiBackend

import os

import os.path

from typing import Any, Callable, cast, Dict, List, Optional, Tuple, Union

import cv2

import numpy as np

import collections

import torch

import numbersclasses_name=['n02086240', 'n02087394', 'n02088364', 'n02089973', 'n02093754', 'n02096294', 'n02099601', 'n02105641', 'n02111889', 'n02115641']mean=[0.485, 0.456, 0.406]

std=[0.229, 0.224, 0.225]def _is_numpy_image(img):return isinstance(img, np.ndarray) and (img.ndim in {2, 3})def totensor_normalize(img):print("preprocess:",img.shape)images = (img/255-mean)/stdimages = images.transpose((2, 0, 1))# HWC to CHWimages = np.ascontiguousarray(images)return imagesdef resize(img, size, interpolation=cv2.INTER_LINEAR):r"""Resize the input numpy ndarray to the given size.Args:img (numpy ndarray): Image to be resized.size: like pytroch about size interpretation flyfish.interpolation (int, optional): Desired interpolation. Default is``cv2.INTER_LINEAR`` Returns:numpy Image: Resized image.like opencv"""if not _is_numpy_image(img):raise TypeError('img should be numpy image. Got {}'.format(type(img)))if not (isinstance(size, int) or (isinstance(size, collections.abc.Iterable) and len(size) == 2)):raise TypeError('Got inappropriate size arg: {}'.format(size))h, w = img.shape[0], img.shape[1]if isinstance(size, int):if (w <= h and w == size) or (h <= w and h == size):return imgif w < h:ow = sizeoh = int(size * h / w)else:oh = sizeow = int(size * w / h)else:ow, oh = size[1], size[0]output = cv2.resize(img, dsize=(ow, oh), interpolation=interpolation)if img.shape[2] == 1:return output[:, :, np.newaxis]else:return outputdef crop(img, i, j, h, w):"""Crop the given Image flyfish.Args:img (numpy ndarray): Image to be cropped.i: Upper pixel coordinate.j: Left pixel coordinate.h: Height of the cropped image.w: Width of the cropped image.Returns:numpy ndarray: Cropped image."""if not _is_numpy_image(img):raise TypeError('img should be numpy image. Got {}'.format(type(img)))return img[i:i + h, j:j + w, :]def center_crop(img, output_size):if isinstance(output_size, numbers.Number):output_size = (int(output_size), int(output_size))h, w = img.shape[0:2]th, tw = output_sizei = int(round((h - th) / 2.))j = int(round((w - tw) / 2.))return crop(img, i, j, th, tw)class DatasetFolder:def __init__(self,root: str,) -> None:self.root = rootif classes_name is None or not classes_name:classes, class_to_idx = self.find_classes(self.root)print("not classes_name")else:classes = classes_nameclass_to_idx ={cls_name: i for i, cls_name in enumerate(classes)}print("is classes_name")print("classes:",classes)print("class_to_idx:",class_to_idx)samples = self.make_dataset(self.root, class_to_idx)self.classes = classesself.class_to_idx = class_to_idxself.samples = samplesself.targets = [s[1] for s in samples]@staticmethoddef make_dataset(directory: str,class_to_idx: Optional[Dict[str, int]] = None,) -> List[Tuple[str, int]]:directory = os.path.expanduser(directory)if class_to_idx is None:_, class_to_idx = self.find_classes(directory)elif not class_to_idx:raise ValueError("'class_to_index' must have at least one entry to collect any samples.")instances = []available_classes = set()for target_class in sorted(class_to_idx.keys()):class_index = class_to_idx[target_class]target_dir = os.path.join(directory, target_class)if not os.path.isdir(target_dir):continuefor root, _, fnames in sorted(os.walk(target_dir, followlinks=True)):for fname in sorted(fnames):path = os.path.join(root, fname)if 1: # 驗證:item = path, class_indexinstances.append(item)if target_class not in available_classes:available_classes.add(target_class)empty_classes = set(class_to_idx.keys()) - available_classesif empty_classes:msg = f"Found no valid file for the classes {', '.join(sorted(empty_classes))}. "return instancesdef find_classes(self, directory: str) -> Tuple[List[str], Dict[str, int]]:classes = sorted(entry.name for entry in os.scandir(directory) if entry.is_dir())if not classes:raise FileNotFoundError(f"Couldn't find any class folder in {directory}.")class_to_idx = {cls_name: i for i, cls_name in enumerate(classes)}return classes, class_to_idxdef __getitem__(self, index: int) -> Tuple[Any, Any]:path, target = self.samples[index]sample = self.loader(path)return sample, targetdef __len__(self) -> int:return len(self.samples)def loader(self, path):print("path:", path)img = cv2.imread(path) # BGR HWCimg=cv2.cvtColor(img,cv2.COLOR_BGR2RGB)#RGBprint("type:",type(img))return imgdef time_sync():return time.time()dataset = DatasetFolder(root="/media/flyfish/datasets/imagewoof/val")

weights = "/home/classes.pt"

device = "cpu"

model = DetectMultiBackend(weights, device=device, dnn=False, fp16=False)

model.eval()def classify_transforms(img):img=resize(img,224)img=center_crop(img,224)img=totensor_normalize(img)return img;pred, targets, loss, dt = [], [], 0, [0.0, 0.0, 0.0]

# current batch size =1

for i, (images, labels) in enumerate(dataset):print("i:", i)print(images.shape, labels)im = classify_transforms(images)images=torch.from_numpy(im).to(torch.float32) # numpy to tensorimages = images.unsqueeze(0).to("cpu")print(images.shape)t1 = time_sync()images = images.to(device, non_blocking=True)t2 = time_sync()# dt[0] += t2 - t1y = model(images)y=y.numpy()print("y:", y)t3 = time_sync()# dt[1] += t3 - t2tmp1=y.argsort()[:,::-1][:, :5]print("tmp1:", tmp1)pred.append(tmp1)print("labels:", labels)targets.append(labels)print("for pred:", pred) # listprint("for targets:", targets) # list# dt[2] += time_sync() - t3pred, targets = np.concatenate(pred), np.array(targets)

print("pred:", pred)

print("pred:", pred.shape)

print("targets:", targets)

print("targets:", targets.shape)

correct = ((targets[:, None] == pred)).astype(np.float32)

print("correct:", correct.shape)

print("correct:", correct)

acc = np.stack((correct[:, 0], correct.max(1)), axis=1) # (top1, top5) accuracy

print("acc:", acc.shape)

print("acc:", acc)

top = acc.mean(0)

print("top1:", top[0])

print("top5:", top[1])

結果

pred: [[0 3 6 2 1][0 7 2 9 3][0 5 6 2 9]...[9 8 7 6 1][9 3 6 7 0][9 5 0 2 7]]

pred: (3929, 5)

targets: [0 0 0 ... 9 9 9]

targets: (3929,)

correct: (3929, 5)

correct: [[ 1 0 0 0 0][ 1 0 0 0 0][ 1 0 0 0 0]...[ 1 0 0 0 0][ 1 0 0 0 0][ 1 0 0 0 0]]

acc: (3929, 2)

acc: [[ 1 1][ 1 1][ 1 1]...[ 1 1][ 1 1][ 1 1]]

top1: 0.86230594

top5: 0.98167473

)

開發構建鋼鐵產業產品智能自動化檢測識別系統)

上安裝mysql)