【服務器與部署 14】消息隊列部署:RabbitMQ、Kafka生產環境搭建指南

關鍵詞:消息隊列、RabbitMQ集群、Kafka集群、消息中間件、異步通信、微服務架構、高可用部署、消息持久化、生產環境配置、分布式系統

摘要:本文從實際業務場景出發,深入解析RabbitMQ和Kafka消息隊列的生產環境部署方案。通過生動的比喻和實戰案例,幫助讀者理解消息隊列的核心原理,掌握高可用消息隊列架構的設計與部署,解決分布式系統中的異步通信難題。

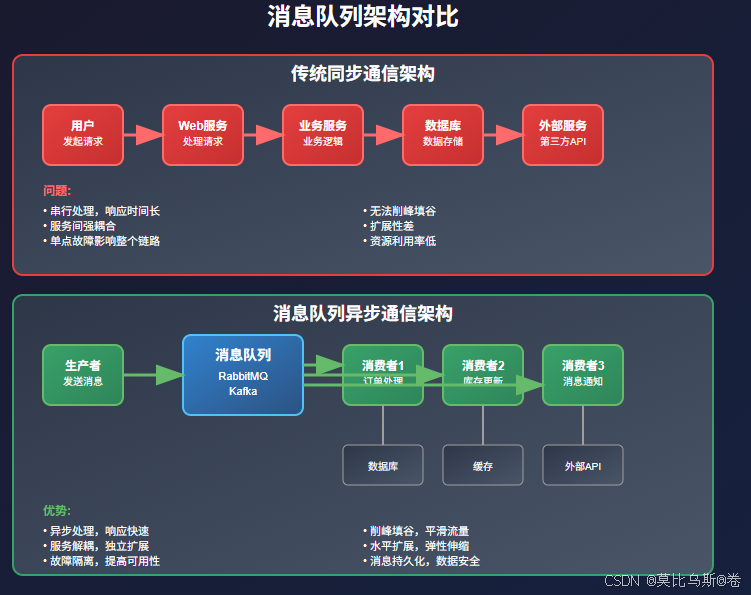

為什么需要消息隊列?

想象一下,你經營著一家繁忙的餐廳。顧客點餐、廚師做菜、服務員上菜,如果每個環節都要等待前一個環節完成,整個餐廳的效率會非常低。聰明的做法是什么?

引入"傳菜單"系統:顧客點餐后,服務員把訂單放到廚房的訂單架上,廚師按順序處理,做好的菜放到出菜口,服務員再取走上菜。這樣,每個環節都能并行工作,大大提高了效率。

這就是消息隊列的基本思想——通過異步消息傳遞,解耦系統各個組件,提高整體性能和可靠性。

消息隊列的核心概念

生產者與消費者模式

消息隊列就像是一個智能的郵局系統:

- 生產者(Producer):寄信人,負責發送消息

- 消息隊列(Queue):郵箱,暫存消息

- 消費者(Consumer):收信人,接收和處理消息

- 代理(Broker):郵局,管理消息的存儲和轉發

消息隊列的優勢

- 解耦:系統組件之間不需要直接通信

- 異步:發送方不需要等待接收方處理完成

- 削峰填谷:應對流量突發,保護下游系統

- 可靠性:消息持久化,保證不丟失

- 擴展性:可以靈活增加生產者和消費者

RabbitMQ:可靠的消息傳遞專家

RabbitMQ的特點

RabbitMQ就像一個經驗豐富的郵局局長,擅長處理各種復雜的消息路由需求。它基于AMQP協議,提供了豐富的消息路由功能。

RabbitMQ單機部署

1. 安裝RabbitMQ

# Ubuntu/Debian

sudo apt-get update

sudo apt-get install rabbitmq-server# CentOS/RHEL

sudo yum install epel-release

sudo yum install rabbitmq-server# 啟動服務

sudo systemctl start rabbitmq-server

sudo systemctl enable rabbitmq-server

2. 基礎配置

# 啟用管理插件

sudo rabbitmq-plugins enable rabbitmq_management# 創建管理員用戶

sudo rabbitmqctl add_user admin admin123

sudo rabbitmqctl set_user_tags admin administrator

sudo rabbitmqctl set_permissions -p / admin ".*" ".*" ".*"# 刪除默認用戶

sudo rabbitmqctl delete_user guest

3. 配置文件優化

# 創建配置文件 /etc/rabbitmq/rabbitmq.conf

cat > /etc/rabbitmq/rabbitmq.conf << EOF

# 網絡配置

listeners.tcp.default = 5672

management.tcp.port = 15672# 內存配置

vm_memory_high_watermark.relative = 0.6

vm_memory_high_watermark_paging_ratio = 0.5# 磁盤配置

disk_free_limit.relative = 2.0# 日志配置

log.console = true

log.console.level = info

log.file = /var/log/rabbitmq/rabbitmq.log

log.file.level = info# 集群配置

cluster_formation.peer_discovery_backend = rabbit_peer_discovery_classic_config

EOF

RabbitMQ集群部署

1. 集群規劃

# 節點規劃

# rabbit-node1: 192.168.1.10 (主節點)

# rabbit-node2: 192.168.1.11 (從節點)

# rabbit-node3: 192.168.1.12 (從節點)# 配置hosts文件

echo "192.168.1.10 rabbit-node1" >> /etc/hosts

echo "192.168.1.11 rabbit-node2" >> /etc/hosts

echo "192.168.1.12 rabbit-node3" >> /etc/hosts

2. 集群配置

# 在所有節點上設置相同的Erlang Cookie

sudo systemctl stop rabbitmq-server

echo "RABBITMQ_CLUSTER_COOKIE" | sudo tee /var/lib/rabbitmq/.erlang.cookie

sudo chmod 600 /var/lib/rabbitmq/.erlang.cookie

sudo chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie# 啟動所有節點

sudo systemctl start rabbitmq-server# 在node2和node3上加入集群

sudo rabbitmqctl stop_app

sudo rabbitmqctl join_cluster rabbit@rabbit-node1

sudo rabbitmqctl start_app# 驗證集群狀態

sudo rabbitmqctl cluster_status

3. 高可用隊列配置

# 設置隊列鏡像策略

sudo rabbitmqctl set_policy ha-all "^ha\." '{"ha-mode":"all","ha-sync-mode":"automatic"}'# 設置高可用交換器

sudo rabbitmqctl set_policy ha-federation "^federation\." '{"federation-upstream-set":"all"}'

RabbitMQ監控與管理

Python監控腳本

import pika

import json

import requests

import time

from datetime import datetimeclass RabbitMQMonitor:def __init__(self, host='localhost', port=15672, username='admin', password='admin123'):self.host = hostself.port = portself.username = usernameself.password = passwordself.base_url = f"http://{host}:{port}/api"def get_cluster_status(self):"""獲取集群狀態"""try:response = requests.get(f"{self.base_url}/nodes",auth=(self.username, self.password))if response.status_code == 200:nodes = response.json()cluster_info = {'total_nodes': len(nodes),'running_nodes': len([n for n in nodes if n['running']]),'nodes': []}for node in nodes:cluster_info['nodes'].append({'name': node['name'],'running': node['running'],'memory_used': node.get('mem_used', 0),'disk_free': node.get('disk_free', 0),'uptime': node.get('uptime', 0)})return cluster_infoelse:return Noneexcept Exception as e:print(f"獲取集群狀態失敗: {e}")return Nonedef get_queue_metrics(self):"""獲取隊列指標"""try:response = requests.get(f"{self.base_url}/queues",auth=(self.username, self.password))if response.status_code == 200:queues = response.json()metrics = {'total_queues': len(queues),'total_messages': sum(q.get('messages', 0) for q in queues),'total_consumers': sum(q.get('consumers', 0) for q in queues),'queues': []}for queue in queues:metrics['queues'].append({'name': queue['name'],'messages': queue.get('messages', 0),'consumers': queue.get('consumers', 0),'memory': queue.get('memory', 0),'message_stats': queue.get('message_stats', {})})return metricselse:return Noneexcept Exception as e:print(f"獲取隊列指標失敗: {e}")return Nonedef health_check(self):"""健康檢查"""try:# 檢查API連接response = requests.get(f"{self.base_url}/overview",auth=(self.username, self.password),timeout=5)if response.status_code == 200:overview = response.json()return {'status': 'healthy','version': overview.get('rabbitmq_version', 'unknown'),'erlang_version': overview.get('erlang_version', 'unknown'),'total_messages': overview.get('queue_totals', {}).get('messages', 0)}else:return {'status': 'unhealthy', 'reason': 'API不可訪問'}except Exception as e:return {'status': 'unhealthy', 'reason': str(e)}def generate_report(self):"""生成監控報告"""print("RabbitMQ集群監控報告")print("=" * 50)print(f"時間: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}")# 健康檢查health = self.health_check()print(f"集群狀態: {health['status']}")if health['status'] == 'healthy':print(f"RabbitMQ版本: {health['version']}")print(f"Erlang版本: {health['erlang_version']}")# 集群信息cluster = self.get_cluster_status()if cluster:print(f"節點數量: {cluster['running_nodes']}/{cluster['total_nodes']}")# 隊列信息queues = self.get_queue_metrics()if queues:print(f"隊列數量: {queues['total_queues']}")print(f"消息總數: {queues['total_messages']}")print(f"消費者總數: {queues['total_consumers']}")else:print(f"錯誤原因: {health['reason']}")# 使用示例

monitor = RabbitMQMonitor()

monitor.generate_report()

Kafka:高吞吐量的流處理平臺

Kafka的特點

Kafka就像一個高速公路系統,專門設計用來處理大量的數據流。它不僅是消息隊列,更是一個分布式流處理平臺。

Kafka單機部署

1. 安裝Java環境

# 安裝OpenJDK 11

sudo apt-get update

sudo apt-get install openjdk-11-jdk# 設置JAVA_HOME

echo 'export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64' >> ~/.bashrc

source ~/.bashrc

2. 安裝Kafka

# 下載Kafka

wget https://downloads.apache.org/kafka/2.8.0/kafka_2.13-2.8.0.tgz

tar -xzf kafka_2.13-2.8.0.tgz

sudo mv kafka_2.13-2.8.0 /opt/kafka# 創建用戶和目錄

sudo useradd -r -s /bin/false kafka

sudo mkdir -p /var/log/kafka

sudo chown -R kafka:kafka /opt/kafka /var/log/kafka

3. 配置Zookeeper

# 配置Zookeeper

cat > /opt/kafka/config/zookeeper.properties << EOF

dataDir=/var/lib/zookeeper

clientPort=2181

maxClientCnxns=0

admin.enableServer=false

server.1=localhost:2888:3888

EOF# 啟動Zookeeper

sudo -u kafka /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

4. 配置Kafka

# 配置Kafka服務器

cat > /opt/kafka/config/server.properties << EOF

# 服務器ID

broker.id=0# 監聽地址

listeners=PLAINTEXT://localhost:9092

advertised.listeners=PLAINTEXT://localhost:9092# 日志目錄

log.dirs=/var/log/kafka# 分區數量

num.partitions=3

default.replication.factor=1# 日志保留時間

log.retention.hours=168

log.retention.bytes=1073741824# Zookeeper連接

zookeeper.connect=localhost:2181# 其他配置

group.initial.rebalance.delay.ms=0

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

EOF# 啟動Kafka

sudo -u kafka /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

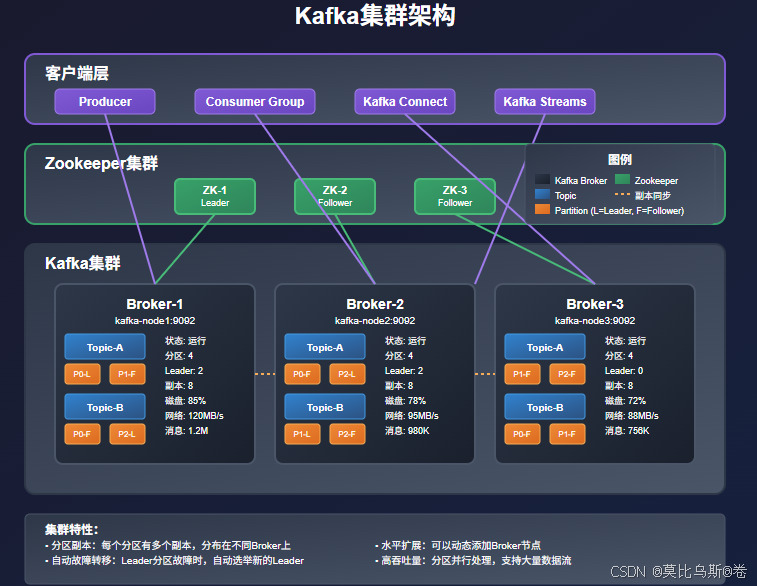

Kafka集群部署

1. 集群規劃

# 節點規劃

# kafka-node1: 192.168.1.20 (broker.id=1)

# kafka-node2: 192.168.1.21 (broker.id=2)

# kafka-node3: 192.168.1.22 (broker.id=3)# Zookeeper集群

# zk-node1: 192.168.1.20:2181

# zk-node2: 192.168.1.21:2181

# zk-node3: 192.168.1.22:2181

2. Zookeeper集群配置

# 在每個節點上配置Zookeeper

cat > /opt/kafka/config/zookeeper.properties << EOF

dataDir=/var/lib/zookeeper

clientPort=2181

maxClientCnxns=0

admin.enableServer=false# 集群配置

server.1=192.168.1.20:2888:3888

server.2=192.168.1.21:2888:3888

server.3=192.168.1.22:2888:3888# 選舉配置

initLimit=10

syncLimit=5

EOF# 創建myid文件

sudo mkdir -p /var/lib/zookeeper

echo "1" | sudo tee /var/lib/zookeeper/myid # 節點1

echo "2" | sudo tee /var/lib/zookeeper/myid # 節點2

echo "3" | sudo tee /var/lib/zookeeper/myid # 節點3

3. Kafka集群配置

# 節點1配置

cat > /opt/kafka/config/server.properties << EOF

broker.id=1

listeners=PLAINTEXT://0.0.0.0:9092

advertised.listeners=PLAINTEXT://192.168.1.20:9092

log.dirs=/var/log/kafka

num.partitions=3

default.replication.factor=3

min.insync.replicas=2

zookeeper.connect=192.168.1.20:2181,192.168.1.21:2181,192.168.1.22:2181

EOF# 節點2配置 (broker.id=2, IP改為192.168.1.21)

# 節點3配置 (broker.id=3, IP改為192.168.1.22)

4. 啟動集群

# 啟動順序:先啟動Zookeeper,再啟動Kafka

# 在所有節點上啟動Zookeeper

sudo -u kafka /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties# 等待Zookeeper集群穩定后啟動Kafka

sudo -u kafka /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Kafka監控與管理

Python監控腳本

from kafka import KafkaProducer, KafkaConsumer, KafkaAdminClient

from kafka.admin import ConfigResource, ConfigResourceType

import json

import time

from datetime import datetimeclass KafkaMonitor:def __init__(self, bootstrap_servers=['localhost:9092']):self.bootstrap_servers = bootstrap_serversself.admin_client = KafkaAdminClient(bootstrap_servers=bootstrap_servers,client_id='kafka_monitor')def get_cluster_metadata(self):"""獲取集群元數據"""try:metadata = self.admin_client._client.clustercluster_info = {'cluster_id': metadata.cluster_id,'controller': metadata.controller,'brokers': [],'topics': list(metadata.topics())}for broker in metadata.brokers():cluster_info['brokers'].append({'id': broker.nodeId,'host': broker.host,'port': broker.port,'rack': broker.rack})return cluster_infoexcept Exception as e:print(f"獲取集群元數據失敗: {e}")return Nonedef get_topic_info(self, topic_name):"""獲取主題信息"""try:metadata = self.admin_client._client.clustertopic_metadata = metadata.topics_to_brokers().get(topic_name)if topic_metadata:partitions = []for partition in topic_metadata.partitions.values():partitions.append({'partition': partition.partition,'leader': partition.leader,'replicas': partition.replicas,'isr': partition.isr})return {'topic': topic_name,'partitions': partitions,'partition_count': len(partitions)}else:return Noneexcept Exception as e:print(f"獲取主題信息失敗: {e}")return Nonedef create_topic(self, topic_name, num_partitions=3, replication_factor=3):"""創建主題"""try:from kafka.admin import NewTopictopic = NewTopic(name=topic_name,num_partitions=num_partitions,replication_factor=replication_factor)result = self.admin_client.create_topics([topic])# 等待創建完成for topic, future in result.items():try:future.result()print(f"主題 {topic} 創建成功")except Exception as e:print(f"主題 {topic} 創建失敗: {e}")except Exception as e:print(f"創建主題失敗: {e}")def performance_test(self, topic_name, num_messages=1000):"""性能測試"""try:# 生產者性能測試producer = KafkaProducer(bootstrap_servers=self.bootstrap_servers,value_serializer=lambda v: json.dumps(v).encode('utf-8'))start_time = time.time()for i in range(num_messages):message = {'id': i,'timestamp': datetime.now().isoformat(),'data': f'test_message_{i}'}producer.send(topic_name, message)producer.flush()producer.close()produce_time = time.time() - start_time# 消費者性能測試consumer = KafkaConsumer(topic_name,bootstrap_servers=self.bootstrap_servers,auto_offset_reset='earliest',value_deserializer=lambda m: json.loads(m.decode('utf-8')))start_time = time.time()message_count = 0for message in consumer:message_count += 1if message_count >= num_messages:breakconsume_time = time.time() - start_timeconsumer.close()return {'messages_sent': num_messages,'produce_time': produce_time,'consume_time': consume_time,'produce_rate': num_messages / produce_time,'consume_rate': num_messages / consume_time}except Exception as e:print(f"性能測試失敗: {e}")return Nonedef health_check(self):"""健康檢查"""try:# 檢查集群連接metadata = self.get_cluster_metadata()if metadata:active_brokers = len(metadata['brokers'])total_topics = len(metadata['topics'])return {'status': 'healthy','active_brokers': active_brokers,'total_topics': total_topics,'cluster_id': metadata['cluster_id']}else:return {'status': 'unhealthy', 'reason': '無法獲取集群元數據'}except Exception as e:return {'status': 'unhealthy', 'reason': str(e)}def generate_report(self):"""生成監控報告"""print("Kafka集群監控報告")print("=" * 50)print(f"時間: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}")# 健康檢查health = self.health_check()print(f"集群狀態: {health['status']}")if health['status'] == 'healthy':print(f"活躍Broker數: {health['active_brokers']}")print(f"主題總數: {health['total_topics']}")print(f"集群ID: {health['cluster_id']}")# 集群詳細信息metadata = self.get_cluster_metadata()if metadata:print("\nBroker信息:")for broker in metadata['brokers']:print(f" Broker {broker['id']}: {broker['host']}:{broker['port']}")else:print(f"錯誤原因: {health['reason']}")# 使用示例

monitor = KafkaMonitor(['localhost:9092'])

monitor.generate_report()# 創建測試主題

monitor.create_topic('test-topic', num_partitions=6, replication_factor=3)# 性能測試

perf_result = monitor.performance_test('test-topic', 10000)

if perf_result:print(f"\n性能測試結果:")print(f"生產速率: {perf_result['produce_rate']:.2f} msg/s")print(f"消費速率: {perf_result['consume_rate']:.2f} msg/s")

消息隊列對比與選擇

RabbitMQ vs Kafka

| 特性 | RabbitMQ | Kafka |

|---|---|---|

| 消息模型 | 傳統消息隊列 | 分布式日志 |

| 吞吐量 | 中等 | 非常高 |

| 延遲 | 低 | 低到中等 |

| 持久化 | 可選 | 默認持久化 |

| 消息順序 | 隊列級別 | 分區級別 |

| 消息路由 | 豐富的路由功能 | 基于主題分區 |

| 消費模式 | 推送和拉取 | 拉取 |

| 運維復雜度 | 相對簡單 | 較復雜 |

選擇建議

選擇RabbitMQ的場景:

- 需要復雜的消息路由

- 消息量不是特別大

- 需要消息確認和事務

- 團隊對AMQP協議熟悉

選擇Kafka的場景:

- 需要處理大量數據流

- 需要消息持久化存儲

- 構建實時數據管道

- 需要流處理能力

生產環境最佳實踐

性能優化

RabbitMQ優化

# 系統級別優化

# 增加文件描述符限制

echo "rabbitmq soft nofile 65536" >> /etc/security/limits.conf

echo "rabbitmq hard nofile 65536" >> /etc/security/limits.conf# 內核參數優化

echo "net.core.rmem_default = 262144" >> /etc/sysctl.conf

echo "net.core.rmem_max = 16777216" >> /etc/sysctl.conf

echo "net.core.wmem_default = 262144" >> /etc/sysctl.conf

echo "net.core.wmem_max = 16777216" >> /etc/sysctl.conf

sysctl -p

Kafka優化

# JVM參數優化

export KAFKA_HEAP_OPTS="-Xmx4G -Xms4G"

export KAFKA_JVM_PERFORMANCE_OPTS="-server -XX:+UseG1GC -XX:MaxGCPauseMillis=20 -XX:InitiatingHeapOccupancyPercent=35"# 操作系統優化

echo "vm.swappiness=1" >> /etc/sysctl.conf

echo "vm.dirty_background_ratio=5" >> /etc/sysctl.conf

echo "vm.dirty_ratio=60" >> /etc/sysctl.conf

echo "vm.dirty_expire_centisecs=12000" >> /etc/sysctl.conf

sysctl -p

監控告警

監控指標

class MessageQueueAlerts:def __init__(self):self.thresholds = {'rabbitmq': {'queue_length': 10000,'memory_usage': 0.8,'disk_free': 0.2,'connection_count': 1000},'kafka': {'consumer_lag': 100000,'disk_usage': 0.8,'under_replicated_partitions': 0,'offline_partitions': 0}}def check_rabbitmq_alerts(self, metrics):"""檢查RabbitMQ告警"""alerts = []for queue in metrics.get('queues', []):if queue['messages'] > self.thresholds['rabbitmq']['queue_length']:alerts.append({'type': 'queue_length','severity': 'warning','message': f"隊列 {queue['name']} 消息堆積: {queue['messages']}"})return alertsdef check_kafka_alerts(self, metrics):"""檢查Kafka告警"""alerts = []# 檢查消費者延遲if metrics.get('consumer_lag', 0) > self.thresholds['kafka']['consumer_lag']:alerts.append({'type': 'consumer_lag','severity': 'critical','message': f"消費者延遲過高: {metrics['consumer_lag']}"})return alerts

安全配置

RabbitMQ安全

# SSL/TLS配置

cat > /etc/rabbitmq/rabbitmq.conf << EOF

listeners.ssl.default = 5671

ssl_options.cacertfile = /etc/ssl/certs/ca-cert.pem

ssl_options.certfile = /etc/ssl/certs/server-cert.pem

ssl_options.keyfile = /etc/ssl/private/server-key.pem

ssl_options.verify = verify_peer

ssl_options.fail_if_no_peer_cert = false

EOF# 用戶權限管理

rabbitmqctl add_user producer producer_pass

rabbitmqctl add_user consumer consumer_pass

rabbitmqctl set_permissions -p / producer ".*" ".*" ""

rabbitmqctl set_permissions -p / consumer "" ".*" ".*"

Kafka安全

# SASL配置

cat > /opt/kafka/config/kafka_server_jaas.conf << EOF

KafkaServer {org.apache.kafka.common.security.plain.PlainLoginModule requiredusername="admin"password="admin-secret"user_admin="admin-secret"user_producer="producer-secret"user_consumer="consumer-secret";

};

EOF# 服務器配置

cat >> /opt/kafka/config/server.properties << EOF

listeners=SASL_PLAINTEXT://localhost:9092

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.mechanism.inter.broker.protocol=PLAIN

sasl.enabled.mechanisms=PLAIN

EOF

常見問題與解決方案

問題1:消息丟失

RabbitMQ解決方案:

# 生產者確認

def publish_with_confirm(channel, exchange, routing_key, message):channel.confirm_delivery()try:channel.basic_publish(exchange=exchange,routing_key=routing_key,body=message,properties=pika.BasicProperties(delivery_mode=2) # 持久化)return Trueexcept pika.exceptions.UnroutableError:return False

Kafka解決方案:

# 生產者配置

producer = KafkaProducer(bootstrap_servers=['localhost:9092'],acks='all', # 等待所有副本確認retries=3,batch_size=16384,linger_ms=1,buffer_memory=33554432

)

問題2:消息重復

解決方案:

# 冪等性處理

def process_message_idempotent(message_id, message_data):# 檢查消息是否已處理if redis_client.exists(f"processed:{message_id}"):return "already_processed"try:# 處理消息result = process_business_logic(message_data)# 標記為已處理redis_client.setex(f"processed:{message_id}", 3600, "true")return resultexcept Exception as e:# 處理失敗,不標記為已處理raise e

問題3:消息堆積

解決方案:

# 動態擴容消費者

class AutoScaleConsumer:def __init__(self, topic, min_consumers=1, max_consumers=10):self.topic = topicself.min_consumers = min_consumersself.max_consumers = max_consumersself.consumers = []def scale_consumers(self, queue_size):target_consumers = min(self.max_consumers,max(self.min_consumers, queue_size // 1000))current_consumers = len(self.consumers)if target_consumers > current_consumers:# 擴容for _ in range(target_consumers - current_consumers):self.start_consumer()elif target_consumers < current_consumers:# 縮容for _ in range(current_consumers - target_consumers):self.stop_consumer()def start_consumer(self):# 啟動新的消費者實例passdef stop_consumer(self):# 停止消費者實例pass

總結

消息隊列就像是現代分布式系統的"神經系統",負責各個組件之間的信息傳遞。通過本文的學習,我們掌握了:

- 消息隊列的核心概念:理解生產者-消費者模式和異步通信的優勢

- RabbitMQ部署與管理:從單機到集群的完整部署方案

- Kafka部署與管理:高吞吐量流處理平臺的配置與優化

- 技術選型指導:根據業務需求選擇合適的消息隊列

- 生產環境最佳實踐:性能優化、監控告警、安全配置

- 常見問題解決:消息丟失、重復、堆積等問題的處理方案

在實際應用中,消息隊列的選擇和部署需要考慮多個因素:業務規模、性能要求、團隊技術棧、運維能力等。沒有最好的技術,只有最適合的方案。

記住,好的消息隊列架構不是一次性設計完成的,而是在實踐中不斷演進和優化的。從簡單開始,逐步完善,在實戰中積累經驗,才能真正掌握消息隊列的精髓。

參考資料

- RabbitMQ官方文檔

- Apache Kafka官方文檔

- 消息隊列設計模式

- 分布式系統消息傳遞

- 高可用消息隊列架構

)

)

)