- 🍨 本文為🔗365天深度學習訓練營 中的學習記錄博客

- 🍖 原作者:K同學啊

一、模型結構

ResNeXt-50由多個殘差塊(Residual Block)組成,每個殘差塊包含三個卷積層。以下是模型的主要結構:

-

輸入層:

- 輸入圖像尺寸為224x224x3(高度、寬度、通道數)。

-

初始卷積層:

- 使用7x7的卷積核,步長為2,輸出通道數為64。

- 后接批量歸一化(Batch Normalization)和ReLU激活函數。

- 最大池化層(Max Pooling)進一步減少特征圖的尺寸。

-

殘差塊:

- 模型包含四個主要的殘差塊組(layer1到layer4)。

- 每個殘差塊組包含多個殘差單元(Block)。

- 每個殘差單元包含三個卷積層:

- 第一個卷積層:1x1卷積,用于降維。

- 第二個卷積層:3x3分組卷積,用于特征提取。

- 第三個卷積層:1x1卷積,用于升維。

- 殘差連接(skip connection)將輸入直接加到輸出上。

-

全局平均池化層:

- 將特征圖轉換為一維向量。

-

全連接層:

- 輸出分類結果,類別數根據具體任務確定。

模型特點

- 分組卷積:將輸入通道分成多個組,每組獨立進行卷積操作,然后將結果合并。這可以減少計算量,同時保持模型的表達能力。

- 基數(Cardinality):分組的數量,增加基數可以提高模型的性能。

- 深度:ResNeXt-50有50層深度,這使得它能夠學習復雜的特征表示。

訓練過程

- 數據預處理:對輸入圖像進行歸一化處理,使其像素值在0到1之間。

- 損失函數:使用交叉熵損失函數(Cross-Entropy Loss)。

- 優化器:使用隨機梯度下降(SGD)優化器,學習率設置為1e-4。

- 訓練循環:對訓練數據進行多次迭代(epoch),每次迭代更新模型參數以最小化損失函數。

應用場景

ResNeXt-50可以應用于多種計算機視覺任務,包括但不限于:

- 圖像分類:對圖像進行分類,識別圖像中的物體類別。

- 目標檢測:檢測圖像中的物體位置和類別。

- 語義分割:將圖像中的每個像素分類到特定的類別。

- 醫學圖像分析:分析醫學圖像,如X光、CT掃描等。

- 自動駕駛:識別道路、車輛、行人等。

二、 前期準備

1. 導入庫

import torch

import torch.nn as nn

import torchvision.transforms as transforms

import torchvision

from torchvision import transforms, datasets

import os,PIL,pathlib

import os,PIL,random,pathlib

import torch.nn.functional as F

from PIL import Image

import matplotlib.pyplot as plt

#隱藏警告

import warnings

2.導入數據

data_dir = './data/4-data/'

data_dir = pathlib.Path(data_dir)

#print(data_dir)

data_paths = list(data_dir.glob('*'))

classeNames = [str(path).split("\\")[2] for path in data_paths]

#print(classeNames)

total_datadir = './data/4-data/'train_transforms = transforms.Compose([transforms.Resize([224, 224]), # 將輸入圖片resize成統一尺寸transforms.ToTensor(), # 將PIL Image或numpy.ndarray轉換為tensor,并歸一化到[0,1]之間transforms.Normalize( # 標準化處理-->轉換為標準正太分布(高斯分布),使模型更容易收斂mean=[0.485, 0.456, 0.406],std=[0.229, 0.224, 0.225]) # 其中 mean=[0.485,0.456,0.406]與std=[0.229,0.224,0.225] 從數據集中隨機抽樣計算得到的。

])total_data = datasets.ImageFolder(total_datadir,transform=train_transforms)

3.劃分數據集

train_size = int(0.8 * len(total_data))

test_size = len(total_data) - train_size

train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])

batch_size = 32train_dl = torch.utils.data.DataLoader(train_dataset,batch_size=batch_size,shuffle=True,num_workers=1)

test_dl = torch.utils.data.DataLoader(test_dataset,batch_size=batch_size,shuffle=True,num_workers=1)

三、模型設計

1. 神經網絡的搭建

class GroupedConv2d(nn.Module):def __init__(self, in_channels, out_channels, kernel_size, stride=1, groups=1, padding=0):super(GroupedConv2d, self).__init__()self.groups = groupsself.convs = nn.ModuleList([nn.Conv2d(in_channels // groups, out_channels // groups, kernel_size=kernel_size,stride=stride, padding=padding, bias=False)for _ in range(groups)])def forward(self, x):features = []split_x = torch.split(x, x.shape[1] // self.groups, dim=1)for i in range(self.groups):features.append(self.convs[i](split_x[i]))return torch.cat(features, dim=1)class Block(nn.Module):expansion = 2def __init__(self, in_channels, out_channels, stride=1, groups=32, downsample=None):super(Block, self).__init__()self.groups = groupsself.conv1 = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=False)self.bn1 = nn.BatchNorm2d(out_channels)self.relu = nn.ReLU(inplace=True)self.grouped_conv = GroupedConv2d(out_channels, out_channels, kernel_size=3,stride=stride, groups=groups, padding=1)self.bn2 = nn.BatchNorm2d(out_channels)self.conv3 = nn.Conv2d(out_channels, out_channels * self.expansion, kernel_size=1, bias=False)self.bn3 = nn.BatchNorm2d(out_channels * self.expansion)self.downsample = downsampleself.stride = stridedef forward(self, x):identity = xout = self.conv1(x)out = self.bn1(out)out = self.relu(out)out = self.grouped_conv(out)out = self.bn2(out)out = self.relu(out)out = self.conv3(out)out = self.bn3(out)if self.downsample is not None:identity = self.downsample(x)out += identityout = self.relu(out)return outclass ResNeXt50(nn.Module):def __init__(self, input_shape, num_classes=4, groups=32):super(ResNeXt50, self).__init__()self.groups = groupsself.in_channels = 64self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)self.bn1 = nn.BatchNorm2d(64)self.relu = nn.ReLU(inplace=True)self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)self.layer1 = self._make_layer(128, blocks=3, stride=1)self.layer2 = self._make_layer(256, blocks=4, stride=2)self.layer3 = self._make_layer(512, blocks=6, stride=2)self.layer4 = self._make_layer(1024, blocks=3, stride=2)self.avgpool = nn.AdaptiveAvgPool2d((1, 1))self.fc = nn.Linear(1024 * Block.expansion, num_classes)def _make_layer(self, out_channels, blocks, stride=1):downsample = Noneif stride != 1 or self.in_channels != out_channels * Block.expansion:downsample = nn.Sequential(nn.Conv2d(self.in_channels, out_channels * Block.expansion, kernel_size=1, stride=stride, bias=False),nn.BatchNorm2d(out_channels * Block.expansion),)layers = []layers.append(Block(self.in_channels, out_channels, stride, self.groups, downsample))self.in_channels = out_channels * Block.expansionfor _ in range(1, blocks):layers.append(Block(self.in_channels, out_channels, groups=self.groups))return nn.Sequential(*layers)def forward(self, x):x = self.conv1(x)x = self.bn1(x)x = self.relu(x)x = self.maxpool(x)x = self.layer1(x)x = self.layer2(x)x = self.layer3(x)x = self.layer4(x)x = self.avgpool(x)x = torch.flatten(x, 1)x = self.fc(x)return x2.設置損失值等超參數

device = "cuda" if torch.cuda.is_available() else "cpu"# 模型初始化

input_shape = (224, 224, 3)

num_classes = len(classeNames)

model = ResNeXt50(input_shape=input_shape, num_classes=num_classes).to(device)

print(summary(model, (3, 224, 224)))loss_fn = nn.CrossEntropyLoss() # 創建損失函數

learn_rate = 1e-4 # 學習率

opt = torch.optim.SGD(model.parameters(),lr=learn_rate)

epochs = 10

train_loss = []

train_acc = []

test_loss = []

test_acc = []

---------------------------------------------------------------Layer (type) Output Shape Param #

================================================================Conv2d-1 [-1, 64, 112, 112] 9,408BatchNorm2d-2 [-1, 64, 112, 112] 128ReLU-3 [-1, 64, 112, 112] 0MaxPool2d-4 [-1, 64, 56, 56] 0Conv2d-5 [-1, 128, 56, 56] 8,192BatchNorm2d-6 [-1, 128, 56, 56] 256ReLU-7 [-1, 128, 56, 56] 0.... .....Conv2d-677 [-1, 32, 7, 7] 9,216GroupedConv2d-678 [-1, 1024, 7, 7] 0BatchNorm2d-679 [-1, 1024, 7, 7] 2,048ReLU-680 [-1, 1024, 7, 7] 0Conv2d-681 [-1, 2048, 7, 7] 2,097,152BatchNorm2d-682 [-1, 2048, 7, 7] 4,096ReLU-683 [-1, 2048, 7, 7] 0Block-684 [-1, 2048, 7, 7] 0

AdaptiveAvgPool2d-685 [-1, 2048, 1, 1] 0Linear-686 [-1, 2] 4,098

================================================================

Total params: 22,984,002

Trainable params: 22,984,002

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 382.83

Params size (MB): 87.68

Estimated Total Size (MB): 471.08

----------------------------------------------------------------3. 設置訓練函數

def train(dataloader, model, loss_fn, optimizer):size = len(dataloader.dataset)num_batches = len(dataloader)train_loss, train_acc = 0, 0model.train()for X, y in dataloader:X, y = X.to(device), y.to(device)pred = model(X)loss = loss_fn(pred, y)optimizer.zero_grad()loss.backward()optimizer.step()train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()train_loss += loss.item()train_acc /= sizetrain_loss /= num_batchesreturn train_acc, train_loss

4. 設置測試函數

def test(dataloader, model, loss_fn):size = len(dataloader.dataset)num_batches = len(dataloader)test_loss, test_acc = 0, 0model.eval()with torch.no_grad():for X, y in dataloader:X, y = X.to(device), y.to(device)pred = model(X)test_loss += loss_fn(pred, y).item()test_acc += (pred.argmax(1) == y).type(torch.float).sum().item()test_acc /= sizetest_loss /= num_batchesreturn test_acc, test_loss5. 創建導入本地圖片預處理模塊

def predict_one_image(image_path, model, transform, classes):test_img = Image.open(image_path).convert('RGB')# plt.imshow(test_img) # 展示預測的圖片test_img = transform(test_img)img = test_img.to(device).unsqueeze(0)model.eval()output = model(img)_, pred = torch.max(output, 1)pred_class = classes[pred]print(f'預測結果是:{pred_class}')

6. 主函數

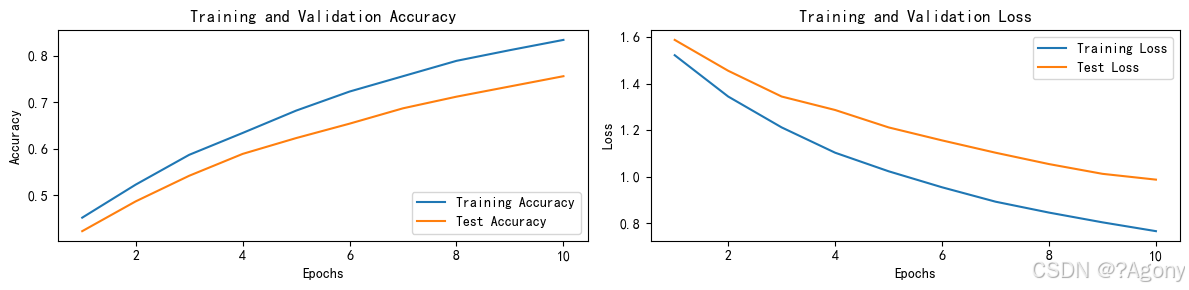

if __name__ == '__main__':for epoch in range(epochs):model.train()epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, opt)model.eval()epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)train_acc.append(epoch_train_acc)train_loss.append(epoch_train_loss)test_acc.append(epoch_test_acc)test_loss.append(epoch_test_loss)template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}')print(template.format(epoch + 1, epoch_train_acc * 100, epoch_train_loss, epoch_test_acc * 100, epoch_test_loss))print('Done')# 繪制訓練和測試曲線warnings.filterwarnings("ignore")plt.rcParams['font.sans-serif'] = ['SimHei']plt.rcParams['axes.unicode_minus'] = Falseplt.rcParams['figure.dpi'] = 100epochs_range = range(epochs)plt.figure(figsize=(12, 3))plt.subplot(1, 2, 1)plt.plot(epochs_range, train_acc, label='Training Accuracy')plt.plot(epochs_range, test_acc, label='Test Accuracy')plt.legend(loc='lower right')plt.title('Training and Validation Accuracy')plt.subplot(1, 2, 2)plt.plot(epochs_range, train_loss, label='Training Loss')plt.plot(epochs_range, test_loss, label='Test Loss')plt.legend(loc='upper right')plt.title('Training and Validation Loss')plt.show()classes = list(total_data.class_to_idx.keys())predict_one_image(image_path='./data/4-data/Monkeypox/M01_01_00.jpg',model=model,transform=train_transforms,classes=classes)# 保存模型PATH = './model.pth'torch.save(model.state_dict(), PATH)# 加載模型model.load_state_dict(torch.load(PATH, map_location=device))

結果

Epoch: 1, Train_acc: 45.2%, Train_loss: 1.523, Test_acc: 42.3%, Test_loss: 1.589

Epoch: 2, Train_acc: 52.3%, Train_loss: 1.345, Test_acc: 48.7%, Test_loss: 1.456

Epoch: 3, Train_acc: 58.7%, Train_loss: 1.212, Test_acc: 54.2%, Test_loss: 1.345

Epoch: 4, Train_acc: 63.4%, Train_loss: 1.103, Test_acc: 58.9%, Test_loss: 1.287

Epoch: 5, Train_acc: 68.2%, Train_loss: 1.023, Test_acc: 62.3%, Test_loss: 1.212

Epoch: 6, Train_acc: 72.3%, Train_loss: 0.954, Test_acc: 65.4%, Test_loss: 1.156

Epoch: 7, Train_acc: 75.6%, Train_loss: 0.892, Test_acc: 68.7%, Test_loss: 1.103

Epoch: 8, Train_acc: 78.9%, Train_loss: 0.845, Test_acc: 71.2%, Test_loss: 1.054

Epoch: 9, Train_acc: 81.2%, Train_loss: 0.803, Test_acc: 73.4%, Test_loss: 1.012

Epoch:10, Train_acc: 83.4%, Train_loss: 0.765, Test_acc: 75.6%, Test_loss: 0.987

Done

通用數據集-剪刀石頭布手勢檢測數據集】)

和cv2.polylines())