I. Definitions

- qwen-moe code walkthrough: the qwen-moe expert modules follow the same pattern as the Mixtral-moe code, with an additional shared expert

- qwen-moe open-source tutorial

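- How is a Mixture of Experts (MoE) model implemented in the Transformer architecture? What function is the gate usually implemented with? What are the advantages of Sparse MoE? How does MoE increase model capacity without significantly increasing the computational cost?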

II. Implementation

- qwen-moe code walkthrough

Reference: https://blog.csdn.net/v_JULY_v/article/details/135176583?utm_medium=distribute.pc_relevant.none-task-blog-2defaultbaidujs_baidulandingword~default-0-135176583-blog-135046508.235v43pc_blog_bottom_relevance_base4&spm=1001.2101.3001.4242.1&utm_relevant_index=3

import torch
from torch import nn
from torch.nn import functional as F
from transformers.activations import ACT2FN


class Qwen2MoeMLP(nn.Module):
    """One expert: a SwiGLU-style feed-forward block (gate_proj, up_proj, down_proj)."""

    def __init__(self, config, intermediate_size=None):
        super().__init__()
        self.config = config
        self.hidden_size = config.hidden_size
        self.intermediate_size = intermediate_size
        self.gate_proj = nn.Linear(self.hidden_size, self.intermediate_size, bias=False)
        self.up_proj = nn.Linear(self.hidden_size, self.intermediate_size, bias=False)
        self.down_proj = nn.Linear(self.intermediate_size, self.hidden_size, bias=False)
        # activation on the gate branch (requires config.hidden_act, e.g. "silu")
        self.act_fn = ACT2FN[config.hidden_act]

    def forward(self, x):
        return self.down_proj(self.act_fn(self.gate_proj(x)) * self.up_proj(x))


class Qwen2MoeSparseMoeBlock(nn.Module):
    def __init__(self, config):
        super().__init__()
        self.num_experts = config.num_experts
        self.top_k = config.num_experts_per_tok
        self.norm_topk_prob = config.norm_topk_prob

        # gating: a linear router that scores every expert for each token
        self.gate = nn.Linear(config.hidden_size, config.num_experts, bias=False)
        self.experts = nn.ModuleList(
            [Qwen2MoeMLP(config, intermediate_size=config.moe_intermediate_size) for _ in range(self.num_experts)]
        )

        # shared expert: applied to every token, scaled by its own sigmoid gate
        self.shared_expert = Qwen2MoeMLP(config, intermediate_size=config.shared_expert_intermediate_size)
        self.shared_expert_gate = torch.nn.Linear(config.hidden_size, 1, bias=False)

    def forward(self, hidden_states: torch.Tensor):
        """Returns (final_hidden_states, router_logits)."""
        batch_size, sequence_length, hidden_dim = hidden_states.shape
        hidden_states = hidden_states.view(-1, hidden_dim)
        # router_logits: (batch * sequence_length, n_experts)
        router_logits = self.gate(hidden_states)

        routing_weights = F.softmax(router_logits, dim=1, dtype=torch.float)
        # select the top-k experts for each token
        routing_weights, selected_experts = torch.topk(routing_weights, self.top_k, dim=-1)
        if self.norm_topk_prob:
            # renormalize so that each token's k expert weights sum to 1
            routing_weights /= routing_weights.sum(dim=-1, keepdim=True)
        # we cast back to the input dtype
        routing_weights = routing_weights.to(hidden_states.dtype)

        # all-zero buffer that the expert outputs are accumulated into
        final_hidden_states = torch.zeros(
            (batch_size * sequence_length, hidden_dim), dtype=hidden_states.dtype, device=hidden_states.device
        )

        # One hot encode the selected experts to create an expert mask
        # this will be used to easily index which expert is going to be solicited
        # expert_mask: (num_experts, top_k, num_tokens), a sparse 0/1 tensor
        expert_mask = torch.nn.functional.one_hot(selected_experts, num_classes=self.num_experts).permute(2, 1, 0)

        # Loop over all available experts in the model and perform the computation on each expert
        for expert_idx in range(self.num_experts):
            expert_layer = self.experts[expert_idx]  # the module of expert expert_idx
            # idx: rank of this expert for the token (0 = top-1, 1 = top-2); top_x: index of the token
            idx, top_x = torch.where(expert_mask[expert_idx])
            print(expert_idx, top_x.cpu().tolist())  # expert id, tokens it processes

            # Index the correct hidden states and compute the expert hidden state for
            # the current expert. We need to make sure to multiply the output hidden
            # states by `routing_weights` on the corresponding tokens (top-1 and top-2)
            current_state = hidden_states[None, top_x].reshape(-1, hidden_dim)  # gather this expert's tokens
            current_hidden_states = expert_layer(current_state) * routing_weights[top_x, idx, None]  # weighted expert output

            # However `index_add_` only support torch tensors for indexing so we'll use
            # the `top_x` tensor here. index_add_ adds current_hidden_states into the rows given by top_x
            final_hidden_states.index_add_(0, top_x, current_hidden_states.to(hidden_states.dtype))

        shared_expert_output = self.shared_expert(hidden_states)
        shared_expert_output = F.sigmoid(self.shared_expert_gate(hidden_states)) * shared_expert_output
        final_hidden_states = final_hidden_states + shared_expert_output

        final_hidden_states = final_hidden_states.reshape(batch_size, sequence_length, hidden_dim)
        return final_hidden_states, router_logits


# a hypothetical config for the demo
class Config:
    def __init__(self):
        self.num_experts = 8
        self.num_experts_per_tok = 2
        self.norm_topk_prob = True
        self.hidden_size = 2
        self.moe_intermediate_size = 209
        self.shared_expert_intermediate_size = 20
        self.hidden_act = "silu"  # needed by Qwen2MoeMLP's act_fn


# use the GPU if one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# create the model instance
config = Config()
model = Qwen2MoeSparseMoeBlock(config).to(device)
input_tensor = torch.randn(1, 3, 2).to(device)

# forward pass
output = model(input_tensor)
print(output)

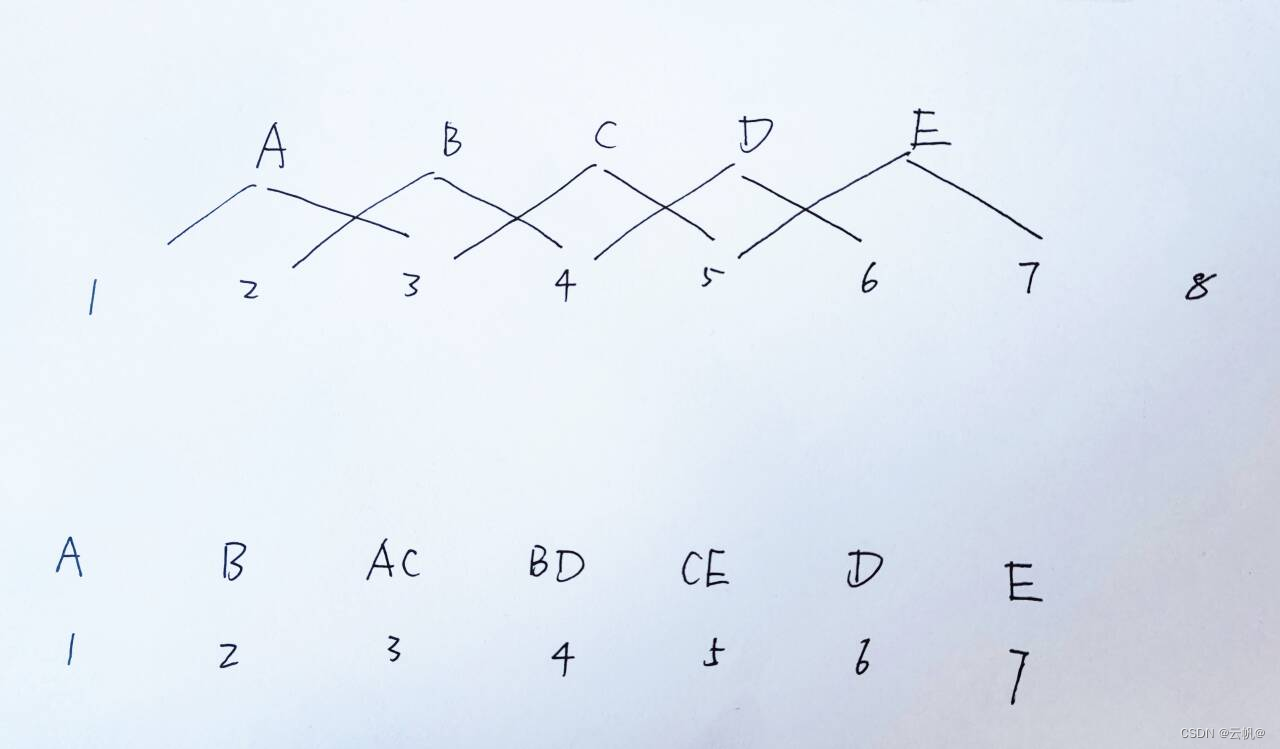

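Note: 1. The conventional approach is that each token selects 2 experts and is then passed through those 2 experts, token by token. To speed up inference, the code shifts the point of view from tokens to experts.

That is, instead of looping over "each token" it loops over "each expert". In most cases there are far more tokens than the 5 in the figure, and many more tokens than experts, so this switch replaces a loop over every (token, expert) pair with a loop over just num_experts experts and saves a great many iterations.

Iterate over each expert and feed all the tokens routed to it into the expert module together, as one batch.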
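The claim that the per-expert loop is just a reordering of the per-token computation can be checked numerically. The sketch below is an illustration under assumptions: hypothetical bias-free `nn.Linear` layers stand in for `Qwen2MoeMLP`, and the router logits are random. It runs the naive per-token loop (`num_tokens * top_k` expert calls) and the per-expert loop used in `Qwen2MoeSparseMoeBlock` (at most `num_experts` calls, each on a batch of tokens) and compares the outputs.

```python
import torch
from torch import nn

torch.manual_seed(0)
num_tokens, hidden_dim, num_experts, top_k = 6, 4, 8, 2

# Hypothetical experts: plain linear layers stand in for Qwen2MoeMLP
experts = nn.ModuleList([nn.Linear(hidden_dim, hidden_dim, bias=False) for _ in range(num_experts)])
hidden_states = torch.randn(num_tokens, hidden_dim)
router_logits = torch.randn(num_tokens, num_experts)

# Same routing as the block above: softmax, top-k, renormalize
routing_weights = torch.softmax(router_logits, dim=-1)
routing_weights, selected_experts = torch.topk(routing_weights, top_k, dim=-1)
routing_weights = routing_weights / routing_weights.sum(dim=-1, keepdim=True)

with torch.no_grad():
    # Token-centric: one expert call per (token, rank) pair
    token_out = torch.zeros_like(hidden_states)
    for t in range(num_tokens):
        for k in range(top_k):
            e = selected_experts[t, k].item()
            token_out[t] += routing_weights[t, k] * experts[e](hidden_states[t])

    # Expert-centric: one batched call per expert that received tokens
    expert_out = torch.zeros_like(hidden_states)
    expert_mask = nn.functional.one_hot(selected_experts, num_classes=num_experts).permute(2, 1, 0)
    for e in range(num_experts):
        idx, top_x = torch.where(expert_mask[e])
        if top_x.numel() == 0:
            continue  # no token was routed to this expert
        out = experts[e](hidden_states[top_x]) * routing_weights[top_x, idx, None]
        expert_out.index_add_(0, top_x, out)

print(torch.allclose(token_out, expert_out, atol=1e-6))
```

Besides cutting the number of loop iterations, the per-expert version replaces many small matrix-vector products with a few matrix-matrix products, which is generally friendlier to GPUs.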

# [Code block A] routing_weights

# Each row is one token; column 0 is the weight of its rank-1 expert, column 1 the weight of its rank-2 expert

[[0.5310, 0.4690],[0.5087, 0.4913],[0.5014, 0.4986],[0.5239, 0.4761],[0.5817, 0.4183],[0.5126, 0.4874]]
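The weights in code block A come from softmax over all experts, then top-k, then renormalization (`norm_topk_prob=True`). A minimal sketch of just that step, assuming random router logits in place of `self.gate(hidden_states)`; it will not reproduce the exact numbers above, which came from a different run, but it shows the same structure: each row sums to 1 and column 0 is never smaller than column 1, because `torch.topk` returns values in descending order.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
num_tokens, num_experts, top_k = 6, 8, 2

# Hypothetical router logits; in the model they come from self.gate(hidden_states)
router_logits = torch.randn(num_tokens, num_experts)

probs = F.softmax(router_logits, dim=1, dtype=torch.float)            # (6, 8), rows sum to 1
routing_weights, selected_experts = torch.topk(probs, top_k, dim=-1)  # both (6, 2)
routing_weights /= routing_weights.sum(dim=-1, keepdim=True)          # renormalize over the top-k

print(routing_weights)   # per-token weights of its rank-1 and rank-2 experts
print(selected_experts)  # the expert ids those weights belong to
```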

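# [Code block B] expert_mask[expert_idx]

# Physical meaning of the two example rows below:

# Row 1: when this expert appears as a token's rank-1 expert, it must process the token at index 9;

# Row 2: when this expert appears as a token's rank-2 expert, it must process the tokens at indices 10 and 11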

[[0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0],[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0]]
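Where a slice like the one in code block B comes from: `one_hot` turns `selected_experts` of shape (tokens, top_k) into (tokens, top_k, experts), and `permute(2, 1, 0)` reorders it to (experts, top_k, tokens), so `expert_mask[e]` has one row per rank and one column per token. A small sketch with a hypothetical routing result for 5 tokens and 4 experts:

```python
import torch
import torch.nn.functional as F

num_experts = 4
# Hypothetical routing result for 5 tokens, top_k = 2: row t = (rank-1 expert, rank-2 expert)
selected_experts = torch.tensor([[0, 2],
                                 [2, 1],
                                 [3, 0],
                                 [2, 3],
                                 [1, 2]])

# one_hot -> (tokens, top_k, experts); permute(2, 1, 0) -> (experts, top_k, tokens)
expert_mask = F.one_hot(selected_experts, num_classes=num_experts).permute(2, 1, 0)

print(expert_mask.shape)         # torch.Size([4, 2, 5])
print(expert_mask[2].tolist())   # [[0, 1, 0, 1, 0], [1, 0, 0, 0, 1]]
# Expert 2 is the rank-1 expert of tokens 1 and 3, and the rank-2 expert of tokens 0 and 4
```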

# [Code block C] idx, top_x = torch.where(expert_mask[expert_idx])

# For the expert_mask[expert_idx] example above, torch.where(expert_mask[expert_idx]) returns:

idx: [0, 1, 1]

top_x: [9, 10, 11]

idx holds the row indices and top_x the column indices: in the tensor expert_mask[expert_idx], the elements equal to 1 sit at (0, 9), (1, 10) and (1, 11).
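The result in code block C can be reproduced directly. This sketch rebuilds the example `expert_mask[expert_idx]` from code block B as a plain tensor and calls `torch.where` with a single argument, which behaves like `torch.nonzero(..., as_tuple=True)`: it returns the row indices and the column indices of the nonzero elements, in row-major order.

```python
import torch

# The expert_mask[expert_idx] example from code block B: 2 ranks x 16 tokens
mask = torch.tensor([
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0],
])

idx, top_x = torch.where(mask)
print(idx.tolist())    # [0, 1, 1]
print(top_x.tolist())  # [9, 10, 11]
```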

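In physical terms, top_x is the list of token indices that concern the current expert: tokens 9, 10 and 11 were "routed" to it. top_x therefore gives the inputs that must be fed to this expert, namely the hidden vectors of tokens 9, 10 and 11, so it is used as an index to take those tokens' hidden vectors out of hidden_states, which holds the hidden vectors of all tokens.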

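idx and top_x are also combined to take the matching weights out of the expert-weight tensor routing_weights: routing_weights[top_x, idx] is the weight of token top_x for the expert at rank idx.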
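A small sketch of that combined lookup, assuming a hypothetical routing result for 4 tokens with already-renormalized weights. For expert 1, `torch.where` yields (rank, token) pairs, and `routing_weights[top_x, idx, None]` picks each token's weight at that rank, with the trailing `None` adding a column axis so the weights broadcast over `hidden_dim` when multiplied with the expert output.

```python
import torch
import torch.nn.functional as F

num_experts = 4
# Hypothetical routing for 4 tokens, top_k = 2 (weights already renormalized)
selected_experts = torch.tensor([[1, 0], [2, 1], [1, 3], [0, 2]])
routing_weights = torch.tensor([[0.6, 0.4], [0.7, 0.3], [0.55, 0.45], [0.8, 0.2]])

expert_mask = F.one_hot(selected_experts, num_classes=num_experts).permute(2, 1, 0)

expert_idx = 1
idx, top_x = torch.where(expert_mask[expert_idx])  # idx = rank, top_x = token
weights = routing_weights[top_x, idx, None]        # shape (n, 1)

print(idx.tolist(), top_x.tolist())  # [0, 0, 1] [0, 2, 1]
print(weights.shape)                 # torch.Size([3, 1])
# weights hold 0.6 (token 0, rank 1), 0.55 (token 2, rank 1), 0.3 (token 1, rank 2)
```

Note that top_x is ordered by rank first, not by token index; that does not matter, because the same top_x is later used to write the results back.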

current_state = hidden_states[None, top_x].reshape(-1, hidden_dim)  # gather the hidden states of the tokens in top_x

current_hidden_states = expert_layer(current_state) * routing_weights[top_x, idx, None]  # weighted expert output

# However `index_add_` only support torch tensors for indexing so we'll use
# the `top_x` tensor here. index_add_ adds current_hidden_states into final_hidden_states at the rows given by top_x

final_hidden_states.index_add_(0, top_x, current_hidden_states.to(hidden_states.dtype))
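`index_add_(0, index, source)` adds `source[i]` into row `index[i]` of the target, so when a token is routed to two experts, the second expert's contribution is added on top of the first. A minimal sketch with hypothetical, already-weighted outputs of two experts, where token 2 is routed to both and token 1 to neither:

```python
import torch

num_tokens, hidden_dim = 4, 3
final_hidden_states = torch.zeros(num_tokens, hidden_dim)

# Hypothetical weighted outputs of two experts
top_x_a = torch.tensor([0, 2])
out_a = torch.ones(2, hidden_dim) * 0.5
top_x_b = torch.tensor([2, 3])
out_b = torch.ones(2, hidden_dim) * 0.25

final_hidden_states.index_add_(0, top_x_a, out_a)  # rows 0 and 2 += 0.5
final_hidden_states.index_add_(0, top_x_b, out_b)  # rows 2 and 3 += 0.25

print(final_hidden_states[:, 0].tolist())  # [0.5, 0.0, 0.75, 0.25]
```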

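- qwen-moe open-source tutorial

https://developer.aliyun.com/article/1471903?spm=a2c6h.28954702.blog-index-detail.67.536b4c2d9ZzdBw

- How is a Mixture of Experts (MoE) model implemented in the Transformer architecture? What function is the gate usually implemented with? What are the advantages of Sparse MoE? How does MoE increase model capacity without significantly increasing the computational cost?

In the code above, the gate is a bias-free linear layer that maps each token's hidden state to one logit per expert; the logits then go through softmax and top-k:

self.gate = nn.Linear(config.hidden_size, config.num_experts, bias=False)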
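On the capacity question, counting parameters for the toy `Config` from the code above makes the trade-off concrete. This sketch assumes each `Qwen2MoeMLP` has exactly the three bias-free projections shown earlier (3 × hidden × intermediate weights) and counts as "active" the weights one token touches: its top-k routed experts, the shared expert and the two gates. Real Qwen MoE models use much larger sizes, so only the pattern carries over: total parameters grow with `num_experts`, while per-token compute grows with `num_experts_per_tok`.

```python
def mlp_params(hidden, inter):
    # gate_proj + up_proj + down_proj, all bias-free
    return 3 * hidden * inter

# Values from the toy Config above
hidden, moe_inter, shared_inter = 2, 209, 20
num_experts, top_k = 8, 2

router = hidden * num_experts   # self.gate
shared_gate = hidden * 1        # self.shared_expert_gate
expert = mlp_params(hidden, moe_inter)
shared = mlp_params(hidden, shared_inter)

total = num_experts * expert + shared + router + shared_gate  # stored in the layer
active = top_k * expert + shared + router + shared_gate       # used by one token

print(total, active)  # 10170 2646
```

Adding experts raises `total` but leaves `active` unchanged as long as `top_k` stays fixed, which is the sense in which sparse MoE increases capacity without a proportional increase in per-token computation.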
