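Table of contents

- 1. Consumption modes

- 1.1. Create the topic my_topic1 with 3 partitions and 1 replica

- 1.2. Create the producer KafkaProducer1

- 1.2. Create the consumers

- 1.2.1. Create the consumer KafkaConsumer1Group1 in group my_group1

- 1.2.2. Create the consumer KafkaConsumer2Group1 in group my_group1

- 1.2.3. Create the consumer KafkaConsumer3Group1 in group my_group1

- 1.2.4. Create the consumer KafkaConsumer1Group2 in group my_group2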

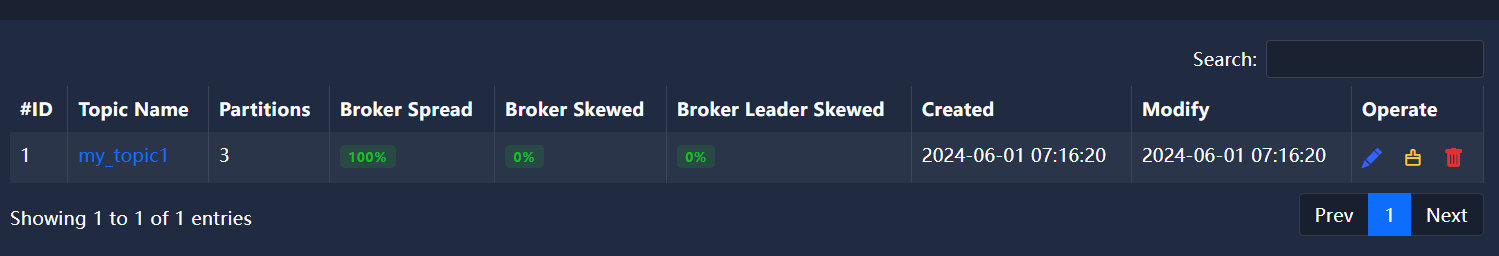

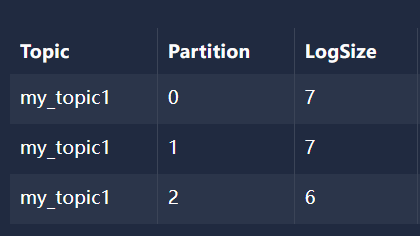

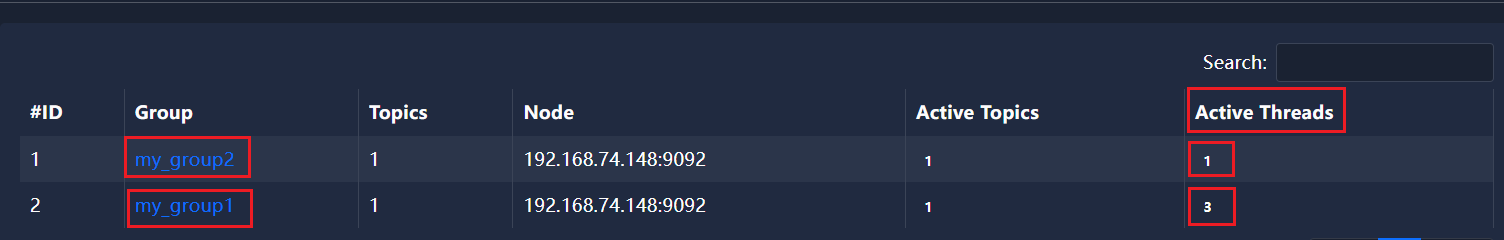

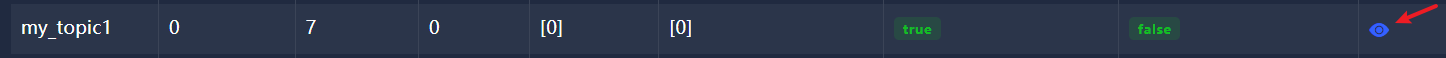

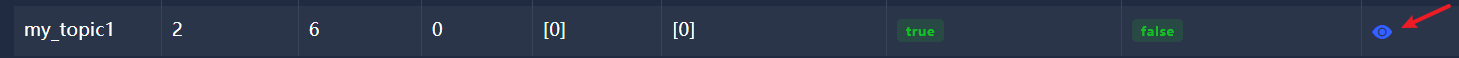

- 1.3. Eagle for Apache Kafka

- 1.3.1. View the data in partition 0

- 1.3.2. View the data in partition 1

- 1.3.3. View the data in partition 2

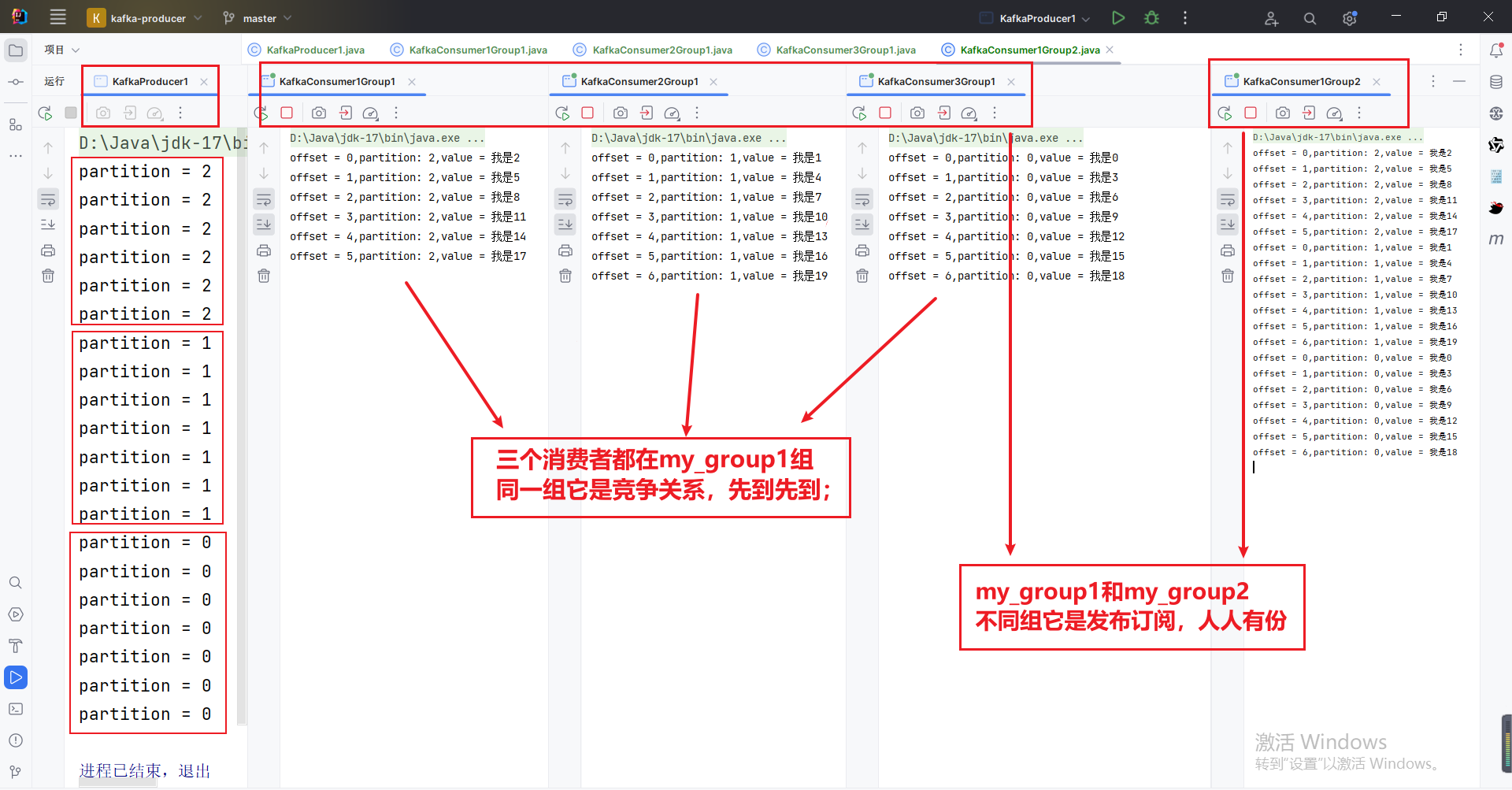

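1. Consumption modes

- Point-to-point: when a single group consumes messages, each message is consumed exactly once, by only one consumer in that group. This avoids duplicate consumption.

- Publish/subscribe: when several groups consume messages, every group consumes each message once.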

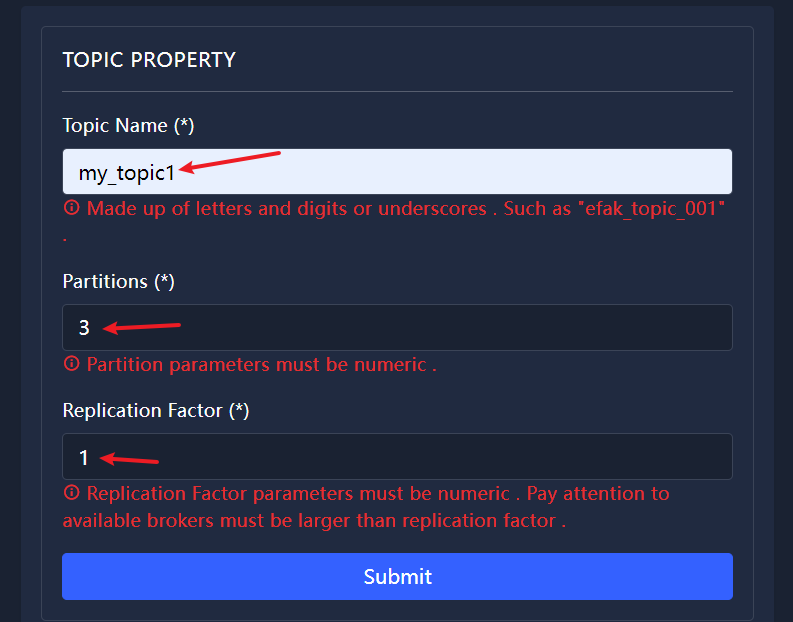
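The two modes can be illustrated without a broker. The sketch below is a plain-Java simulation, not Kafka code: the class `ConsumptionModesSketch`, the method `deliver`, and the round-robin choice of a member inside a group are all assumptions made for illustration. It only shows the rule stated above: every group sees each message once, and within a group exactly one member receives it.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ConsumptionModesSketch {

    /**
     * Delivers every message once per group. Inside a group the message goes to
     * exactly one member (chosen round-robin here, purely for illustration).
     * Each delivery is recorded as "group/member <- message".
     */
    public static List<String> deliver(Map<String, List<String>> groups, List<String> messages) {
        List<String> deliveries = new ArrayList<>();
        for (int i = 0; i < messages.size(); i++) {
            for (Map.Entry<String, List<String>> group : groups.entrySet()) {
                List<String> members = group.getValue();
                String member = members.get(i % members.size());
                deliveries.add(group.getKey() + "/" + member + " <- " + messages.get(i));
            }
        }
        return deliveries;
    }

    public static void main(String[] args) {
        // Hypothetical member names; the group names mirror the ones used in this article.
        Map<String, List<String>> groups = new LinkedHashMap<>();
        groups.put("my_group1", Arrays.asList("consumer1", "consumer2", "consumer3"));
        groups.put("my_group2", Arrays.asList("consumer1"));
        deliver(groups, Arrays.asList("m0", "m1", "m2")).forEach(System.out::println);
    }
}
```

With three messages and two groups the simulation records six deliveries: three spread over the members of `my_group1` and three that all land on the single member of `my_group2`.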

1.1. Create the topic my_topic1 with 3 partitions and 1 replica

1.2. Create the producer KafkaProducer1

    package com.atguigu.kafka.producer;

    import org.apache.kafka.clients.producer.*;

    import java.util.Properties;
    import java.util.concurrent.ExecutionException;

    public class KafkaProducer1 {
        /**
         * Demonstrates sending messages to specific partitions of a Kafka topic.
         *
         * @param args command-line arguments (unused)
         */
        public static void main(String[] args) throws ExecutionException, InterruptedException {
            // Producer configuration
            Properties props = new Properties();
            props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.74.148:9092"); // broker address and port
            props.put("acks", "all"); // acknowledgement policy for writes
            props.put("retries", 0); // number of retries when a send fails
            props.put("linger.ms", 1); // how long the send buffer waits before a batch is sent
            props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer"); // key serializer
            props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer"); // value serializer

            // Create the producer instance
            Producer<String, String> producer = new KafkaProducer<>(props);

            // Alternative: asynchronous send that ignores Kafka's response
            // producer.send(new ProducerRecord<String, String>("my_topic3", "hello,1,2,3"));
            // Alternative: a loop that sends many keyed messages
            // for (int i = 0; i < 100; i++)
            //     producer.send(new ProducerRecord<String, String>("my-topic", Integer.toString(i), Integer.toString(i)));

            for (int i = 0; i < 20; i++) {
                // Explicit partition i % 3; the key is the literal string "null"; the value is "我是" ("I am") + i
                producer.send(new ProducerRecord<String, String>("my_topic1", i % 3, "null", "我是" + i), new Callback() {
                    // Called back after the broker has acknowledged the record, or after the send has failed
                    @Override
                    public void onCompletion(RecordMetadata recordMetadata, Exception e) {
                        // A non-null exception means the message did not reach Kafka.
                        // No exception means the send succeeded, although the message is not
                        // necessarily persisted yet (that depends on further producer configuration).
                        if (e != null) {
                            System.err.println("send failed: " + e.getMessage());
                            return;
                        }
                        // RecordMetadata holds the metadata of the successfully sent message
                        System.out.println("partition = " + recordMetadata.partition());
                    }
                });
            }

            // Close the producer instance
            producer.close();
        }
    }

Output:

    partition = 2
    partition = 2
    partition = 2
    partition = 2
    partition = 2
    partition = 2
    partition = 1
    partition = 1
    partition = 1
    partition = 1
    partition = 1
    partition = 1
    partition = 1
    partition = 0
    partition = 0
    partition = 0
    partition = 0
    partition = 0
    partition = 0
    partition = 0
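The producer picks the partition explicitly with `i % 3`, which is why the output shows seven acknowledgements each for partitions 0 and 1 and six for partition 2. The following broker-free sketch reproduces that arithmetic; the class `PartitionSpreadSketch` and the method `spread` are hypothetical names introduced only for this illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class PartitionSpreadSketch {

    /** Groups the message indices 0..count-1 by the explicit partition i % partitions. */
    public static Map<Integer, List<Integer>> spread(int count, int partitions) {
        Map<Integer, List<Integer>> byPartition = new TreeMap<>();
        for (int i = 0; i < count; i++) {
            byPartition.computeIfAbsent(i % partitions, p -> new ArrayList<>()).add(i);
        }
        return byPartition;
    }

    public static void main(String[] args) {
        // 20 messages over 3 partitions, as in KafkaProducer1
        spread(20, 3).forEach((partition, indices) ->
                System.out.println("partition " + partition + " (" + indices.size() + " messages): " + indices));
    }
}
```

The indices listed per partition correspond to the values `我是0`, `我是3`, and so on that the consumers print in the following sections.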

1.2. Create the consumers

1.2.1. Create the consumer KafkaConsumer1Group1 in group my_group1

    package com.atguigu.kafka.consumer;

    import org.apache.kafka.clients.consumer.ConsumerConfig;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    import java.time.Duration;
    import java.util.Arrays;
    import java.util.Properties;

    public class KafkaConsumer1Group1 {
        /**
         * Entry point: creates and runs a Kafka consumer that reads the topic "my_topic1".
         *
         * @param args command-line arguments (unused)
         */
        public static void main(String[] args) {
            // Consumer configuration
            Properties props = new Properties();
            props.setProperty("bootstrap.servers", "192.168.74.148:9092"); // broker address and port
            props.setProperty("group.id", "my_group1"); // consumer group ID
            props.setProperty("enable.auto.commit", "true"); // commit offsets automatically
            props.setProperty("auto.commit.interval.ms", "1000"); // interval between automatic offset commits
            props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // key deserializer
            props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // value deserializer
            props.setProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest"); // start from the earliest offset when the group has no committed offset

            // Create the KafkaConsumer; try-with-resources closes it if the loop exits with an exception
            try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
                // Subscribe to the topic to consume
                consumer.subscribe(Arrays.asList("my_topic1"));

                // Keep consuming
                while (true) {
                    // Pull a batch of messages from the Kafka server
                    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
                    // Iterate over the received records and print them
                    for (ConsumerRecord<String, String> record : records) {
                        System.out.printf("offset = %d,partition: %d,value = %s%n",
                                record.offset(), record.partition(), record.value());
                    }
                }
            }
        }
    }

Output:

    offset = 0,partition: 2,value = 我是2
    offset = 1,partition: 2,value = 我是5
    offset = 2,partition: 2,value = 我是8
    offset = 3,partition: 2,value = 我是11
    offset = 4,partition: 2,value = 我是14
    offset = 5,partition: 2,value = 我是17

1.2.2. Create the consumer KafkaConsumer2Group1 in group my_group1

    package com.atguigu.kafka.consumer;

    import org.apache.kafka.clients.consumer.ConsumerConfig;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    import java.time.Duration;
    import java.util.Arrays;
    import java.util.Properties;

    public class KafkaConsumer2Group1 {
        /**
         * Entry point: creates and runs a Kafka consumer that reads the topic "my_topic1".
         *
         * @param args command-line arguments (unused)
         */
        public static void main(String[] args) {
            // Consumer configuration
            Properties props = new Properties();
            props.setProperty("bootstrap.servers", "192.168.74.148:9092"); // broker address and port
            props.setProperty("group.id", "my_group1"); // consumer group ID
            props.setProperty("enable.auto.commit", "true"); // commit offsets automatically
            props.setProperty("auto.commit.interval.ms", "1000"); // interval between automatic offset commits
            props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // key deserializer
            props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // value deserializer
            props.setProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest"); // start from the earliest offset when the group has no committed offset

            // Create the KafkaConsumer; try-with-resources closes it if the loop exits with an exception
            try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
                // Subscribe to the topic to consume
                consumer.subscribe(Arrays.asList("my_topic1"));

                // Keep consuming
                while (true) {
                    // Pull a batch of messages from the Kafka server
                    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
                    // Iterate over the received records and print them
                    for (ConsumerRecord<String, String> record : records) {
                        System.out.printf("offset = %d,partition: %d,value = %s%n",
                                record.offset(), record.partition(), record.value());
                    }
                }
            }
        }
    }

Output:

    offset = 0,partition: 1,value = 我是1
    offset = 1,partition: 1,value = 我是4
    offset = 2,partition: 1,value = 我是7
    offset = 3,partition: 1,value = 我是10
    offset = 4,partition: 1,value = 我是13
    offset = 5,partition: 1,value = 我是16
    offset = 6,partition: 1,value = 我是19

1.2.3. Create the consumer KafkaConsumer3Group1 in group my_group1

    package com.atguigu.kafka.consumer;

    import org.apache.kafka.clients.consumer.ConsumerConfig;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    import java.time.Duration;
    import java.util.Arrays;
    import java.util.Properties;

    public class KafkaConsumer3Group1 {
        /**
         * Entry point: creates and runs a Kafka consumer that reads the topic "my_topic1".
         *
         * @param args command-line arguments (unused)
         */
        public static void main(String[] args) {
            // Consumer configuration
            Properties props = new Properties();
            props.setProperty("bootstrap.servers", "192.168.74.148:9092"); // broker address and port
            props.setProperty("group.id", "my_group1"); // consumer group ID
            props.setProperty("enable.auto.commit", "true"); // commit offsets automatically
            props.setProperty("auto.commit.interval.ms", "1000"); // interval between automatic offset commits
            props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // key deserializer
            props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // value deserializer
            props.setProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest"); // start from the earliest offset when the group has no committed offset

            // Create the KafkaConsumer; try-with-resources closes it if the loop exits with an exception
            try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
                // Subscribe to the topic to consume
                consumer.subscribe(Arrays.asList("my_topic1"));

                // Keep consuming
                while (true) {
                    // Pull a batch of messages from the Kafka server
                    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
                    // Iterate over the received records and print them
                    for (ConsumerRecord<String, String> record : records) {
                        System.out.printf("offset = %d,partition: %d,value = %s%n",
                                record.offset(), record.partition(), record.value());
                    }
                }
            }
        }
    }

Output:

    offset = 0,partition: 0,value = 我是0
    offset = 1,partition: 0,value = 我是3
    offset = 2,partition: 0,value = 我是6
    offset = 3,partition: 0,value = 我是9
    offset = 4,partition: 0,value = 我是12
    offset = 5,partition: 0,value = 我是15
    offset = 6,partition: 0,value = 我是18

1.2.4. Create the consumer KafkaConsumer1Group2 in group my_group2

    package com.atguigu.kafka.consumer;

    import org.apache.kafka.clients.consumer.ConsumerConfig;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;

    import java.time.Duration;
    import java.util.Arrays;
    import java.util.Properties;

    public class KafkaConsumer1Group2 {
        /**
         * Entry point: creates and runs a Kafka consumer that reads the topic "my_topic1".
         *
         * @param args command-line arguments (unused)
         */
        public static void main(String[] args) {
            // Consumer configuration
            Properties props = new Properties();
            props.setProperty("bootstrap.servers", "192.168.74.148:9092"); // broker address and port
            props.setProperty("group.id", "my_group2"); // consumer group ID
            props.setProperty("enable.auto.commit", "true"); // commit offsets automatically
            props.setProperty("auto.commit.interval.ms", "1000"); // interval between automatic offset commits
            props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // key deserializer
            props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); // value deserializer
            props.setProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest"); // start from the earliest offset when the group has no committed offset

            // Create the KafkaConsumer; try-with-resources closes it if the loop exits with an exception
            try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
                // Subscribe to the topic to consume
                consumer.subscribe(Arrays.asList("my_topic1"));

                // Keep consuming
                while (true) {
                    // Pull a batch of messages from the Kafka server
                    ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(100));
                    // Iterate over the received records and print them
                    for (ConsumerRecord<String, String> record : records) {
                        System.out.printf("offset = %d,partition: %d,value = %s%n",
                                record.offset(), record.partition(), record.value());
                    }
                }
            }
        }
    }

Output:

    offset = 0,partition: 2,value = 我是2
    offset = 1,partition: 2,value = 我是5
    offset = 2,partition: 2,value = 我是8
    offset = 3,partition: 2,value = 我是11
    offset = 4,partition: 2,value = 我是14
    offset = 5,partition: 2,value = 我是17
    offset = 0,partition: 1,value = 我是1
    offset = 1,partition: 1,value = 我是4
    offset = 2,partition: 1,value = 我是7
    offset = 3,partition: 1,value = 我是10
    offset = 4,partition: 1,value = 我是13
    offset = 5,partition: 1,value = 我是16
    offset = 6,partition: 1,value = 我是19
    offset = 0,partition: 0,value = 我是0
    offset = 1,partition: 0,value = 我是3
    offset = 2,partition: 0,value = 我是6
    offset = 3,partition: 0,value = 我是9
    offset = 4,partition: 0,value = 我是12
    offset = 5,partition: 0,value = 我是15
    offset = 6,partition: 0,value = 我是18
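The outputs show that the three members of my_group1 each read one partition, while the single member of my_group2 reads all three. A simplified, range-style split of partitions over the members of one group gives the same counts. This is only a sketch under stated assumptions: `RangeAssignmentSketch`, `assign`, and the member names are hypothetical, and in a real cluster the mapping is decided by the configured partition assignor and the member IDs, so it does not predict which consumer ends up with which partition (in the run above, KafkaConsumer1Group1 happened to receive partition 2).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class RangeAssignmentSketch {

    /**
     * Splits partitions 0..partitionCount-1 into contiguous ranges over the sorted members.
     * The first (partitionCount % members) members receive one extra partition.
     */
    public static Map<String, List<Integer>> assign(List<String> members, int partitionCount) {
        if (members.isEmpty()) {
            throw new IllegalArgumentException("a group needs at least one member");
        }
        List<String> sorted = new ArrayList<>(members);
        Collections.sort(sorted);
        int base = partitionCount / sorted.size();
        int extra = partitionCount % sorted.size();
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        int next = 0;
        for (int m = 0; m < sorted.size(); m++) {
            int take = base + (m < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int k = 0; k < take; k++) {
                owned.add(next++);
            }
            assignment.put(sorted.get(m), owned);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // Three members share three partitions: one partition each
        System.out.println(assign(Arrays.asList("member-a", "member-b", "member-c"), 3));
        // A single member owns every partition
        System.out.println(assign(Arrays.asList("member-a"), 3));
    }
}
```

If a fourth member joined the three-member group in this sketch, it would receive an empty list, since a partition is owned by at most one member of a group at a time.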

1.3. Eagle for Apache Kafka

1.3.1. View the data in partition 0

    [
      [
        {"partition": 0, "offset": 0, "msg": "我是0", "timespan": 1717226677707, "date": "2024-06-01 07:24:37"},
        {"partition": 0, "offset": 1, "msg": "我是3", "timespan": 1717226677720, "date": "2024-06-01 07:24:37"},
        {"partition": 0, "offset": 2, "msg": "我是6", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 0, "offset": 3, "msg": "我是9", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 0, "offset": 4, "msg": "我是12", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 0, "offset": 5, "msg": "我是15", "timespan": 1717226677722, "date": "2024-06-01 07:24:37"},
        {"partition": 0, "offset": 6, "msg": "我是18", "timespan": 1717226677722, "date": "2024-06-01 07:24:37"}
      ]
    ]

1.3.2. View the data in partition 1

    [
      [
        {"partition": 1, "offset": 0, "msg": "我是1", "timespan": 1717226677720, "date": "2024-06-01 07:24:37"},
        {"partition": 1, "offset": 1, "msg": "我是4", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 1, "offset": 2, "msg": "我是7", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 1, "offset": 3, "msg": "我是10", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 1, "offset": 4, "msg": "我是13", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 1, "offset": 5, "msg": "我是16", "timespan": 1717226677722, "date": "2024-06-01 07:24:37"},
        {"partition": 1, "offset": 6, "msg": "我是19", "timespan": 1717226677722, "date": "2024-06-01 07:24:37"}
      ]
    ]

1.3.3. View the data in partition 2

    [
      [
        {"partition": 2, "offset": 0, "msg": "我是2", "timespan": 1717226677720, "date": "2024-06-01 07:24:37"},
        {"partition": 2, "offset": 1, "msg": "我是5", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 2, "offset": 2, "msg": "我是8", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 2, "offset": 3, "msg": "我是11", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 2, "offset": 4, "msg": "我是14", "timespan": 1717226677721, "date": "2024-06-01 07:24:37"},
        {"partition": 2, "offset": 5, "msg": "我是17", "timespan": 1717226677722, "date": "2024-06-01 07:24:37"}
      ]
    ]
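The `timespan` values in these dumps look like epoch timestamps in milliseconds, and read in UTC they match the accompanying `date` fields. That the tool renders dates in UTC is an inference from this one data set, not something the article states. The class `TimespanSketch` and its method `format` below are hypothetical names for a small check of that reading.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

public class TimespanSketch {

    // Assumption: the "date" field is the "timespan" value rendered in UTC
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss").withZone(ZoneOffset.UTC);

    /** Formats an epoch-millisecond timestamp the way the "date" field is written. */
    public static String format(long epochMillis) {
        return FORMAT.format(Instant.ofEpochMilli(epochMillis));
    }

    public static void main(String[] args) {
        // First record of partition 0
        System.out.println(format(1717226677707L)); // prints 2024-06-01 07:24:37
    }
}
```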
